Test effectiveness

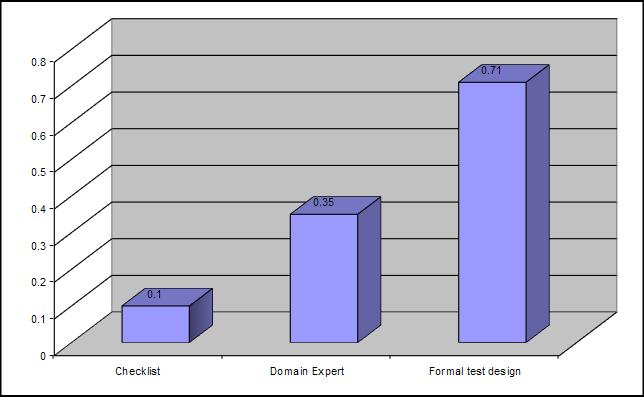

Boris Beizer stated that black box testing is approximately 35 to 65% effective. I have also read that Gerald Weinberg conducted studies at IBM with similar results. I recently spoke at the SQS conference in London, and in the opening presentation Bob Bartlett stated that SQS studies indicated that formal test design was almost twice as effective in defect detection per test case as expert (exploratory) testing. He also put into perspective the infamous "death by checklist" syndrome.

About 4 years ago I began a 3-year study at Microsoft to verify assertions about the effectiveness of black box testing. I used Weinberg’s famous Triangle paradigm for the assessment. Given a brief functional requirement, participants in the case study were asked to define tests to validate a program written in C# against the stated requirements. The basic requirements are outlined in Glenford Myers’s book The Art of Software Testing as “A program reads three (3) integer values. The three values are interpreted as representing the lengths of the sides of a triangle. The program displays a message that states whether the triangle is scalene, isosceles, or equilateral.”

Based on the implementation in C# (pseudo code below), and assuming that all inputs are valid integer values, we determined that the minimum number of tests needed to validate a program against this functional requirement is 11, as outlined below.

    if (a + b <= c) or (b + c <= a) or (a + c <= b)
        then invalid triangle
    else if (a equals b) and (b equals c)
        then equilateral triangle
    else if (a not equal b) and (b not equal c) and (a not equal c)
        then scalene triangle
    else isosceles triangle

The minimum tests for conditional control flow and data flow (again assuming valid integer inputs) are:

· 6 tests to validate the invalid triangle path

a + b < c

a + b = c

etc.

· 1 test for the equilateral path

· 1 test for scalene

· 3 tests for isosceles (which actually verify the false outcomes in the sub-expressions of the scalene predicate statement)
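The 11 tests listed above can be sketched as a small table-driven check. This is only an illustration under stated assumptions: it uses Python as a stand-in for the C# program in the study, the function name `classify_triangle` and the table `MINIMUM_TESTS` are hypothetical, and the input values are ones I picked to hit each condition, not the values used in the case study. In particular, the four invalid cases beyond `a + b < c` and `a + b = c` are my reading of the "etc." above, applying the same two conditions to the other two sub-expressions.

```python
def classify_triangle(a: int, b: int, c: int) -> str:
    """Mirror the pseudocode: check validity first, then the triangle type."""
    if a + b <= c or b + c <= a or a + c <= b:
        return "invalid"
    if a == b and b == c:
        return "equilateral"
    if a != b and b != c and a != c:
        return "scalene"
    return "isosceles"


# Each row is (a, b, c, expected). Values are illustrative assumptions.
MINIMUM_TESTS = [
    # 6 tests for the invalid path: "<" and "=" for each sub-expression
    (1, 2, 4, "invalid"),      # a + b < c
    (1, 2, 3, "invalid"),      # a + b = c
    (4, 1, 2, "invalid"),      # b + c < a
    (3, 1, 2, "invalid"),      # b + c = a
    (1, 4, 2, "invalid"),      # a + c < b
    (1, 3, 2, "invalid"),      # a + c = b
    # 1 test for the equilateral path
    (3, 3, 3, "equilateral"),
    # 1 test for the scalene path
    (3, 4, 5, "scalene"),
    # 3 tests for isosceles, one per false sub-expression of the scalene check
    (3, 3, 4, "isosceles"),    # a equals b
    (4, 3, 3, "isosceles"),    # b equals c
    (3, 4, 3, "isosceles"),    # a equals c
]


def failing_tests():
    """Return the rows whose actual classification differs from the expected one."""
    return [
        (a, b, c, expected, classify_triangle(a, b, c))
        for a, b, c, expected in MINIMUM_TESTS
        if classify_triangle(a, b, c) != expected
    ]


if __name__ == "__main__":
    print(f"{len(MINIMUM_TESTS)} tests defined, {len(failing_tests())} failing")
```

Each invalid row is chosen so that only one sub-expression of the first predicate is true, which is what lets a single test isolate a single condition.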

I collected data for 3 years from more than 500 participants, ranging from less than 6 months to more than 5 years of testing experience, but none having formal training in testing techniques or methodologies. Interestingly enough, the data changed very little from the first few groups. The empirical results of this case study show that the average effectiveness of tests in the most critical area of the program was only 36%. This literally means that of the minimum 11 tests for control and data flow coverage of this section of code, the average tester defined only 4 tests (1 test for invalid, 1 for equilateral, 1 for scalene, and 1 for isosceles). During this time period Microsoft was also transitioning to hiring testers with greater technical competence and coding skills. Perhaps not surprising to most, the testers with a coding background increased the test effectiveness ratio by 50%.
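The 36% figure is simply the 4 tests the average tester defined divided by the 11-test minimum. The short sketch below works through that arithmetic. The names are hypothetical, and the last step rests on an assumption: the text does not say whether the 50% increase for testers with a coding background is relative or absolute, so the code shows only the relative reading.

```python
MINIMUM_TESTS_REQUIRED = 11   # minimum for control and data flow coverage
AVERAGE_TESTS_DEFINED = 4     # invalid, equilateral, scalene, isosceles


def effectiveness(defined: int, required: int) -> float:
    """Fraction of the minimum test set that a tester actually defined."""
    return defined / required


baseline = effectiveness(AVERAGE_TESTS_DEFINED, MINIMUM_TESTS_REQUIRED)

# Assumption: "increased by 50%" read as a relative increase over the baseline.
with_coding_background = baseline * 1.5

if __name__ == "__main__":
    print(f"baseline effectiveness: {baseline:.0%}")
    print(f"relative 50% increase:  {with_coding_background:.0%}")
```

Under that reading, testers with a coding background would define about 6 of the 11 tests on average.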

This is just a small snapshot of the overall case study, but the overall conclusions were that untrained testers using only an exploratory black box approach to testing are less effective, and that non-technical testers are 50% more likely to perform redundant or ineffective tests than testers with greater technical competence (not necessarily coding skills, but a greater understanding of the entire system under test).

Some managers at Microsoft scoffed at these results. One said that if he asked one of his non-technical testers to test the design of a new coffee cup, that person would probably do better than someone with a computer science background. OK…he probably has a point. But I would argue that Microsoft and many other software companies are in the business of producing technological solutions for customers, and not in the business of making mugs (unless perhaps Microsoft’s ceramic team is in building 7 and that is a new LOB I don’t know about). The bottom line is that formal training in established, time-proven functional and structural techniques can increase the effectiveness of testers and reduce potential risk in a software project.