What's New in Audio for Windows 10

In my previous posts I gave a detailed overview of the Windows 8.1 audio stack. If you want to read more about the Windows 8.1 audio stack, please look at the following posts:

- Overview of the Windows 8.1 audio stack

- Audio categories

- H/W offload

- Audio processing (audio effects)

- Background audio playback, volume policies and routing

In this post I will explain the differences between the Windows 8.1 audio stack and the Windows 10 audio stack. During the development of Windows 10, I spent a considerable amount of time working with the MSDN doc writers and the audio developers, in order to update the MSDN documentation for audio. So, in order to avoid duplicating information, I will try in this post to provide a quick summary of each new feature and then point you to the appropriate MSDN article, which will go into more depth.

Also, if you prefer watching videos to reading blog posts and documentation, then you're in luck. I worked with Andy Wen from our PC Ecosystem team and we presented an overview of the changes at WinHEC China 2015. You can find our presentation "Enabling Great Audio Experiences in Windows 10" (the video is translated in real time from Mandarin to English, and you can also download the English version of the slides from that page).

These are the areas where the audio stack changed in Windows 10:

- Low latency Audio

- AudioGraph: a new API for Media Creation scenarios

- New signal processing modes and audio categories

- Offload APOs: APOs can be loaded on top of the offload pin

- Cortana Voice Activation

- New mechanism for Background Audio Playback

- New audio codecs: ALAC, FLAC, AMR-NB

- Windows Universal Drivers for Audio: The same driver works in both Windows 10 Desktop SKU and Windows 10 Mobile SKU

- Resource management for audio drivers

- PNP Rebalance support for audio drivers

Looking at the above list of all changes in the Windows 10 audio stack, I just realized that I owned 80% of those features (I'm including a couple that I co-owned)! Not bad :)

In the following sections I will provide a quick overview of each change and point you to the corresponding MSDN links for additional information.

1. Low latency audio

Audio latency is the delay between the time that a sound is created and the time that it is heard. Low audio latency is very important for several key scenarios, such as the following:

- Pro Audio

- Music Creation

- Communications

- Virtual Reality

- Games

The total latency of a device is the sum of the latencies of the following components:

- OS

- APO

- Audio driver

- H/W

In Windows 10 we reduced the latency of the OS to a bare minimum. Without any driver changes, all applications in Windows 10 will have 4.5-16ms lower latency. In addition, if the driver has been updated to take advantage of the new low latency DDIs that use small buffers to process audio data, then the latency can be reduced even more. In fact, as is shown in the following diagram, if a driver supports 3ms audio buffers, then the round-trip latency is ~10ms. And today there are systems in the market that support <3ms buffers :)

For a deeper dive into the low latency optimizations in the Windows stack, as well as the new low latency mechanisms that we provide for applications and drivers, please look at the Windows 10 low latency whitepaper. You can also look at our WASAPI low latency sample in GitHub.
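To make the buffer sizes above a bit more concrete, here is a small arithmetic sketch. It is not part of any Windows API. The 48 kHz sample rate and the per-component numbers in the usage example are assumptions chosen only for illustration; the sketch simply converts a buffer duration to a frame count and adds up the latency components listed earlier (OS, APO, audio driver, H/W).

```python
def frames_per_buffer(sample_rate_hz: int, buffer_ms: float) -> int:
    """Number of audio frames that fit in a buffer of the given duration."""
    return round(sample_rate_hz * buffer_ms / 1000)


def buffer_duration_ms(sample_rate_hz: int, frames: int) -> float:
    """Duration in milliseconds of a buffer holding the given number of frames."""
    return frames * 1000 / sample_rate_hz


def total_latency_ms(os_ms: float, apo_ms: float, driver_ms: float, hw_ms: float) -> float:
    """Total latency is the sum of the OS, APO, driver and hardware latencies."""
    return os_ms + apo_ms + driver_ms + hw_ms


if __name__ == "__main__":
    # Assumed sample rate of 48 kHz; a 3 ms buffer holds 144 frames.
    print(frames_per_buffer(48000, 3))
    # Hypothetical per-component latencies, not measured values.
    print(total_latency_ms(os_ms=1.0, apo_ms=2.0, driver_ms=3.0, hw_ms=4.0))
```

At an assumed 48 kHz, a 3ms buffer is 144 frames, whereas a 10ms buffer is 480 frames, which gives a feel for how much less data a low latency driver handles per pass.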

2. AudioGraph

AudioGraph is a very simple API that removes much of the overhead in Media Creation scenarios. It simplifies implementation by using the concept of an "audio graph".

An audio graph is a set of interconnected audio nodes through which audio data flows. Audio input nodes supply audio data to the graph from audio input devices, audio files, or from custom code. Audio output nodes are the destination for audio processed by the graph. Audio can be routed out of the graph to audio output devices, audio files, or custom code. The last type of node is a submix node which takes audio from one or more nodes and combines them into a single output that can be routed to other nodes in the graph. After all of the nodes have been created and the connections between them set up, you simply start the audio graph and the audio data flows from the input nodes, through any submix nodes, to the output nodes. This model makes scenarios like recording from a device's microphone to an audio file, playing audio from a file to a device's speaker, or mixing audio from multiple sources quick and easy to implement.
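The node model described above can be sketched with a toy example. To be clear, this is not the AudioGraph API (which is a Windows Runtime API); the class and method names below are made up for illustration, and audio data is represented as plain lists of integers. It only shows the idea of input nodes feeding a submix node, which in turn feeds an output node.

```python
from typing import List


class InputNode:
    """Toy input node: supplies a fixed block of samples to the graph."""

    def __init__(self, samples: List[int]):
        self.samples = list(samples)

    def render(self) -> List[int]:
        return list(self.samples)


class MixingNode:
    """Toy node that sums the audio of every node connected to it."""

    def __init__(self):
        self.sources = []

    def connect_from(self, node) -> None:
        self.sources.append(node)

    def render(self) -> List[int]:
        blocks = [source.render() for source in self.sources]
        if not blocks:
            return []
        length = max(len(block) for block in blocks)
        # Sample-by-sample sum; shorter blocks contribute silence at the end.
        return [sum(b[i] for b in blocks if i < len(b)) for i in range(length)]


class SubmixNode(MixingNode):
    """Combines one or more nodes into a single output for other nodes."""


class OutputNode(MixingNode):
    """Destination of the graph (a device, file, or custom code in the real API)."""


if __name__ == "__main__":
    microphone = InputNode([1, 2, 3])   # hypothetical captured samples
    music_file = InputNode([10, 20, 30])  # hypothetical decoded samples
    submix = SubmixNode()
    submix.connect_from(microphone)
    submix.connect_from(music_file)
    speaker = OutputNode()
    speaker.connect_from(submix)
    print(speaker.render())
```

The real API drives the graph for you once it is started; the pull-style `render()` call here is just the simplest way to show data flowing from inputs, through a submix, to an output.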

A good starting point to learn more is the MSDN page for AudioGraph. If you want to start experimenting with the AudioGraph API, you can use our AudioGraph sample in GitHub.

3. New audio categories and signal processing modes

In my previous posts, I explained the concepts of audio categories and signal processing modes.

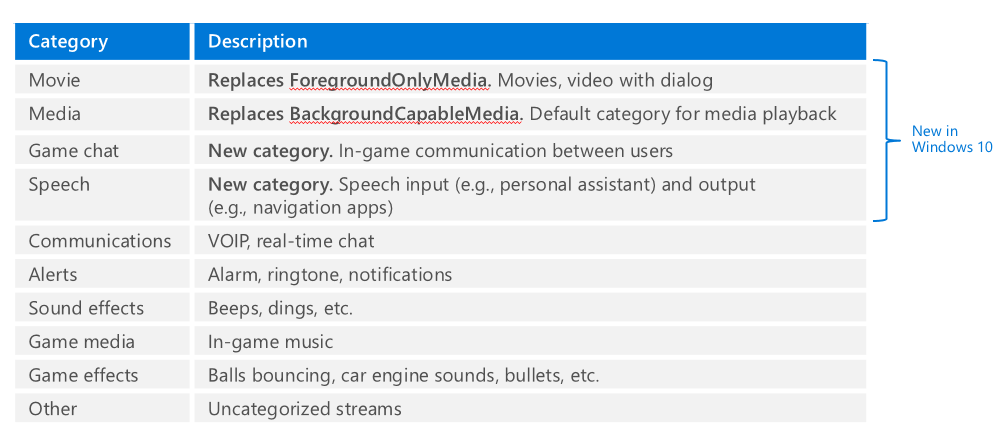

The following matrix shows the full list of audio categories in Windows 10:

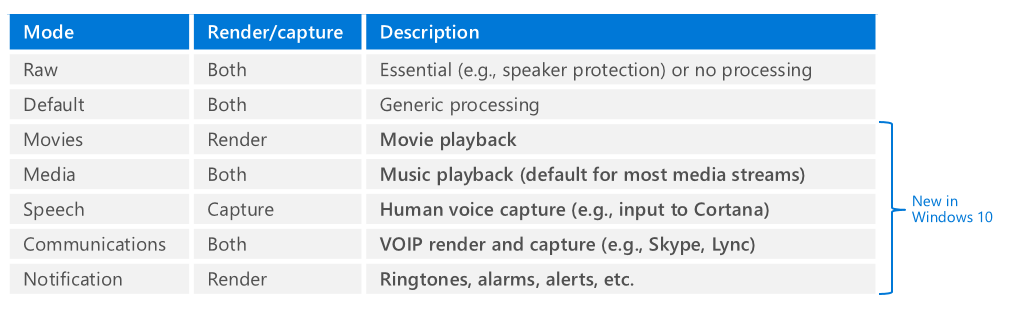

The following matrix shows the full list of audio processing modes in Windows 10:

Let me clarify a few things between audio categories and audio signal processing modes:

- Applications tag each of their streams with one of the 10 audio categories that are provided by Windows

- Each audio category is mapped to a specific signal processing mode.

- Applications do not have the option to change the mapping between an audio category and a signal processing mode.

- Applications have no awareness of the concept of a "signal processing mode". They cannot find out what mode is used for each of their streams.

- Each signal processing mode needs to include audio processing (i.e. APOs) that optimize the audio signal for the scenarios described above.

- However, not all modes may be available on a particular system

- Windows only mandates that the driver supports the raw and/or default signal processing modes

- Drivers define which signal processing modes they support (i.e. what types of APOs are installed as part of the driver) and inform Windows accordingly

- If a particular mode is not supported by the driver, then Windows will use the next best matching mode

- It is recommended that IHVs/OEMs utilize the new modes to add audio effects that optimize the audio signal to provide the best user experience
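The rules in the list above (fixed category-to-mode mapping, driver-declared modes, fallback to the next best match) can be sketched roughly as follows. This is an illustrative model only, not how Windows implements it: the mapping entries shown are a small assumed subset, and the fallback order (requested mode, then default, then raw) is my simplification of "next best matching mode". Please use the MSDN pages for the authoritative tables.

```python
# Illustrative subset of a category -> mode mapping (assumed, not authoritative).
CATEGORY_TO_MODE = {
    "Movie": "movie",
    "Media": "media",
    "Communications": "communications",
    "Speech": "speech",
    "Other": "default",
}


def resolve_mode(category: str, supported_modes: set) -> str:
    """Pick the mode for a stream, given the modes the driver says it supports.

    Applications only choose the category; the mapping itself is fixed.
    Assumed fallback order: requested mode, then "default", then "raw".
    """
    requested = CATEGORY_TO_MODE.get(category, "default")
    for candidate in (requested, "default", "raw"):
        if candidate in supported_modes:
            return candidate
    # A driver is expected to support raw and/or default, so this is an error.
    raise ValueError("driver supports neither the default nor the raw mode")


if __name__ == "__main__":
    print(resolve_mode("Movie", {"default", "raw", "movie"}))
    print(resolve_mode("Movie", {"default", "raw"}))
    print(resolve_mode("Speech", {"raw"}))
```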

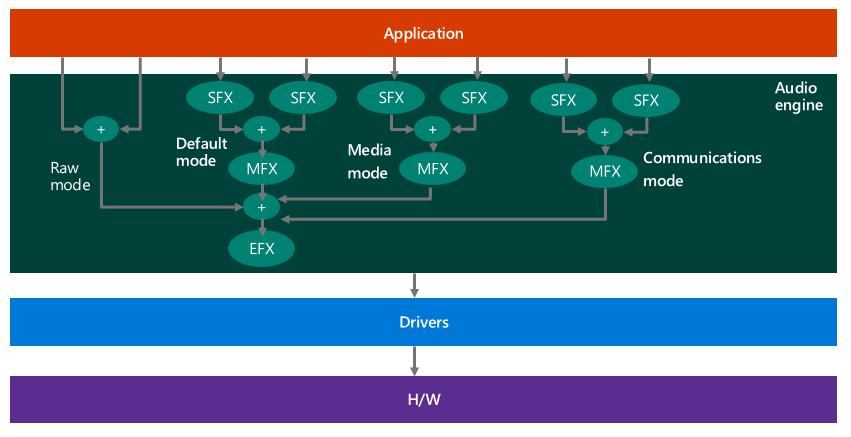

The following diagram shows a system that supports multiple modes:

For additional information about audio categories and audio signal processing modes, please visit the MSDN page for "Audio Processing Object Architecture" and "Audio Signal Processing Modes".

4. Offload APOs

In my post about Windows 8.1 H/W offload, I wrote that Windows 8.1 does not support loading APOs on top of the offload pin. In Windows 10, however, we added support for this. This change enables some new scenarios, e.g.:

- In Windows 8.1, if the audio effects cannot be implemented in H/W (e.g. because the DSP is not powerful enough), then there was no practical way for the system to support offload. In Windows 10, these systems would implement the audio effects in S/W, in the form of APOs on top of the offload pin.

- In Windows 10, we can split the audio processing between S/W and H/W. Also, the processing can change dynamically (i.e. some/all processing might be moved from the S/W APO to the DSP when there are enough H/W resources and then moved back to the S/W APO when the load on the DSP increases).

Here is a diagram showing both the offload pin and the host pin:

For more information about offload APOs, please visit the MSDN page titled "Implementing Hardware Offloaded APO Effects".

5. Cortana Voice Activation

Cortana, the personal assistant technology introduced on Windows Phone 8.1, is now supported on Windows 10 devices. Voice activation is a feature that enables users to invoke a speech recognition engine from various device power states by saying a specific phrase - "Hey Cortana". The "Hey Cortana" Voice Activation (VA) feature allows users to quickly engage an experience (e.g., Cortana) outside of their active context (i.e., what is currently on screen) by using their voice.

You can find more information about this feature at the MSDN page for Voice Activation.

6. Background Audio Playback

In my previous post about Windows 8.1 background audio playback, I explained that the Desktop SKU and the Mobile SKU had different mechanisms for apps to render audio from the background. In Windows 10, we have one mechanism that works for both SKUs, and it is similar to the Windows 8.1 Mobile SKU mechanism.

More specifically, a Windows 10 application that wants to play audio from the background needs to consist of two processes. The first process is the main app, which contains the app UI and client logic, running in the foreground. The second process is the background playback task, which implements IBackgroundTask like all UWP app background tasks. The background task contains the audio playback logic and background services. The background task communicates with the system through the System Media Transport Controls.

The following diagram is an overview of how the system is designed:

For more information, please visit the MSDN page for Background Audio.

7. Audio codecs

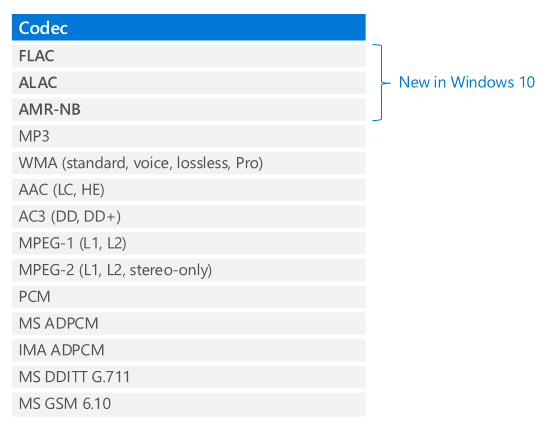

In Windows 10 we added native support for FLAC, ALAC and AMR-NB codecs.

The list of supported audio codecs is:

For more information, please visit the MSDN page for "Supported Codecs".

8. Windows Universal Drivers for Audio

Windows 10 supports one driver model that works for PCs and 2-in-1s as well as for phones and small-screen tablets. This means that IHVs can develop their driver on one platform and that driver works on all devices (desktops, laptops, tablets, phones). The result is reduced development time and cost.

In order to develop Universal Audio Drivers, you should take advantage of the following tools:

- Visual Studio 2015: New driver setting to set “Target Platform” equal to “Universal”

- APIValidator: WDK tool that checks whether a driver is Universal

- Audio samples in GitHub: Samples sysvad and SwapAPO have been converted to Universal Drivers

For more information, please visit the MSDN page for "Universal Windows Drivers for Audio".

9. Resource Management for Audio Drivers

One challenge with creating a good audio experience on a low-cost mobile device is that some devices have various concurrency constraints. For example, it is possible that the device can only play up to 6 audio streams concurrently and supports only 2 offload streams. When there is an active phone call on a mobile device, it is possible that the device supports only 2 audio streams. When the device is capturing audio, it might only be able to play up to 4 audio streams.

Windows 10 includes a mechanism to express concurrency constraints to ensure that high-priority audio streams and cellular phone calls will be able to play. If the system does not have enough resources, then low-priority streams are terminated. This mechanism is only available on phones and tablets, not on desktops or laptops.
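As a very rough illustration of the idea (and only the idea), the toy sketch below keeps the highest-priority streams when a concurrency limit is reached and terminates the rest. The function name, the numeric priorities and the single `max_streams` limit are all hypothetical; the actual Windows mechanism is expressed by the audio driver and is described in the MSDN page referenced below.

```python
from typing import List, Tuple

# (name, priority): a higher number means a higher priority (assumed convention).
Stream = Tuple[str, int]


def admit_stream(active: List[Stream], new_stream: Stream,
                 max_streams: int) -> Tuple[List[Stream], List[Stream]]:
    """Return (streams that keep playing, streams that are terminated).

    All streams, including the new one, are ranked by priority. Streams of
    equal priority keep their existing order, so older streams win ties.
    """
    ranked = sorted(active + [new_stream], key=lambda s: s[1], reverse=True)
    return ranked[:max_streams], ranked[max_streams:]


if __name__ == "__main__":
    # Hypothetical scenario: only 2 streams are allowed during a phone call.
    playing = [("music", 1), ("game", 1)]
    kept, terminated = admit_stream(playing, ("phone call", 10), max_streams=2)
    print(kept)
    print(terminated)
```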

For more information, please visit the MSDN page for "Audio Hardware Resource Management".

10. PNP Rebalance for Audio Drivers

PNP rebalancing is used in certain PCI scenarios where memory resources need to be reallocated. In that case, some drivers are unloaded, and then reloaded at different memory locations, in order to create free contiguous memory space.

Rebalance can be triggered in two main scenarios:

1. PCI hotplug: A user plugs in a device and the PCI bus does not have enough resources to load the driver for the new device. Some examples of devices that fall into this category include Thunderbolt, USB-C and NVMe storage. In this scenario, memory resources need to be rearranged and consolidated (rebalanced) to support the additional devices being added.

2. PCI resizable BARs: After a driver for a device is successfully loaded in memory, it requests additional resources. Some examples of such devices include high-end graphics cards and storage devices.

For more information, please visit the MSDN page for "PNP rebalance for Audio drivers".

MSDN References: