Synchronous vs Asynchronous Transaction Commit Trading for Performances with Delayed Transaction Durability

Let me start this new blog post asking you a question: Which is your general approach when a new version of SQL Server is announced? Well, I normally look at (few) white-papers and overview videos on new features and functionalities, but at the very end, I start looking at the BooksOnLine documentation, items by item, entry by entry, to discover hidden gems in the new version:

Books Online for SQL Server 2014

http://msdn.microsoft.com/en-us/library/ms130214(v=sql.120).aspx

I made this also this time for SQL Server 2014, and my patience was successful even in this case, as always happened since SQL Server 6.5 many years ago, and finally discovered something new that, at least for OLTP RBMS purists like me, it’s really astonishing, that is “Delayed Transaction Durability”. If you try to make an educated guess, you will probably realize what is it and YES…. I can confirm you that it is the possibility to relax/remove/bypass one of the most ancient rule, principle or dogma, if you like the term, since SQL Server first version has born, that is synchronous commit of transactions.

Just to set a common start point for everyone, when an application commits a transaction on SQL Server, transaction log records containing the data modifications must be hardened on disk (stable media) before SQL Server can return the acknowledgement to the application that the transaction is committed. Synchronous commit to stable media will ensure that, in case of SQL Server crash/failure, every transaction confirmed back to the user/application before the crash, will be “replayed” in the database and then survived to the unexpected event. The key word in the period above is “synchronous”; remember it while reading the remaining part of this blog post.

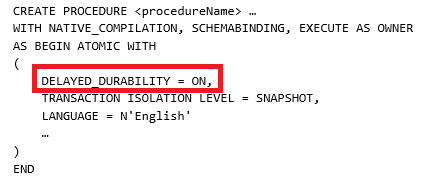

Ok, now you have a general idea, then let me show you an example of a piece of code, in this case a stored procedure, that will not cause synchronous transaction commit:

Forget for a moment other statements and the fact that this is a Hekaton natively compiled stored procedure: what will happen here is that the log records for the transaction are written to the log buffers in memory, but they may not have been written to disk when the commit reports success to the client. This essentially means two things:

- Since flushing to disk is now asynchronous, you will be not delayed by transaction log disk I/O latency, then resulting in higher throughput;

- Since flushing to disk is now asynchronous, you may experience data loss in case of SQL Server crash if happens after the commit, but before log records being hardened (flushed) to disk;

If you are a transactional RDBMS “purist” like me, you will probably consider, at least on a first glance, this feature totally unacceptable due to the data loss possibility: to be honest, after spending 15 years working on the biggest SQL Server OLTP systems in Italy, I changed my point of view, after working for a while on Azure and Big Data hybrid scenarios. The right way to consider this feature is not “one size fits all”, but using “Delayed Transaction Durability” only in appropriate scenarios where:

- Data loss can be tolerated or recovered

- There are several scenarios where data loss can happen without compromising business requirements, or missing data can be controlled (and recovered) at the application level and easily recovered. Examples include long/big batches where the application logic permits to restart/repair the processing without any data loss, or big data sets that can tolerate some records missing over billions, due to the qualitative nature of data analysis.

- Transaction log I/O is a huge bottleneck

- Given a fixed monetary budget for your SQL Server hardware, you maybe not able to buy more expense SSD drives or you have to use SQL Server in the Cloud where scale-up technical possibilities are limited. “Delayed Transaction Durability” offers the possibility to pump up your transaction log performances with no further expense, provided that minimal data loss is acceptable.

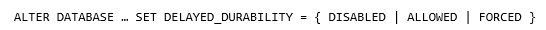

Ok, now let me go over the implementation details of “Delayed Transaction Durability” (DTD) in SQL Server 2014. First of all, despite the stored procedure I used as an example above, is not limited to Hekaton in-memory engine, a new database wide setting, called “DELAYED_DURABILITY”, has been introduced to governs this behavior:

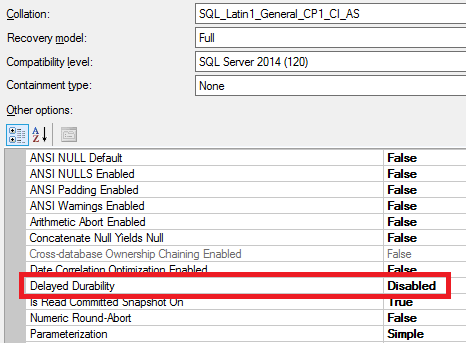

You can check if DTD is enabled for your database using the query below or the “Options” tab of database property page in SQL Server Management Studio (see below):

Select [name], [database_id], [delayed_durability], [delayed_durability_desc]

From sys.databases

Where (delayed_durability_desc = 'ALLOWED' OR delayed_durability_desc = 'FORCED' )

The default value is “DISABLED” (fully durable transactions) to ensure compatibility with existing code and applications, “FORCED” is obvious as well since every transaction in the current database will obey and use DTD without any code modification, finally “ALLOWED” is the real Interesting option: with this enabled, you have granular control over which code blocks will be permitted to use this feature. Based on my early experience with some partners using this feature, only a small subset of tables (and code!) will have benefits by using DTD, then it

makes perfect sense to decide where to use it. Essentially, you have two ways to decide where to use DTD:

- Hekaton Natively Compiled Stored Procedures

- You can include the option to enable “DELAYED_DURABILITY” directly into the ATOMIC part of the CREATE PROCEDURE syntax as shown in the example below:

Create procedure proc_test2 @c1 int, @c2 varchar(100)

With native_compilation, schemabinding, execute as owner

As

Begin atomic with (transaction isolation level=snapshot, language='english', delayed_durability = on)

Insert into dbo.tblTest1 values (@c1, @c2)

End

-

- Please note that you cannot use “DELAYED_DURABILITY” with regular stored procedures, only Hekaton natively compiled ones are currently supported.

- Explicit COMMIT TSQL statement

- If you previously set “DELAYED_DURABILITY” to value “ALLOWED” (or “FORCED”), you can use the following kind of COMMIT statement:

Begin transaction

Insert into dbo.tblTest1 values (2, 'Wello world!’)

Commit transaction with (delayed_durability = on)

Now that you have a clear (hopefully) understanding of this new feature and how is implemented in SQL Server 2014, you should ask yourself how is possible to control transaction log flushing to ensure that everything is hardened on disk: the answer is inside a new extended system stored procedure called “sp_flush_log” that will trigger all log buffers to be secured on stable media. Online documentation does not contain any interesting detail, except for this sentence:

sys.sp_flush_log (Transact-SQL)

http://msdn.microsoft.com/en-us/library/dn467645(v=sql.120).aspx

If you choose to use delayed transaction durability because of the performance benefits, but you also want to have a guaranteed limit on the amount of data that is lost on server crash or failover, then execute sys.sp_flush_log on a regular schedule. For example, if you want to make sure you do not lose more than x seconds worth of data, you would execute sp_flush_log every x seconds.

I made some tests to see how much delay delayed transaction could introduce, but generally it’s very fast to harden log records on disk, even with a busy system, but if you want to be 100% sure about no data loss you have to obey to the above official recommendation. During my tests, I also discovered a couple of interesting facts:

- If you ran any fully durable transaction with ID = (X), then all transaction log records owned by transactions with ID < (X) even with delayed durability, will be hardened to disk since the log chain must be serially written with no gaps.

- If you have a very busy system, even if you use delayed durability transactions then asynchronous commit, your log records maybe immediately flushed to disk if memory buffers used by transaction log will become full.

Finally, a consideration related to interoperability with other SQL Server features. I did not test on my own, but based on the BooksOnLine documentation I reviewed, the following considerations apply:

- Crash Recovery

- Consistency is guaranteed, but some changes from delayed durable transactions that have committed may be lost.

- Failover Clustering

- Some delayed durable transaction writes might be lost during failovers.

- Log Shipping

- Only transactions that have been made durable are included in the log that is shipped.

- Log Backup

- Only transactions that have been made durable are included in the backup.

- Change Tracking and Change Data Capture (CDC)

- All transactions with change tracking are fully durable. A transaction has the change tracking property if it does any write operations to tables that are enabled for either change tracking or change data capture (CDC).

- Cross-database and DTC

- If a transaction is cross-database or distributed, if is fully durable, regardless of any database or transaction commit setting.

- AlwaysOn Availability Groups and Mirroring

- Delayed durable transactions do not guarantee any durability on either the primary or any of the secondaries. In addition, they do not guarantee any knowledge about the transaction at the secondary. After COMMIT, control is returned to the client before any ACK is received from any synchronous secondary.

- Transaction Replication

- Delayed durable transactions do not guarantee their replication. Transactions are only replicated after they have been made durable.

At the end, I consider this a nice new feature that may be useful in several but specific scenarios, including SQL Server 2014 in Azure IaaS where you may easily hit transaction log performance bottlenecks; be also aware that several open-source database engines, including MySQL, include this feature as a customizable behavior to accommodate similar scenarios.

That’s all folks… Best regards.