Strict Transport Security

Ivan Ristic’s meticulously researched Bulletproof SSL & TLS book spurred me to spend some time thinking about the HTTP Strict Transport Security (HSTS) feature under development by the Internet Explorer team and already available in other major browsers. HSTS enables a website to opt in to stricter client handling of HTTPS behavior. Specifically:

- All connections to the HSTS-enabled host must take place over HTTPS; http:// URLs for that host are automatically upgraded to https://

- Any errors in the HTTPS certificate or handshake are fatal and cannot be overridden
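As a rough illustration of the first rule, the sketch below rewrites an http:// URL roughly the way RFC 6797 (section 8.3) describes once a host is known to be HSTS-protected: the scheme becomes https, an explicit port of 80 becomes 443, and any other explicit port is preserved. It assumes the caller has already decided that the host matches a stored HSTS rule; the function name upgrade_to_https is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def upgrade_to_https(url: str) -> str:
    """Rewrite an http:// URL to https:// for a host that matched an HSTS rule.

    Port handling follows RFC 6797, section 8.3: an explicit :80 becomes :443,
    any other explicit port is kept, and no port is added if none was given.
    """
    parts = urlsplit(url)  # urlsplit lowercases the scheme
    if parts.scheme != "http":
        return url  # https:// (and other schemes) are left untouched
    netloc = parts.netloc
    if parts.port == 80:
        # Drop the trailing ":80" and substitute the default HTTPS port.
        netloc = netloc.rsplit(":", 1)[0] + ":443"
    return urlunsplit(("https", netloc, parts.path, parts.query, parts.fragment))
```

For example, upgrade_to_https("http://paypal.com") yields "https://paypal.com", so the insecure request from the coffee-shop scenario below never leaves the machine.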

HSTS was standardized in RFC 6797 back in 2012, but the original feature idea dates back to 2008.

While conceptually relatively simple, there are a lot of interesting intricacies around HSTS that we will explore in this post.

Threats Mitigated

HSTS was designed to combat a threat model in which an active network attacker is able to control the connection between a victim browser and the Internet at large. For instance, imagine you pull out your laptop at the coffee shop, innocently type paypal.com in the address bar, and hit Go. Your browser is likely to send this as an insecure request for http://paypal.com, and the attacker can easily intercept it and reply with a fake version of PayPal to collect your credentials.

Similarly, even if you were to type https://paypal.com, the attacker could intercept the request, and, after supplying a fake certificate, pose as the secure site. Your browser will typically show a certificate error page at this point, but too many users ignore such errors and proceed anyway. Researchers have built a variety of hacking tools like sslstrip to automate such attacks.

The stricter handling imposed by HSTS helps combat both of these threats.

Opting-In

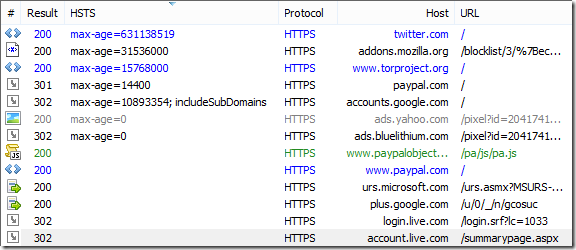

Typically, a site opts in to HSTS by sending a Strict-Transport-Security response header with a max-age value and, optionally, an includeSubDomains directive. The max-age value defines the number of seconds for which the rule should remain in force; longer values are preferable. For instance, PayPal’s current rule specifies a max-age of 14400 seconds, which means that the protection expires every four hours. If you were to load PayPal at home before bed, your HSTS protection would have evaporated by the time you opened your laptop in Starbucks the following morning. In contrast, Twitter’s max-age=631138519 rule provides an incredible 20 years of protection, and addons.mozilla.org is protected for a reasonable one year (max-age=31536000).
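To make the header format concrete, here is a minimal Python sketch of a parser for the Strict-Transport-Security value. It follows the main RFC 6797 rules as I understand them (max-age is required, directive names are case-insensitive, a repeated directive invalidates the header, unknown directives are ignored) but skips corner cases such as quoted-string escapes; HstsPolicy and parse_hsts are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HstsPolicy:
    max_age: int              # seconds for which the rule stays in force
    include_subdomains: bool  # whether the rule also covers child hosts


def parse_hsts(header: str) -> Optional[HstsPolicy]:
    """Parse a Strict-Transport-Security header value (simplified RFC 6797 rules).

    Returns None when the header must be ignored.
    """
    max_age: Optional[int] = None
    include_subdomains = False
    seen = set()
    for directive in header.split(";"):
        directive = directive.strip()
        if not directive:
            continue  # the grammar allows empty directives, e.g. a trailing ";"
        name, _, value = directive.partition("=")
        name = name.strip().lower()        # directive names are case-insensitive
        value = value.strip().strip('"')   # max-age may be sent as a quoted string
        if name in seen:
            return None                    # any repeated directive invalidates the header
        seen.add(name)
        if name == "max-age":
            if not (value.isascii() and value.isdigit()):
                return None
            max_age = int(value)
        elif name == "includesubdomains":
            include_subdomains = True
        # Unrecognized directives (for example "preload") are ignored.
    if max_age is None:
        return None                        # max-age is required
    return HstsPolicy(max_age, include_subdomains)
```

With this parser, parse_hsts("max-age=14400").max_age / 3600 evaluates to 4.0, matching PayPal’s four-hour window.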

The Bootstrap Problem

HSTS and similar specifications suffer from what’s called a “bootstrap problem”: until and unless a user visits the target site over a secure connection, the protection is not activated. To mitigate this problem, some browsers offer a preload list, in which some HSTS rules are distributed directly with the browser or its updates. This ensures that users are protected immediately and neatly resolves this problem, as well as a privacy threat discussed later. The downside of this approach is that it requires the site operator to collaborate with browser vendors instead of simply setting an HTTP header.

One exciting possibility is that some of the many new top-level domains (TLDs) may opt in for their entire tree, such that every single site under that TLD (e.g. anexample1.secure, otherexample2.secure) is automatically protected.

The includeSubDomains Directive

The includeSubDomains directive enables a site to declare that the rule applies to subdomains of the current domain as well; a rule on a.example.com with includeSubDomains set will also apply to b.a.example.com and c.a.example.com, for instance. This directive is important because design flaws in HTTP cookies could lead to cookie injection and/or session fixation attacks (Bulletproof SSL & TLS explains at length) if includeSubDomains is not set.
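The matching rule can be sketched as follows, assuming a simplified in-memory store that maps each host to its includeSubDomains flag and ignores expiry. A rule always covers its own host (RFC 6797 calls this a congruent match), and a rule on a parent host covers a child only when includeSubDomains was set. Preloaded entries, including a hypothetical TLD-wide entry like the .secure example above, could live in the same table; the function and table names are illustrative.

```python
from typing import Dict


def is_known_hsts_host(host: str, store: Dict[str, bool]) -> bool:
    """Return True if an HSTS rule in `store` covers `host`.

    `store` is a hypothetical, simplified rule table mapping a host name to its
    includeSubDomains flag (expiry times are ignored here).
    """
    host = host.lower().rstrip(".")
    if host in store:
        return True  # congruent match: a rule set on exactly this host
    labels = host.split(".")
    for i in range(1, len(labels)):
        parent = ".".join(labels[i:])
        if store.get(parent, False):
            return True  # superdomain match: a parent rule with includeSubDomains
    return False


rules = {
    "a.example.com": True,   # set with includeSubDomains
    "example.net": False,    # set without includeSubDomains
    "secure": True,          # hypothetical TLD-wide preload entry
}
```

Here b.a.example.com and anexample1.secure are covered, while www.example.net is not, because its parent’s rule lacks includeSubDomains.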

The crux of the threat is that browsers can’t indicate where cookies originated when they are sent back to the server, and a child domain may set a cookie on its parent. So, even if you’re interacting with https://legit.example.com, an active network attacker could create a fake http://attacker.legit.example.com and use it to set a cookie that would be sent to the secure site. The secure site would not have a trivial way to detect the phony cookie.
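The sketch below is a simplification of the RFC 6265 cookie rules (public-suffix checks, host-only cookies, and the Secure attribute are omitted) that shows why the secure site cannot tell the difference: the attacker’s insecure child host is allowed to set a cookie scoped to its parent, and the Cookie header later sent to https://legit.example.com carries only name=value pairs. The jar layout and function names are hypothetical.

```python
from typing import List, Tuple

# Hypothetical cookie jar entry: (name, value, domain the cookie is scoped to).
Cookie = Tuple[str, str, str]


def domain_match(host: str, domain: str) -> bool:
    """Simplified RFC 6265 domain-match (IP-address hosts not handled)."""
    host = host.lower()
    domain = domain.lower().lstrip(".")  # a leading dot in Domain= is ignored
    return host == domain or host.endswith("." + domain)


def set_cookie(jar: List[Cookie], setting_host: str, name: str, value: str, domain: str) -> bool:
    """Store a cookie with a Domain attribute if the setting host may scope it there.

    For a cookie without the Secure attribute, whether the setting page used
    http or https plays no role in this decision.
    """
    if not domain_match(setting_host, domain):
        return False
    jar.append((name, value, domain.lower().lstrip(".")))
    return True


def cookie_header(jar: List[Cookie], request_host: str) -> str:
    """Build the Cookie header: only name=value pairs, with no record of who set them."""
    return "; ".join(f"{n}={v}" for n, v, d in jar if domain_match(request_host, d))


jar: List[Cookie] = []
# The attacker's fake insecure child sets a cookie on its parent domain...
set_cookie(jar, "attacker.legit.example.com", "session", "attacker-chosen", "legit.example.com")
# ...and the real site receives it indistinguishably from its own cookies.
print(cookie_header(jar, "legit.example.com"))  # session=attacker-chosen
```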

By setting the includeSubDomains directive, a site can protect itself from an attacker-created insecure child, because any child domain would be required to present a valid certificate.

Aside: One proposal I liked for combating the security limitations of cookies was nicknamed MagicNamedCookies. The idea was that we’d create some new rules for cookies based on their name prefix. For instance, any cookie whose name starts with $$SEC- could only be set or received by an HTTPS site. Similarly, a $$SO- prefixed cookie would only ever be set in a same-origin/first-party context. Unfortunately, to my knowledge, no browser has plans to implement these modest and backwards-compatible improvements.
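Since the proposal has not been implemented, the following is only a hypothetical sketch of how a browser might enforce the two prefixes when a site tries to set a cookie; the function name and its boolean inputs are assumptions for illustration.

```python
def may_set_cookie(name: str, is_https: bool, is_first_party: bool) -> bool:
    """Hypothetical check for the proposed MagicNamedCookies prefixes."""
    if name.startswith("$$SEC-") and not is_https:
        return False  # $$SEC- cookies: only HTTPS sites may set (or receive) them
    if name.startswith("$$SO-") and not is_first_party:
        return False  # $$SO- cookies: only same-origin/first-party contexts may set them
    return True  # unprefixed cookies keep today's rules, hence backwards-compatible
```

Because existing cookie names are unaffected, a site would only see the stricter behavior for cookies it deliberately renames.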

The Parent Problem

Unfortunately, even with the includeSubDomains directive, the unavailability of an includeParent directive means that an active man-in-the-middle attacker can perform a cookie-injection attack against an otherwise HSTS-protected victim domain. Consider the following scenario: the user visits https://sub.example.com and gets an HSTS policy with includeSubDomains set. All subsequent navigations to sub.example.com and its subdomains will be secure.

The attacker causes the victim's browser to navigate to http://example.com. Because the HSTS policy applies only to sub.example.com and its subdomains, the user agent does not upgrade this insecure navigation to HTTPS. The attacker intercepts the insecure request and returns a response whose Set-Cookie header sets a cookie on the entire domain tree (Domain=example.com). All subsequent requests to https://sub.example.com carry the injected cookie, despite the use of HSTS.

Best practice: To mitigate this attack, HSTS-protected websites should perform a background fetch of a resource at the registrable parent domain (https://example.com in this scenario). That resource should carry an HSTS header with an includeSubDomains directive, which will then apply to the entire domain and all of its subdomains.
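On the server side, the parent domain’s part of this best practice can be as small as the following WSGI sketch, assuming it is deployed at https://example.com behind TLS and that the server sets wsgi.url_scheme to "https" for secure requests. The one-year max-age and the empty 204 response are illustrative choices, not requirements. Following RFC 6797, the header is only emitted when the request arrived over HTTPS, since browsers ignore Strict-Transport-Security on insecure responses; apex_hsts_app is a hypothetical name.

```python
from typing import Callable, Iterable, List, Tuple

# Illustrative policy: one year, covering example.com and every subdomain.
HSTS_VALUE = "max-age=31536000; includeSubDomains"


def apex_hsts_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Hypothetical WSGI endpoint on https://example.com whose only job is to
    hand the browser a domain-wide HSTS policy."""
    headers: List[Tuple[str, str]] = [("Cache-Control", "no-store")]
    # RFC 6797: send the header only over a secure transport; UAs ignore it over HTTP.
    if environ.get("wsgi.url_scheme") == "https":
        headers.append(("Strict-Transport-Security", HSTS_VALUE))
    start_response("204 No Content", headers)
    return []  # 204 responses carry no body
```

Pages on sub.example.com would then request this URL in the background, so the browser records the domain-wide rule before an attacker gets a chance to exploit http://example.com.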

Surveying for HSTS

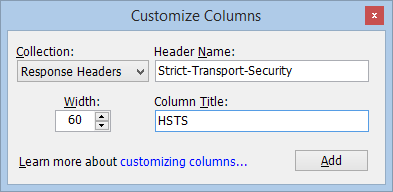

In Fiddler, you can easily add a temporary column to show the server’s Strict-Transport-Security response header, if any. In the black QuickExec box below the Web Sessions list, type:

cols add HSTS @response.Strict-Transport-Security

… and hit Enter. The HSTS column will appear and begin showing the response header’s value.

To permanently add the HSTS column to Fiddler’s UI, right-click the column headers, choose Customize Columns, and add a column that displays the Strict-Transport-Security response header.

In Chrome, you can see more about the HSTS list by visiting chrome://net-internals/#hsts.
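If you would rather survey a list of sites from a script than watch traffic in Fiddler, a minimal Python sketch using only the standard library might look like this. It assumes HEAD requests are acceptable to the target servers, and note that urlopen follows redirects, so the reported value comes from the final response. The function names are hypothetical, and the fetcher is injectable so the logic can be exercised without network access.

```python
import urllib.request
from typing import Callable, Dict, Iterable, Optional


def fetch_hsts_header(url: str, timeout: float = 10.0) -> Optional[str]:
    """HEAD the URL and return its Strict-Transport-Security header, if any."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.headers.get("Strict-Transport-Security")


def survey(urls: Iterable[str],
           fetch: Callable[[str], Optional[str]] = fetch_hsts_header) -> Dict[str, Optional[str]]:
    """Map each URL to its HSTS header value (None if absent, or an error string)."""
    results: Dict[str, Optional[str]] = {}
    for url in urls:
        try:
            results[url] = fetch(url)
        except OSError as exc:  # URLError, HTTPError, timeouts and TLS failures
            results[url] = f"error: {exc}"
    return results
```

Calling survey(["https://www.paypal.com/", "https://twitter.com/"]) would return each site’s header value. Survey https:// URLs only, since browsers ignore the header on plain-HTTP responses.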

Why click-throughs are so dangerous

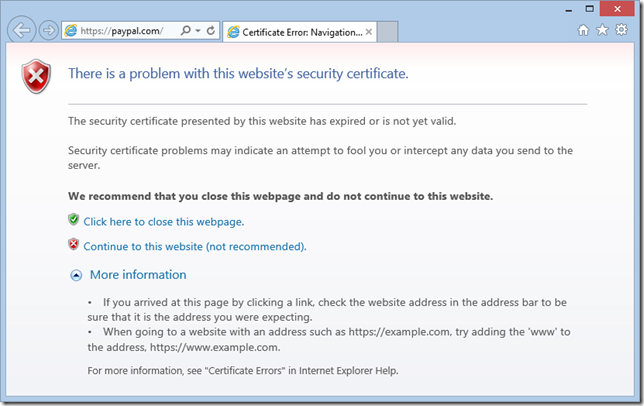

When the IE team replaced the IE6-era “traffic light” HTTPS error prompt with the new blocking full-page errors, we heard a lot of negative feedback, especially from technically savvy users. For instance, many users complained that the new pages don’t offer an easy way to see the certificate offered by the site, not recognizing that the site’s certificate could be entirely full of lies: deceptions that even technical users are ill-qualified to recognize.

Some users argued that throwing a full-page block for an expired certificate was too much: surely a recently expired certificate wasn’t really unsafe, right? A few of those complainers were experts with an advanced understanding of cryptography but a poor understanding of how the certificate ecosystem actually works. Certificates have expiration dates, in part, because the Certificate Authorities that issue them do not want the burden of maintaining revocation information for compromised certificates indefinitely. This is the same reason credit cards originally included an expiration date: keeping track of and distributing the list of stolen cards is expensive. When a compromised certificate expires, the CA is no longer under any obligation to warn clients that the certificate had been revoked. The experts in question were appalled to learn that their “expert understanding” of the technology had actually put them at greater risk of having their data compromised.

Other enthusiasts argued: “Well, okay, you’ve convinced me that a site with a bad certificate shouldn’t be trusted. But I enjoy watching Shark Week and I like looking at scary things. Why not just let me go through to the site but warn me if I try to enter any data in it?”

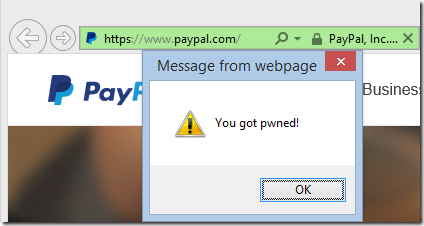

There are two serious problems with this. The first is cache poisoning. If an active man-in-the-middle is attacking your connection to PayPal.com and you click through the blocking page “just to look”, the attacker can send back malicious JavaScript and HTML files with cache expiration dates that are years in the future. The next time you visit PayPal, even on a trusted, uncompromised connection with the shiny green badge in the address bar, the bad guy’s code is still running in your browser.

The second problem is DOM hijacking. Most browsers do not store a “Bad certificate” flag on the Origin, which means that a browser window with a bogus version of the site (where you clicked through a certificate error) can freely use script to manipulate a browser window that loaded the site over a secure, trusted connection. Stated another way, if any of your connections to a site are compromised, in effect they all are.

Common Questions

Q: So, if click-through is so dangerous, why is it allowed for most errors?

A: Because legitimate sites often deploy HTTPS incorrectly, and if browsers blocked access to them, the user would find another browser. This isn’t a theory; it’s a demonstrated fact.

Q: Prior to the introduction of HSTS, did IE ever prevent the user from overriding certificate errors?

A: Yes. There are a few cases in which the user cannot override a certificate error:

- If the certificate is revoked

- If the certificate is deemed insecure (e.g. contains a 512-bit RSA key)

- If the page is in a “pinned site” instance

- If group policy is set to Prevent Ignoring Certificate Errors

HSTS Accidental Denial of Service

One risk with HSTS’s no-override behavior is that a configuration problem on the client can turn what would be an annoyance into a work-stopping issue. For instance, I recently sent my Surface 2 to Microsoft for servicing, and when I got it back, unbeknownst to me, the system clock was set back a year. I was unable to apply any Windows Updates, and it wasn’t until I tried to visit an HTTPS site that I recognized the problem with the clock.

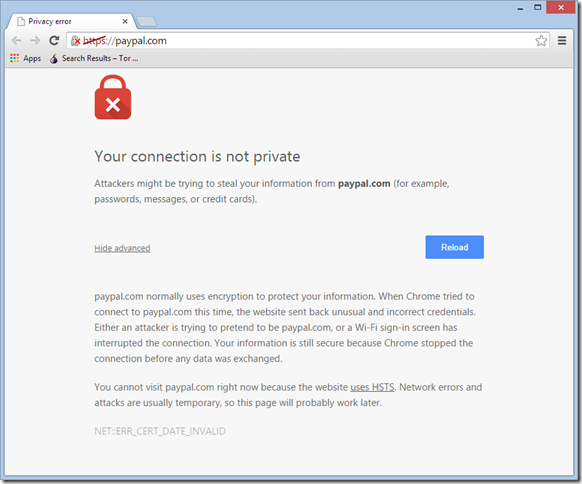

Unfortunately, Internet Explorer 11’s and Chrome 37’s error pages only help you recognize the incorrect system date problem if you know what you’re looking for.

Internet Explorer 11

Chrome 37 Certificate Expired Page

Chrome plans to address this in a future update.

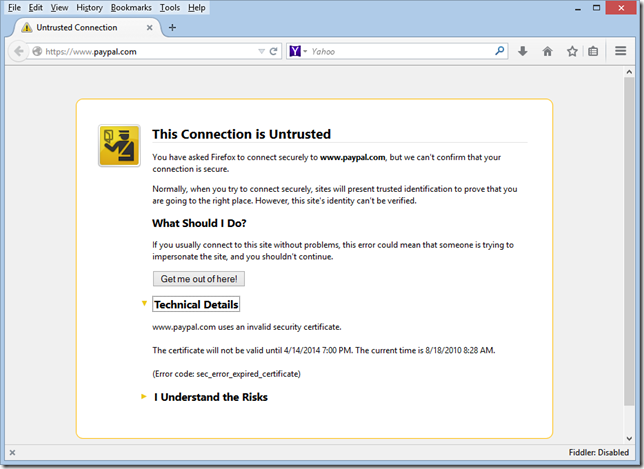

Firefox 32 does a little bit better: it at least shows the system’s reported “Current time” to allow a user to potentially say: “Wait, that’s not right!”

Firefox 32

I’ve filed a bug against IE to suggest that improving this error page should be a priority to help raise websites’ confidence when deploying HSTS.
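One way an error page could do better is to check whether the clock itself is implausible before blaming the certificate. The sketch below is a hypothetical heuristic, not a description of what IE, Chrome, or Firefox shipped: it assumes the browser knows a timestamp that is certainly in the past (here called build_time, for example when the browser binary was built) and uses it to tell a wrong clock apart from a genuinely expired or not-yet-valid certificate.

```python
from datetime import datetime, timezone


def classify_date_error(now: datetime, not_before: datetime, not_after: datetime,
                        build_time: datetime) -> str:
    """Hypothetical heuristic for choosing a certificate-date error message.

    build_time is any timestamp known to be in the past; a system clock that
    reads earlier than it is certainly wrong.
    """
    if now < build_time:
        return "clock_behind"    # blame the clock, and show it to the user
    if now < not_before:
        return "not_yet_valid"   # the clock may be wrong, or the certificate is bad
    if now > not_after:
        return "expired"
    return "ok"
```

In the Surface scenario above, a clock set back a year would fall before build_time and yield "clock_behind" instead of a generic certificate error.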

Beyond the incorrect system date problem, another problem arises with captive portals, like those seen when you connect to WiFi at places like Starbucks. These locations intercept all traffic until you take some action, like checking an “I agree with your terms of use” box on a page that the portal serves. Because the portal doesn’t have a valid certificate for the sites it is intercepting, HSTS will prevent loading of the “terms of use” page. Fortunately, most of these problems are easily resolved by simply visiting an HTTP page on a site that isn’t HSTS-protected, and most modern operating systems offer a “captive portal detection” feature that loads an insecure page automatically when joining the network.
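Captive portal detection generally works by fetching a known plain-HTTP URL and checking whether the expected response comes back. The following is a minimal sketch of that idea, not any operating system’s actual implementation; the probe URL and expected body are parameters you would have to supply, and the fetcher is injectable so the logic can be tested offline.

```python
import urllib.request
from typing import Callable


def fetch_body(url: str, timeout: float = 5.0) -> bytes:
    """Plain-HTTP GET; a portal can answer this without any certificate error."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def looks_like_captive_portal(probe_url: str, expected_body: bytes,
                              fetch: Callable[[str], bytes] = fetch_body) -> bool:
    """True if a known http:// probe URL returns something other than the expected body.

    probe_url should be an insecure URL on a host that is NOT HSTS-protected;
    otherwise a browser would upgrade it to HTTPS and the portal could not answer.
    """
    try:
        body = fetch(probe_url)
    except OSError:
        return False  # no connectivity at all is a different problem than a portal
    return body.strip() != expected_body.strip()
```

When the probe comes back with the portal’s “terms of use” page instead of the expected body, the system can show that page directly, sidestepping the HSTS failure.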

Implementation Concerns

For browser implementers, there are a number of interesting challenges when implementing HSTS. For instance, there’s a privacy concern that a local “attacker” could examine the browser’s data store for HSTS rules and thus determine whether the browser had ever visited a given site that uses HSTS but was not in the preload list. Similarly, a remote website could (by using a carefully constructed set of "probing" subdomains) create a token/identifier/"cookie" that could be probed to identify a user. Clearing the list of sites when the browser history is cleared, and partitioning it while the browser is in InPrivate mode, mitigate this privacy risk but limit the effectiveness of the HSTS feature.
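The probing-subdomain trick can be illustrated with a toy simulation in which the browser’s HSTS store is modeled as a Python set. Each bit of an identifier maps to one subdomain: the tracker “writes” a 1-bit by loading that subdomain over HTTPS with an HSTS header, and later “reads” the bits by requesting each subdomain over plain HTTP and noticing which requests were silently upgraded. The tracker.example names and the 8-bit width are hypothetical.

```python
from typing import Set

BITS = 8  # identifier width for this toy example


def bit_host(i: int) -> str:
    # Hypothetical tracker subdomain that stores bit i of the identifier.
    return f"b{i}.tracker.example"


def write_id(user_id: int, hsts_hosts: Set[str]) -> None:
    """Tracker loads https://b<i>.tracker.example for each 1-bit; each response
    carries an HSTS header, which the browser records in hsts_hosts."""
    for i in range(BITS):
        if (user_id >> i) & 1:
            hsts_hosts.add(bit_host(i))


def read_id(hsts_hosts: Set[str]) -> int:
    """Later, the tracker requests http://b<i>.tracker.example for every bit;
    requests the browser silently upgrades to HTTPS reveal the 1-bits."""
    return sum(1 << i for i in range(BITS) if bit_host(i) in hsts_hosts)
```

Clearing the set (as happens when history is cleared) makes read_id return 0, which is why that mitigation works, and also why it discards legitimate HSTS protection.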

Unfortunately, the authors of the HSTS specification declined to require safe handling of Mixed Content (insecure resources pulled into a secure page), meaning that HSTS implementers are free to allow Mixed Content into protected pages, or to let users override mixed content blocking. This is a significant shortcoming in HSTS.

Implementers must also make a number of design decisions in their implementation; for instance, if a DOM includes an insecure reference to a site using HSTS, should that DOM reference itself be changed, or should the secure version of the URL silently be used? How should the “virtual redirection” be handled by Resource Timing and other specifications? Incorrect handling of these decisions could lead to probing attacks.

For Internet Explorer in particular, there's a question about the scope of the feature. Windows has several major HTTP stacks (WinINET, WinHTTP, and System.NET are the most common) and because IE extensions do not rely upon IE itself for networking, they could unknowingly circumvent HSTS protections unless HSTS state is shared across all of the relevant HTTP stacks.

Further Reading

If you’re interested in HSTS, you’ll also be interested in three other specifications:

- Public Key Pinning – PKP allows a site to limit which certificates are deemed acceptable for HTTPS connections.

- Content Security Policy – CSP allows a site to limit content retrieval to specific origins.

- Mixed Content Handling – A proposal for how user agents should handle Mixed Content.

-Eric Lawrence

MVP, Internet Explorer