How the density and distribution of data in the leading key column of an index affects the degree of parallelism chosen for index operations targeting that index?

There’s a topic in SQL Server’s documentation whose title is Configuring Parallel Index Operations, which mentions that the Database Engine can reduce the degree of parallelism if the leading key column of a non-partitioned index has a limited number of distinct values or the frequency of each distinct value varies significantly.

What does that exactly mean and how can that affect the performance of your index maintenance operations?

Related to this question, last week my PFE colleague Karoly Koszo encountered an extreme case which is quite common in many SAP tables. Most of SAP installations have only one client (or Mandant) registered in their ERP deployment. And most of their composed indexes have MANDT (that being the column name SAP uses for such attribute) as the leading key column. That means that for most SAP installations, all these composed indexes have a single value in the leading column. And in those corner cases where they have more than one MANDT, the cardinality of one of them is huge (representing their main company) and the cardinality for other values of MANDT is way smaller.

What Karoly identified in one customer is that when they rebuilt those indexes where the leading column had a density of 1 (i.e. all rows had identical values), the rebuild operation never ran in parallel. In some cases, rebuilding those indexes took hours, and he wanted to 1) identify the reason why it wasn’t parallelizing in the first place, and 2) whether there was a switch he could activate or an action he could take to induce such parallelization.

In order to illustrate what he was seeing, the following script creates two tables, each with its primary key implemented as a clustered index. For the table called EvenDistribution, the leading key column of the index has one different value in each row (highest possible selective). Representing the opposite corner, the table called SkewedDistribution uses the exact same value for the leading key column of the index on each and every row.

use master

go

if exists(select * from sys.databases where name = 'ParallelIndexRebuild')

drop database ParallelIndexRebuild

go

create database ParallelIndexRebuild

go

set nocount on

go

use ParallelIndexRebuild

go

if exists(select * from sys.tables where name = 'EvenDistribution')

drop table EvenDistribution

go

create table EvenDistribution (

c0 int not null,

c1 int not null,

c2 int,

c3 char(2000))

go

alter table EvenDistribution add constraint PK_EvenDistribution primary key clustered ([c0], [c1])

go

if exists(select * from sys.tables where name = 'SkewedDistribution')

drop table [SkewedDistribution]

go

CREATE TABLE [SkewedDistribution](

[c0] [int] not null DEFAULT 1,

[c1] [int] NOT NULL,

[c2] [int] NULL,

[c3] [char](2000) NULL)

go

alter table SkewedDistribution add constraint PK_SkewedDistribution primary key clustered ([c0], [c1])

go

declare @i int

set @i=1

while @i<=10000

begin

insert into EvenDistribution (c0, c1, c2, c3) values(@i, @i, rand()*200000, 'a')

insert into SkewedDistribution (c0, c1, c2, c3) values(default, @i, rand()*200000, 'a')

set @i=@i+1

end

go

Now, if we run the following two statements to rebuild both indices:

alter index PK_SkewedDistribution on SkewedDistribution

rebuild

go

alter index PK_EvenDistribution on EvenDistribution

rebuild

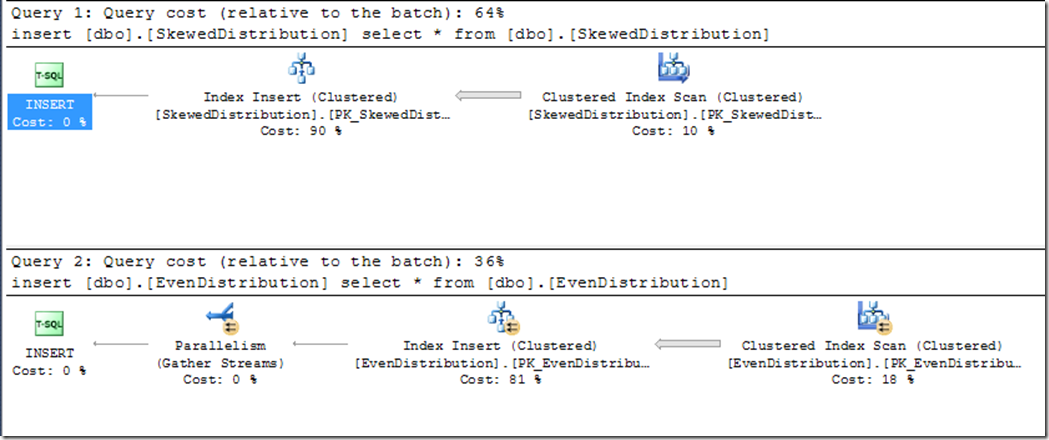

and retrieve their actual execution plan, this is what we get:

Notice that despite 1) the schema of both tables is identical (matching column names, data types, etc), 2) their page cardinality (i.e. number of pages allocated to each index) also matches, 3) both statements have been processed by the same instance of SQL Server – and provided nobody changed the global max degree of parallelism just between the execution of these two statements, as it was the case – the same set of SKU dependent parallelization restrictions were applied in both cases, SQL Server has decided to run the rebuild of SkewedDistribution.PK_SkewedDistribution serially and the rebuild of EvenDistribution.PK_EvenDistribution in parallel. And, no matter how many times you repeat this test, it will always behave exactly like that.

Now, the question is why SQL decides to parallelize the index rebuild in one case and not in the other?

Well, in both cases SQL determines that since the cost of the cheapest serial query plan produced is higher than the value set as “cost threshold for parallelism” (5 in my case), it goes through the Full optimization level where it tries to find an even cheaper parallel plan. During that phase of optimization, one of the factors it takes into consideration to decide whether it goes parallel or not is the number of reasonably evenly distributed partitions the histogram associated to the leading key column of the index can produce.

In the first case (that where the density of the first key column is 1), the function in charge of finding out whether or not the histogram can produce reasonably evenly distributed partitions, suggests the use of a single partition (i.e. the use of a serial plan).

This is a limitation imposed by SQL Server’s design. Basically, for SQL to be able to parallelize the index creation in a case like this, it would have to support the use of multi-column histograms, and that is something not available yet. Not even in the most recently released version, which is SQL Server 2012.

There are other cases though were the previous explanation wouldn’t fit so clearly to understand why the index operation is or is not parallelized. The following being one of them. In this case, we are working over the exact same table and index schema we had before, only that we have modified the data in it. This time, out of the 10K rows we have inserted, not all of them have the exact same value of 1 for column c0 (which is the leading column of the index). This time, 7501 rows (75.01%) have their column c0 set to 1, and the remaining 2499 (24.99%) have it set to 2.

use master

go

if exists(select * from sys.databases where name = 'ParallelIndexRebuild')

drop database ParallelIndexRebuild

go

create database ParallelIndexRebuild

go

set nocount on

go

use ParallelIndexRebuild

go

if exists(select * from sys.tables where name = 'SkewedDistribution')

drop table [SkewedDistribution]

go

CREATE TABLE [SkewedDistribution](

[c0] [int] not null DEFAULT 1,

[c1] [int] NOT NULL,

[c2] [int] NULL,

[c3] [char](2000) NULL)

go

alter table SkewedDistribution add constraint PK_SkewedDistribution primary key clustered ([c0], [c1])

go

declare @i int

set @i=1

while @i<=7501

begin

insert into SkewedDistribution (c0, c1, c2, c3) values(1, @i, rand()*200000, 'a')

set @i=@i+1

end

set @i=1

while @i<=2499

begin

insert into SkewedDistribution (c0, c1, c2, c3) values(2, @i, rand()*200000, 'a')

set @i=@i+1

end

go

If everything I have said until this point is true, the optimizer should this time decide that the rebuild index operation could be parallelized. Let’s give it a try then. So, running this:

alter index PK_SkewedDistribution on SkewedDistribution

rebuild

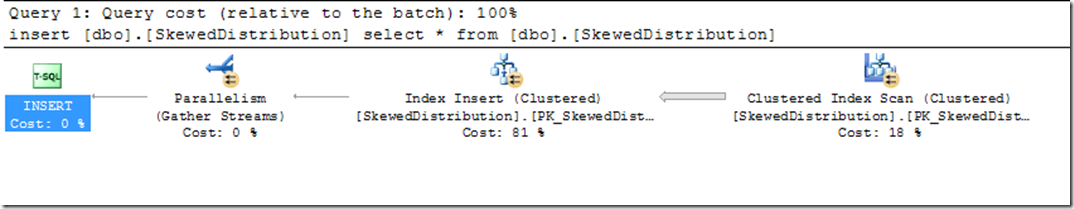

Uses this execution plan, which runs in parallel:

Q: How many threads do you think?

A: Indeed, two threads only. No matter how many schedulers are available to that instance of SQL Server and what is the maxdop globally configured.

Q: Why two threads only?

A: Because the histogram associated to column c0 tells the optimizer there exist only two different values (1 & 2).

And here, you may fairly ask:

Q: But which histogram? The index was created when the table was empty. So the statistics associated to that index, that contain the aforementioned histogram were empty just before the rebuild operation was issued, right?

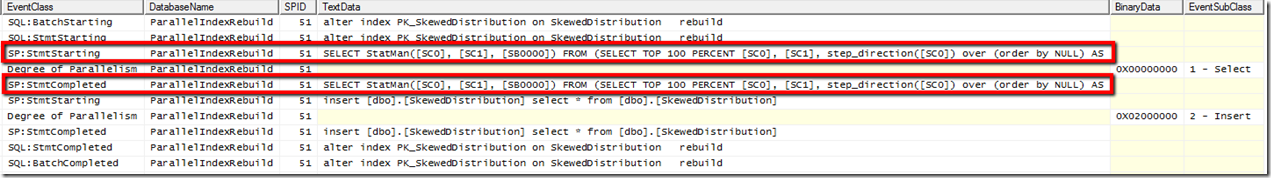

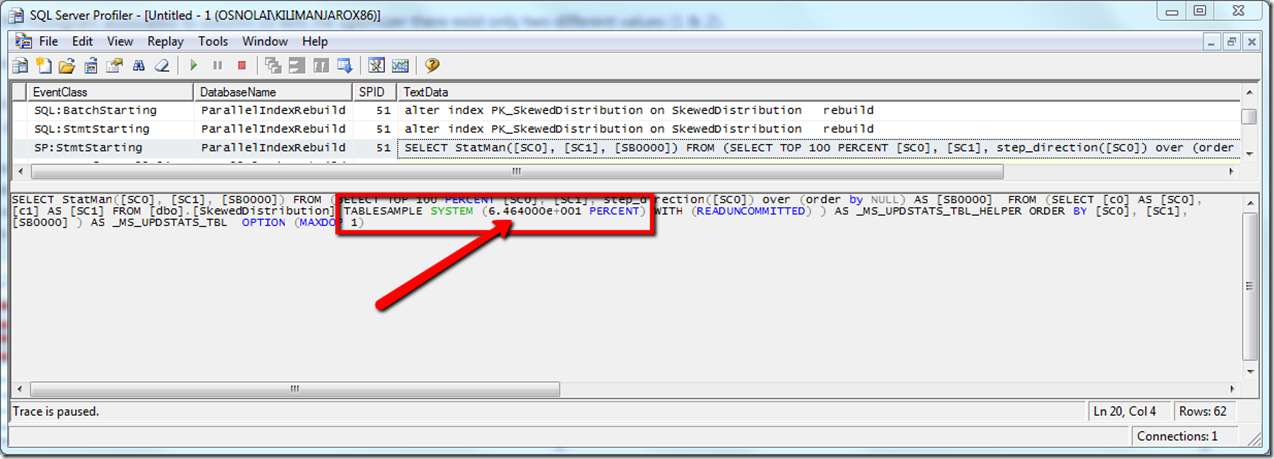

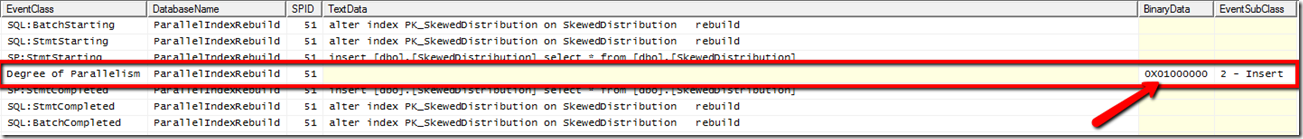

A: That’s absolutely correct. But when I issued the ALTER INDEX … REBUILD statement, the optimizer noticed that the stats were outdated, and since the database was configured to automatically update the stats whenever it was needed, it just did that. Observe, in the following trace, the highlighted statement – that runs in the context of the same session (51) – which just updates those stats. Notice also in the following trace, that the Degree of Parallelism event associated to the insert [dbo].[SkewedDistribution] select * from [dbo].[SkewedDistribution] (i.e. the statement that populates the new index structure), indicates that it is using a parallel plan (i.e. BinaryData >= 0x02000000).

So, that’s the histogram based on which that decision was made.

Q: Now, what would you expect to happen if we run the same ALTER TABLE … REBUILD statement one more time?

A: That SQL would use the exact same plan, right?

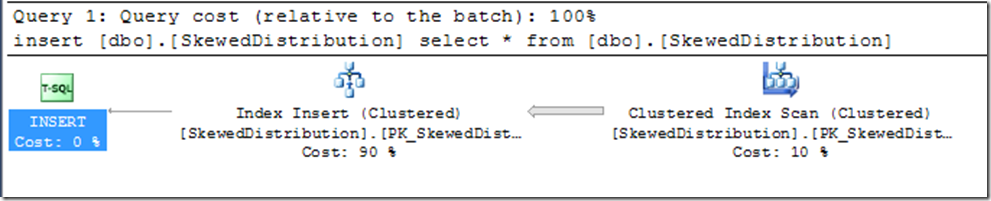

Well, let me tell you that’s not the case. If we run it again, it will use the following plan:

What the …?! Where has the parallelization gone?

Let me explain you a few more details about the way the function that determines the ideal number of partitions into which the index can be parallelized works.

To do so, let me bring back one assertion I made earlier. I said that during the Full optimization phase, one of the factors the optimizer takes into consideration to decide whether it goes parallel or not is the number of reasonably evenly distributed partitions the histogram associated to the leading key column of the index can produce.” Now, what does it mean “reasonably evenly” distributed partitions?

If anyone partition contains more than 3/4 (75%) of the total row cardinality, it considers an extremely skewed distribution and it doesn’t favor parallelization. The idea behind this is that if we have two partitions with 1/4 and 3/4 of the table size, the parallel plan is expected to have the same performance as that of a serial plan. So, only for partitioning which is more balanced than that, the parallelism will be favored.

If SQL would maintain multi column histograms, and index operations would leverage them in the partitioning phase, the problem described in this example wouldn’t be such. But, as it has been said already, this is something not present in released versions of the product or even something planned to be included in the short/medium term.

Still I owe you something, right? I owe you an explanation on why with the last sample script, the first time I ran the index operation it parallelized, even though the values of the 10k rows stored in the table didn’t produce multiple reasonably evenly distributed partitions (i.e. there were 7501 rows whose leading key column’s value was 1, that’s 75.01% which is over 75%).

Either we are overseeing something or the logic explained above doesn’t seem to fit.

We all agree that what is logical and expected, given the previous description of how that partitioning function works, is that neither the first nor any subsequent index operation attempts would have been run in parallel, because one of the partitions exceeds that largest size of 3/4.

So, why it did run in parallel the first time we executed the rebuild statement then?

As I explained before, the statistics associated to the index were created at the same time the index got created. That was when the table was empty. By the time the index operation is executed, the optimizer determines that they are outdated, and if possible (if auto update stats is enabled) they should be updated before continue seeking an optimal plan.

It happens that the optimizer decided that, in order to update those stats automatically, it didn’t need to scan all the existing rows (i.e. didn’t do a fullscan), but instead considered that a sample of 64.64% of the rows was sufficient to statistically represent the whole population, with a reasonable level of accuracy.

And it is true that it is sufficient for most of the decisions it must take. But in this corner case we are exposing here, it happens that inferring the cardinality of c0 = 1 and c0 = 2, based on the 64.64% sampled, results in a slightly inaccurate cardinality estimation of 7500 rows (c0=1) and 2500 (c0 =2) versus the actual cardinality of 7501 and 2499. With such estimation, we’re only one row away from the tipping point at which the partitions wouldn’t be considered reasonably evenly distributed, and it wouldn’t parallelize. However, observe that it is indeed running the parallel plan, only that it is being run serially (that is what BinaryData = 0x01000000 indicates.)

During the rebuild of the index, and since the index must be scanned beginning to end, the stats associated to it are recreated based on that fullscan (100% of the rows). And now, based on that histogram we can precisely know the two partitions are not reasonably evenly distributed, because it can precisely estimate 7501 rows for the partition where c0 = 1 and 2499 rows for the one where c0 = 2. And that is the reason why any subsequent attempt to run any index operation over this index won’t parallelize.