How the query execution engine calculates the threshold it uses to detect inaccurate cardinality estimation and fire the inaccurate_cardinality_estimate extended event?

Starting with SQL Server 2012, the query engine folks introduced an extended event, inaccurate_cardinality_estimate, you can use to identify those running queries for which any of its iterators outputs significantly more rows than those estimated by the Query Optimizer.

To be more precise, it is not the case with ANY iterator. This diagnosing functionality will not be attached to iterators like Assert, Exchange, or Scalar, because they don’t change the number of rows between their input and their output. Any cardinality estimation (CE) error for those iterators would always be defined by a child operator. By being selective on deciding which iterators will incorporate this diagnostics and which will not, the query engine avoids creating unnecessary flood.

Now, my colleague Guillaume Kieffer asked the following questions about this event:

- When does the event fire? Meaning what condition or threshold it uses to fire?

- What value does the actual_rows column of the event returns? It seems to be using thresholds as well. According to our testing it reports 10, then 60, etc. It’s easy to reproduce with the scripts below. When we keep adding rows, the actual_rows value has got nothing to do with the actual number of rows shown in the plan.

And this was the repro script he provided me with:

use AdventureworksPTO

go

if object_id ('dbo.cardTable') is not null

drop table dbo.cardTable

go

create table dbo.cardTable (c1 int)

go

set nocount on

begin tran

declare @i int = 0

while @i < 10000

begin

if (@i < 10)

begin

insert into cardTable values (-1)

end

if (@i < 8)

begin

insert into cardTable values (-2)

end

insert into cardTable values (@i)

insert into cardTable values (@i)

set @i = @i + 1

end

commit tran

create index ix_C1 on dbo.cardTable (c1)

go

if object_id ('dbo.p_test') is not null

drop procedure dbo.p_test

go

create procedure dbo.p_test @i int

as

select * from dbo.cardTable where c1 = @i

go

Run the following:

set statistics profile on

exec dbo.p_test 1

go

exec dbo.p_test -1

go

exec dbo.p_test -2

insert into cardTable values (-2)

go 100

--run again, the actual_rows value has got nothing to do with the actual number of rows shown in the plan:

set statistics profile on

exec dbo.p_test –2

So, let me start responding one question at a time.

When does the event fire? (before you proceed reading this post, make sure you have read The Building Blocks of Query Execution from Craig’s blog, to understand when the GetRow method is called for an iterator.)

First of all, there must be, at least one or more active extended event session started, which are subscribed to the inaccurate_cardinality_estimate event. If there are none, the query engine won’t waste any resources collecting the data it requires to spot such inaccuracy on any given iterator.

Secondly, once the query begins executing, the first time the GetRow method for a given iterator is invoked, it will compute an initial threshold. The threshold will be calculated based on the amount of rows the query optimizer estimated this particular iterator would return.

After that initial threshold value is found, every time the GetRow method is invoked for the iterator, it will keep good count of how many rows have been actually returned so far. Once the number of rows returned exceeds the threshold value, it will fire the event and will recalculate the threshold for that iterator. But in this occasion, it will not base the calculation on the cardinality estimated by query optimizer, because it would just return the same threshold value, but it will base it on the number of rows returned so far by the iterator.

If it would continue using the same threshold over and over again, it would fire the event too often. The idea is that subsequent firing uses a progressive threshold based on the actual number of rows so far.

All this means is that, for any single execution of a given query, and for any single iterator in the query plan which is being run, the event could be fired multiple times. How many? Well, it depends on how deviated was the estimated cardinality from the actual number of rows returned. More details on the figures used to calculate the threshold are available later in this post.

What value does the actual_rows column of the event returns? … When we keep adding rows, the actual_rows value has got nothing to do with the actual number of rows shown in the plan.

actual_rows returns the number of rows the iterator has returned so far at the point when the extended event is being fired. It doesn’t mean that is the total number of rows actually returned by the iterator when the query completes, because it cannot know that information until the query has finished executing.

The actual number of rows shown in the query plan for the iterator is a piece of information produced only when the iterator has completed execution.

And what is taking into consideration to calculate this threshold?

The base cardinality, wherever it comes from. As it was explained while answering the previous question, this value will initially correspond to the cardinality estimated by the query optimizer, and after the event has been fired once for an iterator during one execution of the plan, it will correspond to the actual number of rows produced by that iterator, to that point.

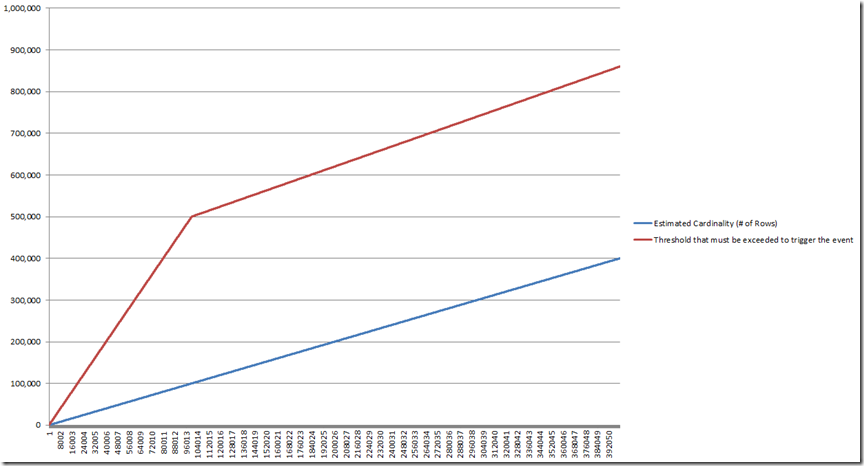

A crossover value, that will permit having different sensitivity depending on whether the number of rows exceeds this crossover value or not. The linear function that computes the threshold will use a different factor depending on whether the base cardinality exceeds this crossover value or not. By default, this value is set to one hundred thousand rows (100,000).

One high factor used to calculate the threshold when the base cardinality exceeds the crossover value. By default, this value is set to one point two (1.2).

One low factor used to calculate the threshold when the base cardinality has not reached the crossover value. By default, this value is set to five point zero (5.0).

With that, we have a linear function with the low factor when the base cardinality is up to the crossover value, and a linear function with the high factor, shifted so that it continues after crossover value rows without dropping.

if (base cardinality<= crossover)

{

threshold = floor(base cardinality * low factor));

}

else

{

threshold = floor( base cardinality * high factor + crossover * (high cardinality – low cardinality)))

}

}

To graphically understand how the function works, the following graph shows what would be the resulting threshold for a series of base cardinalities ranging from 0 to 400,000 rows.

In the example Guillaume provided, this is what happened:

- When the stored procedure is executed the first time, it is passed 1 as the value to its @i parameter. While compiling the plan for the SELECT, the optimizer accurately estimates a cardinality of 2 rows for the Index Seek, and attaches that estimated cardinality to the iterator in the compiled plan.

- This first time the query executes, the threshold is calculated based on the cardinality estimated by the QO (2). The resulting value is 10 rows. Meaning that if the Index Seek iterator would produce 10 rows, it would have to fire the extended event. But since the estimated cardinality is so precise, the Index Seek produces only 2 rows and never reaches that limit.

- The second time the stored procedure is invoked, it is passed -1 for the @i parameter. Since there is a compiled plan already cached for that query, it will use that one. And so, the first threshold it computes is based on the cardinality QO had estimated and attached to the iterator, which is 2 rows (remember that the plan was optimized for a value of @i == 1, for which there are only 2 rows in the index). The calculated threshold for such cardinality is 10 rows. And again, this means that if the Index Seek produces 10 rows, it will have to fire the event. Since there are ten rows in the index where c1 = -1, then it fires the event when the 10th row is read. It also recalculates a new threshold based on the total number of rows produced so far (10). That results in a threshold of 50. If this iterator would continue producing 50 more rows, it would have to fire the event again, but it has exhausted all rows it could find matching that seek predicate.

- The third time the stored procedure is invoked, @i is set to –2. The base cardinality used to calculate the threshold is still 2, and therefore the threshold is 10 rows. There are only 8 rows where c1 = -2, so the extended event is not fired in this case.

- One hundred more rows are inserted into the index with c1 = –2, and the stored procedure is executed again for @i set to –2. As per the same reasons explained before, base cardinality is 2 and the threshold is 10. This time, when the Index Seek produces its tenth row, the event is triggered, the new threshold is calculated using 10 (number of rows returned so far), which results in a threshold of 50 rows. That means that if the Index Seek produces 50 rows from the point where this new threshold was computed, it will have to fire the event again. And so it does. When? When it has returned (in total) 60 rows. After firing the event, it recalculates the threshold with a based cardinality of 60. That results in a threshold of 350. If it would produce 350 more rows, which is not the case because there are only 108 rows matching the SARG used, it would have to fire the event one more time, and continue the same loop.

Regarding the meaning of the information exposed through the different columns available in this event: column node_id contains the ID associated to the node (iterator) in the query plan tree for which the event has been produced, thread_id is the ID of the worker thread that was calling the GetRow method of the iterator and encountered the deviation between estimated and actual values, estimated_rows corresponds to what I referred to as “base cardinality” in this post, actual_rows is the number of rows produced so far by the iterator every time the event is fired, and fire_count is the number of times the event has been fired for the same iterator, within the same execution of the query.