More details on how Effects work

The last couple of posts in this series have dug into features and example usage of Effects in WPF. Let’s go into some other aspects of the feature that are important to understand.

Software Rendering

When we discuss Effects, we usually describe them as GPU-accelerated, and that is typically the case. However, there are three important situations where Effects cannot be GPU-accelerated:

- When the graphics card does not support Pixel Shader 2.0 or above. This is becoming increasingly rare, but such hardware is definitely still out there.

- When the WPF application is being remoted over Remote Desktop or Terminal Server or some other kind of mirror driver.

- When the WPF application is in a mode where software rendering is required – such as rendering to a RenderTargetBitmap, or printing.

In all of these cases, WPF will render Effects in software. What does that mean? As mentioned in an earlier post, Effects are written in HLSL, the language used to program the GPU. WPF incorporates a “JITter” (Just-in-Time compiler) that takes the compiled HLSL bytecode, validates it, and dynamically generates x86 SSE instructions that execute the shader in software the same way it would be executed on the GPU. SSE stands for “Streaming SIMD Extensions”, and the SIMD in that name is an indicator that it’s a decent alternative when there isn’t a GPU available. It’s certainly considerably slower than the GPU (and considerably less parallel), and it obviously taxes the CPU more, but since the SSE unit is fundamentally a SIMD processor as well, it’s quite well suited to executing HLSL. All told, we’ve been very pleased, and pleasantly surprised, with how well the CPU executes shaders in the absence of a GPU.
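As a rough illustration of the third situation listed above, the sketch below renders an element that carries an Effect into a RenderTargetBitmap, which is one of the cases where the software path is used. The class and method names are hypothetical, and the 96 DPI and Pbgra32 settings are simply common choices assumed for the example.

```csharp
using System.Windows;
using System.Windows.Media;
using System.Windows.Media.Effects;
using System.Windows.Media.Imaging;

// Hypothetical helper, not part of WPF.
static class SoftwareRenderSketch
{
    public static BitmapSource RenderWithBlur(UIElement element, int width, int height)
    {
        // Attach one of the built-in Effects.
        element.Effect = new BlurEffect { Radius = 5 };

        // Lay the element out so it has a size to render at.
        element.Measure(new Size(width, height));
        element.Arrange(new Rect(0, 0, width, height));

        // Rendering to a RenderTargetBitmap requires software rendering,
        // so the Effect is executed on the CPU here rather than on the GPU.
        var bitmap = new RenderTargetBitmap(width, height, 96, 96, PixelFormats.Pbgra32);
        bitmap.Render(element);
        return bitmap;
    }
}
```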

Interactivity

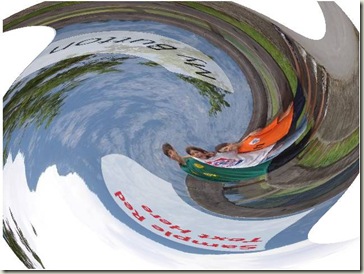

The Effects shown thus far in this series are all “in-place” effects, in the sense that they output some modification of the pixel that’s at the same location in the input texture as its destination in the output texture. That is, they just manipulate color, and not position. However, distortion types of effects, like swirls, bulges, pinches, etc., are all very powerful, important and achievable Effects. In those cases, you may get a result like this:

It so happens that the button and the textbox in the above image remain fully interactive and operable, and you can click on their onscreen position to give them focus. How does this work, given that HLSL shaders know nothing about input? The Effect author has an opportunity to define the “EffectMapping” that the Effect subjects its points to. The author of the Effect is responsible for returning a GeneralTransform that takes a position and returns the corresponding post-Effect position. The author also needs to provide the inverse mapping. This mapping is then used for input hit testing as well as for functionality like TransformTo{Ancestor,Descendant,Visual}.

(Note that GeneralTransform is a generalization of the Transform class that, while not heavily used, has existed since the first version of WPF. It represents transforms that cannot be expressed via an affine 3x2 matrix, and is thus necessary for representing the flexibility that Effects possess. It was introduced for exactly this sort of purpose.)
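To make the mapping idea concrete, here is a minimal sketch of a GeneralTransform that squeezes content horizontally, together with an Effect that returns it from EffectMapping. The names SqueezeTransform and SqueezeEffect are hypothetical, the squeeze itself is only an illustration, and the coordinate space is an assumption: the sketch treats points as lying in the unit square, which you should verify against the documentation before relying on it. The pixel shader that would perform the matching distortion is omitted.

```csharp
using System;
using System.Windows;
using System.Windows.Media;
using System.Windows.Media.Effects;

// Hypothetical mapping: squeezes content horizontally into [left, right].
// Assumption: points are expressed in the unit square, (0,0) to (1,1).
public class SqueezeTransform : GeneralTransform
{
    private readonly double left;
    private readonly double right;
    private readonly bool forward;

    public SqueezeTransform(double left, double right, bool forward)
    {
        if (right <= left) throw new ArgumentException("right must exceed left");
        this.left = left;
        this.right = right;
        this.forward = forward;
    }

    public override bool TryTransform(Point inPoint, out Point result)
    {
        // Forward: pre-Effect position to post-Effect position.
        // Otherwise: the inverse, from post-Effect back to pre-Effect.
        double x = forward
            ? left + inPoint.X * (right - left)
            : (inPoint.X - left) / (right - left);
        result = new Point(x, inPoint.Y);
        return true;
    }

    public override Rect TransformBounds(Rect rect)
    {
        if (rect.IsEmpty) return rect;
        Point topLeft, bottomRight;
        TryTransform(rect.TopLeft, out topLeft);
        TryTransform(rect.BottomRight, out bottomRight);
        return new Rect(topLeft, bottomRight);
    }

    // The inverse mapping is the same squeeze run in the other direction.
    public override GeneralTransform Inverse
    {
        get { return new SqueezeTransform(left, right, !forward); }
    }

    protected override Freezable CreateInstanceCore()
    {
        return new SqueezeTransform(left, right, forward);
    }
}

public class SqueezeEffect : ShaderEffect
{
    // PixelShader setup and shader constants are omitted from this sketch.
    protected override GeneralTransform EffectMapping
    {
        get { return new SqueezeTransform(0.25, 0.75, true); }
    }
}
```

The important design point is that the forward transform and its Inverse must agree with what the shader actually does to the pixels. Otherwise clicks will land somewhere other than where the content appears.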

Full Trust Requirement

WPF will only run Effects within fully-trusted applications. There is currently no support for running Effects in partially-trusted or untrusted apps. This is something we want to move towards in a subsequent release (particularly for the software rendering implementation).

What sort of shaders?

GPUs expose multiple types of shaders. In Shader Model 3.0 and prior, there are both Vertex Shaders and Pixel Shaders. Shader Model 4.0 adds Geometry Shaders. For the WPF Effects feature, we only support the use of Pixel Shaders. Furthermore, the only value that varies on each per-pixel invocation of the shader is the incoming texture coordinate (uv), representing where on the output surface the shader is running. There are a number of powerful techniques that one can potentially achieve by introducing Vertex Shaders and more flexible input data for the Pixel Shader. However, for our first time getting into the GPU programming arena, WPF is only exposing the feature via Pixel Shaders as described here.

What about BitmapEffects?

The initial release of WPF introduced a class called BitmapEffect, and a property on UIElement also called BitmapEffect. These played much the same role, conceptually, as Effects do. However, they were riddled with performance problems. The main problems with them were that a) they executed in software, and b) they executed on the UI thread. The first issue means they didn’t take advantage of the GPU. The second issue is worse, and means that everything in the tree leading up to the BitmapEffect needed to be rendered in software as well. Thus, the performance impact on an application was quite considerable. For certain cases (BitmapEffects on small pixel areas that aren’t updating often) things were OK. However, one quickly fell off the performance cliff into the problems I describe above.

In WPF 3.5 SP1, we still have BitmapEffects, though their use is discouraged and, in fact, they are marked [Obsolete] in the WPF assemblies, meaning that their use will generate compiler warnings. This is done so that they can be removed from the system in a future version (not sure just when that would happen), while existing apps that depend upon them continue to work in the meantime.

Of the built-in BitmapEffects, there are two that are far and away the most commonly used: BlurBitmapEffect and DropShadowBitmapEffect. For these, we’ve built equivalent (but hardware accelerated) Effect-based versions that you can attach to UIElement.Effect now. Also, we have an “emulation” layer so that apps that are already out there that hook up UIElement.BitmapEffect to the BlurBitmapEffect or DropShadowBitmapEffect will now result in use of the Effect-based ones. (Note that this won’t work in all cases – for instance, if you use a BitmapEffectGroup, this won’t get emulated.)
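For new code, attaching the Effect-based versions directly looks roughly like the sketch below. BlurEffect and DropShadowEffect live in System.Windows.Media.Effects. The helper class, the method name, and the specific property values are made up for illustration.

```csharp
using System.Windows.Controls;
using System.Windows.Media;
using System.Windows.Media.Effects;

// Hypothetical helper, not part of WPF.
static class EffectMigrationSketch
{
    public static void Apply(Button button, TextBox textBox)
    {
        // Instead of button.BitmapEffect = new DropShadowBitmapEffect(), use:
        button.Effect = new DropShadowEffect
        {
            BlurRadius = 8,
            ShadowDepth = 4,
            Color = Colors.Black,
            Opacity = 0.5
        };

        // Instead of textBox.BitmapEffect = new BlurBitmapEffect(), use:
        textBox.Effect = new BlurEffect { Radius = 3 };
    }
}
```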

Additional RenderCapability APIs

With the addition of Effects, the System.Windows.Media.RenderCapability class gets two new members to allow applications to fine-tune their use of shader-based Effects:

- IsPixelShaderVersionSupported(major, minor) will tell you whether the system being run on supports the specified Pixel Shader version on the GPU. This is useful for understanding whether effects that you want to run will run in hardware, as you may potentially want to exclude them if they don't run in hardware.

- IsShaderEffectSoftwareRenderingSupported is a Boolean property that says whether the system being run on supports SSE2, which is the determinant for whether the software JITter described above will work.
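A minimal sketch of how an application might use these two members follows. The helper class and the policy of dropping the Effect when it would not run on the GPU are assumptions for illustration. Whether to fall back to software or skip the Effect entirely is up to the application.

```csharp
using System.Windows;
using System.Windows.Media;
using System.Windows.Media.Effects;

// Hypothetical helper, not part of WPF.
static class EffectCapabilitySketch
{
    public static void ApplyIfAccelerated(UIElement element, Effect effect)
    {
        // True when the GPU supports at least Pixel Shader 2.0.
        bool hardware = RenderCapability.IsPixelShaderVersionSupported(2, 0);

        // True when the CPU supports SSE2, so the software JITter can run.
        bool software = RenderCapability.IsShaderEffectSoftwareRenderingSupported;

        // Example policy: only use the Effect when it runs in hardware.
        // An app that tolerates slower rendering could test 'software' instead.
        element.Effect = hardware ? effect : null;
    }
}
```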

That’s it for this post, and that pretty much wraps up the general information on using Effects and some of the information surrounding their use. If you’re interested in authoring Effects, you may well be clamoring for more details there. That will be the topic of the next set of posts in this series.