The Promise of Differential Privacy

We recently released a new whitepaper, written by Javier Salido on my team, about Microsoft’s research on Differential Privacy. To help set the stage, I’d like to provide some background on this timely topic.

Over the past few years, research has shown that protecting the privacy of individuals in a database can be extremely difficult, even after personally identifiable information (e.g., names, addresses and Social Security numbers) has been removed. As researchers have pointed out, this is because, with enough effort, it is often possible to correlate databases using information that is not traditionally considered identifiable. If any one of the correlated databases contains information that can be linked back to an individual, then information in the others may become linkable as well.
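To make this linkage risk concrete, here is a minimal Python sketch that uses entirely hypothetical toy data. It assumes two tables: a "de-identified" table with names removed, and a public table that still carries names. Joining them on fields such as ZIP code, birth date and sex (often called quasi-identifiers) is enough to re-attach a name to a sensitive record whenever that combination of fields is unique. The table contents and the `link` helper are illustrative only.

```python
# Hypothetical toy data: a "de-identified" table with names removed...
deidentified_records = [
    {"zip": "02138", "birth_date": "1960-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1975-03-02", "sex": "M", "diagnosis": "asthma"},
]
# ...and a separate public table (hypothetical) that still includes names.
public_roster = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1960-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "02140", "birth_date": "1980-11-15", "sex": "M"},
]

# Quasi-identifiers: fields not traditionally considered identifying on their own.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def key(record):
    """Build the join key from a record's quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(deidentified, public):
    """Join the two tables on quasi-identifiers.

    Returns (name, diagnosis) pairs for sensitive rows that match
    exactly one public record, i.e. rows that are re-identified."""
    index = {}
    for person in public:
        index.setdefault(key(person), []).append(person)
    matches = []
    for row in deidentified:
        candidates = index.get(key(row), [])
        if len(candidates) == 1:  # a unique match re-identifies the row
            matches.append((candidates[0]["name"], row["diagnosis"]))
    return matches


print(link(deidentified_records, public_roster))
# prints [('Alice Example', 'hypertension')]
```

Neither table contains a name next to a diagnosis, yet the join produces one. If more than one public record shares the same quasi-identifiers, this sketch reports no match, which is why coarser or rarer combinations of fields change the risk.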

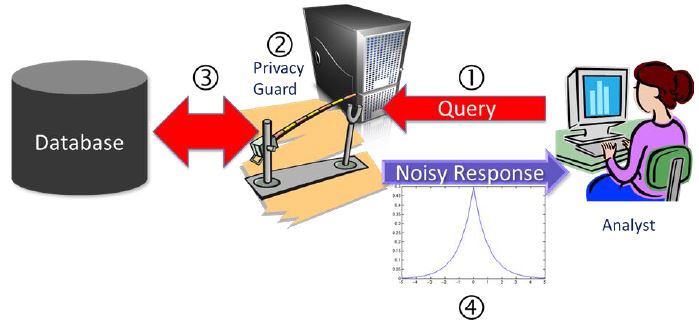

Differential Privacy (DP) offers a mathematical definition of privacy and a research technology that satisfies that definition. The technology helps address re-identification and other privacy risks when information is extracted from a given database. It does this by adding noise to the otherwise correct results of database queries. The noise helps prevent query results from being linked to other data that could later be used to identify individuals. Differential Privacy is not a silver bullet, however: to reduce risk, it needs to be paired with policy protections, such as commitments not to release the contents of the underlying database.
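For readers who want a concrete picture, the definition in its commonly cited form says that a randomized mechanism M is ε-differentially private if, for any two databases D and D′ that differ in one individual's record and any set of possible outputs S, Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D′) ∈ S]. One standard way to meet this for a counting query is the Laplace mechanism: the true count changes by at most 1 when one person's record is added or removed, so adding Laplace noise with scale 1/ε suffices. The Python sketch below is an illustration under those assumptions, not the method described in the whitepaper; the function names, the toy `ages` data and the choice of ε = 0.5 are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential draws, each with
    mean `scale`, follows a Laplace(0, scale) distribution."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Answer "how many records satisfy predicate?" with epsilon-DP.

    A counting query has sensitivity 1 (one person's record changes
    the true count by at most 1), so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical usage: a noisy count of people over 40 (true answer is 3).
rng = random.Random(0)
ages = [34, 29, 41, 52, 38, 61, 27]
noisy = private_count(ages, lambda age: age > 40, epsilon=0.5, rng=rng)
print(f"noisy count of people over 40: {noisy:.2f}")
```

Smaller values of ε mean a larger noise scale and stronger privacy; larger values give more accurate answers. Each query spends part of the privacy guarantee: under basic composition, answering k queries that are each ε-differentially private is kε-differentially private overall.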

The first steps toward DP were taken in 2003, but it was not until 2006 that Drs. Cynthia Dwork and Frank McSherry of Microsoft Research (MSR), Dr. Kobbi Nissim of Ben-Gurion University, and Dr. Adam Smith of the Pennsylvania State University discussed the technology in its present form. Since then, a significant body of work has been developed by scientists from MSR and other research institutions around the world. DP is catching the attention of academics, privacy advocates and regulators alike.

As interest in this complex topic continues to rise (for example, two recent posts on the U.S. Federal Trade Commission’s technology blog discuss DP, and Rutgers University recently hosted a DP-focused workshop), we hope our new whitepaper will illustrate the promise of Differential Privacy.

I think this exciting research shows how technology and policy can work in concert to tackle privacy challenges.

By Brendon Lynch, chief privacy officer, Microsoft