Putting data in cold storage with Windows Azure Table

Sometimes the data that’s being kept in SQL Azure relational storage doesn’t really need to be there. A couple of examples of this are blobs and historical records. Lots of folks have discovered the wonders of moving blobs from relational storage to Windows Azure blob storage. Just leave behind a pointer and add a line of code and instantly save relational storage capacity for more valuable pursuits.

It’s a little more complicated with historical records. Lots of transactional systems have a few big tables (such as invoice item). The rest or the tables can be thought of as forming contextual frameworks (customer / product) or provide reference (zip code). Most of the transactional records just sit there doing nothing most of the time. You input them, manage them through to a completed state (paid invoice, for example) then print a few reports. Done. Wouldn’t it be nice if you didn’t have to keep them in (relatively expensive) relational storage for the next few years though you must keep them for regulatory or compliance reasons (for example)?

The idea of expensive storage and cheap storage isn’t new. The main thing I wanted to do with this blog is remind folks of it and give an example of some code that implements one variation. In this example, I imagine that each and every invoice must be represented in the relational store with a tombstone so that it can be retrieved easily. You never know when someone will decide to reach back a couple months or years into the archive.

A tombstone in this context is just a record that says data exists, but not in the relational table. The tombstone contains enough information to find the original data at need. You could set up tombstones to represent single records or any grouping that you care to imagine and that makes sense. For example, maybe you permit people to reach into the archive to print a report for a single day and so you set up your tombstones to represent a whole day’s transactions.

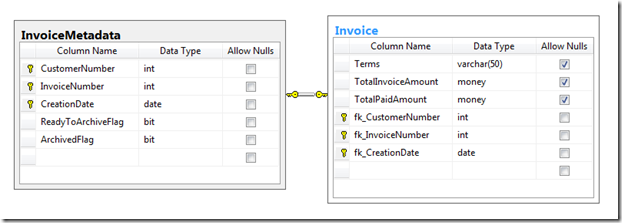

Ideally, the tombstone record is small relative to the data that you’re archiving. A few bytes to point to a lot of bytes. In my example, the invoice record has a invoice number, customer number and a creation date making up the primary key. The actual invoice record will have columns representing shipment method, payment terms, the amount of the invoice, the amount paid, and so on. For simplicity sake, I created an InvoiceMetadata table that has exactly one record for each Invoice record. The InvoiceMetadata table has the primary keys plus a couple of flags that are used for tracking archived data.

When the management of the invoice is complete (it’s paid), the ReadyToArchiveFlag is set to true. If the ArchivedFlag is set to true, it means that there is a copy of the Invoice record in the archive already.

There are 4 main use cases associated with this example:

- Create new invoice records and manage them through their lifecycle

In the sample code, this is accomplished through the “Reset” button and “Test” button. “Reset” clears the data store and then creates 50 invoices. “Test” sets some of them as “paid” and therefore ReadyToArchiveFlag is also set. Finally, a message is dropped into a queue. The message instructs a worker role to archive any invoices that are ready. - Archive invoices that have completed their lifecycle

The worker role finds the message and makes a copy of each invoice that is ready. It copies the records to Azure Table storage and deletes them from the SQL Azure table. It also sets the “ArchivedFlag” in InvoiceMetadata. - Bring back invoices that are needed for research, reporting, etc.

For this use case I have a “Report” button. I imagine that someone wants to print invoices for a certain date. The code behind the “Report” button drops a message into the queue. The worker role picks up the message and as instructed, copies the records from Table storage back to SQL Azure. - Remove invoices from relational storage that are no longer needed

After the report is printed, any invoice that’s in SQL Azure that has the “ArchivedFlag” set is deleted. There’s already a copy in the archive so nothing else needs to be done.

How can costs and savings be calculated? Both SQL Azure and Azure Table storage are charged by the gigabyte. SQL Azure storage charges are calculated daily. So – if your database has a max capacity of 5gb for the day, you’re charged 5 * $9.99 / (average # days / month). If you can do your retrieval of archived data without expanding your database, then it costs nothing extra to do your “reports” under this scheme. (If you do need to expand your database to hold archived data temporarily, use ALTER DATABASE.) Savings may be calculated as the number of gb that get archived * ($9.99 – .15) and prorated to the number of days in the month.

A few comments about the source code:

I built this starting with a plain cloud project + 1 web role + 1 worker role. I added a data model and used Entity Framework for data access. The deletes were done with embedded SQL so that I didn’t have to read the records into memory that I wanted to delete. Some readers may be familiar with sparse columns in SQL Server. SQL Azure doesn’t support sparse columns at this point, so I elected to split out the InvoiceMetadata.