Live Streaming with Azure Media Services - Part 1

One of the questions I hear most often from customers and partners who are starting to adopt cloud services for media delivery concerns live streaming with Azure Media Services (AMS). Typical questions cover high availability and how to keep the same publishing URL for a long-running live stream, including when the encoder restarts.

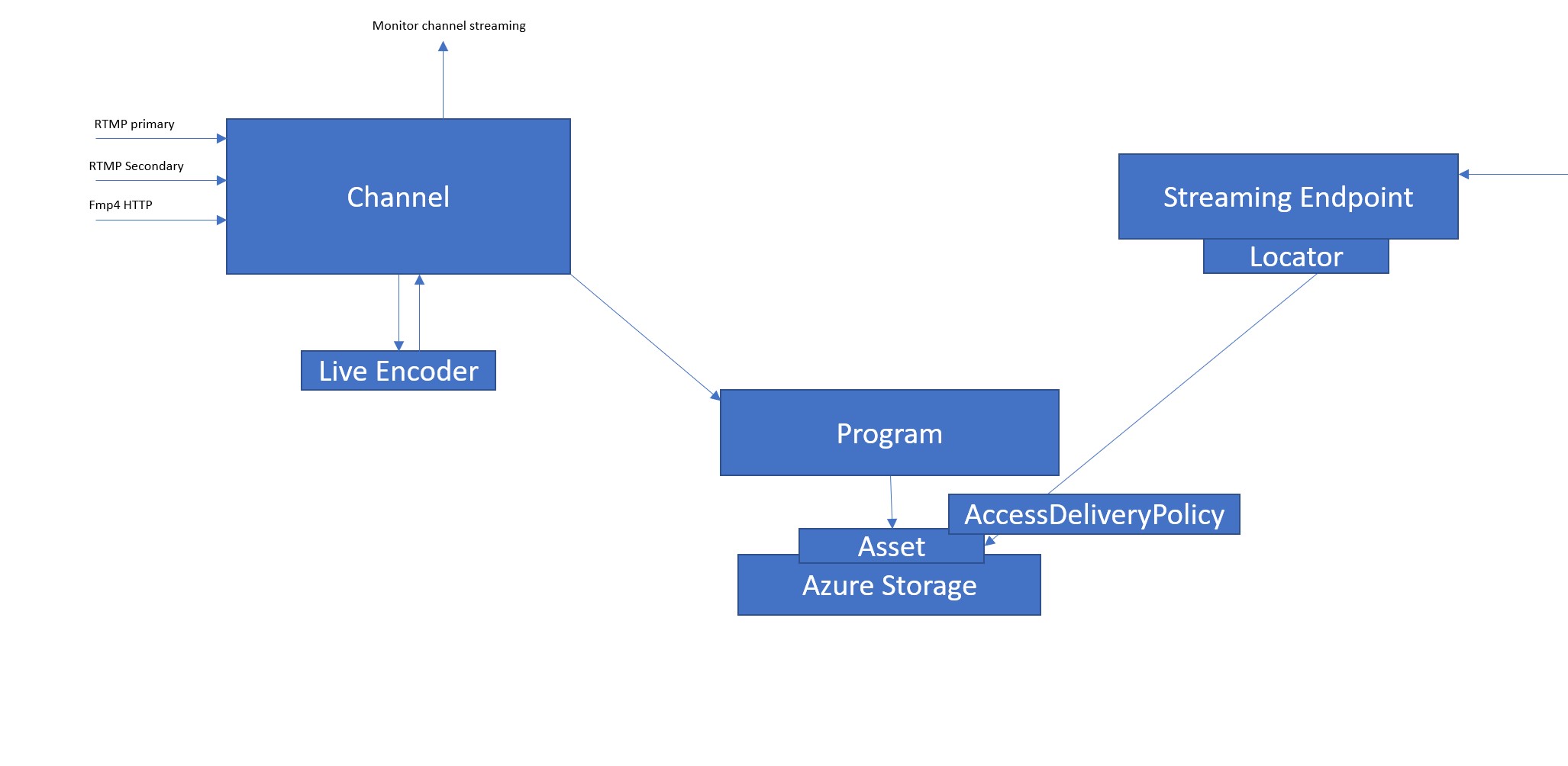

Before answering, it helps to describe the AMS architecture, so that we understand in more depth how live streaming works on this platform, and then map the concepts you need to implement onto that architecture. This part describes the AMS v2 APIs, which are currently in GA.

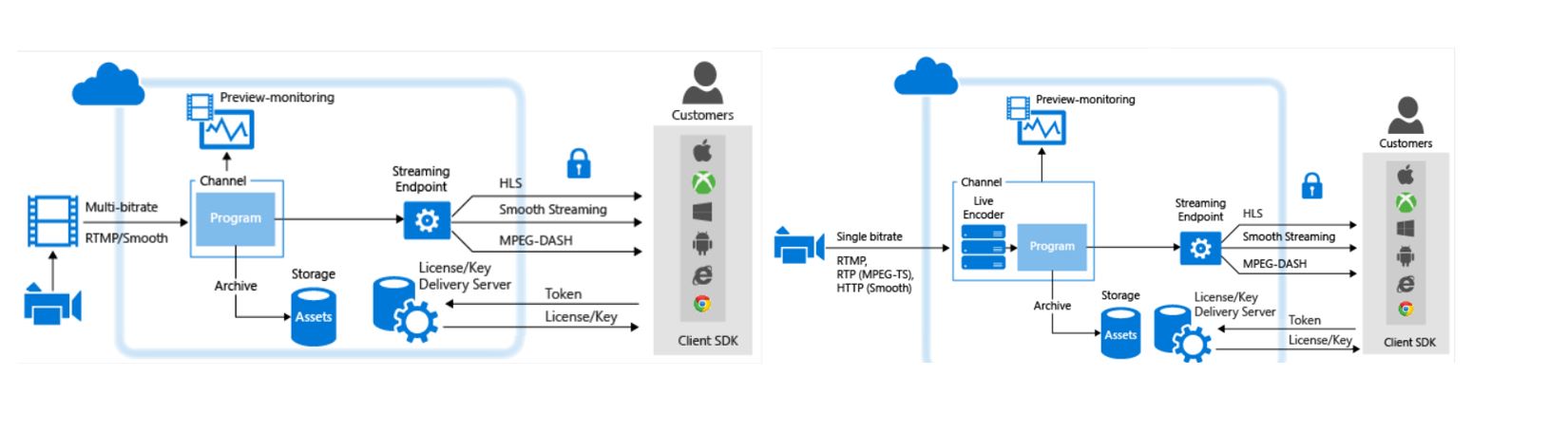

AMS is a flexible way to deliver premium live content. Today, premium content has to reach a large number of different devices in the consumer and enterprise space, which means supporting many formats and content protection technologies. With Dynamic Encryption and Dynamic Streaming, AMS offers an efficient way to deliver multiple formats and content protection systems starting from a single video source.

You can stream using MPEG-DASH, HLSv3, HLSv4 and Smooth Streaming, and apply the most relevant DRM systems (PlayReady, Widevine and FairPlay), which together cover the most widely used endpoints on the market today (PC, Mac, mobile phone, tablet, connected TV, etc.).

To push the video content to the platform you can use two main input protocols, RTMP and fMP4 over HTTP (Smooth Streaming), and you can choose between two workflows to send the content and stream it:

- Pass-through workflow, where you push a multi-bitrate input that contains all the quality levels you want to offer in your stream. With this workflow you can run 24-hour streaming.

- Live encoder workflow, where you push a single bitrate and a live encoder in AMS creates the multiple bitrate levels needed to stream the content to the client at different quality levels. In the current version this workflow is suggested for live streams that last no more than 8 hours.

The platform is managed through a dedicated set of REST APIs that you can use to create and manage the live channel services and integrate them with your workflow and applications. You can also use the Azure Portal as a front end, or the AMS Explorer tool, which also serves as a sample of how to use the API from C#.

In addition to the streaming services, AMS offers a preview monitoring feature and can ingest and record the content to offer it on demand, either during the live stream or after the live event. It also provides a set of APIs with a JavaScript application to clip and cut the content for repurposing (Azure Media Clipper /en-us/azure/media-services/media-services-azure-media-clipper-overview https://azuremediaclipper.azurewebsites.net/) and a customizable player (Azure Media Player https://ampdemo.azureedge.net/azuremediaplayer.html) that you can use in several web clients.

After this brief overview of the service, we can go into more depth on the architecture behind it.

The main components, leaving aside the DRM part that we will analyze in a later step, are:

- Channel

- Program

- Asset

- Access Policy

- Locator

- Streaming Endpoint

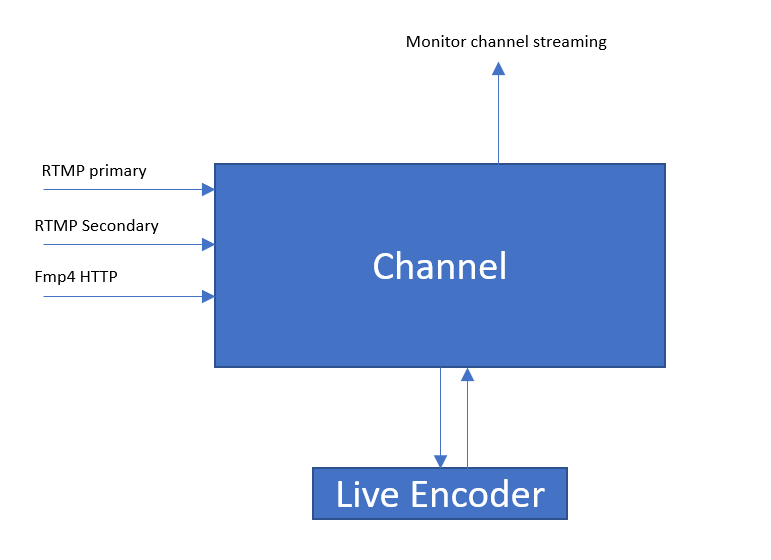

The Channel is the entry point for the video content. It receives the video signal that you want to stream and accepts it over a few input protocols. As mentioned, it can work in pass-through mode or use a live encoder. With pass-through you send multiple streams with different bitrates and resolutions, so that the stream has several quality levels and the client player can use adaptive streaming to select the best quality for the current network conditions.

With either pass-through or the live encoder you can use RTMP or fMP4. You have two RTMP inputs (primary and secondary), which let you implement highly available streaming by sending two sets of streams with aligned timecode. We will discuss how to implement this in a later part of the article.

The Channel also offers a preview endpoint to monitor the stream that the channel receives and prepares for delivery. Currently the preview is delivered with Smooth Streaming only, so it requires a player compatible with Smooth Streaming.

At this link /en-us/azure/media-services/media-services-recommended-encoders you can find a list of recommended encoders that you can use to push content to an Azure Media Services channel.

You have to choose between pass-through and live encoder when you create the channel.

You can create the Channel using the AMS REST API (https://rest.media.azure.net), the Azure Portal, the AMS Explorer sample application or one of the SDKs for AMS.

To test channel creation through the REST API you can also use Postman. The configuration required for this test is available at this link /en-us/azure/media-services/media-rest-apis-with-postman

A channel accepts different types of commands:

- Start: starts the channel and puts it in the Running state, in which it is ready to receive the video stream

- Stop: stops the channel and deallocates the resources connected to the live channel without destroying the channel configuration

- Reset: resets the channel input when you need to start a new incoming stream

Possible state values include:

- Stopped. This is the initial state of the Channel after its creation. In this state, the Channel properties can be updated but streaming is not allowed.

- Starting. Channel is being started. No updates or streaming is allowed during this state. If an error occurs, the Channel returns to the Stopped state.

- Running. The Channel is capable of processing live streams.

- Stopping. The channel is being stopped. No updates or streaming is allowed during this state.

- Deleting. The Channel is being deleted. No updates or streaming is allowed during this state.
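The state list above can be captured in a few lines of client-side code. The following is only a sketch: `ChannelState`, `can_update` and `can_stream` are hypothetical helper names, not part of any AMS SDK, and the rules encode only what the list states. The list does not say whether properties can be updated while Running, so the sketch conservatively allows updates only in Stopped.

```python
from enum import Enum


class ChannelState(Enum):
    """Channel states as listed in the text (values match the state names)."""
    STOPPED = "Stopped"
    STARTING = "Starting"
    RUNNING = "Running"
    STOPPING = "Stopping"
    DELETING = "Deleting"


def can_update(state: ChannelState) -> bool:
    # Assumption: only Stopped is documented above as allowing property updates.
    return state is ChannelState.STOPPED


def can_stream(state: ChannelState) -> bool:
    # Only a Running channel is capable of processing live streams.
    return state is ChannelState.RUNNING


if __name__ == "__main__":
    for s in ChannelState:
        print(f"{s.value:9} update={can_update(s)} stream={can_stream(s)}")
```

A helper like this is mainly useful to fail fast in your own tooling before sending an update or pointing an encoder at a channel that is not ready.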

To reset, stop or delete a channel, it must not be connected to a running Program. The Program entity is described later in this article.

Be aware of the billing impact of a live channel: leaving a channel in the "Running" state incurs billing charges. It is recommended that you stop your running channels as soon as your live streaming event is complete, to avoid extra hourly charges.

A Channel can be created with an HTTP POST request that specifies the property values and the authentication token. You obtain the token from an AAD login for a user or service principal that has permission to manage live channels in the AMS instance of the subscription you are using.

POST https://<accountname>.restv2.<location>.media.azure.net/api/Channels HTTP/1.1

DataServiceVersion: 3.0;NetFx

MaxDataServiceVersion: 3.0;NetFx

Accept: application/json;odata=minimalmetadata

Accept-Charset: UTF-8

x-ms-version: 2.11

Content-Type: application/json;odata=minimalmetadata

Host: <host URI>

User-Agent: Microsoft ADO.NET Data Services

Authorization: Bearer <token value>

{

"Id":null,

"Name":"testchannel001",

"Description":"",

"Created":"0001-01-01T00:00:00",

"LastModified":"0001-01-01T00:00:00",

"State":null,

"Input":

{

"KeyFrameInterval":null,

"StreamingProtocol":"FragmentedMP4",

"AccessControl":

{

"IP":

{

"Allow":[{"Name":"testName1","Address":"1.1.1.1","SubnetPrefixLength":24}]

}

},

"Endpoints":[]

},

"Preview":

{

"AccessControl":

{

"IP":

{

"Allow":[{"Name":"testName1","Address":"1.1.1.1","SubnetPrefixLength":24}]

}

},

"Endpoints":[]

},

"Output":

{

"Hls":

{

"FragmentsPerSegment":1

}

},

"CrossSiteAccessPolicies":

{

"ClientAccessPolicy":null,

"CrossDomainPolicy":null

}

}

As you can see from the body of the previous request, you have to specify the input protocol that you want to use, the IP addresses that are authorized to push content to the channel input and to access the preview, the key frame interval for the video fragments (optional), the number of HLS fragments that the live stream uses to build an HLS segment, and the cross-site access policies used to manage cross-domain requests from clients.
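As a rough illustration, the request above could be assembled programmatically as follows. This is a minimal sketch that only builds the URL, headers and JSON body and does not send anything; the function name `build_channel_request` and its parameters are assumptions made for this example, while the field names and header values mirror the sample request.

```python
import json


def build_channel_request(account, location, token, name, allow_ip,
                          prefix_len=24, protocol="FragmentedMP4",
                          fragments_per_segment=1):
    """Return (url, headers, body) mirroring the sample Channel creation request."""
    url = f"https://{account}.restv2.{location}.media.azure.net/api/Channels"
    headers = {
        "DataServiceVersion": "3.0;NetFx",
        "MaxDataServiceVersion": "3.0;NetFx",
        "Accept": "application/json;odata=minimalmetadata",
        "Accept-Charset": "UTF-8",
        "x-ms-version": "2.11",
        "Content-Type": "application/json;odata=minimalmetadata",
        "Authorization": f"Bearer {token}",
    }

    def allow_list():
        # The same IP range is used for ingest and preview, as in the sample.
        return {"IP": {"Allow": [{"Name": "testName1",
                                  "Address": allow_ip,
                                  "SubnetPrefixLength": prefix_len}]}}

    body = {
        "Id": None,
        "Name": name,
        "Description": "",
        "Created": "0001-01-01T00:00:00",
        "LastModified": "0001-01-01T00:00:00",
        "State": None,
        "Input": {"KeyFrameInterval": None,
                  "StreamingProtocol": protocol,
                  "AccessControl": allow_list(),
                  "Endpoints": []},
        "Preview": {"AccessControl": allow_list(), "Endpoints": []},
        "Output": {"Hls": {"FragmentsPerSegment": fragments_per_segment}},
        "CrossSiteAccessPolicies": {"ClientAccessPolicy": None,
                                    "CrossDomainPolicy": None},
    }
    return url, headers, json.dumps(body)


if __name__ == "__main__":
    url, headers, body = build_channel_request(
        "myaccount", "westeurope", "<token value>", "testchannel001", "1.1.1.1")
    print(url)
    print(body)
```

The returned triple can be handed to any HTTP client. Keeping the construction separate from the network call makes the payload easy to inspect and test.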

If the request is successful you receive HTTP 202 Accepted and the request is processed asynchronously. You can check the status of the channel to see when creation completes. As described above, a newly created channel is in the Stopped state.

To start the Channel once creation is complete, you can send a POST like the one below:

POST https://<accountname>.restv2.<location>.media.azure.net/api/Channels('nb:chid:UUID:2c30f424-ab90-40c6-ba41-52a993e9d393')/Start HTTP/1.1

DataServiceVersion: 3.0;NetFx

MaxDataServiceVersion: 3.0;NetFx

Accept: application/json;odata=minimalmetadata

Accept-Charset: UTF-8

x-ms-version: 2.11

Content-Type: application/json;odata=minimalmetadata

Host: <host URI>

User-Agent: Microsoft ADO.NET Data Services

Authorization: Bearer <token value>

As in the previous case, if the request is successful you receive HTTP 202 Accepted and the request is processed asynchronously. You can check the state of the channel to see when it moves from Starting to Running:

GET https://<accountname>.restv2.<location>.media.azure.net/api/Channels('nb:chid:UUID:2c30f424-ab90-40c6-ba41-52a993e9d393')/State HTTP/1.1

DataServiceVersion: 3.0;NetFx

MaxDataServiceVersion: 3.0;NetFx

Accept: application/json;odata=minimalmetadata

Accept-Charset: UTF-8

x-ms-version: 2.11

Content-Type: application/json;odata=minimalmetadata

Host: <host URI>

User-Agent: Microsoft ADO.NET Data Services

Authorization: Bearer <token value>
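Checking the state repeatedly until the channel reaches Running can be sketched as a small polling loop. This is only an illustration under stated assumptions: `get_state` is a hypothetical callable that you supply (for example a function that issues the state request above and returns the state name as a string), and the timeout and interval defaults are arbitrary. The loop uses the rule described earlier that a channel which fails to start returns to Stopped.

```python
import time


def wait_for_channel_state(get_state, target="Running", timeout_s=600.0,
                           interval_s=5.0, sleep=time.sleep,
                           clock=time.monotonic):
    """Poll get_state() until it returns `target`, or raise on failure/timeout."""
    deadline = clock() + timeout_s
    seen_starting = False
    while True:
        state = get_state()
        if state == target:
            return state
        if state == "Starting":
            seen_starting = True
        elif state == "Stopped" and target == "Running" and seen_starting:
            # A channel that was Starting and is Stopped again failed to start.
            raise RuntimeError("channel returned to Stopped while starting")
        if clock() >= deadline:
            raise TimeoutError(f"channel still in state {state!r}")
        sleep(interval_s)


if __name__ == "__main__":
    # Simulated sequence of states instead of real HTTP calls.
    simulated = iter(["Stopped", "Starting", "Starting", "Running"])
    print(wait_for_channel_state(lambda: next(simulated), sleep=lambda s: None))
```

Passing `sleep` and `clock` as parameters keeps the loop testable without real delays.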

All the operations available on a Channel are described in the REST API documentation here https://docs.microsoft.com/en-us/rest/api/media/operations/channel

If you prefer to create a channel from the portal or AMS Explorer, you can enter the same information through the user interface.

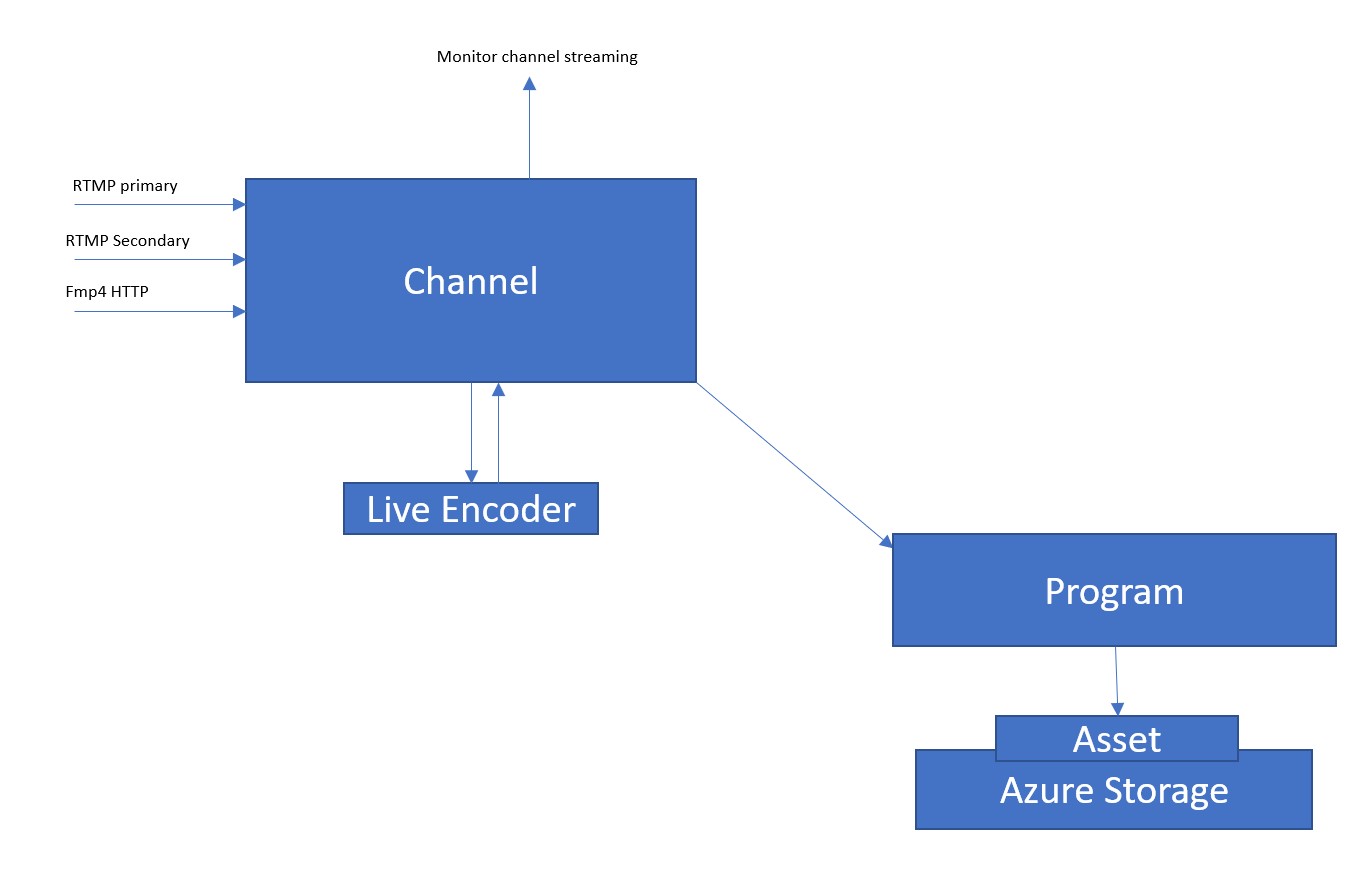

A Channel is associated with one or more Programs. A Program is the entity that controls the publishing of the stream and the storage of the segments ingested from the live stream. Channels manage Programs. The relationship between channel and program is similar to traditional media, where a channel carries a constant stream of content and a program is scoped to a timed event on that channel. You can have multiple Programs for every Channel.

Programs can run concurrently. This allows you to publish and archive different parts of the event as needed.

For every Program an Asset is created and stored in Azure Storage. An Asset is an entity that represents a group of files and manifests that contain your video content, in this case the content ingested from the channel by the configured Program. When you create a Program you have to specify the Channel it is connected to and the AssetId of the Asset created for the Program. In the Program configuration you can specify how long to retain the recorded content by setting the ArchiveWindowLength property (minimum 5 minutes, maximum 25 hours). Clients can seek through the archived content for the specified length of time. If the Program runs longer than the specified ArchiveWindowLength, the older content is removed. For example, if you set a 5-minute archive window, only the last five minutes remain in the Asset connected to the Program. That content is available to the client during the live stream and, once the Program is stopped, on demand. If you delete a Program, you can choose to keep the Asset in storage and reuse it to republish on demand or to create sub-clips.
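The sample Program request in this article passes the archive window as an ISO 8601 duration ("PT1H"). A small helper can validate the range described above and produce that format. This is a sketch: `archive_window_length` is a hypothetical helper name, and the assumption that the service accepts any ISO 8601 duration made of hours, minutes and seconds (such as "PT1H30M") is mine rather than something stated in this article.

```python
from datetime import timedelta

MIN_WINDOW = timedelta(minutes=5)   # minimum stated in the text
MAX_WINDOW = timedelta(hours=25)    # maximum stated in the text


def archive_window_length(window: timedelta) -> str:
    """Format a timedelta as an ISO 8601 duration, enforcing the 5 min..25 h range."""
    if not (MIN_WINDOW <= window <= MAX_WINDOW):
        raise ValueError("ArchiveWindowLength must be between 5 minutes and 25 hours")
    total_seconds = int(window.total_seconds())  # fractional seconds are dropped
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    result = "PT"
    if hours:
        result += f"{hours}H"
    if minutes:
        result += f"{minutes}M"
    if seconds:
        result += f"{seconds}S"
    return result


if __name__ == "__main__":
    print(archive_window_length(timedelta(hours=1)))
    print(archive_window_length(timedelta(hours=1, minutes=30)))
```

Validating on the client side gives a clearer error than waiting for the service to reject an out-of-range value.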

Programs can also be managed with the AMS REST API, the Azure Portal, the AMS Explorer sample application or one of the SDKs for AMS. More information about how to use the REST API to manage Programs is available here /en-us/rest/api/media/operations/program

Below is a sample REST API call to create a Program:

POST https://<accountname>.restv2.<location>.media.azure.net/api/Programs HTTP/1.1

DataServiceVersion: 3.0;NetFx

MaxDataServiceVersion: 3.0;NetFx

Accept: application/json;odata=minimalmetadata

Accept-Charset: UTF-8

x-ms-version: 2.11

Content-Type: application/json;odata=minimalmetadata

Host: <host URI>

User-Agent: Microsoft ADO.NET Data Services

Authorization: Bearer <token value>

{"Id":null,"Name":"testprogram001","Description":"","Created":"0001-01-01T00:00:00","LastModified":"0001-01-01T00:00:00","ChannelId":"nb:chid:UUID:83bb19de-7abf-4907-9578-abe90adfbabe","AssetId":"nb:cid:UUID:bc495364-5357-42a1-9a9d-be54689cfae2","ArchiveWindowLength":"PT1H","State":null,"ManifestName":null}

If successful, a 202 Accepted status code is returned along with a representation of the created entity in the response body.

As you can see, to create a Program you need to pass a name for the Program, the ChannelId of the Channel from which the Program ingests the video input, and the AssetId of the Asset that stores the ingested content.

A Program can be in one of the following states:

- Running: the Program ingests the signal from the channel and stores it in the Asset. If the Program is published with a Locator, in this state the live content is available through the Locator, together with the content already ingested in the archive window

- Starting: the Program is starting

- Stopped: the Program is stopped and ingest from the channel has stopped. In this state, if the Program is published with a Locator, the ingested content can be played on demand and the live stream is stopped

- Stopping: the Program is stopping

As mentioned before, an Asset represents a set of files that contain the video that the Program ingested from the channel. You can manage Assets with the dedicated REST API or, as usual, with the Azure Portal, the AMS Explorer sample application or one of the SDKs for AMS. More information about how to use the REST API to manage Assets is available here https://docs.microsoft.com/en-us/rest/api/media/operations/asset .

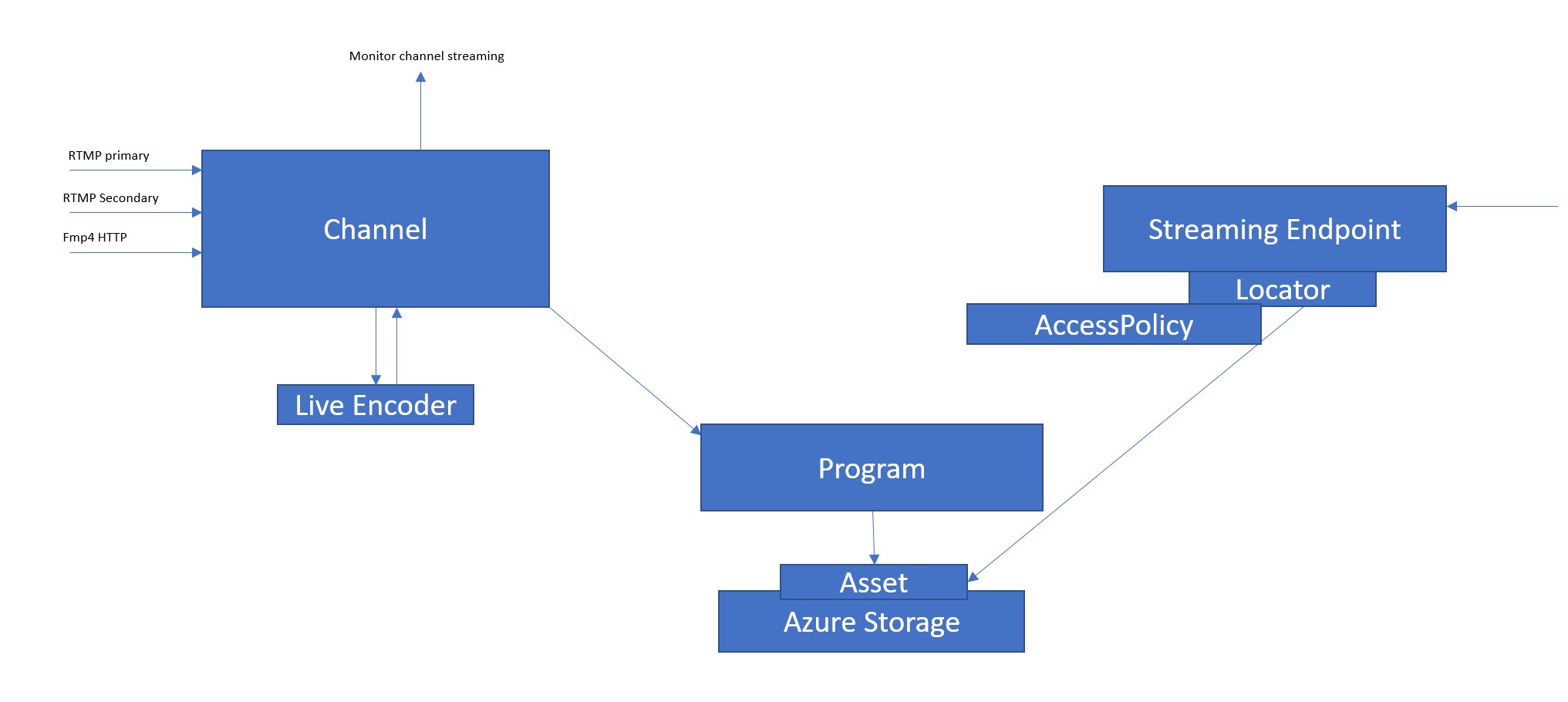

Another important concept in the Live Streaming picture in AMS is the Locator, which you can associate with an Asset connected to a Program. The Locator is the entity that represents the publishing information: it makes the content accessible at a specific URL served by a Streaming Endpoint in AMS.

More information about how to use the REST API to manage Locators is available here /en-us/rest/api/media/operations/locator . There are different types of Locator in Azure Media Services, but for live streaming, when you create a Locator for an Asset connected to a Program, you have to create a streaming Locator.

Before creating a Locator you have to define an AccessPolicy, which specifies how long the Locator remains available.

More information about how to use the REST API to manage Access Policies is available here /en-us/rest/api/media/operations/accesspolicy

To create an AccessPolicy you can use a POST like the following example:

POST https://<accountname>.restv2.<location>.media.azure.net/api/AccessPolicies HTTP/1.1

Content-Type: application/json;odata=verbose

Accept: application/json;odata=verbose

DataServiceVersion: 3.0

MaxDataServiceVersion: 3.0

x-ms-version: 2.11

Authorization: Bearer <token value>

Host: media.windows.net

Content-Length: 67

Expect: 100-continue

{

"Name": "StreamingAccessPolicy-test001",

"DurationInMinutes" : "525600",

"Permissions" : 1

}

The Permissions value specifies the access rights the client has when interacting with the Asset. Valid values are:

- None = 0

- Read = 1

- Write = 2

- Delete = 4

- List = 8
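The permission values above are powers of two, which suggests they can be modelled as bit flags on the client side. The sketch below does that and builds the AccessPolicy body from the earlier sample. The names `AccessPermissions` and `build_access_policy` are hypothetical, and treating the values as combinable flags is an assumption based only on the values listed. The sample itself uses the single value 1 (Read).

```python
import json
from enum import IntFlag


class AccessPermissions(IntFlag):
    """Permission values as listed in the text."""
    NONE = 0
    READ = 1
    WRITE = 2
    DELETE = 4
    LIST = 8


def build_access_policy(name: str, duration_minutes: int,
                        permissions: AccessPermissions) -> str:
    """Return the JSON body for an AccessPolicy, shaped like the sample request."""
    return json.dumps({
        "Name": name,
        # The sample request sends the duration as a string, so this does too.
        "DurationInMinutes": str(duration_minutes),
        "Permissions": int(permissions),
    })


if __name__ == "__main__":
    # 525600 minutes is one year, as in the sample request.
    print(build_access_policy("StreamingAccessPolicy-test001", 525600,
                              AccessPermissions.READ))
```

For a streaming Locator, the sample in this article uses Read only.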

To create a Locator you can use a POST like the following example:

POST https://<accountname>.restv2.<location>.media.azure.net/api/Locators HTTP/1.1

Content-Type: application/json;odata=verbose

Accept: application/json;odata=verbose

DataServiceVersion: 3.0

MaxDataServiceVersion: 3.0

x-ms-version: 2.11

Authorization: Bearer <token>

Host: media.windows.net

Content-Length: 182

Expect: 100-continue

{ "AssetId" : "nb:cid:UUID:d062e5ef-e496-4f21-87e7-17d210628b7c", "AccessPolicyId": "AccessPolicyId","StartTime" : "2014-05-17T16:45:53", "Type":2}

The AssetId is the Id returned when you created the Asset connected to the Program, and the AccessPolicyId is the value returned by the AccessPolicy creation.

Based on this configuration, the streaming endpoint starts to respond to the requests it receives at the Locator URL and dynamically packages the stream into HLSv3, HLSv4, MPEG-DASH and Smooth Streaming.
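To make the dynamic packaging concrete, the sketch below derives the per-format playback URLs from a Locator path. The `(format=...)` suffixes follow the URL convention that, to my knowledge, AMS v2 documents for dynamic packaging, so check them against the current documentation. The function name, the host name and the manifest name used in the example are hypothetical.

```python
def streaming_urls(locator_path: str, manifest_name: str) -> dict:
    """Build one playback URL per streaming format from a Locator path."""
    base = f"{locator_path.rstrip('/')}/{manifest_name}.ism/manifest"
    return {
        "smooth": base,                              # Smooth Streaming (default)
        "hls_v4": base + "(format=m3u8-aapl)",       # HLSv4
        "hls_v3": base + "(format=m3u8-aapl-v3)",    # HLSv3
        "dash": base + "(format=mpd-time-csf)",      # MPEG-DASH
    }


if __name__ == "__main__":
    # Hypothetical Locator path and manifest name, for illustration only.
    urls = streaming_urls(
        "https://myaccount.streaming.mediaservices.windows.net/"
        "00000000-0000-0000-0000-000000000000/",
        "testprogram001")
    for fmt, url in urls.items():
        print(fmt, url)
```

All four URLs point to the same Asset. Only the suffix changes, which is what lets a single ingested stream serve different player platforms.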

As mentioned before, when a Program is in the Running state and the Channel is receiving video content, the streaming Locator publishes, in the different formats, a manifest that covers the live stream and the archive window. When the Program is stopped, the manifest published in the different formats contains the last archive window ingested, now offered on demand, and because the live stream has stopped the manifest is cached by the streaming endpoint. This is an important point to remember when we discuss the URL of the live stream.

Basically, a Program is designed to ingest content and republish it on demand when you stop the Program. Imagine the live stream of an event. By starting a Program at the beginning of the event you ingest and record the event stream and publish it live with an archive window. When the event is complete you stop the Program and, if you keep the Locator active, the event automatically remains published on demand at the same URL: the archive window is still available, the live stream is stopped, the manifest now describes on-demand content, and clients that point to the same URL can watch the recorded content on demand. If you remove the Locator, the content is no longer published. You can then delete the Program and decide whether to keep the ingested Asset in storage for future use.

The Streaming Endpoint entity represents a streaming service that can deliver content directly to a client player application, or to a Content Delivery Network (CDN) for further distribution. As with the other entities in the architecture, you can manage Streaming Endpoints with the dedicated REST API or, as usual, with the Azure Portal, the AMS Explorer sample application or one of the SDKs for AMS. More information about how to use the REST API to manage Streaming Endpoints is available here https://docs.microsoft.com/en-us/rest/api/media/operations/streamingendpoint.

Streaming delivery can use an AssetDeliveryPolicy, defined at the Asset level, to manage and control access to the content. With an AssetDeliveryPolicy you can also configure the protection of the stream and apply DRM to it.

Based on the AssetDeliveryPolicy configuration, the platform applies encryption with the configured key, together with the information about the DRM applied and the license server URL.

Based on the Live Streaming architecture described above, the steps you have to plan and configure to put a live stream to work are:

- Create and configure the Streaming Endpoint, and the CDN when required, that will be used to deliver the content to the client

- Create the Channel with the configuration you want to use for your live stream (pass-through, live encoder, key frame interval, etc.) and start it when you are ready to send the signal.

- Create an Asset and connect it to a Program with a specific archive window, then create an AccessPolicy and a Locator. If encryption and DRM are required, define an AssetDeliveryPolicy for the Asset connected to the Program before creating the Locator. When you are ready, start the Program and the live stream.

In this first post we described the concepts behind the Live Streaming architecture in Azure Media Services. In the next part we will analyze the architecture in more depth, create and configure a live Channel, and then look at how to manage high availability, how to control the Locator URL, and what to watch out for in doing so.