Multi-language audio with IIS Smooth Streaming: An example from Radiovaticana Live Streaming

One of the many useful features of IIS Media Services 4.0 and Smooth Streaming is the ability to stream live and on-demand content with multiple audio tracks in different languages, selectable by the viewer. A recent example is the series of events that Vatican Radio (https://www.radiovaticana.org/) delivered during the last month, such as the Christmas night liturgical celebrations presided over by the Holy Father and the World Day of Peace on January 1st, each with multiple audio tracks (Natural Audio, Italian, English, French, German, Spanish, Portuguese, and Arabic). There is another event with the Pope on January 9 at 09:30 CET. The player used for these events was based on the Silverlight Media Framework (SMF) and lets the viewer select which audio track to listen to. Below is an image of the player with the audio track selector shown:

Leveraging the integrated media platform capabilities of IIS Media Services (IIS MS) 4.0, the Vatican was also able to deliver the same events to Apple devices, using the same encoders and media server infrastructure. Note that on these devices only one audio track is available, because they do not natively support multiple audio tracks.

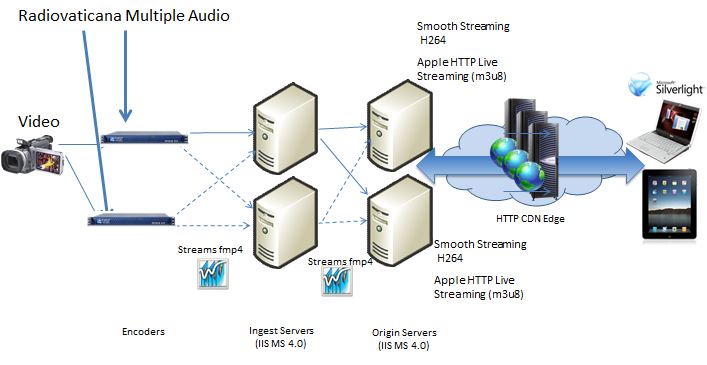

The architecture implemented for these events worked as follows:

The Digital Rapids encoders received as input eight audio signals from the Vatican Radio channels and one video signal from the Vatican TV center. They generated eight audio tracks in sync with six video tracks at different bit rates (quality levels) and pushed all of these tracks to a publishing point on an IIS MS 4.0 ingest server. A subset of the video tracks and a single audio track went to a second publishing point, for Apple devices, on the same ingest server. The ingest server pushed the content of both publishing points to the origin server.
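To make the track routing concrete, here is a minimal Python sketch of how the encoder output could be split between the two publishing points. The post does not list the six video bit rates or say which audio track went to the Apple publishing point, so the bit-rate values, the bit-rate cap, the chosen track, and the names `PublishingPointFeed`, `smooth_feed`, and `apple_feed` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical model of the encoder output described above. The eight audio
# tracks come from the post; the six video bit rates are illustrative only.
AUDIO_TRACKS = ("natural", "italian", "english", "french",
                "german", "spanish", "portuguese", "arabic")
VIDEO_BITRATES = (3_000_000, 2_000_000, 1_400_000, 900_000, 600_000, 350_000)


@dataclass(frozen=True)
class PublishingPointFeed:
    name: str
    audio: Tuple[str, ...]
    video: Tuple[int, ...]


def smooth_feed() -> PublishingPointFeed:
    # The Silverlight publishing point receives every audio and video track.
    return PublishingPointFeed("silverlight", AUDIO_TRACKS, VIDEO_BITRATES)


def apple_feed(audio_track: str, max_video_bitrate: int) -> PublishingPointFeed:
    # The Apple publishing point receives one audio track and a subset of the
    # video tracks; here the subset is chosen by an assumed bit-rate cap.
    if audio_track not in AUDIO_TRACKS:
        raise ValueError(f"unknown audio track: {audio_track}")
    video = tuple(b for b in VIDEO_BITRATES if b <= max_video_bitrate)
    return PublishingPointFeed("apple", (audio_track,), video)
```

For example, `apple_feed("italian", 1_400_000)` keeps a single audio track and the four lowest video bit rates, while `smooth_feed()` carries all eight audio tracks and all six video tracks.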

The first publishing point on the IIS MS origin server provided Silverlight media players with a client manifest (.ismc file) listing all the available tracks, as well as the content itself. The second publishing point on the origin server had the Apple Devices Adaptive Streaming feature enabled. This let the origin server trans-mux on the fly (repackage from one file format to another) the fragmented MP4 streams used by Smooth Streaming into the Apple HTTP Live Streaming (HLS) format supported by iPhone and iPad, and publish an HLS-compatible client manifest (.m3u8 file). An HTTP CDN (content delivery network) pulled the content from the origin publishing points and distributed it to viewers on their Silverlight or iPhone/iPad clients.
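The two publishing points expose different manifests to clients. The following sketch builds the manifest URL a player would request from each one, assuming the usual IIS Media Services URL forms for live publishing points (`<pubpoint>.isml/Manifest` for Smooth Streaming and `<pubpoint>.isml/manifest(format=m3u8-aapl).m3u8` for Apple devices). The host and publishing point names are made up for illustration, so verify the exact paths against your own server configuration.

```python
def smooth_manifest_url(base: str, pubpoint: str) -> str:
    # Silverlight clients fetch the Smooth Streaming client manifest.
    return f"{base.rstrip('/')}/{pubpoint}.isml/Manifest"


def hls_playlist_url(base: str, pubpoint: str) -> str:
    # Assumed URL form for the trans-muxed HLS playlist served to Apple devices.
    return f"{base.rstrip('/')}/{pubpoint}.isml/manifest(format=m3u8-aapl).m3u8"
```

With a hypothetical CDN host, `smooth_manifest_url("http://media.example.com", "vatican_live")` yields `http://media.example.com/vatican_live.isml/Manifest`, and the HLS variant differs only in the final path segment, because the same fragmented MP4 content backs both.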

The Silverlight client player, based on SMF, read all the tracks published in the manifest and transparently adapted the video quality to the bandwidth available and the video rendering capabilities of each client. The player also let the viewer choose the audio track.
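The adaptation idea can be illustrated with a simplified heuristic: pick the highest quality level whose bit rate fits within a fraction of the measured bandwidth and whose width fits the display. This is a hedged sketch, not SMF's actual algorithm, which is more involved; the names `QualityLevel` and `choose_quality_level` and the 0.8 safety factor are assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class QualityLevel:
    bitrate: int     # bits per second, like the client manifest's Bitrate attribute
    max_width: int
    max_height: int


def choose_quality_level(levels: Sequence[QualityLevel],
                         bandwidth_bps: float,
                         display_width: int,
                         safety_factor: float = 0.8) -> QualityLevel:
    """Pick the highest bit rate that fits the bandwidth budget and the display.

    Simplified illustration only; real players also weigh buffer state and
    rendering performance.
    """
    budget = bandwidth_bps * safety_factor
    candidates = [q for q in levels
                  if q.bitrate <= budget and q.max_width <= display_width]
    if not candidates:
        # Nothing fits: fall back to the lowest bit rate so playback continues.
        return min(levels, key=lambda q: q.bitrate)
    return max(candidates, key=lambda q: q.bitrate)
```

Using the two video quality levels from the client manifest example in this post (330 kbps at 480x272 and 230 kbps at 320x180), a 500 kbps connection selects 330 kbps, while a 300 kbps connection drops to 230 kbps. The audio track, by contrast, is the viewer's explicit choice.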

If you are interested in more details about Smooth Streaming architectures for Live Streaming, you can start with this blog post.

As I mentioned before, the multiple audio tracks feature is available not only for live streaming but also for on-demand scenarios. On demand you have even more flexibility, because you can add audio tracks to existing assets at any time without re-encoding them. This is possible because Smooth Streaming uses two manifests: a server manifest, which describes to the streaming server the available tracks and the files that contain them, and a client manifest, which describes the available tracks to the client.
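Because a client only asks for a track name, a bit rate, and a start time, the server can resolve each request through its manifest without the client knowing which file holds the track. The sketch below models that lookup conceptually as a table keyed by (track name, bit rate), shaped after the server manifest example later in this post. `TrackSource`, `TABLE`, `resolve_fragment`, and the `audio_fra` entry (a French track in its own `.isma` file) are hypothetical.

```python
import re
from typing import Dict, NamedTuple, Tuple


class TrackSource(NamedTuple):
    src: str        # PIFF file (.ismv or .isma) that holds the track
    track_id: int   # track ID inside that file


# Hypothetical lookup: (name used in fragment URLs, bit rate) -> source file.
TABLE: Dict[Tuple[str, int], TrackSource] = {
    ("video", 330000): TrackSource("big_buck_bunny_1080p_surround_330.ismv", 2),
    ("video", 230000): TrackSource("big_buck_bunny_1080p_surround_230.ismv", 2),
    ("audio", 128000): TrackSource("big_buck_bunny_1080p_surround_6000.ismv", 1),
    # Hypothetical language added later in a separate audio-only file.
    ("audio_fra", 128000): TrackSource("big_buck_bunny_fra.isma", 1),
}

# Matches e.g. "QualityLevels(330000)/Fragments(video=19999968)".
FRAGMENT_RE = re.compile(r"QualityLevels\((\d+)\)/Fragments\((\w+)=(\d+)\)$")


def resolve_fragment(path: str, table=TABLE) -> Tuple[str, int, int]:
    """Map a fragment request path to (file, track ID, start time)."""
    m = FRAGMENT_RE.search(path)
    if m is None:
        raise ValueError(f"not a fragment request: {path}")
    bitrate, name, start = int(m.group(1)), m.group(2), int(m.group(3))
    source = table[(name, bitrate)]
    return source.src, source.track_id, start
```

Adding a language then amounts to adding one row that points at a new file; the existing `.ismv` files are never touched.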

If you are looking for more information about Smooth Streaming files, you can read this post and the Technical Overview of Smooth Streaming.

Smooth Streaming is built on top of technologies that Microsoft has released via the Community Promise Initiative, including the Protected Interoperable File Format (PIFF) and the IIS Smooth Streaming Transport Protocol (SSTP). The Protected Interoperable File Format (PIFF) Specification defines a standard file format for multimedia content delivery and playback. It includes the audio-video container, stream encryption, and metadata to support content delivery for multiple bit rate adaptive streaming, optionally using a standard encryption scheme that can support multiple digital rights management (DRM) systems. The IIS Smooth Streaming Transport Protocol Specification describes how live and on-demand Smooth Streaming audio/video content is distributed and cached over an HTTP network. It enables third parties to build their own client implementations that interoperate with IIS Media Services.
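PIFF builds on the ISO base media file format used by MP4, in which a file is a sequence of boxes, each starting with a 32-bit big-endian size and a four-character type. A fragmented file such as an `.ismv` has `ftyp` and `moov` boxes followed by repeated `moof`/`mdat` fragment pairs. The sketch below walks the top-level boxes of a byte buffer; it is a generic ISO BMFF reader, not a PIFF validator, and `iter_top_level_boxes` is a hypothetical helper name.

```python
import struct
from typing import Iterator, Tuple


def iter_top_level_boxes(data: bytes) -> Iterator[Tuple[str, int, int]]:
    """Yield (box type, offset, size) for each top-level ISO BMFF box."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # A 64-bit "largesize" field follows the type.
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # Size 0 means the box runs to the end of the data.
            size = len(data) - offset
        if size < header:
            raise ValueError(f"invalid box size {size} at offset {offset}")
        yield box_type.decode("ascii", "replace"), offset, size
        offset += size
```

Run against the bytes of an `.ismv` file, this would list `ftyp`, `moov`, and then alternating `moof` and `mdat` boxes, one pair per fragment.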

At any time you can encode a new PIFF asset, creating a fragmented MP4 file (.ismv, or .isma for an audio-only file) that contains the additional audio tracks, and then update the manifests to describe the new tracks to the server and the client. Ideally, you would synchronize the new audio track with the existing video track.
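All timestamps in the example manifests in this post use a `timeScale` of 10,000,000 ticks per second, so the client manifest's `Duration="5964800000"` corresponds to 596.48 seconds. A coarse sanity check before publishing a new audio track is to compare its total chunk duration with the video's. The sketch below does that; the function names and the 0.1 s tolerance are assumptions, and matching totals do not prove the tracks are aligned (a constant offset would still pass).

```python
from typing import Sequence

TIMESCALE = 10_000_000  # ticks per second, the timeScale used in the example manifests


def ticks_to_seconds(ticks: int, timescale: int = TIMESCALE) -> float:
    """Convert manifest ticks to seconds."""
    return ticks / timescale


def durations_match(video_chunks: Sequence[int], audio_chunks: Sequence[int],
                    tolerance_s: float = 0.1, timescale: int = TIMESCALE) -> bool:
    """Coarse check that a new audio track spans the same time as the video.

    Compares only total durations; it cannot detect a constant offset.
    """
    video_s = ticks_to_seconds(sum(video_chunks), timescale)
    audio_s = ticks_to_seconds(sum(audio_chunks), timescale)
    return abs(video_s - audio_s) <= tolerance_s
```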

Here is an example of a server manifest (.ism extension) with two video tracks and one audio track:

```xml
<smil xmlns="http://www.w3.org/2001/SMIL20/Language">
  <head>
    <meta
      name="clientManifestRelativePath"
      content="big_buck_bunny_1080p_surround.ismc" />
    <metadata id="meta-rdf">
      <!-- omitted -->
    </metadata>
  </head>
  <body>
    <switch>
      <video
        src="big_buck_bunny_1080p_surround_330.ismv"
        systemBitrate="330000">
        <param name="trackID" value="2" valuetype="data" />
        <param name="trackName" value="video" valuetype="data" />
        <param name="timeScale" value="10000000" valuetype="data" />
      </video>
      <video
        src="big_buck_bunny_1080p_surround_230.ismv"
        systemBitrate="230000">
        <param name="trackID" value="2" valuetype="data" />
        <param name="trackName" value="video" valuetype="data" />
        <param name="timeScale" value="10000000" valuetype="data" />
      </video>
      <audio
        src="big_buck_bunny_1080p_surround_6000.ismv"
        systemBitrate="128000">
        <param name="trackID" value="1" valuetype="data" />
        <param name="trackName" value="audio1" valuetype="data" />
        <param name="timeScale" value="10000000" valuetype="data" />
      </audio>
    </switch>
  </body>
</smil>
```

As you can see, this file tells the server which video and audio tracks are available and, for each one, the physical PIFF file that holds it and the track ID within that file.
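To see what the server reads from this file, the following sketch lists each track in a server manifest using Python's standard `xml.etree.ElementTree`. It assumes the standard SMIL 2.0 namespace `http://www.w3.org/2001/SMIL20/Language`, which every element must be matched against; `list_server_tracks` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Standard SMIL 2.0 namespace used by the server manifest's root element.
SMIL_NS = {"smil": "http://www.w3.org/2001/SMIL20/Language"}


def list_server_tracks(ism_xml: str) -> List[Tuple[str, str, int, int, str]]:
    """Return (kind, src, systemBitrate, trackID, trackName) for every track."""
    root = ET.fromstring(ism_xml)
    tracks = []
    for switch in root.iterfind("smil:body/smil:switch", SMIL_NS):
        for elem in switch:
            kind = elem.tag.split("}")[-1]  # drop "{namespace}" to get "video"/"audio"
            params = {p.get("name"): p.get("value")
                      for p in elem.iterfind("smil:param", SMIL_NS)}
            tracks.append((kind, elem.get("src"), int(elem.get("systemBitrate")),
                           int(params["trackID"]), params["trackName"]))
    return tracks
```

Applied to the manifest above, this would return two `video` entries (330000 and 230000) and one `audio` entry whose source is the 6000 file with track ID 1.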

Here is an example of a client manifest file (.ismc extension) for the same asset:

```xml
<SmoothStreamingMedia
  MajorVersion="2"
  MinorVersion="1"
  Duration="5964800000">
  <StreamIndex
    Type="video"
    Name="video"
    Chunks="312"
    QualityLevels="2"
    Url="QualityLevels({bitrate})/Fragments(video={start time})">
    <QualityLevel
      Index="0"
      Bitrate="330000"
      FourCC="WVC1"
      MaxWidth="480"
      MaxHeight="272"
      CodecPrivateData="250000010FDB8A3BF21B8A3BF8EFF18044800000010E5A0040" />
    <QualityLevel
      Index="1"
      Bitrate="230000"
      FourCC="WVC1"
      MaxWidth="320"
      MaxHeight="180"
      CodecPrivateData="250000010FDB863BF21B8A3BF8EFF18044800000010E5A0040" />
    <c d="19999968" />
    <!-- omitted -->
  </StreamIndex>
  <StreamIndex
    Type="audio"
    Index="0"
    Name="audio"
    Chunks="299"
    QualityLevels="1"
    Url="QualityLevels({bitrate})/Fragments(audio={start time})">
    <QualityLevel
      FourCC="WMAP"
      Bitrate="128000"
      SamplingRate="44100"
      Channels="2"
      BitsPerSample="16"
      PacketSize="5945"
      AudioTag="354"
      CodecPrivateData="1000030000000000000000000000E0000000" />
    <c d="20433560" />
    <!-- omitted -->
  </StreamIndex>
</SmoothStreamingMedia>
```

As you can see, this file describes to the client the video and audio tracks and fragments ("chunks") available.
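The `Url` attribute of each `StreamIndex` is a template: the client substitutes a quality level's `Bitrate` for `{bitrate}` and a fragment's start time for `{start time}`. Start times are not listed explicitly in the example; each `c` element gives a duration `d`, so a fragment starts where the previous one ended (an explicit `t` attribute, when present, sets the start directly). A minimal sketch, with `fragment_urls` as a hypothetical helper name:

```python
import xml.etree.ElementTree as ET
from typing import Iterator


def fragment_urls(ismc_xml: str, stream_type: str) -> Iterator[str]:
    """Yield a fragment request path for every chunk and quality level of a stream type."""
    root = ET.fromstring(ismc_xml)
    for stream in root.iter("StreamIndex"):
        if stream.get("Type") != stream_type:
            continue
        template = stream.get("Url")
        levels = stream.findall("QualityLevel")
        start = 0
        for chunk in stream.findall("c"):
            # An explicit t attribute, if present, sets the start time directly.
            if chunk.get("t") is not None:
                start = int(chunk.get("t"))
            for level in levels:
                yield (template.replace("{bitrate}", level.get("Bitrate"))
                               .replace("{start time}", str(start)))
            # Otherwise the next fragment starts where this one ends.
            start += int(chunk.get("d"))
```

For the video stream above, the first two requests would be `QualityLevels(330000)/Fragments(video=0)` and `QualityLevels(230000)/Fragments(video=0)`, and the second chunk starts at 19999968 ticks.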

Again, if you would like to add an audio track, you can encode it in a separate PIFF file and add it to the video asset by editing the server and client manifests to include the new track. Very flexible!
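On the client manifest side, adding a track means appending a new audio `StreamIndex` with its own `Name`, which also becomes the track name in its fragment URLs. The sketch below does that with `ElementTree`. The `Language` attribute, the helper name `add_audio_stream`, and the `audio_fra` example are assumptions, so check which attributes your player actually reads; the server manifest would also need a matching `<audio>` entry pointing at the new `.isma` file.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Sequence


def add_audio_stream(ismc_xml: str, name: str, language: str,
                     quality_level_attrs: Dict[str, str],
                     chunk_durations: Sequence[int]) -> str:
    """Append an audio StreamIndex for a newly encoded track and return the XML."""
    root = ET.fromstring(ismc_xml)
    # The name is used in fragment URLs, so it must be unique.
    if any(s.get("Name") == name for s in root.iter("StreamIndex")):
        raise ValueError(f"duplicate StreamIndex Name: {name}")
    stream = ET.SubElement(root, "StreamIndex", {
        "Type": "audio",
        "Name": name,
        "Language": language,  # assumed attribute; verify your player uses it
        "Chunks": str(len(chunk_durations)),
        "QualityLevels": "1",
        "Url": f"QualityLevels({{bitrate}})/Fragments({name}={{start time}})",
    })
    ET.SubElement(stream, "QualityLevel", quality_level_attrs)
    for d in chunk_durations:
        ET.SubElement(stream, "c", {"d": str(d)})
    return ET.tostring(root, encoding="unicode")
```

Note that `ElementTree` drops XML comments when parsing, so run this on a complete manifest rather than on the abbreviated examples above.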

The work delivered by the Vatican Radio Web Team is a very good example of how you can use the robust features and flexibility of IIS Smooth Streaming to deliver a compelling, more engaging experience to more viewers.