(UPDATED) How can I create thousands of clients to execute an End To End load test?

[Update: After initially posting this article, I got feedback from a customer whose company's apps don't use web protocols; they use RDP. I realized I had been making an assumption, which I can't afford to do in my job, so I have updated the article to cover "all" protocols.]

With all of the testing we do in our labs, we often get asked how we emulate all of the clients so that we can see the full picture of how the entire system will behave under load. This question comes up with web-based systems that rely heavily on client-side JavaScript or use a lot of client plugins, and also with thick-client apps. My standard reply often catches people off guard: "Why in the world would you want to do that?" Yeah, I am being dramatic here. The answer I actually say out loud is much more polite [and the answer I would *LIKE* to give is usually much more sarcastic].

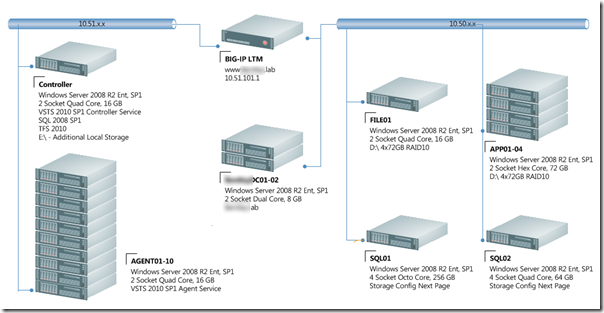

To perform proper end-to-end testing, there is no need to spin up actual clients or to simulate the client-side functionality for every test user. All you need to do is put a representative load of the traffic the server can expect onto the server, and then add one or two fully featured clients, each running a single-user test from the client end. That gives you all of the data you need. Let's look at an example. Below is an infrastructure diagram from a lab engagement I performed a couple of years ago:

- The 10.51.x.x network houses the test rig

- The 10.50.x.x network houses the SUT (System Under Test)

- The BigIP module in the middle represents the gateway that clients would take to get to the servers.
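The shape of such a run can be sketched in a few lines of Python. This is a minimal, in-process illustration under stated assumptions, not the Visual Studio rig used in the lab: `send_request` and `run_real_client` are hypothetical callables the caller supplies. The first stands in for one protocol-level request from a load test; the second stands in for one full client doing its local work plus its own round trips. Lightweight virtual users keep the server busy while the single real client is timed.

```python
import threading
import time
from typing import Callable


def run_end_to_end_test(
    send_request: Callable[[], None],
    run_real_client: Callable[[], None],
    virtual_users: int,
    warmup_s: float,
) -> float:
    """Keep the SUT under load with lightweight virtual users and time one real client.

    send_request and run_real_client are hypothetical placeholders supplied by the caller.
    Returns the real client's end-to-end time in seconds.
    """
    stop = threading.Event()

    def virtual_user() -> None:
        # A virtual user only generates server-bound traffic; no client-side work.
        while not stop.is_set():
            send_request()

    workers = [threading.Thread(target=virtual_user, daemon=True)
               for _ in range(virtual_users)]
    for worker in workers:
        worker.start()
    try:
        time.sleep(warmup_s)  # let the background load ramp up before measuring
        start = time.perf_counter()
        run_real_client()  # the one full client: local processing plus its own requests
        return time.perf_counter() - start
    finally:
        stop.set()  # each virtual user exits after its current request
        for worker in workers:
            worker.join()
```

In a real engagement the virtual users would run on separate agent machines (the 10.51.x.x network above) rather than as threads in one process; the point of the sketch is only that the timed client and the load generators are different things.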

Web Clients

When we create web tests to drive load against the server, these tests only need to generate the proper HTTP(S) traffic that flows through the BigIP. After all, that traffic is the only part of the client that the server sees. If a client has to crunch data locally for a while, that work does not affect other clients or the SUT, so we do not need to simulate it for every client we are emulating. We can use the environment above to drive hundreds or thousands of concurrent users against the SUT, and then add one more machine that runs a real client. That client gives us the client-side timings and, with them, the end-to-end timings under load.
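A web virtual user of this kind can be as simple as replaying the recorded request sequence and timing each response, without executing any client-side script. The sketch below is a hedged illustration: the `(method, path)` steps and the `base_url` are hypothetical, and the transport defaults to the standard library's `urllib.request` but can be swapped for a stub (which is also how it can be exercised without a live server).

```python
import time
import urllib.error
import urllib.request
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical recorded traffic: only what crosses the wire, no client-side script.
RECORDED_STEPS: Tuple[Tuple[str, str], ...] = (
    ("GET", "/"),
    ("GET", "/api/orders"),
    ("POST", "/api/orders"),
)


def urllib_transport(method: str, url: str) -> int:
    """Send one HTTP request and return its status code."""
    body = b"" if method == "POST" else None
    request = urllib.request.Request(url, data=body, method=method)
    try:
        with urllib.request.urlopen(request, timeout=30) as response:
            response.read()  # drain the body so the timing covers the full response
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # record 4xx/5xx responses instead of aborting the user


def run_web_virtual_user(
    base_url: str,
    steps: Sequence[Tuple[str, str]] = RECORDED_STEPS,
    transport: Optional[Callable[[str, str], int]] = None,
) -> List[Tuple[str, int, float]]:
    """Replay the recorded steps once; return (path, status, seconds) per request."""
    send = transport or urllib_transport
    results: List[Tuple[str, int, float]] = []
    for method, path in steps:
        start = time.perf_counter()
        status = send(method, base_url + path)
        results.append((path, status, time.perf_counter() - start))
    return results
```

A load harness would call `run_web_virtual_user` repeatedly from many threads or agent machines; the browser's rendering and script execution never happen, which is exactly why each virtual user is so cheap.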

All Other Client Types

When I talk about testing with thick clients, I mean any type of client that uses a protocol other than HTTP(S). These clients must be simulated with a test type other than Visual Studio web tests. In my world, that usually means Visual Studio unit tests and sometimes Visual Studio Coded UI tests. At the end of the day, though, the concept is identical: to simulate load, I need to generate whatever traffic flows out of a client, across the network, into a server, and back again. The key is that I focus ONLY on the traffic that crosses the network. If my app is a .NET application that talks to SQL via ADO.NET, all I need the harness to drive is the actual ADO.NET traffic. If it is a WCF app that uses the net.tcp binding, all I need to drive is the creation and transmission of the WCF payloads, and so on.
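For a non-HTTP protocol the same idea applies at the socket level: the harness only has to produce the bytes the real client would put on the wire and wait for the replies. The sketch below is purely illustrative and assumes a hypothetical length-prefixed framing (a 4-byte big-endian length followed by the payload). Real protocols have their own framing and are usually easier to drive through their own client libraries (for example, ADO.NET or a WCF channel inside a unit test), but the load-generation principle is the same.

```python
import socket
import struct
from typing import List


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def send_frame(sock: socket.socket, payload: bytes) -> None:
    # Hypothetical framing: 4-byte big-endian length prefix, then the payload.
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_frame(sock: socket.socket) -> bytes:
    """Read one length-prefixed frame."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return _recv_exact(sock, length)


def replay_session(sock: socket.socket, requests: List[bytes]) -> List[bytes]:
    """Send each captured request and wait for its reply, as one virtual user would."""
    replies = []
    for payload in requests:
        send_frame(sock, payload)
        replies.append(recv_frame(sock))
    return replies
```

Nothing here renders a UI or runs client business logic; the virtual user is just a request/response loop over a connection, which is why many of them fit on one agent.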

This table covers a few objections that I have heard before, as well as my responses to them:

| Objection | Response |
| --- | --- |
| How do we know that the traffic we send to the server will be the same if we do not use Coded UI tests? | When we create web tests, we record the actual traffic. We can change the traffic patterns just as easily with web tests as with Coded UI tests. The bottom line is that the tests will be only as accurate as the time and planning you put into creating them. |
| What is wrong with using Coded UI tests? | For one thing, Coded UI tests are harder to maintain than web tests. They are also more fragile (more likely to break when the application changes). |
| But we already maintain a suite of Coded UI tests for functional and integration testing. What's wrong with using them? | Tests designed for functional and integration testing are often not the kind of tests that should be used for load and performance testing. They serve different purposes and should be designed with different data and step execution patterns. |
| If we are not driving the full client, how do we account for the delays the servers will see while clients are busy with their own processing? | This is part of developing a good test plan. Your tests should use think times to simulate client pauses and similar delays (see my post on think time). You should already have a good idea, from development testing, of how much time a single client takes. |
| Are there other limitations with Coded UI tests for load testing? | Yes. While it is possible to drive load with Coded UI tests, you are limited to a maximum of one vUser per agent machine. |
| OK, what if I use unit tests instead of Coded UI tests? | You can obviously drive more vUsers with unit tests, but they are still more difficult to maintain (they have far less built-in support for dynamic load) and they still need more resources to execute each vUser (fewer concurrent tests per machine). To be fair, though, "your mileage may vary" (see this blog post). |
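Think times are what let a protocol-level test reproduce the pauses a real client causes while it does its own processing. As a minimal sketch, a virtual user can pause for a randomized interval around the think time recorded for each step. The normal distribution and the 20% standard deviation used here are assumptions for illustration, not a statement of how any particular tool computes think times.

```python
import random
import time
from typing import Optional


def sample_think_time(recorded_s: float, spread: float = 0.2,
                      rng: Optional[random.Random] = None) -> float:
    """Draw a think time around recorded_s, clamped to [0, 2 * recorded_s].

    spread is the standard deviation as a fraction of recorded_s (an assumption).
    """
    rng = rng or random.Random()
    value = rng.gauss(recorded_s, recorded_s * spread)
    return min(max(value, 0.0), 2 * recorded_s)


def pause_like_a_client(recorded_s: float, rng: Optional[random.Random] = None) -> float:
    """Sleep for a sampled think time and return the delay that was used."""
    delay = sample_think_time(recorded_s, rng=rng)
    time.sleep(delay)
    return delay
```

Randomizing rather than using the exact recorded value keeps thousands of virtual users from hitting the server in lockstep, and the clamp guarantees the delay is never negative.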

Wrapping it up

To decide which method to use to test an application end to end, I consider the maintainability of the code and the cost of developing and executing the tests (including hardware and labor costs). Then I choose the method that will give me accurate results and still fit within my budget. 99.9% of the time, that means using web or unit tests that do NOT exercise the client code but only send the necessary traffic across the wire. I then get the client timings from a single real client.
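The hardware side of that cost comparison is simple arithmetic: divide the target number of concurrent vUsers by what one agent machine can sustain, and round up. In the sketch below, the one-vUser-per-agent figure for Coded UI comes from the objections table; the capacities for unit and web tests are hypothetical placeholders, since the real numbers depend on the tests and the hardware and must be measured.

```python
import math
from typing import Dict

# vUsers one agent machine can sustain. Coded UI = 1 per the limitation above;
# the unit and web figures are hypothetical and must be measured for real tests.
VUSERS_PER_AGENT: Dict[str, int] = {
    "coded_ui": 1,
    "unit": 200,
    "web": 1000,
}


def agents_needed(target_vusers: int, test_type: str) -> int:
    """Number of agent machines required for target_vusers, rounding up."""
    if target_vusers < 0:
        raise ValueError("target_vusers must be non-negative")
    return math.ceil(target_vusers / VUSERS_PER_AGENT[test_type])


if __name__ == "__main__":
    for kind in VUSERS_PER_AGENT:
        print(kind, agents_needed(2500, kind))
```

With these placeholder figures, 2,500 concurrent vUsers would need 2,500 agents with Coded UI tests, 13 with unit tests, and 3 with web tests, plus the one or two machines running real clients for the end-to-end timings.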