Security guidelines to detect and prevent DOS attacks targeting IIS/Azure Web Role (PAAS)

In a previous post, we explained how to Install IIS Dynamic IP Restrictions in an Azure Web Role. In this article, we'll provide guidelines for collecting and analyzing data in order to detect potential DOS/DDOS attacks, along with tips to protect against those attacks. While the article focuses on web applications hosted in an Azure Web Role (PAAS), most of its content also applies to IIS hosted on premises, on Azure VMs (IAAS) or on Azure Web Sites.

I – Archive Web Role logs

Without any history of IIS logs, there is no way to know whether your web site has been attacked or hacked, or when a potential threat started. Unfortunately, many customers don't keep any history of their logs. This is a real issue when the application is hosted as an Azure Web Role (PAAS) because PAAS VMs are "stateless" and can be reimaged or deleted by operations such as scaling or a new deployment.

A comprehensive list of Azure logs is described in the following documents:

- Windows Azure PaaS Compute Diagnostics Data (see "Diagnostic Data Locations" section)

- Microsoft Azure Security and Audit Log Management (whitepaper)

To keep a history of your logs, the Windows Azure platform provides everything needed with Windows Azure Diagnostics (WAD). All you have to do is turn the feature on by Configuring Windows Azure Diagnostics, and your IIS logs will be automatically replicated to a central location in blob storage. One caveat is that a bad WAD configuration can prevent log replication and log scavenging/cleanup, which in the worst case may cause IIS logging to stop (see IIS Logs stops writing in cloud service). You also need to consider that keeping a history of logs in Azure storage can affect your Azure bill; one "trick" is to Zip your IIS log files before transferring with Windows Azure Diagnostics. For on-premises IIS, there are many resources describing how to archive IIS logs, and you may be interested in this script: Compress and Remove Log Files (IIS and others).
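As an illustration of the "zip before transfer" idea, here is a minimal Python sketch. It is not the script from the linked articles: the function name `compress_old_logs`, the `*.log` pattern and the one-day age threshold are assumptions chosen for the example. It only compresses files that have not been modified recently, to stay away from the log IIS is currently writing to.

```python
import time
import zipfile
from pathlib import Path


def compress_old_logs(log_dir, min_age_days=1.0, delete_original=False):
    """Zip each *.log file not modified for `min_age_days`; return the archives created."""
    cutoff = time.time() - min_age_days * 86400
    archives = []
    for log_file in sorted(Path(log_dir).glob("*.log")):
        if log_file.stat().st_mtime > cutoff:
            continue  # recently modified: probably still being written
        archive = log_file.with_suffix(".zip")
        with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
            zf.write(log_file, arcname=log_file.name)
        if delete_original:
            log_file.unlink()  # only after the archive was written successfully
        archives.append(archive)
    return archives
```

Keeping `delete_original` off by default is a deliberate choice: it lets you check the archives before removing anything.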

In some cases related to Azure Web Role, you need to gather all logs manually and immediately. This is true if you haven't set up WAD or if you can't wait for the next log replication. In this situation, you can manually gather all logs with minimal effort using the procedure described in Windows Azure PaaS Compute Diagnostics Data (see "Gathering The Log Files For Offline Analysis and Preservation"). The main limitation of this manual procedure is that you need to have RDP access to all VM instances.

Now that you have your logs handy, let's see how to analyze them.

II – Analyse your logs

LOGPARSER is the best tool to analyze all kinds of logs. If you don't like the command-line prompt, you can use LOGPARSER Studio (LPS) and read the following cool blog post from my colleague Sylvain: How to analyse IIS logs using LogParser / LogParser Studio. In this section, we'll provide very simple LOGPARSER queries on IIS and HTTPERR logs to spot potential DOS attacks.

Before running any Log Parser query, take a quick look at the size of the log files to see whether it is stable day after day or whether there are unexpected "spikes". Typically, a DOS attack that tries to "flood" a web application may translate into a significant increase in the size of the HTTPERR and IIS logs. To check log sizes, you can use Explorer, but you can also use LPS/LOGPARSER as it provides a file system provider (FSLOG). In LPS, you can use the built-in query "FS / IIS Log File Sizes" to list log file sizes:

SELECT Path, Size, LastWriteTime FROM '[LOGFILEPATH]' ORDER BY Size DESC
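If Log Parser is not at hand, the same size check can be approximated in Python. This is a sketch only: `log_file_sizes` mirrors the query above, while `size_spikes` and its "three times the median" rule are assumptions added for illustration, not a threshold recommended by any tool.

```python
import statistics
from datetime import datetime
from pathlib import Path


def log_file_sizes(log_dir, pattern="*.log"):
    """Return (path, size_in_bytes, last_write_time) tuples, largest file first."""
    rows = []
    for path in Path(log_dir).rglob(pattern):
        if path.is_file():
            info = path.stat()
            rows.append((str(path), info.st_size, datetime.fromtimestamp(info.st_mtime)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


def size_spikes(rows, factor=3.0):
    """Return the rows whose size exceeds `factor` times the median size."""
    if not rows:
        return []
    median = statistics.median(size for _, size, _ in rows)
    return [row for row in rows if row[1] > factor * median]
```

A file flagged by `size_spikes` is only a candidate for closer inspection; a legitimate traffic peak produces the same symptom.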

This first step can help to filter out "normal" logs and only keep "suspicious" logs. The next step is to start logs analysis. When it comes to IIS/Web Role analysis, there are 2 main log types to use:

- HTTPERR logs (default location: C:\Windows\System32\LogFiles\HTTPERR, location on web role: D:\Windows\System32\LogFiles\HTTPERR)

- IIS logs (default location: C:\inetpub\logs\LogFiles, location on web role: C:\Resources\Directory\{DeploymentID}.{Rolename}.DiagnosticStore\LogFiles\Web)

II.1 Analyzing HTTPERR log

HTTPERR logs are generally small and this is expected (see Error logging in HTTP APIs for details). Common errors are HTTP 400 (bad request), Timer_MinBytesPerSecond and Timer_ConnectionIdle. Timer_ConnectionIdle is not really an error: it simply indicates that an inactive client was disconnected after the HTTP keep-alive timeout was reached (see Http.sys's HTTPERR and Timer_ConnectionIdle). Note that the default HTTP keep-alive timeout in IIS is 120 seconds, while a browser like Internet Explorer uses an HTTP keep-alive timeout of 60 seconds. In this scenario, IE always disconnects first, so this shouldn't cause any Timer_ConnectionIdle error in HTTPERR. A very high number of Timer_ConnectionIdle errors may indicate a DOS/DDOS attack in which an attacker tries to consume all available connections, but it can also be caused by a non-IE client or a proxy using a higher keep-alive timeout (> 120s). Similarly, a lot of Timer_MinBytesPerSecond errors may indicate that malicious clients are trying to waste connections by sending "slow requests", but it can also mean that some clients simply have poor or slow network connections.

For logs analysis, I generally use a WHAT/WHO/WHEN approach:

WHAT:

SELECT s-reason, Count(*) as Errors FROM '[LOGFILEPATH]' GROUP BY s-reason ORDER BY Errors DESC

WHO:

SELECT c-ip, Count(*) as Errors FROM '[LOGFILEPATH]' GROUP BY c-ip ORDER BY Errors DESC

WHEN:

SELECT QUANTIZE(TO_TIMESTAMP(date, time), 3600) AS Hour, COUNT(*) AS Total FROM '[LOGFILEPATH]' GROUP BY Hour ORDER BY Hour

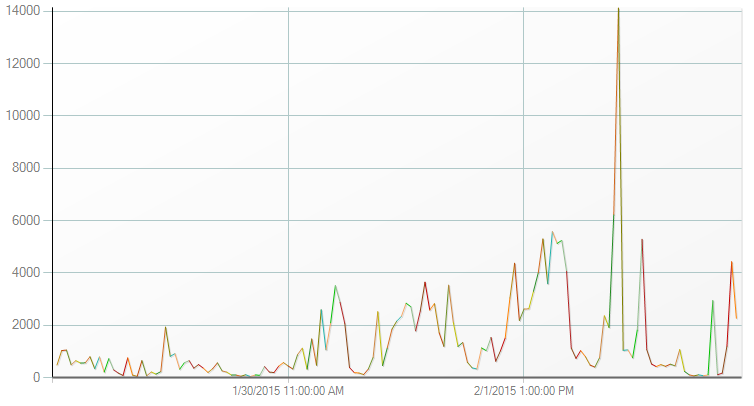

This allows to quickly see WHAT the top errors are, WHO triggered them (client IPs) and WHEN the errors occurred. Combined with the graph feature of Log Parser Studio, our WHEN query can quickly spot unexpected peak(s):

Depending on the results, some further filtering may be needed. For example, if the number of Timer_ConnectionIdle errors is very high, you can check the client IPs involved for this specific error:

SELECT c-ip, Count(*) as Errors FROM '[LOGFILEPATH]' WHERE s-reason LIKE '%Timer_ConnectionIdle%' GROUP BY c-ip ORDER BY Errors DESC

We can also filter on a suspicious IP to check when its requests occurred:

SELECT QUANTIZE(TO_TIMESTAMP(date, time), 3600) AS Hour, COUNT(*) AS Total FROM '[LOGFILEPATH]' WHERE c-ip='x.x.x.x' GROUP BY Hour ORDER BY Hour

If the above queries point to a suspicious IP, we can then look up the client IP using a whois/reverse DNS tool (https://whois.domaintools.com/) and blacklist it using either Windows Firewall, PAAS/IAAS ACLs or the IP Restrictions module.
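For readers who prefer scripting, the WHAT/WHO/WHEN breakdown can be sketched in Python as well. The code below assumes the log is space-delimited with a `#Fields:` header line naming the columns (including `date`, `time`, `c-ip` and `s-reason`); the function names are made up for this example, and the hourly bucket plays the role of `QUANTIZE(..., 3600)`.

```python
from collections import Counter


def read_w3c_log(lines):
    """Yield one dict per log entry, using the '#Fields:' header for column names."""
    fields = []
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
        elif line and not line.startswith("#") and fields:
            values = line.split(" ")
            if len(values) == len(fields):  # ignore truncated or malformed lines
                yield dict(zip(fields, values))


def what_who_when(lines, reason_field="s-reason"):
    """Return three Counters: errors per reason, per client IP and per hour."""
    what, who, when = Counter(), Counter(), Counter()
    for entry in read_w3c_log(lines):
        what[entry.get(reason_field, "-")] += 1
        who[entry.get("c-ip", "-")] += 1
        hour = entry.get("date", "") + " " + entry.get("time", "")[:2] + ":00"
        when[hour] += 1
    return what, who, when
```

Typical usage would be `what, who, when = what_who_when(open(path))`, followed by `what.most_common(10)` to list the top reasons.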

II.2 Analyzing IIS logs

For the IIS logs, I use the same WHAT/WHO/WHEN approach as above:

WHAT:

SELECT cs-uri-stem, Count(*) AS Hits FROM '[LOGFILEPATH]' GROUP BY cs-uri-stem ORDER BY Hits DESC

WHO:

SELECT c-ip, count(*) as Hits FROM '[LOGFILEPATH]' GROUP BY c-ip ORDER BY Hits DESC

WHEN:

SELECT QUANTIZE(TO_TIMESTAMP(date, time), 3600) AS Hour, COUNT(*) AS Total FROM '[LOGFILEPATH]' GROUP BY Hour ORDER BY Hour

The following query can also be very useful to check for errors over time:

ERRORS / HOUR:

SELECT date as Date, QUANTIZE(time, 3600) AS Hour, sc-status as Status, count(*) AS ErrorCount FROM '[LOGFILEPATH]' WHERE sc-status >= 400 GROUP BY date, hour, sc-status ORDER BY ErrorCount DESC
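The ERRORS / HOUR query can be approximated in Python too. This is a sketch under the assumption that the IIS log contains the `date`, `time` and `sc-status` fields and a `#Fields:` header; `errors_per_hour` is a name invented for the example.

```python
from collections import Counter


def errors_per_hour(lines, min_status=400):
    """Count entries with sc-status >= min_status, keyed by (date, hour, status)."""
    fields, counts = [], Counter()
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or not fields:
            continue
        entry = dict(zip(fields, line.split(" ")))
        status = entry.get("sc-status", "")
        if status.isdigit() and int(status) >= min_status:
            key = (entry.get("date", ""), entry.get("time", "")[:2], int(status))
            counts[key] += 1
    # Highest error counts first, like ORDER BY ErrorCount DESC
    return counts.most_common()
```

Each item of the result is a `((date, hour, status), count)` pair, so the first items point at the busiest error hours.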

The above queries are deliberately simple. Depending on the results, we will need to "polish" them by adding filtering, grouping, etc. There are already a lot of excellent articles covering this topic, so I won't reinvent the wheel:

- Inside Microsoft.com - Analyzing Denial of Service Attacks

- Log Parser Example Queries

- Recommended LogParser queries for IIS monitoring?

- Forensic Log Parsing with Microsoft's LogParser

III – Mitigating Denial Of Service attacks (DOS)

Security guidelines for IIS/Azure Web Role are described in the Windows Azure Network Security Whitepaper (see sections "Security Management and Threat Defense" and "Guidelines for Securing Platform as a Service"). While Azure implements sophisticated DOS/DDOS defense against large scale DOS attacks targeting Azure DCs or DOS attacks initiated from the DC itself, the document clearly mentions that "it is still possible for tenant applications to be targeted individually". This basically means that web applications in Azure should protect themselves against attackers with the same means as on-premises applications. In practice, this means putting the following actions in place:

Install Dynamic IP Restrictions (DIR) :

- PAAS : Install IIS Dynamic IP Restrictions in an Azure Web Role

- Azure Web Sites : Configuring Dynamic IP Address Restrictions in Windows Azure Web Sites

- IAAS/on premise : IIS 8.0 Dynamic IP Address Restrictions

Note: if you set up Dynamic IP Restrictions with "Deny Action Type" set to "Abort Request", the requests will be aborted and logged as "Request_Cancelled" in the HTTPERR log. Therefore, HTTPERR log analysis may show spikes related to the "Request_Cancelled" error. This is expected and simply shows that Dynamic IP Restrictions is doing its job.

Use a URL Rewrite blocking rule to block unsolicited requests detected through IIS log analysis (provided the unsolicited requests follow a specific pattern)

Use PAAS ACLs / IAAS ACLs whenever possible to whitelist/blacklist the IP ranges allowed/denied to access the application

The following PowerShell script can be used to automate HTTPERR log analysis and set up ACLs to blacklist frequently appearing IPs: Script to monitor and protect Azure VM against DOS

If you need to quickly set up Windows Firewall to block IP(s), remember that this can be done using: netsh advfirewall firewall add rule name=BLACKIPS dir=in interface=any action=block remoteip=x.x.x.x
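To show how log analysis and the firewall command fit together, here is a small Python sketch. It is not the PowerShell script referenced above: the helper names and the hit threshold are assumptions, and the command string is simply the one quoted in this article with the IP filled in. The code only builds the commands; it does not run them, so they can be reviewed first.

```python
import ipaddress
from collections import Counter


def frequent_ips(client_ips, threshold):
    """Return the IPs seen at least `threshold` times, most frequent first."""
    counts = Counter(client_ips)
    return [ip for ip, hits in counts.most_common() if hits >= threshold]


def netsh_block_commands(ips, rule_name="BLACKIPS"):
    """Build (but do not run) one netsh command per valid IP address."""
    commands = []
    for ip in ips:
        try:
            address = ipaddress.ip_address(ip)
        except ValueError:
            continue  # skip anything that is not a literal IP address
        commands.append(
            "netsh advfirewall firewall add rule "
            f"name={rule_name} dir=in interface=any action=block remoteip={address}"
        )
    return commands
```

Validating each value with `ipaddress` before building the command avoids pasting arbitrary log content into a command line.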

IV – Mitigating Distributed Denial Of Service attacks (DDOS)

While the above mitigations are valid for simple DDOS attacks, they may not be enough to mitigate sophisticated DDOS attacks.

In some situations, it's possible to find a "pattern" through log analysis. For example, inspecting the HTTP Referer header may reveal that the DDOS requests come from users visiting suspicious sites (typically porn sites). In this case, URL Rewrite can be used to filter malicious requests (see Blocking Image Hotlinking, Leeching and Evil Sploggers with IIS Url Rewrite).
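One way to look for such a referrer pattern is to count requests per referring host. The Python sketch below assumes the IIS log includes the `cs(Referer)` field (it must be enabled in the logging configuration) and a `#Fields:` header; `referer_hosts` is a name chosen for this example.

```python
from collections import Counter
from urllib.parse import urlsplit


def referer_hosts(lines):
    """Count requests per referring host, based on the cs(Referer) field."""
    fields, hosts = [], Counter()
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or "cs(Referer)" not in fields:
            continue
        entry = dict(zip(fields, line.split(" ")))
        referer = entry.get("cs(Referer)", "-")
        host = None
        if referer != "-":
            try:
                host = urlsplit(referer).hostname
            except ValueError:
                host = None  # malformed referrer value
        hosts[host or "(none)"] += 1
    return hosts.most_common()
```

A host that suddenly dominates the list during an attack window is a candidate for a URL Rewrite blocking rule.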

Mitigating more sophisticated DDOS originating from SPAM/botnets requires additional methods when it is not possible to find a pattern to distinguish legitimate requests from malicious ones. In this case, we need to combine sophisticated approaches to mitigate a sophisticated attack:

- use an IP reputation database to filter requests from malicious IPs (Barracuda implements a reputation database)

- use Captcha (Introducing Three-Tier Captcha to Prevent DDOS Attack in Cloud Computing)

- use a SINKHOLE mechanism (Understanding DNS Sinkholes – A weapon against malware)

Unfortunately, implementing the above mitigations requires custom code or relies on ISP capabilities (SINKHOLE). Dedicated security software like Barracuda Web Application Firewall implements some of the above features and provides an "all-in-one" approach to protect your web server. The following blog provides a quick summary of the setup steps: How to Setup and Protect an Azure Application with a Barracuda Firewall
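To give an idea of the custom code an IP reputation filter involves, here is a minimal Python sketch. It assumes the reputation data is available as a plain list of CIDR ranges, which is a simplification: real reputation feeds and products such as the one mentioned above have their own formats and update mechanisms. The function names are hypothetical.

```python
import ipaddress


def load_blocklist(cidr_lines):
    """Parse CIDR strings into network objects, ignoring blanks and '#' comments."""
    networks = []
    for raw in cidr_lines:
        text = raw.split("#", 1)[0].strip()
        if text:
            networks.append(ipaddress.ip_network(text, strict=False))
    return networks


def is_blocked(client_ip, networks):
    """Return True if client_ip falls inside any listed network of the same IP version."""
    address = ipaddress.ip_address(client_ip)
    return any(address in net for net in networks if net.version == address.version)
```

A linear scan is fine for a short list; a large reputation database would call for a faster lookup structure.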

V – Other security best practices

While this is unrelated to DOS/DDOS attack, it is worth mentioning some basic security rules:

Make sure your Azure VMs are up to date

For Web Role (PAAS), don't pin a specific Guest OS version unless this is absolutely necessary, and understand how Guest OS versions are updated (see Role Instance Restarts Due to OS Upgrades)

Enable antimalware, which is now released for IAAS and PAAS: Microsoft Antimalware for Azure Cloud Services and Virtual Machines

Consider using Azure monitoring and Application Insights to detect a misbehaving web application, which can be an indication of a DOS/DDOS attack:

- How to Monitor Cloud Services

- Get started with Application Insights

- Install Application Insights Status Monitor to monitor website performance

- Setting up Application Insights for monitoring an Azure Cloud Service

Regularly check IIS/HTTPERR and Windows logs for suspicious activity and malicious requests. Some script injection attacks can be detected using the following approach:

If you plan to do penetration testing in Azure, make sure to notify the operation team and follow the process described on https://security-forms.azure.com/penetration-testing/terms

If you are interested in Azure Security, the following page is a very good central repository of resources: Security in Azure.

I hope you'll find the above information useful and remember that "forewarned is forearmed"…

Emmanuel