Debugging and tuning HTTP.SYS output cache on W2K8R2 / IIS 7.5

During the past months, we've seen more and more customers moving from W2K3/IIS6 to W2K3R2/IIS7.5. QXL Ricardo is one of them and I had the chance to work with Mr Jeremy Calles on a couple of IIS performance issues. Jeremy was my contact at QLX Ricardo as the project manager in charge of the migration.

Because of the nature of his activity, QXL Ricardo's web site hosts millions of directories/static images on a shared NAS. A natural way to achieve great performances consists to kernel cache as much content as possible and limit unnecessary requests to the NAS.

To achieve this goal, Jeremy tweaked a couple of IIS settings in order to :

1- Minimize file access to the NAS

Since the NAS doesn't host any ASP.NET content, the tuning basically consisted to prevent SMB traffic caused by FCN (File Change Notifications) for both ASP.NET and static content :

HKLM\Software\Microsoft\ASP.NET\ FCNMode = 1

HKLM\System\CurrentControlSet\Services\InetInfo\Parameters\ DoDirMonitoringForUNC = 0

ApplicationHost.configallowSubDirConfig = false

The allowSubDirConfig tweak was done following W3WP process dump analysis showing dozen of threads blocked with the following callstack :

0:050> k

Child-SP RetAddr Call Site

00000000`06d0e1b8 000007fe`fdd51203 ntdll!ZwDelayExecution+0xa

00000000`06d0e1c0 000007fe`fb35125d KERNELBASE!SleepEx+0xab

00000000`06d0e260 000007fe`fb2111a6 iisutil!CReaderWriterLock3::_LockSpin+0xbd

00000000`06d0e2a0 000007fe`fb211b40 nativerd!CONFIG_CACHE::ReadLock+0x16

00000000`06d0e2d0 000007fe`f58e9775 nativerd!CONFIG_SYSTEM::GetUniqueConfigPath+0x130

00000000`06d0e960 000007fe`f58e7fd7 iiscore!W3_CONTEXT::SetupMetadata+0x248

00000000`06d0f0f0 000007fe`f58e8a11 iiscore!W3_CONTEXT::SetupStateMachinePhase2+0xdf

00000000`06d0f1f0 000007fe`f58e8ecb iiscore!W3_CONTEXT::SetupStateMachine+0xb40

00000000`06d0f700 000007fe`f79310d2 iiscore!W3_MAIN_CONTEXT::OnNewRequest+0x1d7

00000000`06d0f730 000007fe`f793109c w3dt!UL_NATIVE_REQUEST::DoWork+0x126

00000000`06d0f790 000007fe`f74f1fba w3dt!OverlappedCompletionRoutine+0x1c

00000000`06d0f7c0 000007fe`f74f2024 w3tp!THREAD_POOL_DATA::ThreadPoolThread+0x7a

00000000`06d0f810 000007fe`f74f20a1 w3tp!THREAD_POOL_DATA::ThreadPoolThread+0x34

00000000`06d0f840 00000000`77a6f56d w3tp!THREAD_MANAGER::ThreadManagerThread+0x61

00000000`06d0f870 00000000`77ba3021 kernel32!BaseThreadInitThunk+0xd

00000000`06d0f8a0 00000000`00000000 ntdll!RtlUserThreadStart+0x1d

The lock being held by a thread doing file I/Os with the NAS :

Child-SP RetAddr Call Site

00000000`00e0f6b8 000007fe`fd53860d ntdll!ZwQueryFullAttributesFile+0xa

00000000`00e0f6c0 000007fe`fac18048 KERNELBASE!GetFileAttributesExW+0x9d

00000000`00e0f790 000007fe`fac21f0d nativerd!FILE_HELPER::GetFileInfo+0x38

00000000`00e0f7c0 000007fe`fac55497 nativerd!CONFIG_CACHE::GetFileChanged+0x4d

00000000`00e0f880 000007fe`fac5552b nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0xc7

00000000`00e0f8e0 000007fe`fac5552b nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0x15b

00000000`00e0f940 000007fe`fac5552b nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0x15b

00000000`00e0f9a0 000007fe`fac5552b nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0x15b

00000000`00e0fa00 000007fe`fac5552b nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0x15b

00000000`00e0fa60 000007fe`fac56020 nativerd!CONFIG_CACHE::HandleChangeNotificationRecursive+0x15b

00000000`00e0fac0 000007fe`fac561e0 nativerd!CONFIG_CACHE::HandleChangeNotification+0x250

00000000`00e0fca0 00000000`773df56d nativerd!NOTIFICATION_THREAD::ThreadProc+0x150

00000000`00e0fd30 00000000`77513021 kernel32!BaseThreadInitThunk+0xd

00000000`00e0fd60 00000000`00000000 ntdll!RtlUserThreadStart+0x1d

2 - Ensure that kernel (HTTP.SYS) cache holds as much as images as possible for the longest possible time

To achieve this, the following settings were tweaked :

HKLM\System\CurrentControlSet\Services\InetInfo\Parameters\FileAttributeCheckThreshold = FFFFFFFF

HKLM/System\CurrentControlSet\Services\InetInfo\Parameters\ObjectCacheTTL = FFFFFFFF

HKLM/System\CurrentControlSet\Services\HTTP\Parameters\URIScavengerPeriod = FFFFFFFF

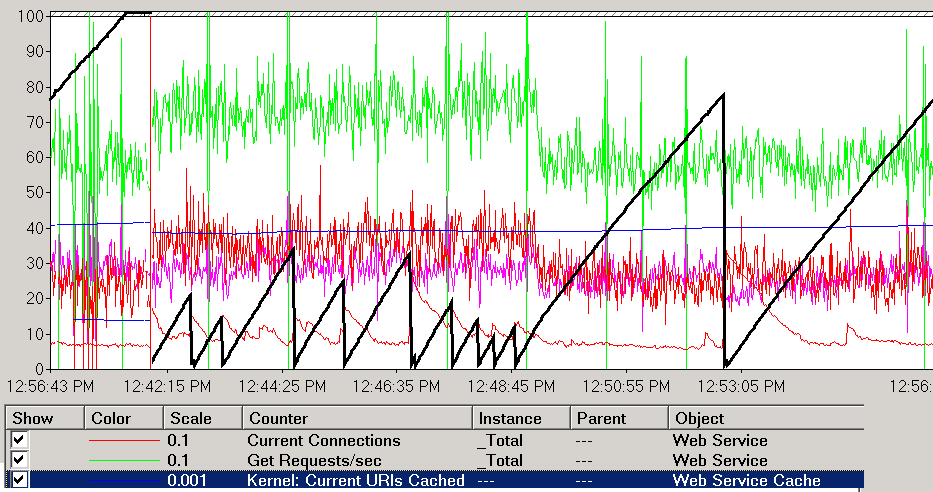

After having put all the tweaks in production, we monitored the HTTP.SYS cache using perfmon and observed the following behavior :

Unfortunately, the Kernel Output cache was flushed on a regular basis for unknown reasons. At this point, we decided to get a HTTP.SYS ETW log but, while the log was clearly showing URLs being flushed, it didn't tell us the reason for the flush. At this point, the only option was to debug HTTP.SYS hoping to break when cache entries were deleted and inspect call-stack to understand the cause of the flush. Fortunately, Jeremy was able to provide a reproduction scenario for the issue and we were able to setup a VM with KD configured. We then put a couple of breakpoints:

1: kd> bl

0 e fffff880`0237b3a0 0001 (0001) HTTP!UlFlushCache

1 e fffff880`02389b20 0001 (0001) HTTP!UlFlushCacheByUri

2 e fffff880`02353790 0001 (0001) HTTP!UlpFlushFilterPeriodicScavenger

3 e fffff880`02376880 0001 (0001) HTTP!UlFlushCacheByProcess

4 e fffff880`02389fa0 0001 (0001) HTTP!UlMgmtFlushCache

5 e fffff880`02389950 0001 (0001) HTTP!UlpFlushUri

6 e fffff880`02369f20 0001 (0001) HTTP!UlpZombifyEntry

The first breakpoint we hit was the following:

HTTP!UlFlushCacheByProcess:

fffff880`02376880 4883ec38 sub rsp,38h

1: kd> kp

Child-SP RetAddr Call Site

fffff880`044b2978 fffff880`02317071 HTTP!UlFlushCacheByProcess

fffff880`044b2980 fffff880`0238f56f HTTP!UlDetachProcessFromAppPool

fffff880`044b2a00 fffff800`02ba19af HTTP!UlCleanup

fffff880`044b2a30 fffff800`02b87604 nt!IopCloseFile

fffff880`044b2ac0 fffff800`02ba14a1 nt!ObpDecrementHandleCount

fffff880`044b2b40 fffff800`02ba13b4 nt!ObpCloseHandleTableEntry

fffff880`044b2bd0 fffff800`02889853 nt!ObpCloseHandle

…

The above call-stack is pretty self-explanatory. Cached entries are flushed whenever the application pool recycles. This makes sense. In this specific case, the application pool was recycling because of the idle timeout (20 minutes by default). However, there can be many other reasons for application pool recycling and you'd better know that all cached entries associated to the recycled application pool will be flushed!

Once we fix the recycling issue, we hit another breakpoint :

Child-SP RetAddr Call Site

fffff880`05a6e988 fffff880`02389db1 HTTP!UlFlushCacheByUri

fffff880`05a6e990 fffff800`02ba66b7 HTTP!UlFlushResponseCacheIoctl

fffff880`05a6ea10 fffff800`02ba6f16 nt!IopXxxControlFile

fffff880`05a6eb40 fffff800`02889853 nt!NtDeviceIoControlFile

fffff880`05a6ebb0 00000000`76f2ff2a nt!KiSystemServiceCopyEnd

00000000`00fef898 000007fe`f4e5128f ntdll!ZwDeviceIoControlFile

00000000`00fef8a0 000007fe`f4e54dba HTTPAPI!HttpApiSynchronousDeviceControl

00000000`00fef930 000007fe`fa5f39b4 HTTPAPI!HttpFlushResponseCache

00000000`00fef9a0 000007fe`fa53474e w3dt!UlAtqFlushUlCache

00000000`00fef9d0 000007fe`fa536084 cachhttp!UL_RESPONSE_CACHE_ENTRY::~UL_RESPONSE_CACHE_ENTRY

00000000`00fefa10 000007fe`fa533c7a cachhttp!UL_RESPONSE_CACHE_ENTRY::`scalar deleting destructor'

00000000`00fefa40 000007fe`f88f697c cachhttp!UL_RESPONSE_CACHE_ENTRY::DereferenceCacheData

00000000`00fefa70 000007fe`f88f94df IISUTIL!CLKRLinearHashTable::_DeleteNode

00000000`00fefaa0 000007fe`f88f5acf IISUTIL!CLKRLinearHashTable::_DeleteIf

00000000`00fefb20 000007fe`fa5327f9 IISUTIL!CLKRHashTable::DeleteIf

00000000`00fefb70 00000000`76ef4a2b cachhttp!UL_RESPONSE_CACHE::ScavengerCallback

00000000`00fefbc0 00000000`76ef4285 ntdll!RtlpTpTimerCallback

00000000`00fefc00 00000000`76ef4b1f ntdll!TppTimerpExecuteCallback

00000000`00fefc60 00000000`76cdf56d ntdll!TppWorkerThread

00000000`00feff60 00000000`76f13281 kernel32!BaseThreadInitThunk

After some investigation, we found out that the cache was flushed because of the 900 seconds value used for HttpResponseCacheTTL. We boosted this value and were finally able to stick our cache entries for the application pool lifetime.

We do hope the above tweaks and observations may be useful to other folks.

Happy Tuning!

Jeremy from QXL Ricardo & Emmanuel from the FRIIS support team