Windows Azure Guidance – Failure recovery – Part III (Small tweak, great benefits)

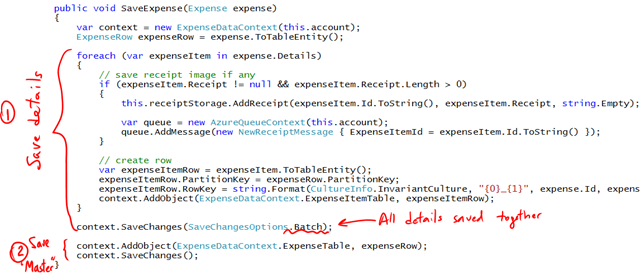

In the previous post, I asked which small change in the code would yield a big improvement. The answer is:

What changed?

- No try/catch: a failure now surfaces to the caller as an exception.

- We reversed the order of writes: first we write the details, then we write the “header” or “master” record for the expense.

If the last SaveChanges fails, orphaned detail records and images are left behind, but the user never sees a partial expense (only the exception) and would presumably re-enter the expense report. A background process eventually cleans up the orphans. Simple and efficient.
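
To make the ordering argument concrete, here is a minimal sketch in Python rather than the original C#. It is not the code from the post: `InMemoryStore`, `save_expense`, `visible_expenses`, and `clean_orphans` are hypothetical names, and two dictionaries stand in for the real storage. It only illustrates why writing the header last keeps a failed save invisible to the user.

```python
class InMemoryStore:
    """Hypothetical stand-in for the detail and header tables."""

    def __init__(self, fail_header_write=False):
        self.details = {}  # (expense_id, item_index) -> detail row
        self.headers = {}  # expense_id -> header row
        self.fail_header_write = fail_header_write

    def save_details(self, expense_id, items):
        for index, item in enumerate(items):
            self.details[(expense_id, index)] = item

    def save_header(self, expense_id, header):
        if self.fail_header_write:
            raise IOError("simulated failure of the final write")
        self.headers[expense_id] = header


def save_expense(store, expense_id, header, items):
    # No try/except here: a failure propagates to the caller.
    store.save_details(expense_id, items)  # details first
    store.save_header(expense_id, header)  # header last; only now is the expense visible


def visible_expenses(store):
    # Users only ever see expenses that have a header record.
    return sorted(store.headers)


def clean_orphans(store):
    # Background task: remove details whose header was never written.
    orphans = [key for key in store.details if key[0] not in store.headers]
    for key in orphans:
        del store.details[key]
    return len(orphans)
```

With `fail_header_write=True`, `save_expense` raises, `visible_expenses` stays empty, and `clean_orphans` removes the leftover details. A real cleaner would probably also need some way to skip saves that are still in progress, for example by only deleting orphans older than a threshold; that part is an assumption and is not shown here.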