Windows Azure Guidance – Background Processing III (creating files for another system)

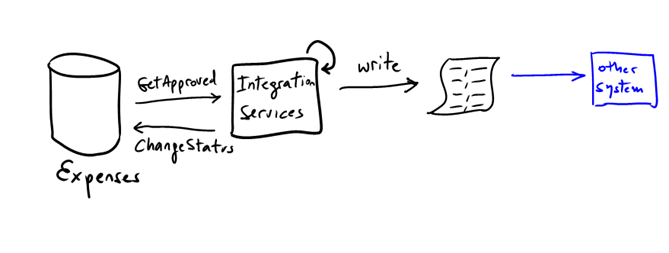

Last week Scott walked me through his current design for the “Integration Service” in our sample. Here’s some preview of this early thinking. As a reminder, our fictitious scenario has a process that runs every once in a while and generates flat files for some other system to process: it simply scans the database for “approved” expenses and then generates a flat file with information such as: employee, amount to be reimbursed, cost center, etc. After that, it updates the state of the expense to “processing”.

Note: in the real world, there would be a symmetric process that would import the results of that external process. For example, there would be another file coming back with a status update for each expense. We are just skipping that part for now.

Surprisingly, this very simple scenario raises quite some interesting questions on how to design it:

- How to implement the service?

- How to schedule the work?

- How to handle exceptions? What happens if the process/machine/data center fails in the middle of the process?

- How do we recover from those failures?

- Where does the “flat file” go?

- How do we send the “file” back to the on-premises “other system”?

Note: as you should know by now, we pick up our scenarios precisely because they are the perfect excuse to talk about this considerations. This is our “secret agenda” :-)

Option 1: No service

The simplest implementation would be to have no service at all. We could simply have a web page that returns the text file upon request. Potential disadvantages or considerations of this approach:

- More load on the app that might be serving users. Of course this depends highly on the computational needs of the process. One could argue that for a-Expense, it’s not that much. After all we are just collecting expense reports and the most computation intensive operation we have is adding all line items into a total value…not much really.

- If the process is much more complex than that, or if you have lots and lots of records to go over, then a synchronous operation (like a call to a web page) can have problems: timeouts, etc.

- The current storage architecture in aExpense (which is based on Azure Tables) is optimized towards a different use case (adding and retrieving individual user expenses). The integration process access strategy is slightly different (e.g. by date and expense state, but not by user for sure). So queries against the current schema might not be optimal. For example, they could lead to “table scans”.

- If you use SQL Azure this is less of an issue, because you can have multiple indexes of course.

Option 2: A worker dedicated to generating this files

This would be straight forward, but the question here is: how often do we need these process to run? Lets assume we run it say…twice a month? A dedicated worker might not be worth it. It might if the process is very computational intensive though (again, probably not the case for a-Expense).

Option 3: implement this as a “task” inside a Worker

This is what we discussed in the previous post: having a worker role running tasks or jobs. The advantage of the approach is that a Option 2 is a special case of Option 3. In a-Expense we already have some other tasks that run in the background (the image compressor + thumbnails generator), so we could just add another task for creating the files.

We decided to go with Option 3 with a twist. We split the task in two steps:

- A task to “flatten” the data model.

- A second process to create the files.

Task #1:

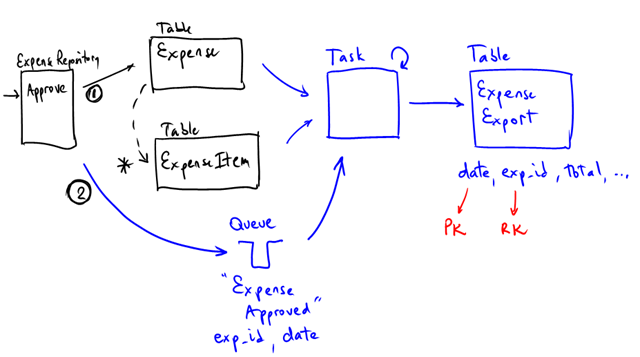

Every time there’s an approval (which updates the state of the expense report on the Expense table), a message is also written into a queue. The message contains the id of the expense and the date of approval.

The first job picks up the message, uses the id to get the expense from the (two) tables, compute the total (which is a the sum of all line items), store a single record to another table (Expense Export), updates the state of the expense to “in process” and finally deletes the message from the queue.

This “Expense Export” table is essentially an exact representation of the file to be exported. The Partition Key of the new table is the “approval date” and the Row Key is the expense id. The combination is obviously unique. Notice that this design optimizes access by date. Which is what we want.

If the task fails for whatever reason and the message is reprocessed (the message in the queue is the last thing the task does), then the insert to the export table will simply fail (duplicate key).

At this point we have two options for creating the files:

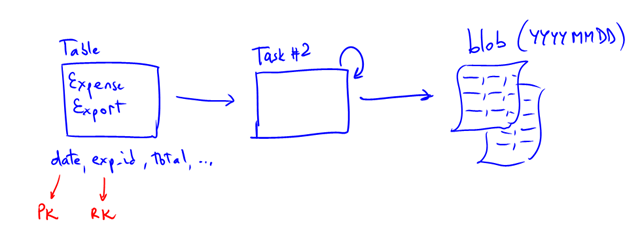

- Create another task to take records from the Export Table into a blob (using a naming convention to group expensed by date for example). This task would not be triggered by a message in a queue, but rather by time. The on-premises process will need to be modified to get the files from blob storage, most probably using the account keys.

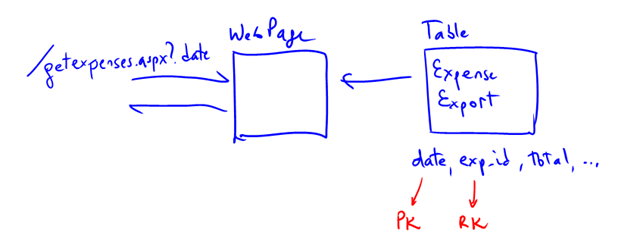

2. The other alternative is to use a variation of Option 1 described at the beginning of this article: have a web page that returns the file by simply reading the Export Table (which has now an optimized design for this type of access: by date):

But we still haven’t decided on which just yet.