Microsoft Office Communications Server 2007 Speech Server Development - Introduction

Office Communications Server 2007 is Microsoft's IP communication solution. It lets companies use their network infrastructure for voice and video calls, instant messaging and much more. You may ask what the benefit is of using the computer network as opposed to the internal telephony network - you don't pay by the minute on either of them. However, on the computer network it is only a matter of software to integrate telephony, video and IM with desktop applications. By routing the IP packets through a server in the DMZ, users can call each other at no cost wherever they are. Presence is also important to mention - users can see each other's status (whether they are online, busy, away, in a meeting with their laptop, out of the office, etc.). The presence icon tells the caller in advance whether the other party is likely to answer the call, or whether it's not the right time to call. It is integrated into every piece of the Office System (SharePoint and the Office client products). Online users will stop using mobiles - it's a whole change in the communication culture. This article outlines OCS 2007 Speech Server, which is an additional server role for Office Communications Server.

OCS 2007 Speech Server

Every software client or device is a UC endpoint in OCS - whether it's an IP phone, Office Communicator (the client of OCS, like Messenger), a video camera in an A/V meeting room, etc. Now imagine that, besides these endpoints, you also have non-human endpoints connected to your OCS/telephony infrastructure. These endpoints are software-driven and can communicate with callers on the phone. An example of such an endpoint is Exchange Server Voice Access, where you can get Exchange to read out your emails and do other clever things (say "Clear my calendar for today", which sends a cancellation to every attendee of your meetings for today). You can write these applications in managed code, and they can be deployed and enabled in your OCS infrastructure. Their phone numbers can even be enabled for callers outside your organization (this is how Exchange Server Voice Access works at Microsoft).

How to write programs for Speech Server?

There are three important areas in a voice-enabled system:

- Speech: the quality of the speech engine

- Voice recognition: the quality of the voice recognition engine

- Programmability: how easy it is to develop voice-enabled applications on this platform

I'll start with programmability and let you judge the other two. There are two programming models that you can use. In the web programming model, the voice application is hosted in IIS as a web page and the dialog is represented by a set of postbacks. The other programming model uses Windows Workflow Foundation to design the conversation's flow. I'll focus on the latter today and skip the web-based one. For the workflow programming model, you need Visual Studio 2005 SP1, IIS, MSMQ and Speech Server installed on your PC (see the Prerequisites section).

Fire up Visual Studio. Once you have installed the development components, there's a project template called "Voice Response Workflow Application". You can start dragging and dropping workflow activities into the workflow designer straight away to describe the conversation's flow. There are many workflow activities that you can use: the Statement activity, the QuestionAnswer activity, the GetAndConfirm activity (this one won't step to the next activity until the caller has confirmed his/her answer), Menu, etc. When your workflow asks something, you define the question for the activity, like "Can I have your employee ID please?", and then you define what format you expect the answer in - this definition is called the "Grammar". The grammar is a pre-defined pattern that describes the different ways the answer can be said. For example, "yes, it's 1234", or "my employee id is 1234", or "1234", or "it is 1234" and so on. We define a placeholder in this pattern for the number, because that's the only thing we are interested in, and we define the different ways the rest of the answer can be phrased. There's a designer that helps you create the grammar.
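
To make the idea of a pattern with a placeholder more concrete, here is a minimal sketch in plain Python. It is not the Speech Server grammar format and does not use any Speech Server API; the pattern list and the function name `recognize_employee_id` are made up for illustration, and it works on text rather than audio. It only shows how several phrasings can carry the same value.

```python
import re

# Hypothetical carrier phrases around the employee ID. Only the digits are
# captured, which mirrors the "placeholder" in the grammar.
PATTERNS = [
    r"yes,? it'?s (?P<id>\d+)",
    r"my employee id is (?P<id>\d+)",
    r"it is (?P<id>\d+)",
    r"(?P<id>\d+)",
]


def recognize_employee_id(utterance):
    """Return the employee ID as a string, or None if no pattern matches."""
    text = utterance.strip().lower()
    for pattern in PATTERNS:
        match = re.fullmatch(pattern, text)
        if match:
            return match.group("id")
    return None


if __name__ == "__main__":
    for said in ["Yes, it's 1234", "My employee ID is 1234", "1234", "Hello"]:
        print(repr(said), "->", recognize_employee_id(said))
```

Anything that matches none of the patterns comes back as `None`, which is roughly the situation in which a real voice application would ask the question again.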

The grammar has an output variable, which you can read once the caller has answered your question. This is where you need to write code - when the caller has answered the question, you get the employee ID in a variable that you can convert to a numeric value, and you can act on this number - for example, you can look up the employee ID in Active Directory.
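
The handling step described above could look roughly like the following Python sketch. Everything in it is an assumption made for illustration: the `DIRECTORY` dictionary stands in for Active Directory, and `handle_answer` is a hypothetical name, not a Speech Server event handler.

```python
# Stand-in for Active Directory: a plain dictionary with made-up data.
DIRECTORY = {1234: "Alice Example"}


def handle_answer(raw_value):
    """Convert the recognized value to a number and look it up."""
    try:
        employee_id = int(raw_value)
    except (TypeError, ValueError):
        # The recognized value was missing or not numeric.
        return "Sorry, I did not catch that."
    name = DIRECTORY.get(employee_id)
    if name is None:
        return f"I could not find employee {employee_id}."
    return f"Thank you, {name}."


if __name__ == "__main__":
    print(handle_answer("1234"))
    print(handle_answer("42"))
    print(handle_answer(None))
```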

I've prepared a small application just to show how this works. It calls you up, asks you about the number of computers and persons in your household, and submits the answers to a database. I could have written a more intelligent application, but this will be enough to understand how it works.

Prerequisites

To be able to play with the product, you need to install all of its components in your development environment.

In order to install the Speech Recognition Server component, you need to enable a few features if they are not already enabled. You need to restart the installer every time you enable a feature - it won't refresh automatically. To save some time, copy my features list (Vista):

You also need Visual Studio 2005 with SP1 (VS 2005 RTM will not do) and the Visual Studio 2005 extensions for .NET Framework 3.0 (Windows Workflow Foundation) package in order to install the Development Tools component of the product. Installing the Visual Studio 2005 Service Pack 1 Update for Windows Vista is also recommended if you run Visual Studio 2005 on Vista.

After the product is set up, at least one language pack needs to be installed for the Windows services to start (you can find the packs on the installation DVD or you can download them from the Internet). I've installed the English (United Kingdom) pack. US and Australian English packs are also available on the DVD, along with 11 additional languages. The full list is:

- Chinese (People's Republic of China)

- Chinese (Taiwan)

- English (Australia)

- English (United Kingdom)

- English (United States)

- French (Canada)

- French (France)

- German (Germany)

- Italian (Italy)

- Japanese (Japan)

- Korean (Korea)

- Portuguese (Brazil)

- Spanish (Spain)

- Spanish (United States)

What is a Grammar? What is a Rule?

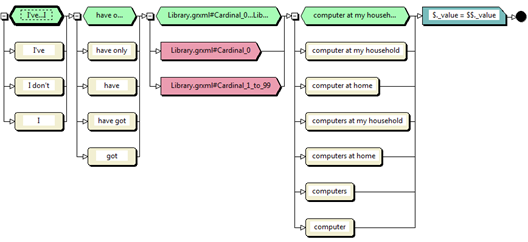

The "grammar" is a collection of "rules". A rule is a mini workflow in which you describe the structure of the expected sentence. In my case, I expect the answer "I have X computers at home" or something similar from the end user. I designed my rule to accept more elaborate answers as well, like "I have only 2 computers at my household" or "I have got no computers". It's up to you how fine-grained and resilient you make your rules. The result of the rule is a value, which in my case is the number of computers. The following is a screenshot of my rule from the Visual Studio Rule Editor:

The green shapes are called Lists and the white ones are Phrases. Only one of the Phrases inside a List shape applies. The pink shapes are Rule references (RuleRefs), which are used to reference other rules. The two RuleRefs in my case reference numeric rules: they recognize the number 0 and the numbers 1 to 999 and convert the recognized words to a numeric value. If you look into those rules, they have several lines where they combine the recognized words and calculate the numeric result. The result is written to the $$._value member variable, which is then copied to the $._value member variable by a Script Tag (blue). The value in $._value can then be referenced in the Voice workflow and used for further tasks (in my case, confirming the number of computers to the end user). After the grammar is compiled, the outcome is an XML file with a .grxml extension.
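
As a rough illustration of what such numeric rules compute, the following Python sketch combines recognized number words into a value between 0 and 999. It is not the script used inside the built-in rules; the function name `words_to_number` and the word tables are my own, and treating "no" as 0 is an assumption taken from the "I have got no computers" example. The sketch is lenient: it does not check word order.

```python
UNITS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5, "six": 6,
    "seven": 7, "eight": 8, "nine": 9, "ten": 10, "eleven": 11,
    "twelve": 12, "thirteen": 13, "fourteen": 14, "fifteen": 15,
    "sixteen": 16, "seventeen": 17, "eighteen": 18, "nineteen": 19,
}
TENS = {
    "twenty": 20, "thirty": 30, "forty": 40, "fifty": 50,
    "sixty": 60, "seventy": 70, "eighty": 80, "ninety": 90,
}


def words_to_number(words):
    """Convert words such as 'two hundred and five' to an int in 0..999."""
    tokens = [t for t in words.lower().replace("-", " ").split() if t != "and"]
    if tokens in (["zero"], ["no"]):
        return 0  # the separate "zero" rule
    value = 0
    for token in tokens:
        if token in UNITS:
            value += UNITS[token]
        elif token in TENS:
            value += TENS[token]
        elif token == "hundred":
            value *= 100
        else:
            raise ValueError(f"unrecognized word: {token!r}")
    if not 1 <= value <= 999:
        raise ValueError(f"not a number from 1 to 999: {words!r}")
    return value


if __name__ == "__main__":
    for said in ["no", "two", "twenty-one", "two hundred and five"]:
        print(said, "->", words_to_number(said))
```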

What is the Voice Workflow?

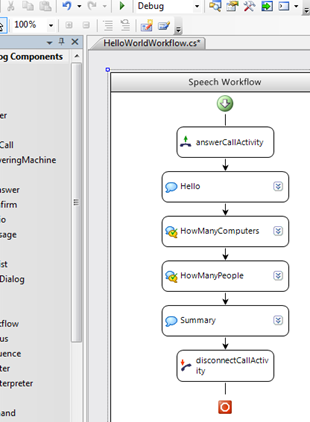

With the rule designed, let's work on the workflow part, which controls the main flow. The rule that I described above is evaluated in the HowManyComputers QuestionAnswer activity.
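
To give a feel for the flow without the designer, here is a small Python simulation of the survey conversation. It is only a sketch under my own assumptions: the real workflow is built from Speech Server activities in Visual Studio, while here `get_and_confirm` and `run_survey` are hypothetical functions, the caller's speech is replaced by a scripted list of already-recognized answers, and the prompt wording is invented.

```python
def get_and_confirm(prompt, answers, transcript):
    """Ask the question until the caller confirms the recognized number."""
    while True:
        transcript.append(prompt)
        reply = next(answers).strip()
        if not reply.isdigit():
            transcript.append("Sorry, I did not understand.")
            continue
        transcript.append(f"You said {reply}. Is that correct?")
        if next(answers).strip().lower() == "yes":
            return int(reply)


def run_survey(scripted_answers):
    """Run both questions and return the collected values and the prompts."""
    answers = iter(scripted_answers)
    transcript = []
    computers = get_and_confirm(
        "How many computers are in your household?", answers, transcript)
    persons = get_and_confirm(
        "How many persons live in your household?", answers, transcript)
    transcript.append("Thank you, goodbye.")
    return {"computers": computers, "persons": persons}, transcript


if __name__ == "__main__":
    result, spoken = run_survey(["2", "no", "3", "yes", "four", "4", "yes"])
    for line in spoken:
        print(line)
    print(result)
```

The loop in `get_and_confirm` imitates the behaviour described earlier for the GetAndConfirm activity: the flow does not move on to the next question until the caller has confirmed the answer. If the scripted answers run out, `next` raises `StopIteration`, which in this sketch plays the role of the caller hanging up.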

How can I start?

I recommend installing the developer samples. Once they are installed, I suggest opening the HelloWorld project from the C:\Program Files\Microsoft Office Communications Server 2007 Speech Server\Samples\Workflow\HelloWorld folder and playing with it.

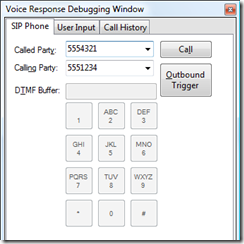

You can test your application by pressing the F5 key - you'll get the Voice Response Debugging window, where you need to click on the Call button:

When the workflow starts and the server asks you a question, you are redirected to the second tab and need to click on the Start Recording button. Say your answer and Speech Server will recognize it.

When your answer has been recognized, click on the Submit button to post your input back to your workflow.

Questions?

Don't hesitate to ask!