Arbitration and Translation, Part 1

A while back, Jake Oshins answered a question on NTDEV about bus arbitration, and afterwards I asked him if he could write a couple of posts about it for the blog. Here is part 1.

History Lesson

In the history of computing, most machines weren’t PCs. PCs, and the related “industry standard” server platforms, may constitute a huge portion of the computers that have been sold in the last couple of decades, but even during that time, there have been countless machines, both big and small, which weren’t PCs. Windows, at least those variants which are derived from Windows NT (which include Windows XP and everything since), was originally targeted at non-PC machines, specifically those with a MIPS processor and a custom motherboard which was designed in-house at Microsoft. In the fifteen years that followed that machine, NT ran on a whole pile of other machines, many with different processor architectures.

My own career path involved working on the port of Windows NT to PowerPC machines. I wrote HALs and worked on device drivers for several RS/6000 workstations and servers which (briefly) ran NT. When I came to Microsoft from IBM, the NT team was just getting into the meat of the PnP problem. The Windows 95 team had already done quite a bit to understand PnP, but their problem space was really strongly constrained. Win95 only ran on PCs, and only those with a single processor and a single root PCI bus.

Very quickly, I got sucked into the discussion about how to apply PnP concepts to machines which were not PCs, and also how to extend the driver model in ways that would continue to make it possible to have one driver which ran on any machine, PC or not. If the processor target wasn’t x86, you’d need to recompile it. But the code itself wouldn’t need changing. If the processor target was x86, even if the machine wasn’t strictly a PC, your driver would just run.

In order to talk about non-PC bus architectures, I want to briefly cover PC buses, for contrast. PCs have two address spaces, I/O and memory. You use different instructions to access each. I/O uses “IN, OUT, INS, and OUTS.” That’s it. Memory uses just about any other instruction, at least any that can involve a pointer. I/O space has no indirection mechanism comparable to the way virtual memory indirects memory. That’s all I’ll say about those here. If you want more detail, there have been hundreds of good explanations of this. My favorite comes from Mindshare’s ISA System Architecture, although that’s partly because that one existed back when I didn’t fully understand the problem space. Perhaps there are better ones now.

In the early PC days, the processor bus and the I/O bus weren’t really separate. There were distinctions, but those weren’t strongly delineated until PCI came along, in the early ’90s. PCI was successful and enduring in no small part because it was defined entirely without reference to a specific processor or processor architecture. The PCI Spec has almost completely avoided talking about anything that happens outside of the PCI bus. This means, however, that any specific implementation has to have something which bridges the PCI spec to the processor bus. (I’m saying “processor bus” loosely here to mean any system interconnecting processors, memory and the non-cache-coherent I/O domains. This sometimes gets referred to as a “North Bridge,” too.)

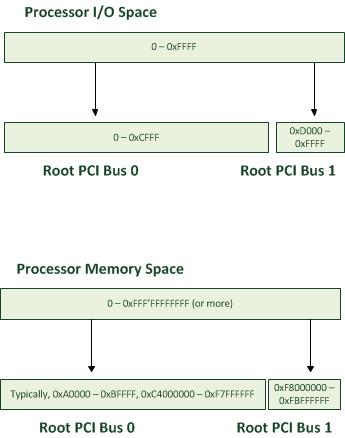

The processor bus then gets mapped onto the I/O subsystem, specifically one or more root PCI buses. The following diagram shows a machine that has two root PCI buses (which is not at all typical this year, but was very typical of PC servers a decade ago). The specific addresses could change from motherboard to motherboard and were reported to the OS by the BIOS.

You’ll notice that processor I/O space is pretty limited. It’s even more limited when you look at the PCI-to-PCI bridge specification, which says that downstream PCI buses must allocate chunks of I/O address space on 4K boundaries. This means that there are only a few possible “slots” to allocate from, and a relatively small number of PCI buses can allocate I/O address space at all.
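To put rough numbers on that: x86 port addresses are 16 bits wide, so processor I/O space is 64K, and a bridge’s I/O window is aligned and sized in 4K units. A minimal sketch of the arithmetic follows. It assumes, as an illustration rather than a rule from the spec, that the first 4K is left to legacy ISA and motherboard devices and is never handed to a bridge; the function name is hypothetical.

```c
/* x86 port addresses are 16 bits wide, so processor I/O space is 64K. */
#define X86_IO_SPACE_SIZE  0x10000u
/* PCI-to-PCI bridge I/O windows are aligned and sized in 4K units. */
#define BRIDGE_IO_GRANULE  0x1000u
/* Assumption for this sketch: the first 4K holds legacy ISA/motherboard
 * devices and is not available for bridge windows. */
#define LEGACY_RESERVED    0x1000u

/* Hypothetical helper: how many distinct 4K windows could be given out. */
unsigned bridge_io_windows(unsigned space, unsigned reserved, unsigned granule)
{
    return (space - reserved) / granule;
}
```

Under that assumption, `bridge_io_windows(X86_IO_SPACE_SIZE, LEGACY_RESERVED, BRIDGE_IO_GRANULE)` gives 15 possible windows for the whole machine, and every bridge that gets one consumes at least 4K, even if the devices behind it need only a handful of ports.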

Attempts to Expand I/O Space

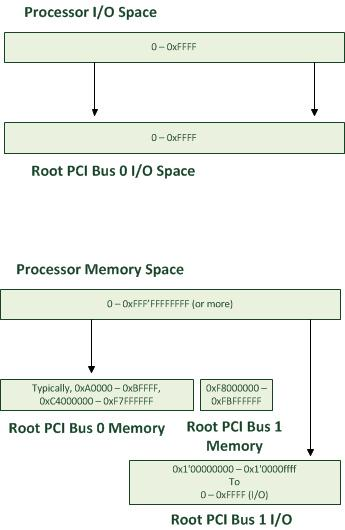

Today, this lack of I/O space is mostly handled by creating devices which only use memory space (or memory-mapped I/O space, as it’s sometimes called). But in the past, and in some current very-high-end machines, multiple PCI I/O spaces are mapped into a machine by mapping them into processor memory space rather than processor I/O space. I’ve debugged many a machine that had a memory map like the following.

In this machine, if your device is on Root PCI Bus 1 or one of its children, you need to use memory instructions, complete with virtual address mappings, to manipulate its registers. If your device is plugged into Root PCI Bus 0, then you use I/O instructions. While that’s a little bit hard to code for (more on that later), it’s nice because each PCI bus has its full 64K of I/O address space.
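A minimal sketch of what that translation looks like, assuming a hypothetical memory map: Root PCI Bus 0 passes bus I/O addresses straight through to processor I/O space, while Root PCI Bus 1’s I/O space appears in processor memory starting at 0xF0000000. That base is an invented value; on a real machine the addresses come from firmware and vary by motherboard. The type and function names are illustrative only.

```c
#include <stdint.h>

/* Which processor address space a translated resource lives in.
 * Hypothetical stand-ins for CmResourceTypePort / CmResourceTypeMemory. */
typedef enum { XLATE_PORT, XLATE_MEMORY } xlate_space;

/* How one root PCI bus's I/O space appears to the processor. */
typedef struct {
    xlate_space space;  /* processor address space the bus I/O lands in */
    uint64_t    base;   /* processor address that bus I/O address 0 maps to */
} root_bus_io_map;

/* The processor's view of a bus I/O address. */
typedef struct {
    xlate_space space;
    uint64_t    start;
} translated_resource;

/* Hypothetical memory map (illustrative values only). */
static const root_bus_io_map k_root_buses[] = {
    { XLATE_PORT,   0x0 },          /* Root PCI Bus 0: native processor I/O */
    { XLATE_MEMORY, 0xF0000000u },  /* Root PCI Bus 1: I/O in memory space */
};

/* Translate a bus-relative I/O address on the given root bus (0 or 1)
 * into the processor's address space and address. */
translated_resource translate_io(unsigned root_bus, uint32_t bus_io_addr)
{
    translated_resource t;
    t.space = k_root_buses[root_bus].space;
    t.start = k_root_buses[root_bus].base + bus_io_addr;
    return t;
}
```

The same bus I/O address, 0x3F8, lands in processor I/O space when the device sits under Root PCI Bus 0 and at processor memory address 0xF00003F8 when it sits under Root PCI Bus 1. A driver therefore can’t assume a single access method.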

In theory, the secondary root PCI buses can have even more than 64K of space. The PCI spec allows for 32 bits of I/O space, and devices are required to decode 32-bit I/O addresses. Since it’s all just mapped into processor memory space, which is large, you can have a really large I/O space. In practice, though, many devices didn’t follow the spec, and the one machine I’ve seen that depended on this capability had a very, very short list of compatible adapters.

Non-Intel Processors

If you’ve ever written code for a processor that Intel didn’t have a hand in designing, you’ve probably noticed that the concept of I/O address spaces is pretty rare elsewhere. (Now please don’t write to me telling me about some machine that you worked on early in your career. I’ve heard those stories. I’ll even bore you with my own as penance for sending me yours.) Let’s just stop the discussion by pointing out that MIPS, Alpha and PowerPC never had any notion of I/O address space, and Itanic has an I/O space, but only if you look at it from certain angles. Those are the non-x86 processors that NT has historically run on.

Chipset designers who deal with non-PC processors and non-PC chipsets often do something very similar to what was just described above, where the north bridge translates I/O to memory, except that not even Root PCI Bus 0 has any native I/O space mapping. All the root PCI buses map their I/O spaces into processor memory space.

Windows NT Driver Contract

About now, you’re probably itching to challenge my statement (above) where I said you could write a driver which runs just fine regardless of which sort of processor address space your device shows up in.

Interestingly, I’ve been working on HALs and drivers within Microsoft (and at IBM before that) for about 16 years now, and I always knew that I understood the contract. I also knew that few drivers not shipped with NT followed the contract. What I didn’t know was that, even though the “rules” are more or less described in the old DDK docs, very few people outside of Microsoft had internalized those rules, and in fact one major driver consulting and teaching outfit (who shall remain nameless, but whose initials are “OSR”) was actually teaching a different contract.

After much discussion about this a few years ago, and from my own experience, I believe that it was essentially an unreasonable contract, in that it was untestable if you didn’t own a big-iron machine with weird translations or a non-PC machine running a minority processor.

I’ll lay out the contract here, though, for the sake of completeness.

1. There are “raw” resources and “translated” resources. Raw resources are in terms of the I/O bus which contains the device. Translated resources are in terms of the processor. Every resource claim has both forms.

2. Bus drivers take raw resources and program the bus, the device or both so that the device registers show up at that set of addresses.

3. Function drivers take the translated resources and use them in the driver code, as the code runs on the processor. Function drivers must ignore the raw resource list. Even if the function driver was written by a guy who is absolutely certain that his device appears in I/O space, because it is a PCI device with one Base Address Register of type I/O, the driver must still look at the resource type in the translated resources.

4. If your device registers are in I/O space from the point of view of the processor, your translated resources will be presented as CmResourceTypePort. If your translated resources are of this type, you must use “port” functions to access your device. These functions have names that start with READ_PORT_ and WRITE_PORT_.

5. If your device registers are in memory space from the point of view of the processor, your translated resources will be presented as CmResourceTypeMemory. If they are of this type, you must first call MmMapIoSpace to get a virtual address for that physical address. Then you use “memory” functions, with names that start with READ_REGISTER_ and WRITE_REGISTER_. When your device gets stopped, you call MmUnmapIoSpace to release the virtual address space that you allocated above.
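Here is a minimal sketch of rules 3 through 5. It is written in plain portable C rather than against the WDK so that it runs anywhere. The types and names (`res_desc`, `plan_access`, and so on) are hypothetical stand-ins. A real function driver would walk the CM_PARTIAL_RESOURCE_DESCRIPTOR entries in the translated list it receives with IRP_MN_START_DEVICE (AllocatedResourcesTranslated) and switch on CmResourceTypePort versus CmResourceTypeMemory.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins for CmResourceTypePort / CmResourceTypeMemory. */
typedef enum { RES_PORT, RES_MEMORY } res_type;

typedef struct {
    res_type type;
    uint64_t start;
    uint32_t length;
} res_desc;

/* Which accessor family to use (READ_PORT_* vs READ_REGISTER_*). */
typedef enum { ACCESS_PORT_FUNCS, ACCESS_REGISTER_FUNCS } access_family;

typedef struct {
    access_family family;
    bool needs_mapping;  /* map at start (MmMapIoSpace), unmap at stop */
} access_plan;

/* Rule 3: decide from the TRANSLATED descriptor only. The raw descriptor
 * is accepted here just to make the point that it is ignored. */
access_plan plan_access(const res_desc *raw, const res_desc *translated)
{
    access_plan p;
    (void)raw;  /* function drivers must not look at the raw list */
    if (translated->type == RES_PORT) {
        p.family = ACCESS_PORT_FUNCS;      /* rule 4: port functions */
        p.needs_mapping = false;
    } else {
        p.family = ACCESS_REGISTER_FUNCS;  /* rule 5: map, then register functions */
        p.needs_mapping = true;
    }
    return p;
}
```

The case the contract exists for is a raw descriptor that says “port” (an I/O BAR) paired with a translated descriptor that says “memory,” as on a root PCI bus whose I/O space is mapped into processor memory. Only the translated side decides which functions to use.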

This contract works. (No, really, I’m certain. I’ve written a lot of code that uses it.) But it’s not an easy contract to code to, and I’ll lay out the issues:

· The “PORT” functions and the “REGISTER” functions are not truly symmetric. The string forms, which transfer a whole buffer, behave differently. The PORT functions assume the register is a FIFO. The REGISTER functions assume the buffer refers to a region of memory space. So you pretty much have to ignore the string forms of these and code your own with a loop.

· Either every access to your device has an “if port, else memory” structure to it, or you create a function table that accesses the device, with variant port and memory forms.

· The ever-so-popular driver structure where you define your registers in a C-style struct and then call MmMapIoSpace and lay your struct over top of your device memory just doesn’t work in any machine that translates device memory to processor I/O. (Yes, I’ve even seen one of those.)
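The sketch below shows the function-table approach from the second point, with hand-rolled loops in place of the string forms, as the first point suggests. To keep it runnable outside the WDK, the table is backed by a simulated device. The wiring is an assumption: in a real driver, one table instance would wrap READ_PORT_UCHAR/WRITE_PORT_UCHAR, another would wrap READ_REGISTER_UCHAR/WRITE_REGISTER_UCHAR, and the driver would pick one at start time. All names here are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-device accessor table; one instance per address space. */
typedef struct {
    uint8_t (*read8)(void *ctx, uint32_t offset);
    void    (*write8)(void *ctx, uint32_t offset, uint8_t value);
} dev_ops;

/* Drain a FIFO register: read the SAME offset 'count' times. */
void read_fifo(const dev_ops *ops, void *ctx, uint32_t fifo_off,
               uint8_t *buf, size_t count)
{
    for (size_t i = 0; i < count; i++)
        buf[i] = ops->read8(ctx, fifo_off);
}

/* Copy a run of ordinary registers: read CONSECUTIVE offsets. */
void read_block(const dev_ops *ops, void *ctx, uint32_t start_off,
                uint8_t *buf, size_t count)
{
    for (size_t i = 0; i < count; i++)
        buf[i] = ops->read8(ctx, start_off + (uint32_t)i);
}

/* Simulated device so the sketch runs outside the WDK. Offset 0 is a
 * FIFO; offsets 1..15 are plain read/write registers. */
#define FIFO_OFFSET 0u

typedef struct {
    uint8_t fifo[8];
    size_t  fifo_head;
    size_t  fifo_len;
    uint8_t regs[16];
} sim_device;

static uint8_t sim_read8(void *ctx, uint32_t offset)
{
    sim_device *d = ctx;
    if (offset == FIFO_OFFSET)  /* each read pops one byte; 0 when empty */
        return d->fifo_head < d->fifo_len ? d->fifo[d->fifo_head++] : 0;
    return d->regs[offset];
}

static void sim_write8(void *ctx, uint32_t offset, uint8_t value)
{
    sim_device *d = ctx;
    if (offset != FIFO_OFFSET)  /* writes to the FIFO are ignored here */
        d->regs[offset] = value;
}

const dev_ops sim_ops = { sim_read8, sim_write8 };
```

Because everything goes through `read8`, neither helper cares which address space backs the table. The FIFO-versus-memory distinction lives in which helper you call, not in which accessor family you happen to have.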

In the end, most driver writers outside of the NT team either ignore the contract because they are unaware of it, or ignore it because they have no way to test their driver in non-PC machines. Imagine telling your boss that you have functions which deal with I/O mapped into processor memory in your driver but you’ve never seen them run. So he can either ship untested code or pony up and buy you an HP Superdome Itanic, fully populated with 256 processors just to test on.