Scaling a Multi-Tenant Application with Azure DocumentDB

Best Practices for Tenant Placement and Load Balancing

Introduction

A question that we are frequently asked is “How do I design a multi-tenant application on top of Azure DocumentDB?” There are many answers to this question, and the best answer depends on your application’s particular scenario.

At a high level, depending on the scale of your tenants, you could choose to partition by database or by collection. In cases where tenant data is smaller and tenancy numbers are higher, we suggest that you store data for multiple tenants in the same collection to reduce the overall resources necessary for your application. You can identify your tenant by a property in your document and issue filter queries to retrieve tenant-specific data. You can also use DocumentDB’s users and permissions to isolate tenant data and restrict access at a resource level via authorization keys.

In cases where tenants are larger, need dedicated resources, or require further isolation, you can allocate dedicated collections or databases for tenants. In either case, DocumentDB will handle a significant portion of the operational burden for you as you scale out your application’s data store.

In this article, we will discuss the concepts and strategies to help guide you towards a great design to fit your application’s requirements.

What is a Collection?

Before we dive deeper into partitioning data, it is important to understand what a collection is and what it isn’t.

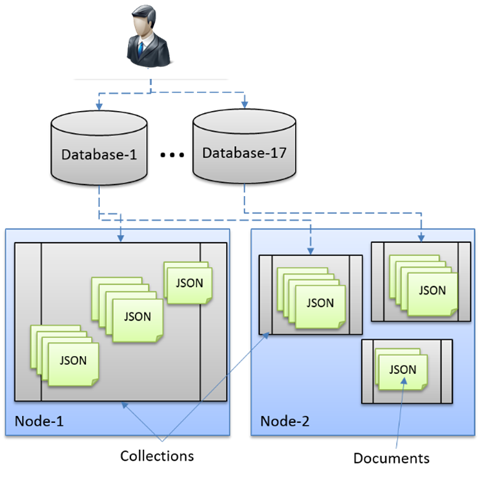

As you may already know, a collection is a container for your JSON documents. Collections are also DocumentDB’s unit of partition and boundary for the execution of queries and transactions. Each collection provides a reserved amount of throughput. You can scale out your application, in terms of both storage and throughput, by adding more collections, and distributing your documents across them.

It is absolutely imperative to understand that collections are not tables. Collections do not enforce schema; in other words, you can store different types of documents with different schemas in the same collection. You can track different types of entities simply by adding a “type” attribute to your document.
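As a minimal sketch of the “type” attribute idea, the snippet below keeps customer and order documents side by side in one in-memory list that stands in for a collection. The document shapes, the `group_by_type` helper, and the `"unknown"` fallback are illustrative assumptions, not part of DocumentDB.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List

Document = Dict[str, Any]

# Hypothetical documents with different schemas stored in the same collection.
collection: List[Document] = [
    {"id": "1", "type": "customer", "name": "Contoso"},
    {"id": "2", "type": "order", "customerId": "1", "total": 42.5},
    {"id": "3", "type": "order", "customerId": "1", "total": 10.0},
]


def group_by_type(documents: Iterable[Document]) -> Dict[str, List[Document]]:
    """Group documents by their "type" attribute; untyped documents go under "unknown"."""
    grouped: Dict[str, List[Document]] = defaultdict(list)
    for doc in documents:
        grouped[doc.get("type", "unknown")].append(doc)
    return dict(grouped)
```

Calling `group_by_type(collection)` separates the two entity kinds again, even though nothing in the collection enforced a schema on them.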

Unlike some other document databases, there is nothing wrong with having a lot of collections to achieve greater scale and performance. You may, however, want to reuse collections to save on cost.

Sharding with DocumentDB

You can achieve near-infinite scale (in terms of storage and throughput) for your DocumentDB application by horizontally partitioning your data – a concept commonly referred to as sharding.

Sharding is a standard application pattern for achieving high scalability for large web applications. Applications that require the benefits of transactions will need to partition their data carefully based on a key, with each partition small enough to fit into a transaction domain (i.e. a collection). Sharded applications must be aware that data is spread across a number of partitions or transaction domains, and they need logic to select a partition and interact with it based on a shard key.

A DocumentDB Application sharded across databases and collections.

Partitioning Scopes

The decision of whether to shard across multiple transactional domains depends on the scale of your application – as sharding generally involves additional data management logic in your application.

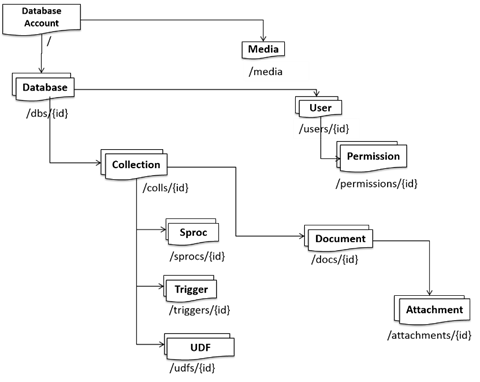

Hierarchical Resource Model under a Database Account

Let’s take a closer look at DocumentDB’s resource model and the various scopes that you could potentially partition your data across:

Placing all tenants in a single collection - This is generally a good starting point for most applications – e.g. whenever the total resource consumption across all of your tenants is well within the storage and throughput limitations of a single collection.

Security can be enforced at the application level simply by adding a tenant property inside your document and enforcing a tenant filter on all queries (e.g. SELECT * FROM collection c WHERE c.userId = "Andrew"). Security can be enforced at the DocumentDB level as well by creating a user for each tenant, assigning permissions to tenant data, and querying tenant data via the user’s authorization key.

Major benefits of storing tenant data within a single collection include reducing complexity, ensuring transactional support across application data, and minimizing financial cost of storage.

Placing tenants across multiple collections – In cases where your application needs more storage or throughput capacity than a single collection can handle, you can partition data across multiple collections. Fortunately, there is no need to set a limit on the size of your database and the number of collections. DocumentDB allows you to dynamically add collections and capacity as your application grows. You will, however, need to decide how the application will partition tenant data and route requests, which we’ll discuss in just a moment.

In addition to increased resource capacity, an advantage of partitioning data across multiple collections is the ability to allocate more resources to larger tenants than to smaller ones by placing them in less dense collections (e.g. you can place a tenant that needs dedicated throughput in its own collection).

Placing tenants across multiple databases – For the most part, placing tenants across databases works fairly similarly to placing tenants across collections. As shown in the resource model diagram above, the major distinction between DocumentDB collections and databases is that users and permissions are scoped at the database level. In other words, each database has its own set of users and permissions, which you can use to isolate specific collections and documents.

Partitioning tenants by database may simplify user and permission management in scenarios where tenants require an extremely large set of users and permissions. When tenants are partitioned by database and a tenant leaves the application, the tenant’s data, along with its users and permissions, can all be discarded together simply by deleting the database.

Placing tenants across multiple database accounts – Likewise, placing tenants across database accounts works fairly similarly to placing tenants across databases. The major distinction between database accounts and databases is that the master/secondary keys, DNS endpoints, and billing are scoped at the database account level.

Partitioning tenants by database account allows applications to maintain isolation between tenants in scenarios where tenants require access to their own database account keys (e.g. tenants require the ability to create, delete, and manage their own set of collections and users).
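To make the single-collection option above more concrete, here is a small sketch of enforcing the tenant filter at the application level. It assumes a tenant property named `tenantId` (the inline example earlier uses `userId`), and it assumes the query is sent as a query-plus-parameters specification; both helper functions are hypothetical and do not call any SDK.

```python
from typing import Any, Dict, Iterable, List

Document = Dict[str, Any]


def tenant_query(tenant_id: str) -> Dict[str, Any]:
    """Build a query spec that always carries the tenant filter.

    Passing the tenant as a parameter (rather than concatenating it into
    the query text) keeps a crafted tenant name from changing the query.
    """
    return {
        "query": "SELECT * FROM c WHERE c.tenantId = @tenantId",
        "parameters": [{"name": "@tenantId", "value": tenant_id}],
    }


def filter_for_tenant(documents: Iterable[Document], tenant_id: str) -> List[Document]:
    """In-memory equivalent of the filter, useful for checking the intended behaviour."""
    return [doc for doc in documents if doc.get("tenantId") == tenant_id]
```

Routing every read through one helper like `tenant_query` is a design choice: the filter cannot be forgotten on an individual query, which is the main risk of application-level isolation.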

Common Sharding Patterns

If you’re partitioning data across multiple transactional domains, your application will need some sort of heuristic to partition its data and route requests to the correct partition. Some common heuristics for partitioning data include:

Range Partitioning

Partitions are assigned based on whether the partitioning key is inside a certain range. An example could be to partition data by timestamp or geography (e.g. zip code is between 30000 and 39999).

Range-Partitioning by Month

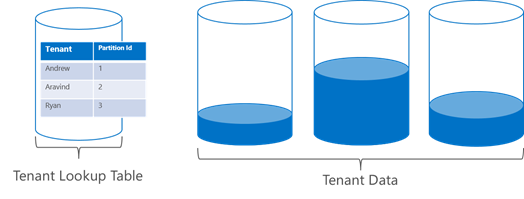
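A range resolver can be sketched in a few lines. The collection names and start dates below are made up for illustration; each collection is assumed to hold documents dated on or after its start date and before the next one, with the last range left open-ended.

```python
from bisect import bisect_right
from datetime import date

# Hypothetical monthly ranges, sorted by start date.
RANGE_STARTS = [date(2015, 1, 1), date(2015, 2, 1), date(2015, 3, 1)]
COLLECTIONS = ["events-2015-01", "events-2015-02", "events-2015-03"]


def resolve_range_partition(day: date) -> str:
    """Return the collection whose range contains the given date."""
    # bisect_right counts the range starts that are <= day;
    # the last of those is the range the date falls into.
    index = bisect_right(RANGE_STARTS, day) - 1
    if index < 0:
        raise KeyError(f"no partition covers {day}")
    return COLLECTIONS[index]
```

Because the ranges are sorted, ageing out the oldest month only means dropping the first entry of both lists.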

Lookup Partitioning

Partitions are assigned based on a lookup directory of discrete values that map to a partition. This is generally implemented by creating a lookup map that keeps track of which data is stored on which partition. You can cache lookup results to avoid making multiple round-trips. An example could be to partition data by user (e.g. partition G contains Harry, Ron, and Hermione and partition S contains Draco, Vincent, and Gregory) or country (e.g. partition Scandinavia contains Norway, Denmark, and Sweden).

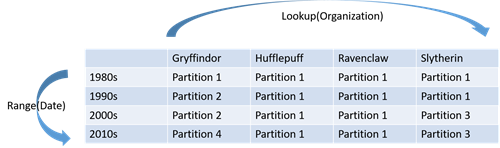

Lookup Partitioning
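The sketch below illustrates a lookup directory with a local cache, using the user example from the text. The `LookupResolver` class is hypothetical, and a plain dictionary stands in for a directory that would normally live in a remote store; `directory_reads` exists only to show when a round-trip would have happened.

```python
from typing import Dict


class LookupResolver:
    """Resolve a key to its partition via a directory, caching each answer."""

    def __init__(self, directory: Dict[str, str]) -> None:
        self._directory = directory        # stand-in for a remote lookup map
        self._cache: Dict[str, str] = {}
        self.directory_reads = 0           # counts simulated round-trips

    def resolve(self, key: str) -> str:
        if key in self._cache:
            return self._cache[key]
        self.directory_reads += 1
        partition = self._directory[key]   # raises KeyError for unknown keys
        self._cache[key] = partition
        return partition


resolver = LookupResolver({
    "Harry": "G", "Ron": "G", "Hermione": "G",
    "Draco": "S", "Vincent": "S", "Gregory": "S",
})
```

Note that a cache like this must be invalidated whenever a key is moved to another partition; the sketch leaves that out.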

Hash Partitioning

Partitions are based on the value of a hash function, allowing you to evenly distribute data across n partitions. An example could be to take the tenant’s hash code modulo 3 to evenly distribute tenants across 3 partitions.

Hash Partitioning
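A minimal sketch of the modulo approach follows. It assumes tenants are identified by a string and uses an MD5 digest purely as a stable hash; Python’s built-in `hash()` is salted per process for strings, so it would route the same tenant differently after a restart. The function name and the default of 3 partitions are illustrative.

```python
import hashlib


def resolve_hash_partition(tenant_id: str, partition_count: int = 3) -> int:
    """Map a tenant to a partition index in [0, partition_count)."""
    if partition_count < 1:
        raise ValueError("partition_count must be at least 1")
    digest = hashlib.md5(tenant_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then take the modulo.
    return int.from_bytes(digest[:8], "big") % partition_count
```

One trade-off to keep in mind: with plain modulo hashing, changing `partition_count` reassigns most tenants, so adding a partition generally implies moving data.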

So which partitioning strategy is right for you? Range partitioning is generally useful in the context of dates, as it gives you an easy and natural mechanism for ageing out partitions. Lookup partitioning, on the other hand, allows you to group and organize unordered and unrelated sets of data in a natural way (e.g. group tenants by organization or states by geographic region). Hash partitioning is incredibly useful for load balancing when it is difficult to predict and manually balance the amount of data for particular groups or when range partitioning would cause the data to be undesirably clustered.

You don’t have to choose just one partitioning strategy either! A composite of these strategies can also be useful depending on the scenario – for example, a composite range-lookup partitioning may provide you both the manageability of range partitioning as well as the explicit control of lookup partitioning.

Composite Range-Lookup Partitioning
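One possible reading of a composite range-lookup scheme is sketched below: the month selects a range, and a per-month directory then places each tenant explicitly. The directory contents, tenant names, and collection names are all hypothetical.

```python
from datetime import date
from typing import Dict, Tuple

# Hypothetical directory: (year, month) -> {tenant -> collection}.
DIRECTORY: Dict[Tuple[int, int], Dict[str, str]] = {
    (2015, 1): {"contoso": "2015-01-a", "fabrikam": "2015-01-b"},
    (2015, 2): {"contoso": "2015-02-a", "fabrikam": "2015-02-a"},
}


def resolve_composite(tenant: str, day: date) -> str:
    """Pick the monthly range first, then look the tenant up inside it."""
    month_map = DIRECTORY[(day.year, day.month)]  # range step
    return month_map[tenant]                      # lookup step
```

In this sketch the two tenants were split across collections in January and share one in February, which shows the explicit control the lookup step adds on top of the monthly ranges.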

Fan-Out Operations

A common method for operating on data across multiple transactional domains is to issue a fan-out query, in which the application queries each partition in parallel and then consolidates the results.

Fan-Out Query
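The following sketch shows the shape of a fan-out query under a few assumptions: `query_partition` is a hypothetical callable that runs the query against one partition and returns its documents, and the results are consolidated by sorting on a caller-chosen key. A thread pool is used because the per-partition calls would typically be network-bound.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Iterable, List

Document = Dict[str, Any]


def fan_out_query(
    partitions: Iterable[str],
    query_partition: Callable[[str], List[Document]],
    sort_key: str,
) -> List[Document]:
    """Query every partition in parallel, then merge and sort the results."""
    names = list(partitions)
    with ThreadPoolExecutor(max_workers=max(1, len(names))) as pool:
        per_partition = list(pool.map(query_partition, names))
    merged = [doc for docs in per_partition for doc in docs]
    return sorted(merged, key=lambda doc: doc[sort_key])
```

If any single partition query raises, `pool.map` re-raises that exception when its result is consumed, so the caller sees the failure rather than a silently partial result.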

A primary architecture decision is when the fan-out occurs. Let’s use a news-feed aggregation timeline as an example; some common strategies for fan-out include:

Fan-Out on Write – The idea here is to avoid computation on reads and to do most of the processing on writes, making read time access extremely fast and easy. This may be a good decision if the timeline resembles something like Twitter – a roughly permanent, append-only, per-user aggregation of a set of source feeds. When an incoming event is recorded, it is stored in the source feed and then a record containing a copy is created for each timeline that it must be visible in.

Fan-Out on Read – This may be a good decision if the timeline resembles something like Facebook – in which the feed is temporal and needs to support dynamic features (such as real-time privacy checks and tailoring of content). When an event is recorded, it is only stored in the source feed. Every time a user requests their individual timeline, the system fans out reads to all of the source feeds the user has visibility into and aggregates the feeds together.
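The two strategies can be contrasted with a small in-memory sketch. The `FeedStore` class, the `followers` mapping (source feed to the users who can see it), and the `ts` field on events are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Any, Dict, List, Set

Event = Dict[str, Any]


class FeedStore:
    def __init__(self, followers: Dict[str, Set[str]]) -> None:
        self.followers = followers                      # source -> users who see it
        self.source_feeds: Dict[str, List[Event]] = defaultdict(list)
        self.timelines: Dict[str, List[Event]] = defaultdict(list)

    def publish_fan_out_on_write(self, source: str, event: Event) -> None:
        """Store the event, then copy it into every timeline that must show it."""
        self.source_feeds[source].append(event)
        for user in self.followers.get(source, ()):
            self.timelines[user].append(event)

    def publish_fan_out_on_read(self, source: str, event: Event) -> None:
        """Store the event in the source feed only."""
        self.source_feeds[source].append(event)

    def read_precomputed(self, user: str) -> List[Event]:
        """Fan-out on write: the timeline is already materialized."""
        return list(self.timelines[user])

    def read_fan_out(self, user: str) -> List[Event]:
        """Fan-out on read: gather every visible source feed and merge by time."""
        events: List[Event] = []
        for source, users in self.followers.items():
            if user in users:
                events.extend(self.source_feeds[source])
        return sorted(events, key=lambda event: event["ts"])
```

The cost simply moves: `publish_fan_out_on_write` does one write per follower, while `read_fan_out` does one read per source feed on every request.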

Managing Storage and Throughput

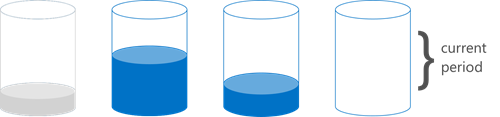

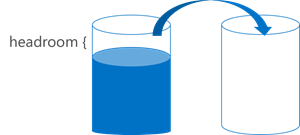

When a partition begins to fill up and run out of resources, it is time to spill over or redistribute data to another partition. This may occur when an outlying tenant is consuming more storage and/or throughput than expected, or when the application is simply outgrowing the existing partitions. You will want to monitor resource consumption and pick an appropriate threshold to act upon (e.g. 80% of storage consumed), based on the rate at which resources are consumed, to prevent the possibility of application failure.

Maintain enough headroom before having to spill-over/re-distribute data to avoid service-interruption.
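As a rough sketch of threshold monitoring, the helpers below flag partitions at or above a usage threshold and estimate the remaining headroom from a growth rate. The function names, the units, and the idea that usage figures are collected elsewhere are assumptions; how you obtain the numbers depends on your monitoring setup.

```python
from typing import Dict, List


def partitions_needing_action(
    used: Dict[str, float],
    capacity: Dict[str, float],
    threshold: float = 0.8,
) -> List[str]:
    """Return the partitions whose usage ratio has reached the threshold."""
    return sorted(
        name for name, amount in used.items()
        if amount / capacity[name] >= threshold
    )


def days_until_full(used: float, capacity: float, daily_growth: float) -> float:
    """Estimate the headroom in days at a constant growth rate."""
    if daily_growth <= 0:
        return float("inf")
    return max(0.0, capacity - used) / daily_growth
```

A faster growth rate argues for a lower threshold: a partition at 8 of 10 units that grows by 0.5 units a day leaves about four days to react.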

One strategy to overcome storage constraints may be to spill over incoming data into a new collection. For example, spill-over may work well if your collections store highly temporal data and are partitioned by month or date. In this context, you could even consider pruning or archiving older collections as they age and become stale. It’s important to keep in mind that collections are the boundary for transactions and queries; you may have to fan out your reads or writes if dealing with data across collections.
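Spill-over by month can be sketched as follows. The `MonthlySpillOver` class is hypothetical, and in-memory lists stand in for collections; a write to a month that has no collection yet creates one, and `prune` drops everything except the most recent months.

```python
from datetime import date
from typing import Any, Dict, List

Document = Dict[str, Any]


class MonthlySpillOver:
    def __init__(self, keep_months: int) -> None:
        if keep_months < 1:
            raise ValueError("keep_months must be at least 1")
        self.keep_months = keep_months
        self.collections: Dict[str, List[Document]] = {}

    def write(self, doc: Document, day: date) -> str:
        """Append to the collection for the document's month, creating it if needed."""
        name = f"{day.year:04d}-{day.month:02d}"
        self.collections.setdefault(name, []).append(doc)
        return name

    def prune(self) -> List[str]:
        """Remove all but the newest keep_months collections; return the removed names."""
        # Zero-padded names sort chronologically.
        stale = sorted(self.collections)[:-self.keep_months]
        for name in stale:
            del self.collections[name]
        return stale
```

In a real application `prune` would archive the data before deleting it, if the older months still need to be retrievable.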

Another strategy to overcome storage and/or throughput constraints is to re-distribute your tenant data. For example, you could move an outlying tenant that consumes a lot more throughput than others to another collection. You may want to fan-out reads and/or writes during the migration process to avoid service interruption for the particular tenant.
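One way to keep a tenant available while it is being moved is sketched below: new writes go to the target collection, reads check the target first and fall back to the source, and documents are moved in batches. The `TenantMigration` class, the `tenantId` property, and the dictionaries standing in for collections are assumptions for illustration only.

```python
from typing import Any, Dict, Optional

Document = Dict[str, Any]
Collection = Dict[str, Document]  # document id -> document


class TenantMigration:
    def __init__(self, tenant_id: str, source: Collection, target: Collection) -> None:
        self.tenant_id = tenant_id
        self.source = source
        self.target = target

    def write(self, doc: Document) -> None:
        """During the migration, all new writes land in the target collection."""
        self.target[doc["id"]] = doc

    def read(self, doc_id: str) -> Optional[Document]:
        """Fan out the read: prefer the target, fall back to the source."""
        doc = self.target.get(doc_id)
        return doc if doc is not None else self.source.get(doc_id)

    def move_batch(self, batch_size: int) -> int:
        """Move up to batch_size of the tenant's documents; return how many moved."""
        ids = [
            doc_id for doc_id, doc in self.source.items()
            if doc.get("tenantId") == self.tenant_id
        ][:batch_size]
        for doc_id in ids:
            doc = self.source.pop(doc_id)
            # Never overwrite a newer version already written to the target.
            self.target.setdefault(doc_id, doc)
        return len(ids)
```

Once `move_batch` returns 0, the tenant’s data lives entirely in the target and the fallback read can be retired.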

Conclusion

DocumentDB has proven to scale for various multi-tenant scenarios here within Microsoft, including MSN which stores user data for Health & Fitness experiences (web and mobile) in a sharded database account. Check out the blog post on this use case!

As with most things, the design for your application will depend heavily on its scenarios and data access patterns. Hopefully this article will serve as a great starting point to guide you in the right direction.

To learn how to get started with DocumentDB or for more information, please check out our website.

For more information on developing multi-tenant applications on Microsoft Azure in general, check out this great MSDN article.