SSIS Package Data flow Execution Slowness Troubleshooting

Hi all,

In this blog, we are covering "SQL SSIS package data flow execution slowness" troubleshooting guidelines. The approach mentioned in the blog can be used for reference while troubleshooting SSIS package data load performance issues.

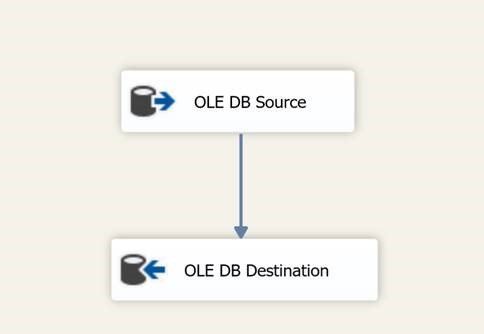

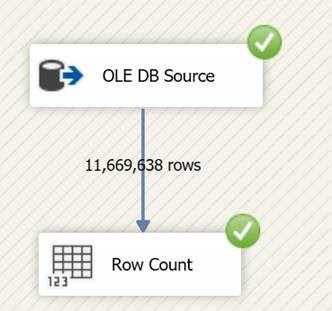

Consider a simple SSIS data flow task, which is selecting the data from the OLE DB source and inserts the rows to the destination table using OLE DB destination task.

For data flow execution slowness issues, its essential to narrow down which task in data flow is slow. The issue could be at:

OLE DB source

Transformation task

OLE DB destination

To identify if the Source is causing slowness:

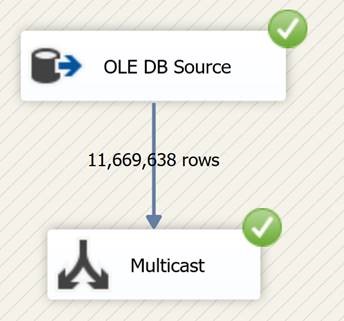

To check if the issue is with the source or not, replace OLE DB destination task with Multicast task and check the execution time. Multicast task Distributes every input row to every row in one or more outputs. For example, branch your data flow to make a copy of data so that some values can be masked before sharing with external partners. Record the execution time using SSIS execution logs.

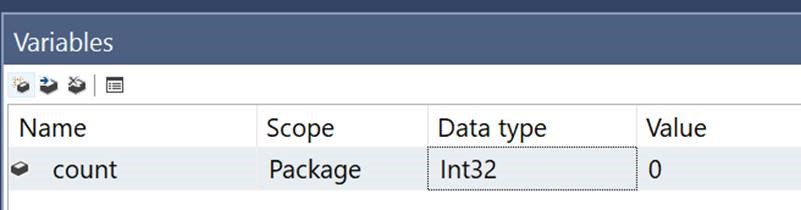

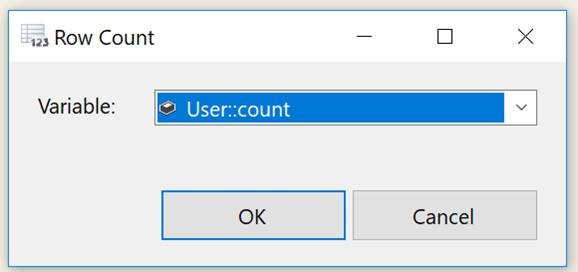

Another way to check the slowness is at Source or not, use Row count task. Create a user defined variable with Int data type and execute the package. Record the execution time and compare with the execution time with OLE DB destination task.

If The package execution with Row Count task takes more time to execute, then review the source database for performance bottlenecks. Review the execution time of the select when the package is executing on the source server.

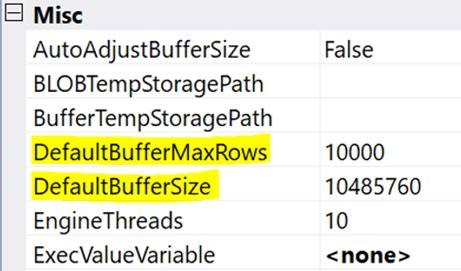

The data flow engine uses a buffer-oriented architecture to efficiently load and to manipulate datasets in memory. Reviewing the properties of the Data flow task has the below options:

DefaultBufferMaxRows, default value is 10000.

DefaultBufferSize, default value is 10 MB.

The data flow engine begins the task of sizing its buffers by calculating the estimated size of a single row of data. Then it multiplies the estimated size of a row by the value of DefaultBufferMaxRows to obtain a preliminary working value for the buffer size. Hence reduce your Estimated Row Size as much as possible by removing any unnecessary columns and configuring data types correctly.

- If the result is more than the value of DefaultBufferSize, the engine reduces the number of rows.

- If the result is less than the internally-calculated minimum buffer size, the engine increases the number of rows.

- If the result falls between the minimum buffer size and the value of DefaultBufferSize, the engine sizes the buffer as close as possible to the estimated row size times the value of DefaultBufferMaxRows.

Before adjusting the sizing of the buffers, improvement can be achieved to great extent by reducing the size of each row of data by removing unneeded columns and by configuring data types appropriately.

When sufficient memory is available, smaller number of large buffers provide better performance by reducing the total number of buffers required to hold the data, and by fitting as many rows of data into a buffer as possible. To determine the optimum number of buffers and their size, experiment with the values of DefaultBufferSize and DefaultBufferMaxRows while monitoring performance. Do not increase buffer size to the point where paging to disk starts to occur. Paging to disk hinders performance more than a buffer size that has not been optimized.

To identify if the Destination is causing slowness:

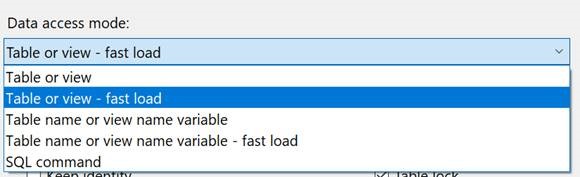

With the steps mentioned in above section, if execution of the data flow execution takes much less time in fetching the data from the source, then the focus should be to tune the destination. Tuning OLE DB destination involves, reviewing the Performance on destination server for any bottlenecks during data load, check for lock blocking, review wait types etc.

In the OLE DB destination task, review if changing the data access to mode to “Table or View- fast load” improves the performance.

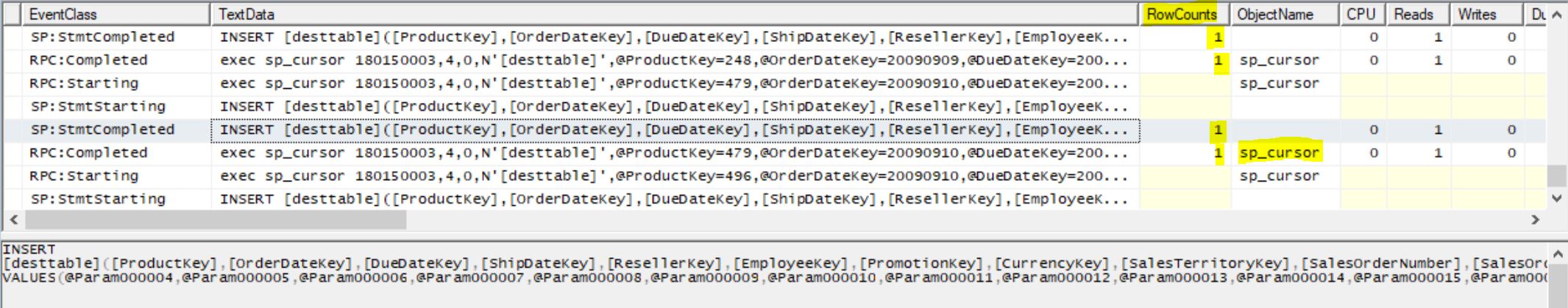

When Table or view data access mode is used, SQL uses sp_cursor to insert the rows on destination table. As per the screenshot, 1 row is inserted on destination at a time.

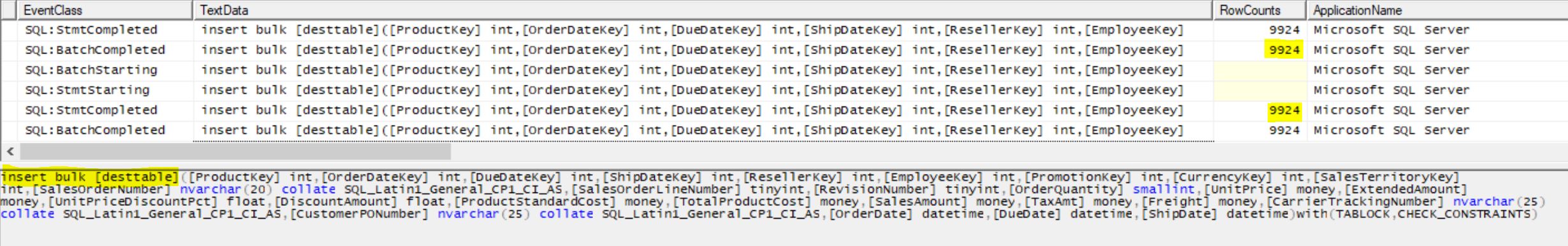

When "Table or view - fast load" data access method is used, SQL uses insert bulk command to insert the data on destination table. Review the RowCounts column.

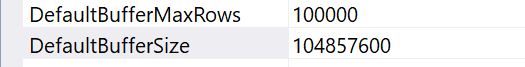

Modifying DefaultBufferMaxRows and DefaultBufferSize has an impact on the rows transferred in insert bulk command. As per below, when DefaultBufferMaxRows value is changed to 100000 and DefaultBufferSize to 100MB, SQL inserted 99879 rows in one batch.

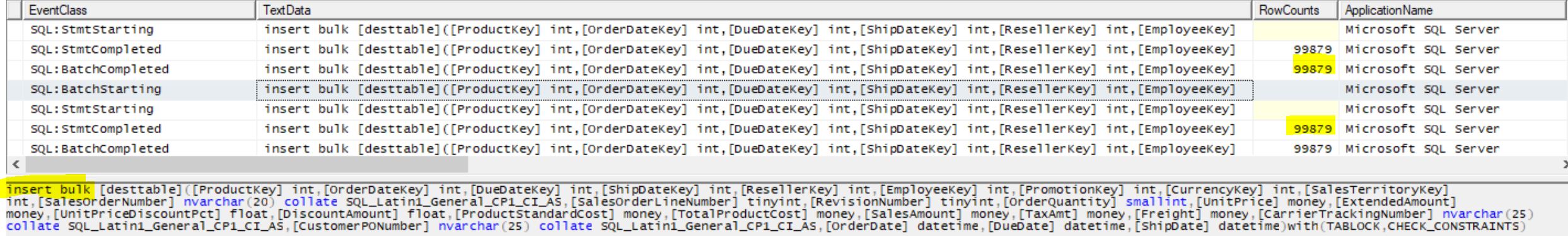

SSIS has a transformation task called the Balanced Data Distributor (BDD) which provides an easy way to make use of multi-processor and multi-core servers by introducing parallelism in the data flow of an SSIS package. Balanced Data distributor task can be used which evaluates and directs rows in a dataset to multiple destinations. Evaluate the SSIS package execution with BDD task and check the execution time for improvement. BDD can be downloaded from https://www.microsoft.com/en-us/download/details.aspx?id=4123

Here the number of rows is split across 4 OLE DB destination tasks to achieve parallelism.

Review the logic of the SSIS data load, if delta inserts are performed or complete reload of the table is done. In complete reload scenarios, we have seen performance gain by dropping Indexes before data load process and by creating them post data load. This will improve in scenarios where Index insert is causing an overhead impacting the performance.

Post implementing the above steps mentioned, post eliminating source and destination bottlenecks, evaluate the network from source to the destination. Check if the SSIS package is being called from an application server. Review if the Network packets are getting dropped/re transmit of Network packets which can cause major performance issues.

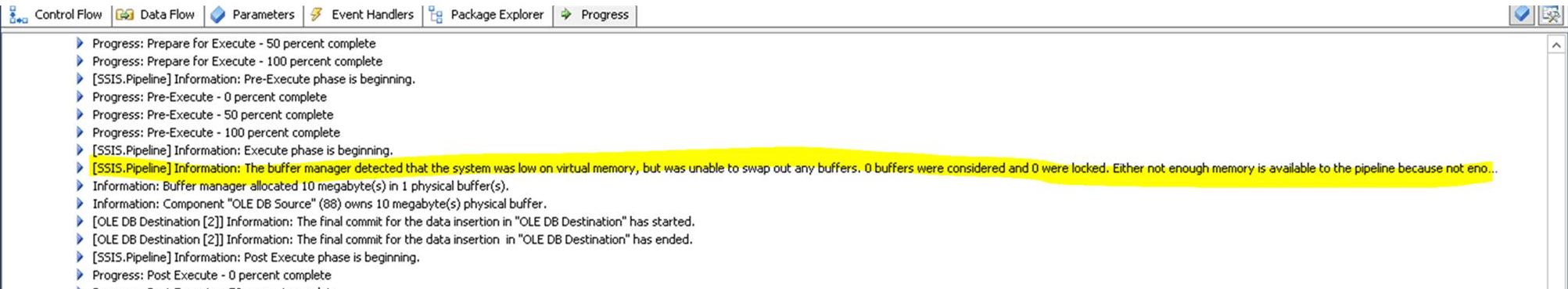

32-bit execution mode of SSIS package has process virtual memory address limitation.

With adequate memory, it’s possible to get as many records into a buffer with fewer but larger buffers, by tweaking DefaultMaxBufferRows and DefaultBufferSize appropriately. Please note that changing package execution mode to 64-bit would require updating the data providers users to connect to the source and destination. Please review if the source/destination is compatible with 64-bit providers and check if the package can be migrated to 64-bit.

Check if changing the provider at the source/destination has any impact on the data flow task. We have seen performance improvements in data flow, when Microsoft Attunity provider for Oracle is used for Oracle data source. So, check with different providers available and check if they improve the performance.

Hope the above steps mentioned help you in troubleshooting SSIS package execution slowness issues.

Please share your feedback, questions and/or suggestions.

Thanks,

Don Rohan Castelino | Premier Field Engineer | Microsoft

Disclaimer: All posts are provided AS IS with no warranties and confer no rights. Additionally, views expressed here are my own and not those of my employer, Microsoft.