Protocol Buffers and WCF

WCF performance has many aspects. In the previous series I explored how GZip/Deflate compression can increase performance when network bandwidth is the limiting factor. The penalty is much higher CPU utilization, so compression does not suit everyone's situation.

Instead of compressing messages after they are built, it would be better to build smaller messages in the first place. Google has a lightweight, fast format for serializing objects called Protocol Buffers. The specification for Protocol Buffers is publicly available, and there is a .NET implementation called protobuf-net.

Other large companies use similar lightweight serialization formats, and none of them are meant to be standards for information interchange. But neither is Microsoft's binary serialization format: binary serialization in WCF is meant for cases where you have WCF at both ends of the call. Even so, it is not as lightweight as Protocol Buffers for one very important reason: Microsoft's binary format encodes an XML infoset. Think of it as a binary XML format more than anything else.
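
To make the difference concrete, here is a minimal sketch of how the Protocol Buffers wire format encodes a single integer field, written by hand from the public encoding specification. The `WireSketch` class and its methods are hypothetical names for illustration only; they are not part of protobuf-net, which does all of this for you.

```csharp
using System;
using System.Collections.Generic;

public static class WireSketch
{
    // Wire type 0 marks a varint-encoded value in the Protocol Buffers spec.
    private const ulong VarintWireType = 0;

    // Writes a base-128 varint: 7 bits per byte, least significant group
    // first, with the high bit set on every byte except the last.
    public static void WriteVarint(List<byte> output, ulong value)
    {
        while (value >= 0x80)
        {
            output.Add((byte)((value & 0x7F) | 0x80));
            value >>= 7;
        }
        output.Add((byte)value);
    }

    // Encodes one integer field as a key (field number plus wire type)
    // followed by the value. No element names are written.
    public static byte[] EncodeVarintField(int fieldNumber, ulong value)
    {
        var output = new List<byte>();
        WriteVarint(output, ((ulong)fieldNumber << 3) | VarintWireType);
        WriteVarint(output, value);
        return output.ToArray();
    }

    public static void Main()
    {
        // Field 1 holding the value 150 takes three bytes in total.
        Console.WriteLine(BitConverter.ToString(EncodeVarintField(1, 150)));
        // prints "08-96-01"
    }
}
```

The field is identified by a number rather than by a name, which is a large part of why the messages stay small compared to anything built on an XML infoset.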

Luckily, the protobuf-net implementation is already set up to work with WCF. The protocol buffers serializer essentially replaces the DataContractSerializer used by WCF. For this implementation, we simply need to add the custom attributes that protobuf-net requires to our existing DataContracts.

    using System;                        // DateTime
    using System.Runtime.Serialization;  // DataContract, DataMember
    using ProtoBuf;                      // ProtoContract, ProtoMember

    [DataContract]
    [ProtoContract]
    public partial class Order
    {
        // Each member carries both the WCF attribute and the protobuf-net attribute.
        [DataMember(Order = 0)]
        [ProtoMember(1)]
        public int CustomerID;

        [DataMember(Order = 1)]
        [ProtoMember(2)]
        public string ShippingAddress1;

        [DataMember(Order = 2)]
        [ProtoMember(3)]
        public string ShippingAddress2;

        [DataMember(Order = 3)]
        [ProtoMember(4)]
        public string ShippingCity;

        [DataMember(Order = 4)]
        [ProtoMember(5)]
        public string ShippingState;

        [DataMember(Order = 5)]
        [ProtoMember(6)]
        public string ShippingZip;

        [DataMember(Order = 6)]
        [ProtoMember(7)]
        public string ShippingCountry;

        [DataMember(Order = 7)]
        [ProtoMember(8)]
        public string ShipType;

        [DataMember(Order = 8)]
        [ProtoMember(9)]
        public string CreditCardType;

        [DataMember(Order = 9)]
        [ProtoMember(10)]
        public string CreditCardNumber;

        [DataMember(Order = 10)]
        [ProtoMember(11)]
        public DateTime CreditCardExpiration;

        [DataMember(Order = 11)]
        [ProtoMember(12)]
        public string CreditCardName;

        [DataMember(Order = 12)]
        [ProtoMember(13)]
        public OrderLine[] OrderItems;
    }

    [DataContract]
    [ProtoContract]
    public partial class OrderLine
    {
        [DataMember(Order = 0)]
        [ProtoMember(1)]
        public int ItemID;

        [DataMember(Order = 1)]
        [ProtoMember(2)]
        public int Quantity;
    }

The above shows the Order and OrderLine classes from the previous posts. There is nothing particularly weird here, except that it seems a bit redundant to have two sets of custom attributes that mean the same thing. The DataContract attributes could be removed if you want the classes to be easier to read. The Proto* attributes offer more options, which is why protobuf-net uses new attributes instead of sticking with DataContract/DataMember.
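
As a rough way to see the size difference outside of WCF, the following sketch serializes one OrderLine with protobuf-net and with the DataContractSerializer over .NET's binary XML writer, then prints both payload sizes. It assumes the protobuf-net package is referenced. The `SizeComparison` class is a hypothetical helper, and the binary XML size only approximates what the WCF binary encoder puts on the wire, since the real encoder also uses dictionaries and sessions.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;
using System.Xml;
using ProtoBuf;

[DataContract]
[ProtoContract]
public class OrderLine
{
    [DataMember(Order = 0)]
    [ProtoMember(1)]
    public int ItemID;

    [DataMember(Order = 1)]
    [ProtoMember(2)]
    public int Quantity;
}

// Hypothetical helper for illustration; not part of the original sample.
public static class SizeComparison
{
    // Number of bytes protobuf-net writes for one OrderLine.
    public static long ProtoSize(OrderLine line)
    {
        using (var stream = new MemoryStream())
        {
            Serializer.Serialize(stream, line);
            return stream.Length;
        }
    }

    // Number of bytes DataContractSerializer writes through the binary XML writer.
    public static long BinaryXmlSize(OrderLine line)
    {
        using (var stream = new MemoryStream())
        {
            var serializer = new DataContractSerializer(typeof(OrderLine));
            XmlDictionaryWriter writer = XmlDictionaryWriter.CreateBinaryWriter(stream);
            serializer.WriteObject(writer, line);
            writer.Flush(); // push buffered bytes into the stream before measuring
            return stream.Length;
        }
    }

    public static void Main()
    {
        var line = new OrderLine { ItemID = 42, Quantity = 3 };
        Console.WriteLine("protobuf-net: {0} bytes", ProtoSize(line));
        Console.WriteLine("binary XML:   {0} bytes", BinaryXmlSize(line));
    }
}
```

The binary XML payload still has to carry the element names and namespace of the contract, while the protobuf payload carries only field numbers and values.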

To use the protocol buffers serializer in place of the DataContractSerializer, just add the endpoint behavior:

    ServiceEndpoint endpoint = this.serviceHost.AddServiceEndpoint(
        typeof(IOrderService), binding, "OrderService");
    endpoint.Behaviors.Add(new ProtoEndpointBehavior());
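
The snippet above covers the service host. Both ends of the call have to agree on the serializer, so the client endpoint presumably needs the same behavior. A minimal sketch of the client side follows, assuming a `ChannelFactory` over `NetTcpBinding`. The `IOrderService` contract shown here, the `ClientSetup` class, and the address are hypothetical stand-ins, not the code used for the measurements.

```csharp
using System;
using System.Runtime.Serialization;
using System.ServiceModel;
using ProtoBuf;
using ProtoBuf.ServiceModel;

[DataContract]
[ProtoContract]
public class OrderLine
{
    [DataMember(Order = 0)]
    [ProtoMember(1)]
    public int ItemID;

    [DataMember(Order = 1)]
    [ProtoMember(2)]
    public int Quantity;
}

// Hypothetical contract; the real IOrderService is not shown in this post.
[ServiceContract]
public interface IOrderService
{
    [OperationContract]
    void AddLine(OrderLine line);
}

public static class ClientSetup
{
    public static ChannelFactory<IOrderService> CreateFactory(string address)
    {
        var factory = new ChannelFactory<IOrderService>(
            new NetTcpBinding(), new EndpointAddress(address));

        // Same behavior as on the host, so both sides use protobuf-net.
        factory.Endpoint.Behaviors.Add(new ProtoEndpointBehavior());
        return factory;
    }

    public static void Main()
    {
        // Assumed address; nothing is opened or sent here.
        var factory = CreateFactory("net.tcp://localhost:8000/OrderService");
        Console.WriteLine(factory.Endpoint.Behaviors.Contains(typeof(ProtoEndpointBehavior)));
        factory.Close();
    }
}
```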

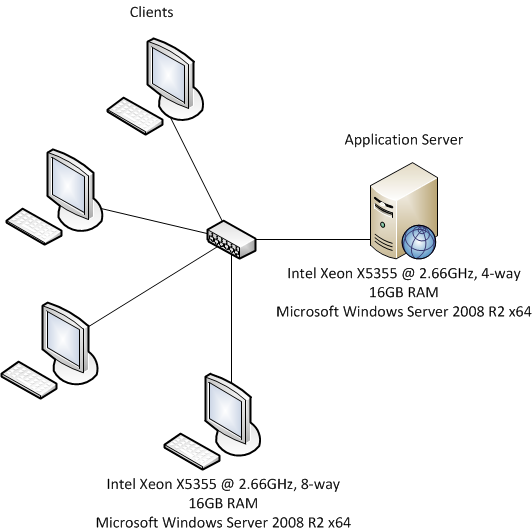

The performance tests on the protobuf-net site didn't really cover the WCF scenario. So I'm hoping this will fill in the gap for people interested in protocol buffers. The environment is the same as used before:

We have four client machines talking to a server over a 1 Gb network. In each of the tests, the CPU utilization reached 98% or higher, so network bandwidth was not the bottleneck. The transport channel is TCP. The measurements were taken on post-.NET 4.0 bits, which are essentially equivalent to the .NET 4.0 RTM bits.

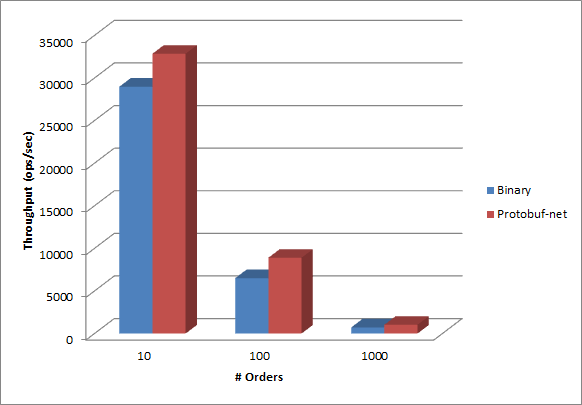

There is a clear performance increase over binary at every message size. The throughput and network utilization numbers break down as follows:

| # Orders | Binary throughput | Binary network utilization | Protobuf-net throughput | Protobuf-net network utilization | Throughput increase |
|---|---|---|---|---|---|
| 10 | 28978 | 37.80% | 32847 | 30.80% | 13.35% |
| 100 | 6509 | 80.20% | 8904 | 77.90% | 36.80% |
| 1000 | 722 | 88.70% | 1036 | 90.90% | 43.49% |

While the numbers do not show as dramatic an improvement as those on the protobuf-net site, there is a significant gain here, especially as the messages get larger. In the 1000 Order case, more than 40% more messages are sent with only about a 2-point increase in network utilization. The messages used in the sample are similar to the Northwind example and may not be ideal for taking advantage of protocol buffers.
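
For anyone who wants to check the last column of the table, the throughput increase is simply the protobuf-net figure minus the binary figure, divided by the binary figure. The short sketch below recomputes it from the measured values; the `ThroughputMath` class is just a hypothetical name for the example.

```csharp
using System;
using System.Globalization;

public static class ThroughputMath
{
    // Relative gain of protobuf-net over binary, as a percentage.
    public static double PercentIncrease(int binary, int protobuf)
    {
        return (protobuf - binary) * 100.0 / binary;
    }

    public static void Main()
    {
        int[] orders = { 10, 100, 1000 };
        int[] binary = { 28978, 6509, 722 };
        int[] protobuf = { 32847, 8904, 1036 };

        for (int i = 0; i < orders.Length; i++)
        {
            Console.WriteLine(string.Format(
                CultureInfo.InvariantCulture,
                "{0} orders: {1:F2}%",
                orders[i],
                PercentIncrease(binary[i], protobuf[i])));
        }
        // prints:
        // 10 orders: 13.35%
        // 100 orders: 36.80%
        // 1000 orders: 43.49%
    }
}
```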

One drawback of using protocol buffers is that you can only serialize object trees and not object graphs, so shared or circular references between objects are not preserved. This doesn't seem like too big of a deal though.
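
To illustrate what the tree restriction means in practice, here is a small sketch that round-trips an object holding two references to the same child. It assumes protobuf-net's default settings. The `Address` and `Shipment` types and the `TreeVersusGraph` class are hypothetical and exist only for this example.

```csharp
using System;
using System.IO;
using ProtoBuf;

[ProtoContract]
public class Address
{
    [ProtoMember(1)]
    public string City;
}

[ProtoContract]
public class Shipment
{
    [ProtoMember(1)]
    public Address Billing;

    [ProtoMember(2)]
    public Address Shipping;
}

public static class TreeVersusGraph
{
    // Serializes to memory and reads the result back into a new object.
    public static Shipment RoundTrip(Shipment shipment)
    {
        using (var stream = new MemoryStream())
        {
            Serializer.Serialize(stream, shipment);
            stream.Position = 0;
            return Serializer.Deserialize<Shipment>(stream);
        }
    }

    public static void Main()
    {
        var shared = new Address { City = "Redmond" };
        var before = new Shipment { Billing = shared, Shipping = shared };
        var after = RoundTrip(before);

        // Before serialization, both members point at one object.
        Console.WriteLine(ReferenceEquals(before.Billing, before.Shipping));

        // After the round trip the address was written twice, once per member,
        // so the two members are expected to be separate copies.
        Console.WriteLine(ReferenceEquals(after.Billing, after.Shipping));
        Console.WriteLine(after.Billing.City == after.Shipping.City);
    }
}
```

The data survives, but the identity of the shared object does not. For messages like Order, where every child belongs to exactly one parent, that makes no difference.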