In some disaster recovery scenarios, a database needs to be restored to a point in time before a particular operation has occurred, e.g. before a user mistakenly dropped a table, ran a DELETE or UPDATE with no WHERE clause, etc. If the database is in the full recovery mode and the log backup chain is not broken, then this is can be achieved by using the RESTORE LOG command with the STOPAT clause. The STOPAT clause is used to specify a point in time when the recovery should stop during log restore. In this case, this would be the time immediately before the transaction containing the erroneous DROP TABLE command is committed. The problem is, frequently you do not know the specific point in time when the user made the mistake. On one hand, to minimize data loss, you need to stop the recovery immediately before the transaction commit. On the other hand, if you overshoot that point in time when restoring the log, you need to start the restore sequence from scratch, restoring the latest full backup, differential backups (if any), and a set of transaction log backups. This may have to be repeated several times, until you arrive at the exact STOPAT time by trial and error. Needless to say, this approach may not be realistic, particularly for large databases.

Luckily, in many cases there is a way to determine the precise point where the restore should stop. This approach may be difficult to implement in some cases, particularly on systems with a lot of write activity, but when it does work, it can save you a lot of time during disaster recovery, while minimizing data loss.

I am going to assume that the transaction log containing the transaction with the user error operation has been backed up – if that is not the case, you will need to perform the tail-log backup before starting with the steps below.

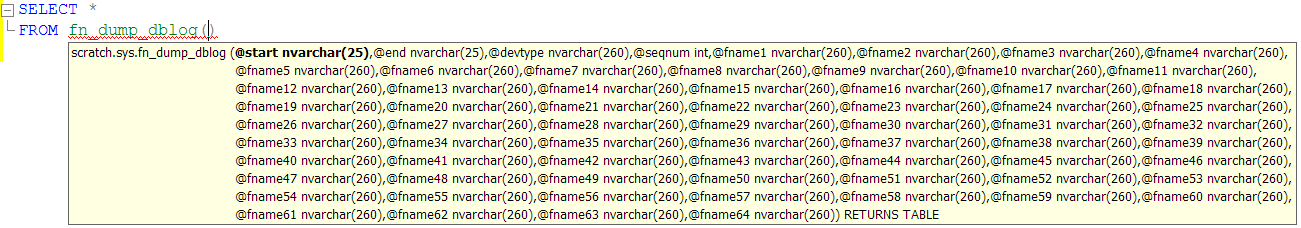

The approach is to use the undocumented fn_dump_dblog() table-valued function, which provides a rowset over the contents of a transaction log backup file (I described the function in an earlier blog post). Each row in the function’s result set represents a log record in the log backup file specified as the function argument. The first column in the result set is named Current LSN, and represents the Log Sequence Number of each log record in the backup file. The RESTORE LOG command has the STOPBEFOREMARK clause, which similarly to the STOPAT clause allows the recovery to be stopped immediately before a particular LSN (in SQL Server 2005 and later). Therefore, if we can determine the LSN associated with the commit of the transaction that included the erroneous command, we can specify that value in the STOPBEFOREMARK clause, and thus restore the database to a point in time immediately before the disaster occurred. The difficulty here is that it may not be easy or even possible to find the erroneous command in the large result set returned by the fn_dump_dblog() function, particularly if the database had a lot of write activity when the erroneous command was run.

The sample script below demonstrates the approach.

-- Create test database, switch it to full recovery model

CREATE DATABASE PointInTimeRestore;

GO

USE PointInTimeRestore;

GO

ALTER DATABASE PointInTimeRestore SET RECOVERY FULL;

GO

— Create the initial full backup

BACKUP DATABASE PointInTimeRestore

TO DISK = ‘C:\MSSQLData\MSSQL10.SS2008\MSSQL\Backup\PointInTimeRestore.bak’;

GO

— Create test table, insert sample data

CREATE TABLE Table1

(

Col1 INT PRIMARY KEY

);

GO

INSERT INTO Table1 (Col1)

VALUES (1), (2), (3);

GO

— Simulate user error and drop the table

DROP TABLE Table1;

GO

— Backup the log containing the erroneous command

BACKUP LOG PointInTimeRestore

TO DISK = ‘C:\MSSQLData\MSSQL10.SS2008\MSSQL\Backup\PointInTimeRestore.trn’;

GO

— Change database context to allow restore of the test database

USE tempdb;

GO

— Restore the database, but do not run recovery to be able to restore transaction logs

RESTORE DATABASE PointInTimeRestore

FROM DISK = ‘C:\MSSQLData\MSSQL10.SS2008\MSSQL\Backup\PointInTimeRestore.bak’

WITH REPLACE, NORECOVERY;

GO

— Examine log backup contents to find the LSN corresponding to the DROP TABLE transaction

SELECT *

FROM fn_dump_dblog

(

DEFAULT, DEFAULT, DEFAULT, DEFAULT,

‘C:\MSSQLData\MSSQL10.SS2008\MSSQL\Backup\PointInTimeRestore.trn’,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT,

DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT, DEFAULT

);

GO

/*

In this case, the log record corresponding to the commit of the transaction

that dropped the Table1 table can be easily found in the result set – the

Operation column for the row is LOP_COMMIT_XACT, and the value in the

Transaction ID column corresponds to the value in the Transaction ID column for

an earlier row with Operation LOP_BEGIN_XACT and Transaction Name DROPOBJ.

We also happen to know that this is the only table that was dropped at the time

in the End Time column.

The LSN in the Current LSN column is 0000002a:00000074:0036.

*/

/*

Restore log to the point in time just before the table was dropped.

Note that we need to prepend 0x to the LSN value –

will get the “The named mark does not identify a valid LSN” an error otherwise.

*/

RESTORE LOG PointInTimeRestore

FROM DISK = ‘C:\MSSQLData\MSSQL10.SS2008\MSSQL\Backup\PointInTimeRestore.trn’

WITH STOPBEFOREMARK = ‘lsn:0x0000002a:00000074:0036’;

GO

— Confirm that the table is again present in the database

USE PointInTimeRestore;

GO

SELECT *

FROM Table1;

© 2019 Microsoft. All rights reserved.