A Microsoft DevSecOps Static Application Security Testing (SAST) Exercise

Static Application Security Testing (SAST) is a critical DevSecOps practice. As engineering organizations accelerate continuous delivery to impressive levels, it’s important to ensure that continuous security validation keeps up. To do so most effectively requires a multi-dimensional application of static analysis tools. The more customizable the tool, the better you can shape it to your actual security risk.

Static Analysis That Stays Actionable and Within Budget: A Formula

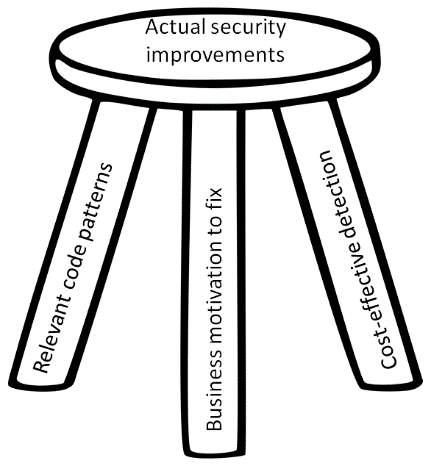

Let’s agree that we employ static analysis in good faith to improve security (and not, for example, to satisfy a checklist of some kind). With that grounding, static analysis is like a tripod. It needs three legs to stand:

- Relevant patterns.

- Cost-effective mechanisms to detect and assess.

- Business motivation to fix.

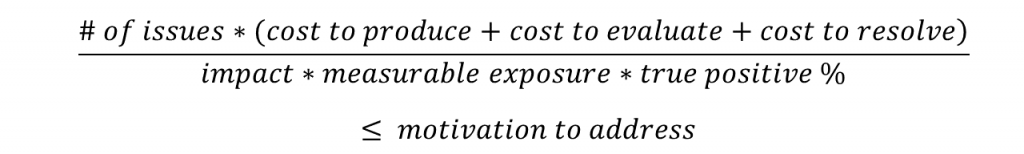

Here’s a formula that captures a healthy static analysis process. Has this alert ever gone red for you? The red mostly materializes in faces. The (hardly lukewarm) language that spews from mouths is blue.

This formula expresses the experience that value delivered is only in fixes for discovered issues. Everything else is waste. If we can correlate (or restrict) findings to those that lead to actual product failures, for example, we should see greater uptake.

Too many results overwhelm. Success is more likely with fewer false positives (reports that don’t flag a real problem). We can increase return on investment by looking for improvements in each formula variable. If the cost to resolve a result is high, that may argue for improving tool output to make it clearer and more actionable.
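The formula itself appears only in the figure, so what follows is a rough sketch of one way to read the argument, not the author's formula. It assumes four hypothetical variables (result count, true positive rate, cost per result, and budget expressed as hours the team is motivated to spend) and shows how improving any of them changes the fixes delivered versus the effort wasted.

```python
from dataclasses import dataclass


@dataclass
class AnalysisRun:
    """Hypothetical summary of one analysis run; every field is an assumption."""
    results: int               # total results reported by the tool
    true_positive_rate: float  # fraction of results that flag a real problem
    cost_per_result: float     # average hours to investigate and resolve one result
    budget: float              # hours the team is motivated to spend on results


def results_worked(run: AnalysisRun) -> float:
    """How many results the team gets through before the budget runs out."""
    return min(run.results, run.budget / run.cost_per_result)


def fixes_delivered(run: AnalysisRun) -> float:
    """Value: only worked results that are real problems turn into fixes."""
    return results_worked(run) * run.true_positive_rate


def wasted_hours(run: AnalysisRun) -> float:
    """Waste: hours spent on worked results that turned out to be false positives."""
    return results_worked(run) * (1 - run.true_positive_rate) * run.cost_per_result


if __name__ == "__main__":
    noisy = AnalysisRun(results=200, true_positive_rate=0.2, cost_per_result=1.0, budget=40.0)
    tuned = AnalysisRun(results=30, true_positive_rate=0.8, cost_per_result=0.5, budget=40.0)
    for name, run in (("noisy", noisy), ("tuned", tuned)):
        print(name, round(fixes_delivered(run), 1), round(wasted_hours(run), 1))
```

With the same 40-hour budget, the tuned run in this made-up example reports far fewer results yet delivers roughly three times as many fixes, because fewer hours go to false positives and each result is cheaper to resolve.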

I’ve encoded security budget as ‘motivation to address’ (and not in terms of dollars or developer time) to make the point that a team’s capacity expands and contracts in proportion to the perceived value of results. A false positive that stalls a release is like a restaurant meal that makes you sick: it is much discussed and rarely forgotten. The indigestion colors a team’s motivation to investigate the next problem report.

A report that clearly prevents a serious security problem is a different story entirely and increases appetite. To succeed, therefore, analysis should embody the DevSecOps principle that security effort be grounded in actual and not speculative risk.

Multiple Security Test Objectives: Unblock the Fast and Thorough

“I know your works; that you are neither cold nor hot. Would that you were cold or hot! But because you are lukewarm, and neither cold nor hot, I will spew you from my mouth.” – Revelation 3:15-16.

A core problem with realizing the DevSecOps vision is that our developer inner loop doesn’t provide relevant security data. It is designed, rather, for the highly accelerated addition of new functionality (which inevitably adds security risk). Tools-driven code review can help close the gap.

To ensure that continuous security validation is pulling its weight without slowing things down, I recommend to teams at Microsoft that they divide results into three groups:

- The ‘hot’: lightweight, low noise results that flag security problems of real concern, with low costs to understand and investigate. These results are pushed as early into the coding process as possible, typically blocking pull requests. Example find: a hard-coded credential in a source file.

- The ‘cold’: deep analysis that detects serious security vulnerabilities. These results are produced at a slower cadence. They cost more to investigate and fix and are undertaken as a distinct activity. Results are filed as issues which are prioritized, scheduled and resolved as usual. A high-severity warning might block a release, but it should not block development. Example find: a potential SQL injection issue with a complex data-flow from untrusted source to dangerous sink.

- The ‘lukewarm’: everything else. Spew these low-value results from your process mouth and recover the budget. Use the freed cycles to fund a ‘cold’ path analysis (if it doesn’t exist) or spend them on more feature work (if it does).
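The three groups above can be sketched as a small triage function. This is a minimal illustration, assuming each rule has a measured precision, a typical triage time, and a severity label. The `RuleProfile` fields and the threshold values are hypothetical and would need tuning against a team's own data.

```python
from dataclasses import dataclass
from enum import Enum


class Path(Enum):
    HOT = "hot"            # blocks pull requests
    COLD = "cold"          # filed as issues, reviewed at a slower cadence
    LUKEWARM = "lukewarm"  # dropped from the process


@dataclass
class RuleProfile:
    """Hypothetical per-rule statistics gathered from past results."""
    rule_id: str
    precision: float       # fraction of past results that were real problems
    triage_minutes: float  # typical time to understand one result
    severity: str          # "high", "medium" or "low"


def classify(rule: RuleProfile,
             min_hot_precision: float = 0.9,
             max_hot_minutes: float = 10.0,
             min_cold_precision: float = 0.5) -> Path:
    """Assign a rule to the hot, cold or lukewarm group (thresholds are assumptions)."""
    if rule.severity == "low":
        return Path.LUKEWARM
    # Hot: low noise and cheap to understand, so it can gate a pull request.
    if rule.precision >= min_hot_precision and rule.triage_minutes <= max_hot_minutes:
        return Path.HOT
    # Cold: serious and reasonably precise, but too costly to investigate inline.
    if rule.severity == "high" and rule.precision >= min_cold_precision:
        return Path.COLD
    return Path.LUKEWARM


if __name__ == "__main__":
    rules = [
        RuleProfile("hardcoded-credential", 0.95, 2, "high"),
        RuleProfile("sql-injection-dataflow", 0.60, 120, "high"),
        RuleProfile("noisy-style-check", 0.30, 5, "low"),
    ]
    for rule in rules:
        print(rule.rule_id, classify(rule).value)
```

Keeping the thresholds as parameters makes it easy to tighten the hot path if developers start to see noise in pull requests.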

Besides identifying security vulnerabilities that are actually exploitable in code (we want these!), the cold path yields insights for tuning hot path analysis to keep it focused on what counts. And if you use the proper tools, this practice can be used to bring high impact analysis back to the inner loop, by authoring new checks that codify the reviewer’s knowledge of security and your project’s attack surface area.
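As an illustration of a hot path check, here is a minimal sketch of a line-based scan for hard-coded credentials, the example find mentioned earlier. The pattern, the identifier list, and the `scan_source` helper are assumptions made for this example. A production check would need a far richer pattern set and suppression handling.

```python
import re

# Hypothetical pattern: a quoted literal assigned to a name that looks like a secret.
CREDENTIAL_PATTERN = re.compile(
    r"""
    (?P<name>\w*(?:password|passwd|secret|api_?key|token)\w*)  # suspicious identifier
    \s*[:=]\s*                                                 # assignment or key/value separator
    (?P<quote>["'])(?P<value>[^"']{4,})(?P=quote)              # quoted literal, 4+ characters
    """,
    re.IGNORECASE | re.VERBOSE,
)


def scan_source(text, path="<memory>"):
    """Return (path, line number, identifier) for each suspicious assignment."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        match = CREDENTIAL_PATTERN.search(line)
        # Skip template placeholders such as "${DB_PASSWORD}", which are not literals.
        if match and not match.group("value").startswith("${"):
            findings.append((path, line_number, match.group("name")))
    return findings


if __name__ == "__main__":
    sample = (
        'user = "svc"\n'
        'db_password = "hunter2!"\n'
        'token = os.environ["TOKEN"]\n'
    )
    for finding in scan_source(sample, "settings.py"):
        print(finding)
```

A check like this is cheap to run and easy to understand when it fires, which is what makes it a candidate for blocking pull requests.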

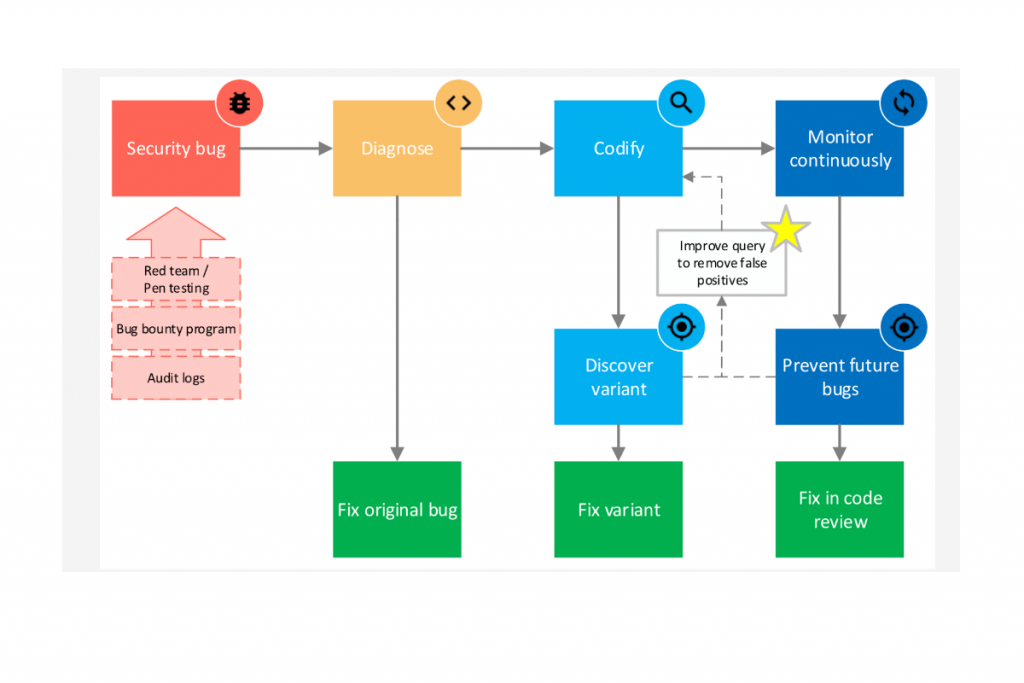

A successful cold path workflow first finds and fixes the original bug, then finds and fixes variants, and then monitors to prevent recurrence. Let’s look at a concrete example of how this works at Microsoft.

An MSRC Secure Code Review Case Study

The Microsoft Security Response Center (MSRC) recently described a security review to me that integrated static analysis as a central component for the cold path (the second group above). It was striking how things lined up to make this deployment of static analysis an unqualified success.

The analysis engine utilized, authored by Semmle, converts application code into a database that can be queried via a scripting language (‘Semmle QL’). The query engine is multi-language and can track complex data flows that cross function or binary boundaries. As a result, the technology is quite effective at delivering high value security results at low costs relative to other analysis platforms. Today, MSRC and several other teams at Microsoft have 18+ months of evaluations and rules authoring, with thousands of bugs filed and fixed as proof points for the tech.

You can read more about MSRC’s Semmle use in great technical detail on their blog. Here’s the setup for the effort we’re discussing here:

- In an end-to-end review of a data center environment, MSRC flagged some low-level control software as a potential source of problems. The risk related both to code function and its apparent quality.

- Because this source code was authored externally, Microsoft had no engineers with knowledge of it.

- Multiple versions of the code had been deployed.

- The code base was too large for exhaustive manual review.

- Due to the specialized nature of the software, no relevant out-of-box analysis existed for it. [This is typical. The more serious the security issue you’re looking for, the greater the likelihood you will need a custom or highly tuned analysis for acceptable precision and recall. Semmle shines in these cases due to the expressiveness of its authoring language and the low costs to implement and refine rules.]

This code base was deemed sufficiently sensitive to warrant MSRC review. If you’ve worked with a penetration-test or other advanced security review team, you know one way this plays out. The reviewer disappears for days or weeks (or months), emerges with several (or many) exploitable defects, files bugs, authors a report and moves on, often returning to help validate the product fixes that roll in. This exercise, supported by Semmle QL, showed how things can go when ‘powered up’ by static analysis:

- First, a security engineer compiled and explored the code to get familiar with it.

- After getting oriented, the engineer conducted a manual code review, identifying a set of critical and important issues.

- The reviewer prioritized these by exposure and impact and a second engineer authored Semmle QL rules to locate the top patterns of interest.

- Next, the rules author ran the analysis repeatedly over the code base to improve the analysis. The manually identified results helped eliminate ‘false negatives’ (that is, the first order of business was to ensure that the tool could locate every vulnerability that the human did). New results (found only by the tool) were investigated and the rules tuned to eliminate any false positives.
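The tuning loop in the last step can be sketched as simple set bookkeeping around the rule output. This is plain Python, not Semmle QL, and the `Finding` shape and `compare` helper are hypothetical. It shows how manually identified issues act as a baseline for false negatives while tool-only results are queued for investigation.

```python
from typing import Iterable, NamedTuple


class Finding(NamedTuple):
    """Hypothetical identity of a finding: where it is and which pattern it matches."""
    file: str
    line: int
    pattern: str


def compare(manual: Iterable[Finding], tool: Iterable[Finding]) -> dict:
    """Split findings into those both agree on, those the tool missed, and tool-only results."""
    manual_set, tool_set = set(manual), set(tool)
    return {
        "confirmed": sorted(manual_set & tool_set),
        "false_negatives": sorted(manual_set - tool_set),  # fix the rule until this is empty
        "new_results": sorted(tool_set - manual_set),      # investigate: real bug or false positive?
    }


if __name__ == "__main__":
    manual = [Finding("ctl.c", 120, "unchecked-length"), Finding("io.c", 44, "unchecked-length")]
    tool = [Finding("ctl.c", 120, "unchecked-length"), Finding("net.c", 310, "unchecked-length")]
    report = compare(manual, tool)
    print("missed by tool:", report["false_negatives"])
    print("found only by tool:", report["new_results"])
```

Each iteration of the rule would rerun the comparison. The rule is ready once the false negative list is empty and every tool-only result has been classified.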

Two engineers worked together on this for three days. The productive outcomes:

- Static analysis tripled the total number of security findings (the tool added twice as many new results as manual review had located).

- The engineers were able to apply the static analysis to multiple variants of the code base, avoiding a significant amount of (tedious and error-prone) effort validating whether each defect was present in every version. Automating the analysis in this way also produced a handful of issues unique to a subset of versions (contributing to the 3x multiplier on total findings).

- As fixes were made, the analysis was used to test the product changes (by verifying that bugs could no longer be detected in the patched code).

- On exit, the security team was left with an automatable analysis to apply when servicing or upgrading to new versions of the code base.
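The multi-version and fix-verification outcomes above amount to running the same rules over several snapshots and comparing the result sets. The sketch below assumes each run has already been reduced to a set of stable finding identifiers. The function names, version labels, and identifiers are all hypothetical.

```python
def findings_by_version(results):
    """Invert {version: set of finding ids} into {finding id: sorted list of versions}."""
    matrix = {}
    for version, findings in results.items():
        for finding in findings:
            matrix.setdefault(finding, []).append(version)
    return {finding: sorted(versions) for finding, versions in matrix.items()}


def unique_to_subset(matrix, all_versions):
    """Findings that appear in some, but not all, of the analyzed versions."""
    total = len(set(all_versions))
    return sorted(f for f, versions in matrix.items() if len(versions) < total)


def still_present(before, after):
    """Findings detected before a patch that the analysis still detects afterwards."""
    return sorted(set(before) & set(after))


if __name__ == "__main__":
    runs = {
        "v1.0": {"overflow-parse", "missing-auth"},
        "v1.1": {"overflow-parse", "missing-auth", "race-reset"},
        "v2.0": {"overflow-parse"},
    }
    matrix = findings_by_version(runs)
    print("version-specific:", unique_to_subset(matrix, runs))
    patched = {"missing-auth"}  # hypothetical rerun on the patched v1.1
    print("not yet fixed:", still_present(runs["v1.1"], patched))
```

An empty `still_present` list after a patch is the automated equivalent of the reviewer confirming that a bug can no longer be detected.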

Continuously Reexamining Continuous Security Validation

Deep security work takes time, focus, and expertise that extends beyond a mental model of the code and its operational environment (these are essential, too, of course). Static analysis can clearly accelerate this process and, critically, capture an expert’s knowledge of a code base and its actual vulnerabilities in a way that can be brought back to accelerate the development inner loop.

Semmle continues to partner in the static analysis ecosystem for Microsoft, the industry at large and the open source community. Microsoft and Semmle are participants on a technical committee, for example, that is in the final stages of defining a public standard for persisting static analysis results (‘SARIF’, the Static Analysis Results Interchange Format). The format is a foundational component for future work to aggregate and analyze static analysis data at scale. We are working together to release a body of Semmle security checks developed internally at Microsoft to the open source community. We continue partnering to protect many of Microsoft’s security-critical code bases.
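To give a feel for what persisting results in SARIF looks like, here is a minimal sketch that builds a log as a Python dictionary. It assumes the property names of SARIF version 2.1.0 as later published by OASIS, which may differ from the draft under discussion when this post was written. The tool name, rule id, and input field names are hypothetical.

```python
import json


def to_sarif(tool_name, findings):
    """Build a minimal SARIF 2.1.0 log from a list of finding dictionaries.

    Each finding is assumed to carry: rule_id, level, message, uri, line.
    """
    rule_ids = sorted({f["rule_id"] for f in findings})
    return {
        "version": "2.1.0",
        "runs": [
            {
                "tool": {
                    "driver": {
                        "name": tool_name,
                        "rules": [{"id": rule_id} for rule_id in rule_ids],
                    }
                },
                "results": [
                    {
                        "ruleId": f["rule_id"],
                        "level": f["level"],  # one of: none, note, warning, error
                        "message": {"text": f["message"]},
                        "locations": [
                            {
                                "physicalLocation": {
                                    "artifactLocation": {"uri": f["uri"]},
                                    "region": {"startLine": f["line"]},
                                }
                            }
                        ],
                    }
                    for f in findings
                ],
            }
        ],
    }


if __name__ == "__main__":
    log = to_sarif(
        "example-analyzer",  # hypothetical tool name
        [{
            "rule_id": "hardcoded-credential",
            "level": "error",
            "message": "Possible hard-coded credential.",
            "uri": "src/settings.py",
            "line": 2,
        }],
    )
    print(json.dumps(log, indent=2))
```

Because every tool emits the same shape, results from hot and cold path analyses can be aggregated and compared without per-tool parsers.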
