Creating fun & immersive audio experiences with Web Audio

Today, thanks to the power of the Web Audio API, you can create immersive audio experiences directly in the browser, with no plug-in required. I’d like to share with you what I’ve learned while building the audio engine of Babylon.js, our open-source gaming engine.

By the end of this article, you’ll know how to build this kind of experience:

Usage: move the cubes with your mouse or finger toward the center of the white circle to mix my music in this 3D experience built with WebGL & Web Audio. View the source code.

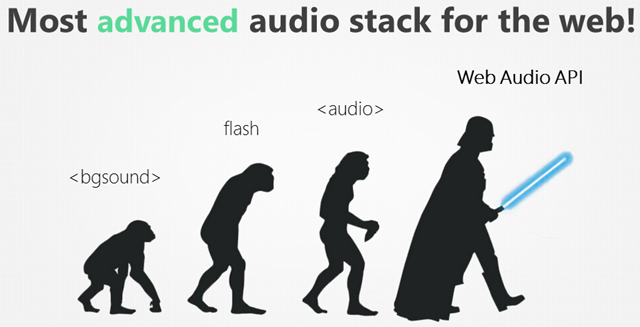

Web Audio in a nutshell

Web Audio is the most advanced audio stack for the web.

From Learn Web Audio API

If you’ve ever tried to do anything other than stream sounds or music with the HTML5 audio element, you know how limited it was. Web Audio removes those barriers and gives you access to the complete stream and audio pipeline, as in any modern native application. It works thanks to an audio routing graph made of audio nodes. This gives you precise control over timing, filters, gains, analysers, convolvers and 3D spatialization.

It’s now widely supported (MS Edge, Chrome, Opera, Firefox, Safari, iOS, Android, FirefoxOS and Windows Mobile 10). In MS Edge (and, I’d guess, in other browsers too), audio is rendered on a thread separate from the main JS thread. This means that, almost all the time, it will have little if any performance impact on your app or game. All browsers support at least WAV and MP3, and some also support OGG. MS Edge even supports the multi-channel Dolby Digital Plus™ audio format! You therefore need to pay attention to codecs to build a web app that runs everywhere, potentially providing multiple sources so that every browser gets a format it can play.
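One common way to handle this is to offer the same sound in several formats and pick the first one the browser reports it can play, using HTMLMediaElement.canPlayType(), which returns "", "maybe" or "probably". This is a sketch: the helper name `pickPlayableSource`, the file names and the MIME strings are assumptions, and canPlayType is passed in so the selection logic can run anywhere.

```javascript
// Hypothetical helper: pick the first source the browser says it can decode.
// `canPlayType` should behave like HTMLMediaElement.canPlayType, returning
// "", "maybe" or "probably" for a given MIME type.
function pickPlayableSource(sources, canPlayType) {
  // Prefer a confident "probably" answer, then fall back to the first "maybe".
  const probably = sources.find((s) => canPlayType(s.type) === "probably");
  if (probably) return probably.url;
  const maybe = sources.find((s) => canPlayType(s.type) === "maybe");
  return maybe ? maybe.url : null; // null: no format is playable
}

// In a browser, an <audio> element provides the capability check:
// const audio = document.createElement("audio");
// const url = pickPlayableSource(
//   [
//     { url: "music.ogg", type: "audio/ogg; codecs=vorbis" },
//     { url: "music.mp3", type: "audio/mpeg" },
//     { url: "music.wav", type: "audio/wav" },
//   ],
//   (type) => audio.canPlayType(type)
// );
```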

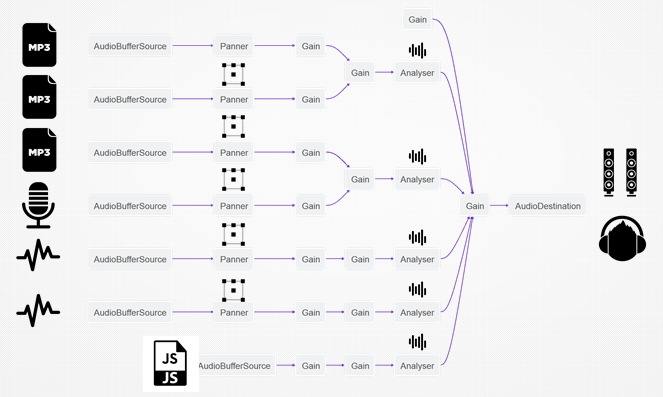

Audio routing graph explained

Note: this picture was built from the graph displayed in the awesome Web Audio tab of the Firefox DevTools. I love this tool! :) So much that I’ve planned to mimic it via a Vorlon.js plug-in. I’ve started working on it, but it’s still a very early draft.

Let’s have a look at this diagram. Every node can take something as an input and be connected to the input of another node. In this case, we can see that MP3 files act as the source of the AudioBufferSource node, connected to a Panner node (which provides spatialization effects), connected to Gain nodes (volume), connected to an Analyser node (giving access to the frequencies of the sounds in real time), finally connected to the AudioDestination node (your speakers). You can also see that you can control the volume (gain) of a specific sound or of several sounds at the same time. For instance, in this case, I’ve got a “global volume” handled by the final gain node just before the destination, and per-track volumes handled by gain nodes placed just before the analyser node.
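To make the diagram concrete, here is a minimal sketch of the same chain built with the raw Web Audio API. It assumes you already have an AudioContext, a decoded AudioBuffer and a master gain node (the “global volume”) connected to ctx.destination; the helper name `buildSoundGraph` is hypothetical and not part of any library.

```javascript
// Sketch of the chain described above:
// AudioBufferSource -> Panner -> track Gain -> Analyser -> master Gain.
// `ctx` is an AudioContext, `buffer` a decoded AudioBuffer, and
// `masterGain` a GainNode already connected to ctx.destination.
function buildSoundGraph(ctx, buffer, masterGain) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;

  const panner = ctx.createPanner();     // 3D spatialization
  const trackGain = ctx.createGain();    // per-track volume
  const analyser = ctx.createAnalyser(); // real-time frequency data

  // Each node feeds the input of the next one.
  source.connect(panner);
  panner.connect(trackGain);
  trackGain.connect(analyser);
  analyser.connect(masterGain);

  return { source, panner, trackGain, analyser };
}

// Usage, in a browser:
// const ctx = new AudioContext();
// const masterGain = ctx.createGain();   // "global volume"
// masterGain.connect(ctx.destination);   // your speakers
// const { source, trackGain } = buildSoundGraph(ctx, decodedBuffer, masterGain);
// trackGain.gain.value = 0.5;            // halve this track's volume
// source.start();
```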

Finally, Web Audio provides 2 psychoacoustic models to simulate spatialization:

- equalpower (the default), suited to classic speakers such as your laptop’s built-in speakers or floor-standing speakers.

- HRTF, which provides the best results with headphones as it simulates binaural recording.

Note: MS Edge currently only supports equalpower.
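For the curious, here is the gain computation the W3C spec defines for equalpower panning of a mono input, given the sound’s azimuth in degrees (0 straight ahead, -90 hard left, +90 hard right). The function name is hypothetical; the browser does all of this for you.

```javascript
// Equal-power panning gains for a mono input, following the algorithm in
// the W3C Web Audio spec.
function equalPowerGains(azimuth) {
  // Clamp to [-180, 180], then fold sounds behind the listener onto the
  // front half: this model can't tell front from rear.
  let a = Math.min(180, Math.max(-180, azimuth));
  if (a < -90) a = -180 - a;
  else if (a > 90) a = 180 - a;

  const x = (a + 90) / 180; // 0 = full left, 1 = full right
  return {
    left: Math.cos((x * Math.PI) / 2),
    right: Math.sin((x * Math.PI) / 2),
  };
}
```

Since cos² + sin² = 1, the total power stays constant wherever the sound is, hence the name. Note also that an azimuth of 180° (directly behind you) gives the same gains as 0°.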

And you’re set for the basics. To start playing with some code, I’d suggest reading these great resources:

- The W3C spec: https://webaudio.github.io/web-audio-api/. Again, I’ve learned a lot by simply reading the spec.

- Getting Started with Web Audio API, Developing Game Audio with the Web Audio API and Mixing Positional Audio and WebGL on HTML5 Rocks. Definitely awesome content. They sometimes contain outdated links to demos (using previous versions of the Web Audio specification), but they’re still very relevant most of the time. Also beware of the codec used in each demo: your browser may not support it.

- Bringing Web Audio to Microsoft Edge for interoperable gaming and enthusiast media, which is one of the most recent, and thus most accurate, resources.

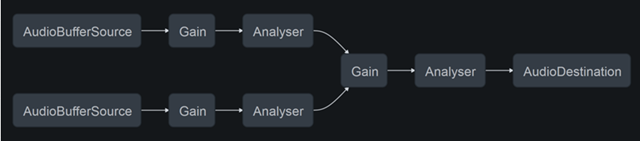

For instance, let’s imagine we’d like to create this audio graph:

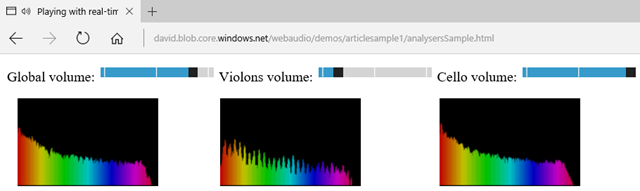

I’d like to play 2 synchronized music tracks, each with its own gain node connected to its own analyser. This means we’ll be able to display the frequencies of each track on a separate canvas and control the volume of each one separately. Finally, a global volume will act on both tracks at the same time, and a third analyser will display the combined frequencies of both tracks on a third canvas.
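Here is one possible way to wire that graph with the raw Web Audio API. It’s a sketch, not the code of analysersSample.js: the function name is hypothetical, and I’m assuming the third analyser sits after the global gain so that it sees the final mix.

```javascript
// Sketch: two tracks, each with its own gain and analyser, feeding one
// global gain followed by a third analyser that sees the combined mix.
function buildTwoTrackGraph(ctx, bufferA, bufferB) {
  const globalGain = ctx.createGain();     // "global volume"
  const mixAnalyser = ctx.createAnalyser(); // frequencies of both tracks
  globalGain.connect(mixAnalyser);
  mixAnalyser.connect(ctx.destination);     // analysers pass audio through

  const tracks = [bufferA, bufferB].map((buffer) => {
    const source = ctx.createBufferSource();
    source.buffer = buffer;
    const gain = ctx.createGain();          // per-track volume
    const analyser = ctx.createAnalyser();  // per-track frequencies
    source.connect(gain);
    gain.connect(analyser);
    analyser.connect(globalGain);           // per-track analysers sit before the global volume
    return { source, gain, analyser };
  });

  return { tracks, globalGain, mixAnalyser };
}
```

With this layout, the per-track analysers are upstream of globalGain, so changing the global volume doesn’t change what they receive; only the mix analyser and your speakers are affected.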

You’ll find the demo here: analysersSample.html

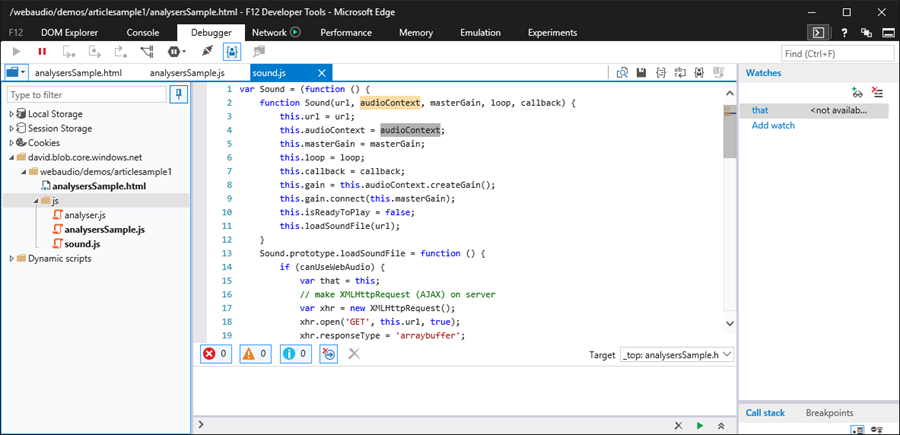

Simply have a look at the source code using your favorite browser and its F12 tools.

sound.js abstracts the logic to load, decode and play a sound. analyser.js abstracts the work needed to use the Web Audio analyser and display frequencies on a 2D canvas. Finally, analysersSample.js uses both files to create the result above.
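To get a feel for what a module like analyser.js has to do, here are two small helpers. They’re hypothetical (not the demo’s actual code) and rely only on documented AnalyserNode behavior: getByteFrequencyData() fills a Uint8Array of frequencyBinCount values (half of fftSize), each between 0 and 255, and bin i corresponds to i * sampleRate / fftSize Hz.

```javascript
// Frequency (in Hz) represented by a given analyser bin.
function binToFrequency(index, sampleRate, fftSize) {
  return (index * sampleRate) / fftSize;
}

// Scale 0..255 byte magnitudes to bar heights in canvas pixels.
function barHeights(frequencyData, canvasHeight) {
  return Array.from(frequencyData, (v) => (v / 255) * canvasHeight);
}

// In a browser, once per animation frame:
// const data = new Uint8Array(analyser.frequencyBinCount);
// analyser.getByteFrequencyData(data);
// const heights = barHeights(data, canvas.height);
// const barWidth = canvas.width / heights.length;
// ctx2d.clearRect(0, 0, canvas.width, canvas.height);
// heights.forEach((h, i) => ctx2d.fillRect(i * barWidth, canvas.height - h, barWidth, h));
```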

Play with the sliders to change the volume of each track or the global volume, and see what happens. For instance, if you set the global volume to 0, can you work out why the 2 analysers on the right still show data?

To play the 2 tracks in sync, you just need to load them via XHR2 requests and decode them; as soon as both asynchronous operations are done, simply call start() on each of them.
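Here’s a minimal sketch of that idea. It swaps XHR2 for fetch(), which also gives you an ArrayBuffer, and relies on the Promise-based form of decodeAudioData(). Rather than calling start() with no argument on each source one after the other, it schedules both sources for the same AudioContext time; the 0.1 second delay and the function names are my own assumptions.

```javascript
// Load and decode one file into an AudioBuffer.
async function loadBuffer(ctx, url) {
  const response = await fetch(url);
  const data = await response.arrayBuffer();
  return ctx.decodeAudioData(data);
}

// Start every buffer at exactly the same instant on the AudioContext clock,
// slightly in the future so that all start() calls are issued in time.
function startTogether(ctx, buffers, destination, delay = 0.1) {
  const when = ctx.currentTime + delay;
  return buffers.map((buffer) => {
    const source = ctx.createBufferSource();
    source.buffer = buffer;
    source.connect(destination);
    source.start(when);
    return source;
  });
}

// Usage, in a browser:
// const ctx = new AudioContext();
// Promise.all(["track1.wav", "track2.wav"].map((u) => loadBuffer(ctx, u)))
//   .then((buffers) => startTogether(ctx, buffers, ctx.destination));
```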

Web Audio in Babylon.js

Basics

I wanted to keep our “simple & powerful” philosophy while working on the audio stack. Even though Web Audio isn’t that complicated, I still find it very verbose, and you quickly end up doing boring, repetitive tasks to build your audio graph. My objective was therefore to encapsulate all that in a very simple abstraction layer that lets people create 2D or 3D sounds without being sound engineers.

Here is the signature we’ve chosen to create a sound:

var newSound = new BABYLON.Sound("nameofyoursound", "URLToTheFile", yourBabylonScene,
    callbackFunctionWhenSoundReadyToBePlayed,
    optionsViaJSONObject);

For instance, here is how to create new sounds in Babylon.js:

var sound1 = new BABYLON.Sound("sound1", "./sound1.mp3", scene,
    null, { loop: true, autoplay: true });

var sound2 = new BABYLON.Sound("sound2", "./sound2.wav", scene,
    function () { sound2.play(); },
    { playbackRate: 2.0 });

var streamingSound = new BABYLON.Sound("streamingSound", "./sound3.mp3", scene,
    null, { autoplay: true, streaming: true });

The first sound will play automatically, in a loop, as soon as it has been loaded from the web server and decoded by Web Audio.

The second sound will play once, thanks to the callback function that calls play() on it once it’s decoded and ready to be played. It will also play at a 2x playback rate.

The last sound uses the “streaming: true” option. This means we’ll use the HTML5 Audio element instead of making an XHR2 request and using a Web Audio AudioBufferSource object. This is useful if you’d like to stream music rather than wait for it to download completely. You can still apply all the magic of Web Audio on top of this HTML5 Audio element: analyser, 3D spatialization and so on.
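In raw Web Audio, the streaming approach can be sketched with createMediaElementSource(), which routes an HTML5 audio element’s output into the graph. This is an illustration, not Babylon.js’s actual implementation, and the function name is hypothetical. One caveat: for media served from another origin without CORS, the node outputs silence.

```javascript
// Sketch: feed a progressively downloaded <audio> element into Web Audio,
// where an analyser (or a panner, gains, ...) can process it as usual.
function connectStreamingElement(ctx, audioElement, destination) {
  const source = ctx.createMediaElementSource(audioElement);
  const analyser = ctx.createAnalyser();
  source.connect(analyser);
  analyser.connect(destination);
  return { source, analyser };
}

// Usage, in a browser:
// const ctx = new AudioContext();
// const audio = new Audio("sound3.mp3");
// connectStreamingElement(ctx, audio, ctx.destination);
// audio.play(); // playback is controlled by the element itself
```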

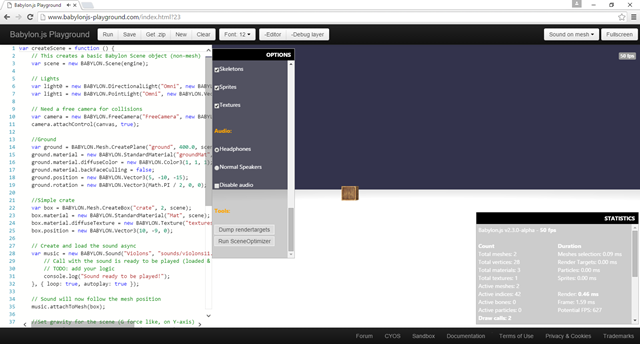

You can play with this logic in our playground, for instance with this very simple sample: https://www.babylonjs-playground.com/index.html?22

Engine, Tracks and memory footprint

The Web Audio audio context is only created at the very last moment. When the Babylon.js audio engine is created in the Babylon.js main engine constructor, it only checks for Web Audio support and sets a flag. Also, every sound lives inside a sound track. By default, a sound is added to the main sound track attached to the scene.

The Web Audio context and the main track (containing its own gain node attached to the global volume) are only actually created after the very first call to new BABYLON.Sound().

We did this to avoid creating audio resources for scenes that don’t use audio, which is currently the case for most of our scenes hosted on https://www.babylonjs.com. Still, the audio engine is ready to be called at any time. This also helps us detach the audio engine from the core game engine, should we want to build a core version of Babylon.js without any audio code to make it more lightweight. Finally, we’re currently working with big studios that pay a lot of attention to the resources being used.
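The lazy initialization described above can be sketched like this. The class name and fields are hypothetical (this is not Babylon.js’s AudioEngine API); the AudioContext constructor is passed in so the support check and the deferred creation are easy to see and to run outside a browser.

```javascript
// Hypothetical lazy audio engine: the constructor only checks for Web Audio
// support; the AudioContext and master gain are created on first access.
class LazyAudioEngine {
  constructor(AudioContextCtor) {
    this._Ctor = AudioContextCtor;
    this.canUseWebAudio = typeof AudioContextCtor === "function";
    this._context = null;
    this.masterGain = null;
  }

  get audioContext() {
    if (!this._context && this.canUseWebAudio) {
      this._context = new this._Ctor();
      this.masterGain = this._context.createGain(); // global volume
      this.masterGain.connect(this._context.destination);
    }
    return this._context;
  }
}

// In a browser:
// const engine = new LazyAudioEngine(window.AudioContext || window.webkitAudioContext);
// Nothing is allocated until the first sound reads engine.audioContext.
```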

To better understand this, we’re going to use Firefox and its Web Audio tab.

- Navigate to https://www.babylonjs-playground.com/ and open the Web Audio tab via F12. You should see that no audio context has been created yet.

- In the playground editor on the left, add this line of code, for instance just before “return scene”:

var music = new BABYLON.Sound("Violons", "sounds/violons11.wav",
    scene, null, { loop: true, autoplay: true });

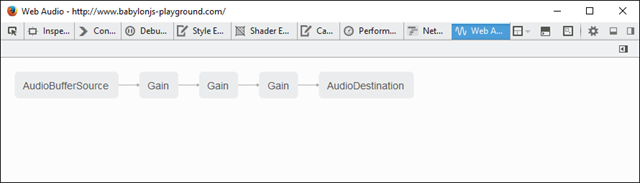

- You should now see this audio graph in F12:

The audio context has been created dynamically after the first call to new BABYLON.Sound(), along with the main volume, the main track volume and the volume associated with this sound.

3D Sounds

Basics

Web Audio’s 3D model is heavily inspired by libraries such as OpenAL (Open Audio Library). Most of the 3D complexity is therefore handled for you by Web Audio: the 3D position of the sounds, their direction and their velocity (to apply a Doppler effect).

As a developer, you then need to ask Web Audio to position your sound in 3D space and to set and/or update the position and orientation of the Web Audio listener (your virtual ears) using the setPosition() and setOrientation() functions. But if you’re not a 3D guru, this can be a bit complex. That’s why we do it for you in Babylon.js: we update the Web Audio listener with the position of our camera in the 3D world. It’s as if virtual ears (the listener) were stuck onto virtual eyes (the camera).
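Gluing the listener to a camera boils down to copying the camera’s position and orientation into the AudioListener every frame. This is a sketch under a few assumptions: the camera data are plain {x, y, z} objects and the helper name is hypothetical. Current browsers expose AudioParams such as positionX and forwardX on the listener, while older ones only offer setPosition() and setOrientation(), so the sketch handles both.

```javascript
// Copy a camera's position and orientation into a Web Audio AudioListener.
// `position`, `forward` and `up` are plain {x, y, z} objects.
function updateListener(listener, position, forward, up) {
  if (listener.positionX) {
    // Newer AudioParam-based API.
    listener.positionX.value = position.x;
    listener.positionY.value = position.y;
    listener.positionZ.value = position.z;
    listener.forwardX.value = forward.x;
    listener.forwardY.value = forward.y;
    listener.forwardZ.value = forward.z;
    listener.upX.value = up.x;
    listener.upY.value = up.y;
    listener.upZ.value = up.z;
  } else {
    // Older (now deprecated) methods.
    listener.setPosition(position.x, position.y, position.z);
    listener.setOrientation(forward.x, forward.y, forward.z, up.x, up.y, up.z);
  }
}
```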

Up to now, we’ve been using 2D sounds. To turn a sound into a spatial sound, specify it via the options:

var music = new BABYLON.Sound("music", "music.wav",
    scene, null, { loop: true, autoplay: true, spatialSound: true });

Default properties of a spatial sound are:

- distanceModel (the attenuation) uses a “linear” equation by default. The other options are “inverse” and “exponential”.

- maxDistance is set to 100. This means that once the listener is farther than 100 units from the sound, the volume will be 0: you can’t hear the sound anymore.

- panningModel is set to “equalpower”, following the specification. The other available option is “HRTF”. The specification describes it as: “a higher quality spatialization algorithm using a convolution with measured impulse responses from human subjects. This panning method renders stereo output”. This is the best algorithm when using headphones.

maxDistance is only used with the “linear” attenuation. Otherwise, you can tune the attenuation of the other models using the rolloffFactor and refDistance options. Both are set to 1 by default, but you can of course change them.
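To see how these options interact, here are the three distance models from the W3C Web Audio specification, written as a plain function. The function itself is hypothetical (the browser computes this for you), and the default maxDistance of 100 mirrors the Babylon.js default rather than the spec’s.

```javascript
// Gain factor (1 = full volume) for a listener at `distance` from a sound,
// using the distance model formulas from the W3C Web Audio spec.
function distanceGain(model, distance, { refDistance = 1, maxDistance = 100, rolloffFactor = 1 } = {}) {
  const d = Math.max(distance, refDistance); // no attenuation inside refDistance
  switch (model) {
    case "linear": {
      // Only this model uses maxDistance; the spec clamps rolloffFactor to [0, 1] here.
      const rolloff = Math.min(Math.max(rolloffFactor, 0), 1);
      if (maxDistance === refDistance) return 1 - rolloff;
      const clamped = Math.min(d, maxDistance);
      return 1 - (rolloff * (clamped - refDistance)) / (maxDistance - refDistance);
    }
    case "inverse":
      return refDistance / (refDistance + rolloffFactor * (d - refDistance));
    case "exponential":
      return Math.pow(d / refDistance, -rolloffFactor);
    default:
      throw new RangeError(`Unknown distance model: ${model}`);
  }
}
```

For example, with the linear model and these defaults, a listener halfway between refDistance and maxDistance (50.5 units) hears the sound at half volume, while with the exponential model and a rolloffFactor of 2, doubling the distance from refDistance divides the gain by 4.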

For instance:

var music = new BABYLON.Sound("music", "music.wav",
    scene, null, {
        loop: true, autoplay: true, spatialSound: true,
        distanceModel: "exponential", rolloffFactor: 2
    });

The default position of a sound in the 3D world is (0,0,0). To change it, use the setPosition() function:

music.setPosition(new BABYLON.Vector3(100, 0, 0));

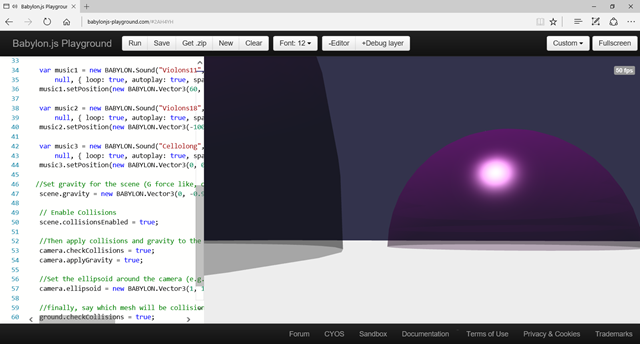

To get a better understanding, please have a look at this sample in our playground:

Move around the scene using keyboard & mouse. Each sound is represented by a purple sphere. When you enter a sphere, you’ll start hearing one of the music tracks. The sound is louder at the center of the sphere and falls to 0 when you leave the sphere. You’ll also notice that the sound is balanced between the left & right speakers as well as front / rear (which is harder to notice with classic stereo speakers).

Attaching a sound to a mesh

The previous demo is cool but didn’t meet my “simple & powerful” bar. I wanted something even simpler to use, and I finally ended up with the following approach.

Simply create a BABYLON.Sound, attach it to an existing mesh and you’re done! Only 2 lines of code! If the mesh moves, the sound moves with it. You have nothing else to do; everything is handled by Babylon.js.

Here’s the code to use:

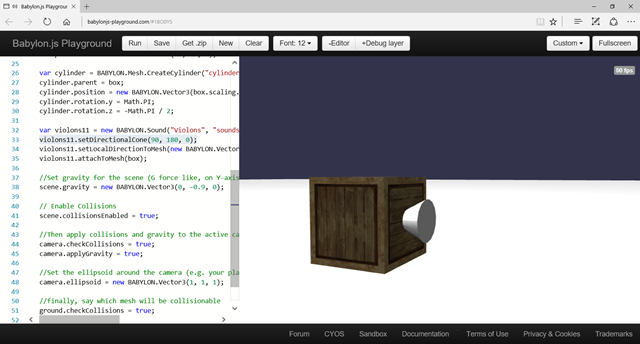

var music = new BABYLON.Sound("Violons", "sounds/violons11.wav",
    scene, null, { loop: true, autoplay: true });

// Sound will now follow the box mesh position
music.attachToMesh(box);

Calling attachToMesh() on a sound automatically turns it into a spatial 3D sound. With the above code, you get the default Babylon.js values: a linear attenuation with a maxDistance of 100 and a panning model of type “equalpower”.
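Under the hood, following a mesh comes down to updating the sound’s PannerNode position every frame. Here’s a sketch in raw Web Audio; `getWorldPosition` is a hypothetical callback returning {x, y, z}, and the helper is an illustration rather than Babylon.js’s code.

```javascript
// Copy an object's world position into a PannerNode, once per frame.
function followObject(panner, getWorldPosition) {
  const p = getWorldPosition();
  if (panner.positionX) {
    // Newer AudioParam-based API.
    panner.positionX.value = p.x;
    panner.positionY.value = p.y;
    panner.positionZ.value = p.z;
  } else {
    panner.setPosition(p.x, p.y, p.z); // older, deprecated method
  }
}

// In a browser, for example from a render loop:
// function frame() {
//   followObject(panner, () => ({ x: obj.x, y: obj.y, z: obj.z }));
//   requestAnimationFrame(frame);
// }
```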

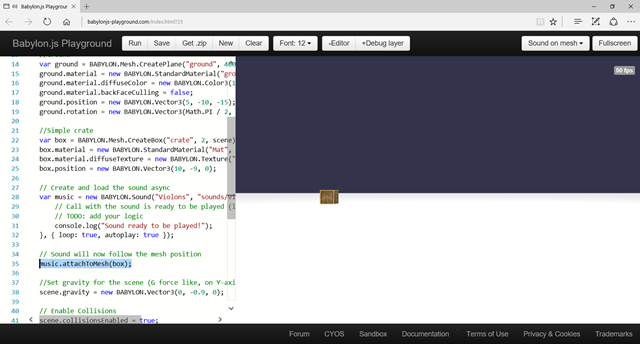

Put on your headphones and launch this sample in our playground:

Don’t move the camera. You should hear my music rotating around your head as the cube moves. Want an even better experience with your headphones?

Launch the same demo in a browser supporting HRTF (like Chrome or Firefox), click the “Debug layer” button to display it and scroll down to the audio section. Switch the current panning model from “Normal speakers” (equalpower) to “Headphone” (HRTF). You should enjoy a slightly better experience. If not, change your headphones… or your ears. ;-)

Note: you can also move around the 3D scene using arrow keys & mouse (like in an FPS game) to check the impact of 3D sound positioning.

Creating a spatial directional 3D sound

By default, spatial sounds are omnidirectional, but you can make them directional if you’d like to.

Note: directional sounds only work for spatial sounds attached to a mesh.

Here is the code to use:

var music = new BABYLON.Sound("Violons", "violons11.wav", scene, null, { loop: true, autoplay: true });
music.setDirectionalCone(90, 180, 0);
music.setLocalDirectionToMesh(new BABYLON.Vector3(1, 0, 0));
music.attachToMesh(box);

setDirectionalCone takes 3 parameters:

- coneInnerAngle: size of the inner cone in degrees

- coneOuterAngle: size of the outer cone in degrees

- coneOuterGain: volume of the sound when you’re outside the outer cone (between 0.0 and 1.0)

The outer angle of the cone must be greater than or equal to the inner angle; otherwise an error will be logged and the directional sound won’t work.
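To understand what the three cone values do, here is the cone gain computation described in the W3C Web Audio spec, written as a standalone function. The function name, and the choice to throw on an invalid cone, are assumptions of this sketch; the browser performs this computation itself.

```javascript
// Cone gain per the W3C Web Audio spec. `orientation` is the sound's
// direction; `toListener` is the vector from the sound to the listener.
function coneGain(orientation, toListener, coneInnerAngle, coneOuterAngle, coneOuterGain) {
  if (coneOuterAngle < coneInnerAngle) {
    throw new RangeError("coneOuterAngle must be >= coneInnerAngle");
  }
  const dot = orientation.x * toListener.x + orientation.y * toListener.y + orientation.z * toListener.z;
  const lengths = Math.hypot(orientation.x, orientation.y, orientation.z) *
                  Math.hypot(toListener.x, toListener.y, toListener.z);
  if (lengths === 0) return 1; // no direction: this sketch treats it as omnidirectional
  const cos = Math.min(1, Math.max(-1, dot / lengths));
  const angle = (Math.acos(cos) * 180) / Math.PI; // 0..180 degrees

  // The cone angles are full apertures, so compare against their halves.
  const halfInner = coneInnerAngle / 2;
  const halfOuter = coneOuterAngle / 2;
  if (angle <= halfInner) return 1;
  if (angle >= halfOuter) return coneOuterGain;
  // Linear fade between the inner and outer cones.
  const x = (angle - halfInner) / (halfOuter - halfInner);
  return (1 - x) + coneOuterGain * x;
}
```

With setDirectionalCone(90, 180, 0) as above, a listener within 45° of the cone’s direction hears full volume, the gain fades linearly to 0 between 45° and 90°, and beyond 90° the sound is silent.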

setLocalDirectionToMesh() simply sets the orientation of the cone relative to the mesh the sound is attached to. By default, it’s (1,0,0), i.e. pointing to the right of the object.

You can play with this sample in our playground to better understand the result:

Move around the 3D scene. If you’re inside the space defined by the grey cone, you should hear the music; if not, you won’t hear it, as coneOuterGain is set to 0.

Going further

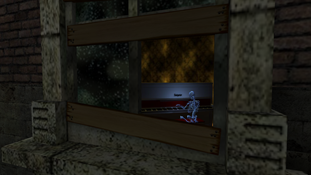

Play with our famous Mansion Web Audio demo:

Try to find the interactive objects in the Mansion scene; almost all of them are associated with a sound. To build this scene, our 3D artist, Michel Rousseau, didn’t write a single line of code! He made it entirely with our new Actions Builder tool and 3DS Max. The Actions Builder is currently only available in our 3DS Max exporter, but we will soon expose it in our sandbox tool. If you’re interested in building a similar experience, please read these articles:

- Actions Builder by Julien Moreau-Mathis, our awesome Babylon.js intern who made the tool

- Create actions in BabylonJS by Michel Rousseau. He explains how to use actions in a basic way. He used the same approach to build the Mansion demo, as the Actions Builder also supports sound actions.

But the best way to fully understand how we’ve used Web Audio in our gaming engine is to read our source code:

- BABYLON.AudioEngine, created as a static by the Babylon.js core engine.

- BABYLON.SoundTrack, containing several sounds. As soon as you create a sound, at least one track is created: the main track.

- BABYLON.Sound, containing the main logic you’ll interact with.

- BABYLON.Analyser, which can be attached to the audio engine itself (to view the output via the global volume) or to a specific track.

Please also have a look at BABYLON.Scene and its _updateAudioParameters() function to understand how we update the Web Audio listener based on the current position & orientation of the camera.

Finally, here are some useful resources to read:

- Babylon.js audio documentation

- Learn Web Audio with the Flight Arcade experience, where you’ll learn things I haven’t implemented yet in Babylon.js, like convolvers.

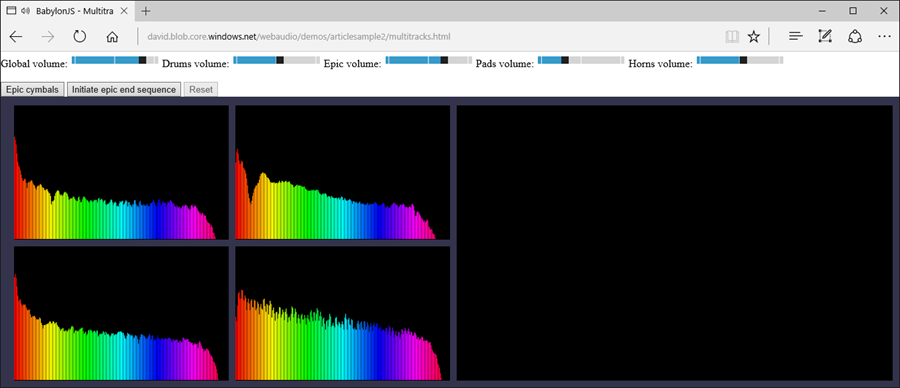

Building a demo using multitracks & analysers

In order to show you what you can quickly do with our Babylon.js audio stack, I’ve built this fun demo:

It uses several Babylon.js features, such as the assets loader (which automatically loads the remote WAV files and displays a default loading screen), multiple sound tracks, and several analyser objects connected to the sound tracks.

Please have a look at the source code of multitracks.js via your F12 tools to see how it works.

This demo is based on a piece of music I composed in the past and have slightly re-arranged: The Geek Council. You can do your own mixing of the first part of the music by playing with the sliders. You can click the “Epic cymbals” button as many times and as quickly as you like to add a dramatic effect. Finally, click “Initiate epic end sequence”. It will wait for the end of the current loop, then stop the first 4 tracks (drums, epic background, pads & horns) and play the final epic sequence! :) Click the “Reset” button to restart from the beginning.
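Waiting for the end of the current loop is mostly arithmetic on the AudioContext clock. Here is a hypothetical helper, assuming you recorded when the loops started and know the loop length in seconds; it illustrates the idea and is not the demo’s actual code.

```javascript
// Time (on the AudioContext clock) of the next loop boundary at or after `now`.
function nextLoopBoundary(loopStartTime, loopDuration, now) {
  if (now <= loopStartTime) return loopStartTime;
  const loopsDone = Math.ceil((now - loopStartTime) / loopDuration);
  return loopStartTime + loopsDone * loopDuration;
}

// In a browser, the switch can then be scheduled sample-accurately:
// const t = nextLoopBoundary(startTime, buffer.duration, ctx.currentTime);
// loopingSources.forEach((s) => s.stop(t));
// finalSequenceSource.start(t);
```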

Note: it turns out that to get great sound loops, without any glitch, you need to avoid MP3, as explained in this forum: “The simplest solution is to just not use .mp3. If you use .ogg or .m4a instead you should find the sound loops flawlessly, as long as you generate those files from the original uncompressed audio source, not from the mp3 file! The process of compressing to mp3 inserts a few ms of silence at the start of the audio, making mp3 unsuited to looping sounds. I read somewhere that the silence is actually supposed to be file header information but it incorrectly gets treated as sound data!” That’s why I’m using WAV files in this demo. It’s the only clean solution working across all browsers.

And what about the Music Lounge demo?

The Music Lounge demo basically re-uses everything described above. But you’re missing one last piece to recreate it, as it relies on a feature I haven’t explained yet: the customAttenuationFunction.

You can provide your own attenuation function to Babylon.js to replace the default ones provided by Web Audio (linear, exponential and inverse), as explained in our documentation.

For instance, if you try this playground demo, you’ll see I’m using this stupid attenuation function:

music.setAttenuationFunction(function (currentVolume, currentDistance,
    maxDistance, refDistance, rolloffFactor) {
    return currentVolume * currentDistance / maxDistance;
});

Near the object, the volume is almost 0; the farther away you are, the louder it gets. It’s completely counter-intuitive, but it’s just a sample to give you the idea.

In the case of the Music Lounge demo, it was very useful. Indeed, the volume of each track doesn’t depend on the distance between each cube and the camera, but on the distance between the cube and the center of the white circle. If you look at the source code, the magic happens here:

attachedSound.setAttenuationFunction((currentVolume, currentDistance,
    maxDistance, refDistance, rolloffFactor) => {
    var distanceFromZero = BABYLON.Vector3.Zero()
        .subtract(this._box.getBoundingInfo().boundingSphere.centerWorld)
        .length();
    if (distanceFromZero < maxDistance) {
        return currentVolume * (1 - distanceFromZero / maxDistance);
    }
    else {
        return 0;
    }
});

We compute the distance between the current cube (this._box) and the (0,0,0) coordinates, which happen to be the center of the circle. Based on this distance, we apply a linear attenuation to the volume of the sound associated with the cube. The rest of the demo uses sounds attached to the various meshes (the cubes) and an analyser object connected to the global volume to animate the outer circle made of particles.

I hope you’ll enjoy this article as much as I enjoyed writing it. I also hope it will give you new ideas to create cool Web Audio / WebGL applications or demos!
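Here is the same attenuation logic pulled out as a framework-free function so it can be tested on its own. It’s a sketch: the name `loungeVolume` is hypothetical, and a plain {x, y, z} object stands in for the bounding sphere’s centerWorld.

```javascript
// Volume depends on the cube's distance from the circle's center (0, 0, 0),
// not on the camera: full volume at the center, fading linearly to 0 at maxDistance.
function loungeVolume(currentVolume, cubeCenter, maxDistance) {
  const distanceFromZero = Math.hypot(cubeCenter.x, cubeCenter.y, cubeCenter.z);
  if (distanceFromZero < maxDistance) {
    return currentVolume * (1 - distanceFromZero / maxDistance);
  }
  return 0;
}
```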

David