Windows Azure - What Happens in the Data Center?

So I've been wading through internal documents, watching PDC sessions, and having 1:1s with the team, all in an attempt to get my head around Windows Azure. There are a lot of moving parts and, as you'd expect, a lot of complexity, but it also makes a lot of sense once it all starts to marinate in your brain.

So where to start? The data center is a good place.

So we have racks of servers.

Each server is connected to the Windows Azure network and configured to boot from it. To prepare a server to run Windows Azure applications, we turn it on; it boots from the network and downloads a maintenance OS.

The maintenance OS talks to a service called the Fabric Controller. The Fabric Controller (FC) knows how to manage Windows Azure's resources (servers, network infrastructure, and applications, including customer applications), and it instructs the agent inside the maintenance OS to create a host partition that will hold the host OS.

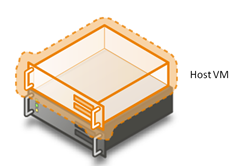

The host VM is a special VM that controls access to the underlying server's hardware and provides a mechanism for the guest VMs (where our customers' applications are deployed) to communicate safely with the outside world.

The server then restarts, booting natively from the VHD that holds the host OS (native VHD boot is a new feature that will be available in Windows 7).

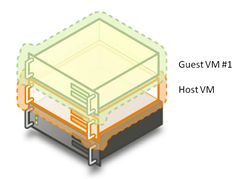
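To make the ordering of these steps concrete, here is a minimal Python sketch that models one server's provisioning as a small state machine. It is purely illustrative: `ServerState`, `Server`, and `provision` are hypothetical names, not part of any Fabric Controller API, and in the real system the FC and its agents drive these transitions rather than a local function.

```python
from enum import Enum, auto


class ServerState(Enum):
    """Hypothetical provisioning stages for one server (illustrative only)."""
    POWERED_OFF = auto()
    MAINTENANCE_OS = auto()        # network-booted; agent is talking to the FC
    HOST_PARTITION_READY = auto()  # agent has created the host partition on the FC's instruction
    HOST_OS = auto()               # restarted natively into the host OS VHD


# Each stage has exactly one successor in this simplified model.
NEXT_STATE = {
    ServerState.POWERED_OFF: ServerState.MAINTENANCE_OS,
    ServerState.MAINTENANCE_OS: ServerState.HOST_PARTITION_READY,
    ServerState.HOST_PARTITION_READY: ServerState.HOST_OS,
}


class Server:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = ServerState.POWERED_OFF
        self.history = [self.state]

    def advance_to(self, target: ServerState) -> None:
        """Move to `target`, refusing any step that skips or reorders stages."""
        if NEXT_STATE.get(self.state) is not target:
            raise RuntimeError(
                f"{self.name}: cannot go from {self.state.name} to {target.name}"
            )
        self.state = target
        self.history.append(target)


def provision(server: Server) -> None:
    """Walk one server through the sequence described above."""
    server.advance_to(ServerState.MAINTENANCE_OS)        # power on, boot from the network
    server.advance_to(ServerState.HOST_PARTITION_READY)  # FC instructs the maintenance-OS agent
    server.advance_to(ServerState.HOST_OS)               # restart into the host OS VHD


node = Server("rack1-node07")  # hypothetical server name
provision(node)
print(node.state.name)  # HOST_OS
```

Modeling it as explicit transitions makes the key property visible: a server can't host guest VMs until it has gone through the maintenance OS and the restart into the host OS, in that order.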

When the host VM starts up, an agent inside it also talks to the FC, and the FC tells it to set up one or more guest partitions. If this is the first time, a guest VM image is downloaded to the server, along with an instance-specific differencing disk (diff disk). This ensures the base guest VM image can be reused many times, while customer-specific data is written to the diff disk.
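The diff-disk idea is essentially copy-on-write layering. The Python sketch below assumes a block-addressed disk and models a read-only base image that is downloaded once and shared, plus a per-instance overlay that captures every write. The class names, the tiny block size, and the download callback are all hypothetical; real differencing VHDs are files with their own on-disk format, which this in-memory model ignores.

```python
from typing import Callable, Dict

BLOCK_SIZE = 4  # hypothetical tiny block size, to keep the example readable
ZERO_BLOCK = b"\x00" * BLOCK_SIZE


class BaseImage:
    """Read-only guest VM image, shared by every guest instance on the server."""

    def __init__(self, blocks: Dict[int, bytes]) -> None:
        self._blocks = dict(blocks)

    def read(self, index: int) -> bytes:
        return self._blocks.get(index, ZERO_BLOCK)


class DiffDisk:
    """Per-instance differencing disk: writes land here, reads fall back to the base."""

    def __init__(self, base: BaseImage) -> None:
        self.base = base
        self.changed: Dict[int, bytes] = {}

    def write(self, index: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        self.changed[index] = data  # the shared base image is never modified

    def read(self, index: int) -> bytes:
        return self.changed.get(index, self.base.read(index))


class ImageCache:
    """Downloads each base image only the first time it is needed on this server."""

    def __init__(self, download: Callable[[str], BaseImage]) -> None:
        self._download = download
        self._images: Dict[str, BaseImage] = {}
        self.download_count = 0

    def get(self, name: str) -> BaseImage:
        if name not in self._images:
            self._images[name] = self._download(name)
            self.download_count += 1
        return self._images[name]


# Stand-in for fetching the image over the network.
cache = ImageCache(lambda name: BaseImage({0: b"boot", 1: b"base"}))
disk_a = DiffDisk(cache.get("guest-os"))  # first use: image is downloaded
disk_b = DiffDisk(cache.get("guest-os"))  # second use: reused from the cache
disk_a.write(1, b"cust")
print(disk_a.read(1), disk_b.read(1), cache.download_count)  # b'cust' b'base' 1
```

Because instance A's write only touches its own overlay, instance B still sees the pristine base block, and the base image was fetched only once.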

Once the guest VM is ready, a customer role is deployed to it, and the FC configures all the bits and pieces of the infrastructure (such as load balancers, external and internal IP addresses [VIPs and DIPs], switches, etc.) to make it available to the world (well, only in the case of a web role at the moment). Each guest VM hosts only one customer application; this way, we can ensure strong isolation between tenants. VMs provide the same isolation that native OS instances do, so it's a reliable isolation model.

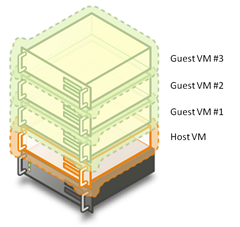
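As a rough illustration of what the FC sets up for a web role, the Python sketch below maps one external VIP onto the internal DIPs of several guest VMs and enforces the one-application-per-VM rule. The post doesn't say which balancing algorithm is used, so round-robin here is an assumption; the class names and addresses are hypothetical.

```python
from itertools import cycle
from typing import List, Optional


class GuestVM:
    """A guest VM with an internal address (DIP) that can host one application."""

    def __init__(self, dip: str) -> None:
        self.dip = dip
        self.role: Optional[str] = None

    def deploy(self, role: str) -> None:
        # One customer application per guest VM keeps tenants isolated.
        if self.role is not None:
            raise ValueError(f"{self.dip} already hosts {self.role}")
        self.role = role


class LoadBalancer:
    """Spreads traffic arriving at one external VIP across internal DIPs."""

    def __init__(self, vip: str, instances: List[GuestVM]) -> None:
        if not instances:
            raise ValueError("a VIP needs at least one instance behind it")
        self.vip = vip
        self._targets = cycle([vm.dip for vm in instances])  # round-robin (an assumption)

    def route(self) -> str:
        """Return the DIP that should receive the next incoming request."""
        return next(self._targets)


# Hypothetical addresses: a documentation-range VIP and private-range DIPs.
web_vms = [GuestVM(f"10.0.0.{i}") for i in (4, 5, 6)]
for vm in web_vms:
    vm.deploy("WebRole")

lb = LoadBalancer("203.0.113.10", web_vms)
print([lb.route() for _ in range(4)])
# ['10.0.0.4', '10.0.0.5', '10.0.0.6', '10.0.0.4']
```

The outside world only ever sees the VIP; which guest VM answers is decided behind it, so instances can be added or replaced without clients noticing.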

On each server, we can deploy many guest VMs under one host VM, which lets us maximize the use of each server.

Right now, we carve up the server resources so that each VM gets the following predictable resource allocation:

- OS: 64-bit Windows Server 2008
- CPU: 1.5-1.7 GHz x64 equivalent
- Memory: 1.7 GB
- Network: 100 Mbps
- Local Disk: 250 GB (not really useful to developers at the mo')
- Windows Azure Storage: 50 GB
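Fixed, predictable VM sizes make capacity planning a matter of simple division. The Python sketch below encodes the per-VM allocation from the list (taking the top of the CPU range) and counts how many such VMs fit on a server. The server figures are entirely hypothetical, not a published Windows Azure hardware spec; the model leaves out Windows Azure Storage (which isn't local to the server) and ignores whatever the host VM reserves for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_ghz: float       # CPU capacity in GHz (x64 equivalent)
    memory_gb: float
    network_mbps: float
    local_disk_gb: float


# Per-VM allocation from the list above, using the top of the 1.5-1.7 GHz range.
VM_ALLOCATION = Resources(cpu_ghz=1.7, memory_gb=1.7, network_mbps=100, local_disk_gb=250)


def vms_per_server(server: Resources, vm: Resources = VM_ALLOCATION) -> int:
    """Count identical VMs that fit; the scarcest resource sets the limit.

    Ignores whatever the host VM itself reserves.
    """
    return int(min(
        server.cpu_ghz // vm.cpu_ghz,
        server.memory_gb // vm.memory_gb,
        server.network_mbps // vm.network_mbps,
        server.local_disk_gb // vm.local_disk_gb,
    ))


# Hypothetical server, NOT a published Windows Azure hardware spec.
example_server = Resources(cpu_ghz=16.0, memory_gb=16, network_mbps=1000, local_disk_gb=2000)
print(vms_per_server(example_server))  # 8 (local disk is the bottleneck here)
```

Taking the minimum across dimensions is what makes the allocation "predictable": no VM is ever promised a share of a resource the server can't actually cover.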

To ensure one customer VM cannot overwhelm the others, resources are isolated and balanced so that network bandwidth, CPU, and the rest are evenly apportioned.
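The post doesn't say how this apportioning is enforced. One common technique for stopping a single tenant from monopolizing a shared link is a per-VM token bucket; the Python sketch below uses it purely as an illustration, not as a description of Windows Azure's actual mechanism. The rate matches the 100 Mbps allocation above; the burst capacity and the class name are assumptions.

```python
class TokenBucket:
    """Caps one VM's usage at `rate` units/second, allowing bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0  # time of the previous check, in seconds

    def allow(self, amount: float, now: float) -> bool:
        # Refill for the time elapsed since the last check, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if amount <= self.tokens:
            self.tokens -= amount
            return True
        return False


# One bucket per VM, sized to the 100 Mbps allocation (units: megabits).
noisy = TokenBucket(rate=100, capacity=100)
quiet = TokenBucket(rate=100, capacity=100)

noisy_sent = sum(noisy.allow(50, now=0.0) for _ in range(10))  # floods at t=0
quiet_ok = quiet.allow(100, now=0.0)                             # unaffected by the flood
print(noisy_sent, quiet_ok)  # 2 True
```

Because each VM draws from its own bucket, the noisy VM exhausts only its own budget; the quiet VM's allowance is untouched.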

So how do the VMs work together? Each one is attached to the VMBus, which gives guest VMs access to the real world through the host VM. Remember, only the host VM can access the outside world; the guest VMs cannot, so they communicate through the host VM.
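The routing idea can be modeled in a few lines of Python. This is a toy, in-memory sketch: the real VMBus is a hypervisor-level channel between partitions, whereas `VMBus`, `HostVM`, and `GuestVM` here are hypothetical classes that only show the shape of the data path (guest, then bus, then host, then the outside world).

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class VMBus:
    """Toy model: one message channel per attached guest VM."""

    def __init__(self) -> None:
        self.channels: Dict[str, Deque[str]] = {}

    def attach(self, guest: str) -> None:
        self.channels[guest] = deque()

    def send(self, guest: str, payload: str) -> None:
        if guest not in self.channels:
            raise KeyError(f"{guest} is not attached to the VMBus")
        self.channels[guest].append(payload)


class HostVM:
    """The only partition allowed to reach the outside world."""

    def __init__(self, bus: VMBus) -> None:
        self.bus = bus
        self.sent_outside: List[Tuple[str, str]] = []

    def pump(self) -> None:
        # Drain every guest channel and forward its messages to the (simulated) network.
        for guest, channel in self.bus.channels.items():
            while channel:
                self.sent_outside.append((guest, channel.popleft()))


class GuestVM:
    """Has no network access of its own; everything goes over the VMBus."""

    def __init__(self, name: str, bus: VMBus) -> None:
        self.name = name
        self.bus = bus
        bus.attach(name)

    def send_outside(self, payload: str) -> None:
        self.bus.send(self.name, payload)


bus = VMBus()
host = HostVM(bus)
web = GuestVM("web-0", bus)
worker = GuestVM("worker-0", bus)

web.send_outside("HTTP response")
worker.send_outside("storage request")
print(host.sent_outside)  # []: nothing leaves until the host forwards it
host.pump()
print(host.sent_outside)  # [('web-0', 'HTTP response'), ('worker-0', 'storage request')]
```

The guest classes never hold a reference to the network at all, which mirrors the point above: the host VM is the single gatekeeper for outside traffic.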

So that's it: that's how we configure all the hardware to provide the Windows Azure cloud. I'll follow up on this post (when I get a little time) with a look at how the guest VM is structured internally to support Windows Azure applications (like the web and worker roles).

For a more hardcore treatment of this, check out Erick and Chuck's PDC talk called "Under the Hood: Inside the Windows Azure Hosting Environment".
