"How To": Performance Tuning - High Frequency Queries - Test Harness

If I had a month free I’d like to do a brain dump on how to Load Test, Bulk Up data, find problem queries, refactor them with confidence etc. Then deliver it in one comprehensive guide. But that is unlikely, so I’ve decided to deliver little bits at a time then write an index to pull it all together if I ever finish.

In this post I’ll start with the really obvious. Often there you can think of multiple coding alternatives that yield the same answer. How do you know which code construct is the most efficient? This post offers one technique to help you determine that.

It is useful when comparing different approaches to those High Frequency – Low Impact queries. By that I mean, comparing queries that by themselves really don’t take long, but they are run so often that they begin to represent a significant portion of the load on your system. Another area of use is, testing a code construct in a Select List that might be executed once for each row, in a billion row update they can really influence the time to complete the query.

For Example

One of the columns in your select statement must display a cheque number with 10 digits. Eg: 1,234,567 displays as 0001234567. So you need to append leading zeros if your integer contains fewer than 10 digits. Which approach should you use?

1. Convert your int to a string, Find the length of the string. Subtract the length from 10 & pre-pend that many zeros.

SUBSTRING('0000000000', 1, 10 - LEN(CAST(@ChequeID AS varchar(10)))) + CAST(@ChequeID AS varchar(10))

2. Convert your int to a string, Prepend 10 zeros. Then take the Rightmost 10 chars.

RIGHT( '0000000000' + CAST(@ChequeID AS varchar(10)), 10)

3. Same as Approach 1 but test to see if the Integer converts to exactly 10 digits & only do the Substring manipulation when you have less than 10 digits.

CASE WHEN LEN(CAST(@ChequeID AS varchar(10))) < 10

THEN SUBSTRING('0000000000', 1, 10 - LEN(CAST(@ChequeID AS varchar(10)))) + CAST(@ChequeID AS varchar(10))

ELSE CAST(@ChequeID AS varchar(10))

END

Note:

From a practical point of view you may think "OK, this is trivial stuff, after all there is only a few milliseconds difference anyway".

Answer 1

You may be correct, a good index is likely to be far more important. But remember, multi-table joins can generate millions of rows before they are filtered by the where clause. Saving a few seconds CPU time on each query can have a cumulative effect on overall system performance.

It may not be a huge win, but if it takes just as long to write inefficient code as it does to write more efficient code. Why not learn which code constructs are more efficient?

Answer 2

Try looking at the difference when you call a User Function from your select list. It can make your query 50 times slower. Sometimes performance differences can be massive, these code alternatives can occasionally be much more important than an index.

The basics

For queries that are executed very frequently (High frequency queries), the normal approaches like “SET STATISTICS IO ON” & “SET STATISTICS TIME ON” are inadequate. You get thousands of rows of output all saying “0 ms”. So I’ve reverted back to creating a loop, executing the test many times then comparing the time at the start & end of the loop.

Simple code example

-- ---< Template Testing Loop code >---

DECLARE @cntLoop INT; SET @cntLoop = 1

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

WHILE @cntLoop < 1000 BEGIN

-- < Insert your test code here >

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Test Description Here (ms)';

Go

(NB: If you think the layout of the DECLARE - SET looks odd, I converted the 2008 code back to 2005. In 2005 it is milliseconds faster to Declare all variables in one statement separated by commas. Then set them all in a single SELECT Statement. In 2008 you can Declare & Initialise on the same line. So for readability I've used the SQL 2008 layout but in SQL2005 compatible syntax.)

Taking a Baseline

Unlike Heisenberg we can & should adjust for the overhead of our measuring equipment. So at a minimum we should run 3 tests :-

1. The test loop with nothing inside.

2. The test loop with our orignial TSQL code.

3. The test loop with our hopefully faster TSQL code.

That way we can subtract time it takes to run the test “doing nothing” from the other 2 runs in order to really understand the percentage improvement between our alternatives.

Example - Measure the overhead of your looping code

NB: To make it easier to manage multiple tests I use a SQLCMD Variable to set the maximum Iterations.

-- =========================================================================================

-- Test Harness to compare impact of small High Frequency Operations &/or Things called once for each row in a query.

-- Method: Remember to subtract the overhead of the Test Harness from each result.

-- Remember to Click "Menu: Query->SQLCMD mode" or the SETVAR MAXLOOPS line will give you an error.

-- =========================================================================================

-- Change this value to impact the duration of ALL tests.

:SETVAR MAXLOOPS 2000000

-- =========================================================================================

-- ---< Pre-Test: Calculate Overhead of Looping code >---

DECLARE @cntLoop INT; SET @cntLoop = 1

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

DECLARE @Result VARCHAR(8); -- or whatever data type your test returns.

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SELECT @Result = 1 -- Empty assignment

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Empty MAXLOOPS Overhead';

go

-- =========================================================================================

-- ---< Test 1: Calculate Overhead of Calling existing User Function >---

DECLARE @cntLoop INT; SET @cntLoop = 1

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

DECLARE @Result VARCHAR(8); -- or whatever data type your test returns.

WHILE @cntLoop < $(MAXLOOPS) BEGIN

-- Orignial version

SELECT @Result = [dbo].[uf_MinutestoStringTime] (@cntLoop)

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Original';

go

-- =========================================================================================

-- ---< Test 2: Calculate Overhead of Calling "New Improved" Function >---

-- My results show this takes ~4% of Original ie: 25 Times faster. (or whatever you got)

DECLARE @cntLoop INT; SET @cntLoop = 1

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

DECLARE @Result VARCHAR(8); -- or whatever data type your test returns.

WHILE @cntLoop < $(MAXLOOPS) BEGIN

-- Refactored Improved version

SELECT @Result = [dbo].[uf_MinutestoStringTime2] (@cntLoop)

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Refactored to use Set functions';

go

-- =========================================================================================

Factor in the random crap

Of course the universe will gang up on you to ensure your results are meaningless.

- Clearly it is best if you can run it in a way to avoid load from others. Perhaps on a machine where you are the only user. eg: your workstation or a test server or after hours. But even then you are never alone, all sorts of background threads & tasks are going to partially impact a test run. eg: A SQL Checkpoint process could completely overlap just one of the tests & not the other. The SQL log may run out of space & need to be expanded in the middle of your test. etc

- Another bugger factor is that Windows is interrupt driven, at least every 15ms. (well on x86 PC architectures anyway). Your test could be paused while something else runs. So the smaller the timeframe the greater potential for statistical error. But as mentioned in the prior paragraph, if you loop more times, the longer the timeframe of the tests, the greater potential for impact via other threads.

- Another pain is that the test you try first could warm up the cache or environment & somehow assist the test that runs second. Making it look faster than it really is. Sure you could Free buffers &/or stop & restart the SQL Instance each time, but that slows you down & is probably not typical of the hot environment your queries will most likely be running in.

So some of the ways you can minimise errors are:-

- In the same test run, run each test more than once. I usually run 6 tests:-

a) Test Harness (for the baseline)

b) Test A

c) Test B

d) Test B (again)

e) Test A (again)

f) Test Harness (again)

That way you can check that each of the 3 tests deliver similar results in both executions. If they don’t, the entire test run is invalid. (Or at least can’t be reliably extrapolated) - Like all good statisticians. Run the test group a few times. 30 would be a statistically good number, but 5 is probably adequate especially if each run is giving similar results.

- If you can & it is important enough for you. Remember to try it on multiple machines.

CPU & Memory Access speeds often vary between machine & I have seen coding alternatives which are dramatically different on one machine be not as dramatic on another. (but usually there is a clear winner everywhere or there was only a negligible 1-3% difference anywhere.)

Especially important is different disk capacities. While this type of testing is less useful for I/O intensive queries, I don't know what you will be doing so maybe you need to think about it.

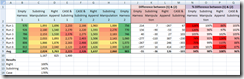

Example Results

These were obtained from running the 10 digit leading zero tests mentioned above.

In the above you can see the raw results in Columns B-I.

Column J shows the difference between the two Right Append Tests (cols D & G)

Cols N - Q shows the % change between each of the similar tests in that run. Where 100% is No difference. Anything greater or lower is bad.

While I'm not really happy with the huge variation within each test run, it is sufficient to make a decision. It is easy to see the difference between each type of test is even more dramatic than the margin of error in each test. So it is reasonable to conclude that the "Right()" approach is more efficient than the "Substring()" technique. And any benefit you thought you'd get by using a case to avoid any unnecessary substring commands is a waste of time.

Bonus TIP:

These results are consistent with every TSQL test I've done. In TSQL there is no point testing to see if an operation needs to occur & then doing it. Just do it to all rows.

For example this query is slower

SELECT CASE WHEN LastName IS NOT NULL THEN UPPER(LastName) END As Lastname

FROM Custs

than this query. The UPPPER() function is perfectly capable of dealing with NULL's.

SELECT UPPER(LastName) As Lastname

FROM Custs

On my machine the later took 100 units of time & the former (with the CASE statement) took 187 Units. Ie 87% slower.

Template Loop Code:

This code is extended slightly from the basic example above. Showing setting any other variables you may need in your test. Together with an improved way to view the results of the query. If you don't assign them to a variable they will be returned to the screen (or calling app), this will slow your test.

You will note the :SETVAR this lets me refer to a variable with a life beyond each batch. So remember to enable SQLCMD in SSMS. Eg: Menu: Query => SQLCMD Mode.

Changing this value will increase / decrease the length of each test. As Windows timer is in 15 ms chunks, it is best to adjust this so that each test runs for at least several seconds.

-- ---< Set Test Loop Counter >---

:SETVAR LOOPCNT 100000 -- < How many times each test will be repeated.

-- ===< Empty Test: Measure Test Harness overhead >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

-- < do nothing here - empty test >

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Empty Harness 1' -- << Meaningful label for test

GO

I also like to display the value returned by my query (ie:@Results) this is a sanity check just to ensure that the codes snippets do actually return identical code. I realise this is only displaying the last value assigned & every value assigned in prior iterations of the loop were overwritten. Normally this would be bad, in this case I don't care, they should all be identical.

Sample Code to test the 3 alternatives discussed in the introduction

Use this as your template.

/* ===========================================================

** TEST HARNESS for small high frequency tests.

** eg: TSQL run for every row in a large query.

** Notes: Before reaching any conclusion.

** 1. You should repeat these tests at least 5 times

** & ensure the variance between each run is small.

** 2. You need to mirror the tests components

** ie: Harness, Test A, Test B, Test B, Test A, Harness.

** Then check if Harness = Harness, Test A = Test A, etc

** If not then the Test Run would likely to be adversely impacted by something.

** 3. It is safer to push the Test Output into a variable then to the screen.

** This removes delay caused but network & client.

** 4. This uses Old-Style Variable initialisation to ensure it works on pre-SQL2008

** 5. Ensure SQLCMD mode = ON

** ===========================================================

*/

SET NOCOUNT ON

-- ---< Set Test Loop Counter >---

:SETVAR LOOPCNT 100000 -- < How many times each test will be repeated.

-- ===< Empty Test: Measure Test Harness overhead >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

-- < do nothing here - empty test >

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Empty Harness 1' -- << Meaningful label for test

GO

-- ===< TEST A: 1st Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = SUBSTRING('0000000000', 1, 10 - LEN(CAST(@CommSourceID AS varchar(10)))) + CAST(@CommSourceID AS varchar(10))

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Substring Manipulation 1'

GO

-- ===< TEST B: 1st Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = RIGHT( '0000000000' + CAST(@CommSourceID AS varchar(10)), 10)

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Right Append 1'

GO

-- ===< TEST C: 1st Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = CASE WHEN LEN(CAST(@CommSourceID AS varchar(10))) < 10

THEN SUBSTRING('0000000000', 1, 10 - LEN(CAST(@CommSourceID AS varchar(10)))) + CAST(@CommSourceID AS varchar(10))

ELSE CAST(@CommSourceID AS varchar(10)) END --AS BarcodeNumber,

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'CASE & Substring 1'

GO

-- ==============================================< Repeat Tests in reverse order >==============

-- ===< TEST C: 2nd Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = CASE WHEN LEN(CAST(@CommSourceID AS varchar(10))) < 10

THEN SUBSTRING('0000000000', 1, 10 - LEN(CAST(@CommSourceID AS varchar(10)))) + CAST(@CommSourceID AS varchar(10))

ELSE CAST(@CommSourceID AS varchar(10)) END --AS BarcodeNumber,

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'CASE & Substring 2'

GO

-- ===< TEST B: 2nd Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = RIGHT( '0000000000' + CAST(@CommSourceID AS varchar(10)), 10)

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Right Append 2'

GO

-- ===< TEST A: 2nd Run >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

SELECT @Results = SUBSTRING('0000000000', 1, 10 - LEN(CAST(@CommSourceID AS varchar(10)))) + CAST(@CommSourceID AS varchar(10))

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Substring Manipulation 2'

GO

-- ===< Empty Test: Measure Test Harness overhead >==============

-- ---< Set Test Specific Variables >---

DECLARE @CommSourceID BIGINT; SELECT @CommSourceID = '1234567'

-- ---< Set Harness Variables >---

DECLARE @Results VARCHAR(20)

DECLARE @cnt INT; SET @cnt = 0

DECLARE @StartTime DATETIME; SET @StartTime = GETDATE()

-- ---< Execute Test many times >---

WHILE @cnt < $(LOOPCNT)

BEGIN

-- ---< Insert your Test Query Below here >---

-- < do nothing here - empty test >

-- ---< Insert your Test Query Above here >---

SET @cnt = @cnt + 1;

END

-- ---< Display Test Results >---

SELECT DATEDIFF(ms, @StartTime, GETDATE()) AS Duration_ms

,@Results AS 'Empty Harness 2' -- << Meaningful label for test

GO

-- ===< END >==============

Example 2: Can trivial User Defined Functions slow you down.

This code sample has been truncated to save space it only shows tests running once.

It compares a User Defined Function,vs the UDF rewritten, vs the rewrite just executed in the query without calling a UDF vs the inline code trimmed to its essentials. While the rewritten UDF code was much faster, calling it as a UDF made the difference negligible. By removing the calling overhead & incorporating it into the TSQL Query that was calling the UDF you start to see real perf gains. Eg ~50 times faster.

Note 1: It is wrong to draw a conclusion from these results that all User Functions are bad & hurt performance. I have seen many occasions where they result in a perf gain. However, they are nearly always slower than putting the same code in-line or taking a huge join out of a UDF & extending the joins in your base table to include these extra table. Ie: It is more efficient to join an extra table to your base query.

NB: An exception to this, is if the UDF permits you to return 1 row based on a parameter, this becomes like a bookmark lookup & can be much more efficient than joining with a huge table.

Note 2: I agree it would be easier to use the SQL 2008 Time data type, this code was written long ago & needed to run on SQL 2000 & SQL 2005 systems.

Note 3: As we iterate thru the loop it is also passing all possible values as parameter to the User Function. Just in case one code path is slower than another.

Original User Function

-- =============================================

-- Author: Some Noddy

-- Create date: 5 June 2005

-- Description: This converts a number of Minutes into a Time string without a date.

-- =============================================

USE TempDB

go

CREATE FUNCTION [dbo].[uf_MinutestoStringTime] (@minutes int)

RETURNS varchar(8)

AS BEGIN

DECLARE @RETURN varchar(8),

@computedminutes int

IF @minutes IS NULL

RETURN ''

IF @minutes > 1440

RETURN ''

IF @minutes < 1

RETURN ''

IF @minutes = 720

SELECT @RETURN = '12:00 PM'

IF @minutes = 1440

SELECT @RETURN = '12:00 AM'

ELSE IF @minutes < 780

SELECT @RETURN = CAST(ABS(@minutes / 60) AS VARCHAR(3))+':'+ right('0'

+ CAST(@minutes - ( ABS(@minutes / 60) * 60) AS VARCHAR(4)),2) + ' AM'

ELSE IF @minutes > 780

BEGIN

SET @computedminutes = @minutes - 720

SELECT @RETURN = cast(abs(@computedminutes / 60) AS VARCHAR(3))+':'+ right('0'

+ CAST(@computedminutes - ( ABS(@computedminutes / 60) * 60) AS VARCHAR(4)),2)

+ CASE WHEN @minutes > 719 THEN ' PM'

WHEN @minutes < 719 THEN ' AM'

ELSE ''

END

END

RETURN @RETURN

END

Refactored User Function

-- =============================================

-- Author: David LEAN

-- Create date: 25 Sept 2009

-- Description: This converts a number of Minutes into a Time string without a date.

-- Note: It is an error to pass more minutes than contained in a single day. ie: 1400

-- It is an error to pass a negative value.

-- 0 = 12:00 AM, 59 = 12:59 AM

-- 719 = 11:59 AM, 720 = 12:00 PM

-- 1440 = 12:00 AM

-- =============================================

USE TempDB

GO

CREATE FUNCTION [dbo].[uf_MinutestoStringTime2] ( @minutes int )

RETURNS varchar(8)

AS BEGIN

IF (@minutes >= 1) AND (@minutes <= 1440)

RETURN ISNULL(LTRIM(STUFF(RIGHT( CONVERT( VARCHAR(20), DATEADD(mi, @minutes, '12:00 AM')) ,7 ), 6,0, ' ')), '')

RETURN ''

END

Sample Test Harness to compare both User Functions and Putting the code inline.

-- ==============================================

-- Test Harness to compare impact of small High Frequency Operations: eg: Things called once for each row in a query.

-- Method: Remember to subtract the overhead of the MAXLOOPS from each result.

-- Remember to Click Menu: Query->SQLCMD mode to allow the MaxMAXLOOPS Counter.

USE TempDB

SET NOCOUNT ON

-- Change this value to impact ALL tests

:SETVAR MAXLOOPS 200000

-- =========================================================================================

-- ---< Pre-Test: Calculate Overhead of Looping code >---

DECLARE @cntLoop INT

SET @cntLoop = 1

DECLARE @Minutes INT

DECLARE @Result VARCHAR(8);

DECLARE @StartTime DATETIME

SET @StartTime = GETDATE()

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SET @Minutes = @cntLoop % 1441

SELECT @Result = 1 -- Empty assignment

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Empty MAXLOOPS Overhead';

go

-- =========================================================================================

-- ---< Test 1: Calculate Overhead of Calling existing User Function >---

DECLARE @cntLoop INT

SET @cntLoop = 1

DECLARE @Minutes INT

DECLARE @Result VARCHAR(8);

DECLARE @StartTime DATETIME

SET @StartTime = GETDATE()

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SET @Minutes = @cntLoop % 1441 -- Ensure you test every possible value.

SELECT @Result = [dbo].[uf_MinutestoStringTime] (@cntLoop)

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'uf_MinutestoStringTime';

go

-- =========================================================================================

-- ---< Test 2: Calculate Overhead of equivalent TSQL Statment >---

-- NB: This returns slightly different results as I believe the existing code gives wrong results between 12:00AM -1:00am & also 12:00pm & 1-pm

-- My results show this takes ~4% of Original ie: 25 Times faster.

DECLARE @cntLoop INT

SET @cntLoop = 1

DECLARE @Minutes INT

DECLARE @Result VARCHAR(8);

DECLARE @StartTime DATETIME

SET @StartTime = GETDATE()

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SET @Minutes = @cntLoop % 1441

SELECT @Result = LTRIM(STUFF(RIGHT( CONVERT( VARCHAR(20), DATEADD(mi, @cntLoop, '12:00 AM')) , 7 ) , 6,0, ' '))

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'Ltrim Inline';

go

-- =========================================================================================

-- ---< Test 3: Calculate Overhead of equivalent TSQL Statment BUT no space between Time & AM/PM >---

-- NB: This returns slightly different results as I believe the existing code gives wrong results between 12:00AM -1:00am & also 12:00pm & 1-pm

-- My results show this takes ~2% of Original ie: 50 Times faster.

DECLARE @cntLoop INT

SET @cntLoop = 1

DECLARE @Minutes INT

DECLARE @Result VARCHAR(8);

DECLARE @StartTime DATETIME

SET @StartTime = GETDATE()

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SET @Minutes = @cntLoop % 1441

SELECT @Result = (RIGHT( CONVERT( VARCHAR(20), DATEADD(mi, @cntLoop, '12:00 AM')) , 7 ) )

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'uf inline';

go

-- =========================================================================================

-- ---< Test 4: Calculate Overhead of equivalent TSQL Statment put into a User Function >---

-- NB: This returns slightly different results as I believe the existing code gives wrong results between 12:00AM -1:00am & also 12:00pm & 1-pm

-- My results show this takes ~98.5% of Original ie: Calling overhead on trivial User Functions can have a huge Performance Impact.

DECLARE @cntLoop INT

SET @cntLoop = 1

DECLARE @Minutes INT

DECLARE @Result VARCHAR(8);

DECLARE @StartTime DATETIME

SET @StartTime = GETDATE()

WHILE @cntLoop < $(MAXLOOPS) BEGIN

SET @Minutes = @cntLoop % 1441

SELECT @Result = [dbo].uf_MinutestoStringTime2 (@cntLoop)

SET @cntLoop = @cntLoop + 1

END

SELECT DATEDIFF(ms, @StartTime, GETDATE() ) AS 'uf_MinutestoStringTime2';

go

-- =========================================================================================

Warnings

This approach has its limitations. It tends to be less useful for comparing if a query works better with or without an index. In that case you really need either :-

- Run the same query against 2 databases, one with the indexes & the other without. OR

- Run the test, create the index & run the other test. OR

- Use Visual Studio for DB Professionals &/or RML Utilities to create & measure the load.

Fortunately using SET STATISTIC IO ON means you should be able to quickly see the winner without resorting to this timing loop approach.

Final Thought

So next time you wonder which is faster :-

GETDATE

() or CURRENT_TIMESTAMP

“ IF OBJECT_ID(N'dbo.MyTable', N'U') IS NOT NULL;

“ or“ IF NOT EXISTS (SELECT * FROM sys.objects where object_id = OBJECT_ID(N'dbo.MyTable') AND Type = N'U');Is there much overhead in putting this code in a USER DEFINED FUNCTION & calling it?

Is it better to check if a variable is NULL before needlessly passing it to a function? ie:

IF @productName IS NOT NULL BEGIN

SET @productName = LTRIM(@productName) SET @productName = RTRIM(@productName)

END

vs

SET @productName = LTRIM( RTRIM(@productName) )

Now you can quickly recycle this code from here & test it out.

(By the way the answers are; No Difference, 1st Option 60% faster, tiny udfs can be 50 times slower than inline., Later alternative is 2.5x faster)

Random Thought for the Post.

In meetings I often hear people say "There's no such thing as a stupid question".

That might be true, however I think "Excuse me Mr Customs man, do you think the heroin in my padded bra makes my breasts look unnaturally large?" must come close. … Especially if you are a man.

I hope you find this useful. As always I’m very interested in your comments, Good or bad. thx Dave