Database Design – Many to Many Join Tables

I was asked why my previous post suggested to “not use an Identity Column as a Key for Many to Many Join Tables”. This post outlines some of the pitfalls I’ve seen.

UPDATED: 17 May 09: To clarify a question about how to do this with higher normal forms <see Blue section at end>. I thought it may be worth sharing.

What are we talking about?

Using a Normalised design approach to your database schema, you will find that Many to Many Relationships between 2 objects are commonly expressed as another table, the Join Table. eg: Consider a Web site which allows you to customise your experience by setting your preference. eg: Smoking/Non-Smoking, Aisle/Window Seat, Rock/Classical/Jazz/Rap, Gay/Straight/Both/Neither. You may create the following tables.

Table 1: Customers (usual Login & demographic fields)

Table 2: Preferences (Pref_ID & Pref_Label)

Join Table: CustomerPreference (CustomerKey, PreferenceKey, & maybe other details about the relationship)

So the question is how could the Join Table be defined & what are the trade-off’s?

Design Alternative 1: Use the Primary Keys of the MANY tables as a Composite Key.

Sample code: Create the Primary Key as a composite key containing the 2 keys of the MANY tables & maybe other info about the relationship this row describes.

-- Define Table with the PRIMARY KEY as the Keys of the two MANY tables CREATE TABLE [dbo].[CustPref]( [CustID] [int] NOT NULL, [PrefID] [int] NOT NULL, [Details] [varchar](50) NULL, CONSTRAINT [PK_CustPref] PRIMARY KEY CLUSTERED ([CustID],[PrefID]) ) GO -- Define Foreign Keys to the two "MANY" tables. ALTER TABLE [dbo].[CustPref] WITH CHECK ADD CONSTRAINT [FK_CustPref_Customer] FOREIGN KEY([CustID]) REFERENCES [dbo].[Customer] ([CustID]) GO ALTER TABLE [dbo].[CustPref] CHECK CONSTRAINT [FK_CustPref_Customer] GO ALTER TABLE [dbo].[CustPref] WITH CHECK ADD CONSTRAINT [FK_CustPref_Preference] FOREIGN KEY([PrefID]) REFERENCES [dbo].[Preference] ([PrefID]) GO ALTER TABLE [dbo].[CustPref] CHECK CONSTRAINT [FK_CustPref_Preference] GO

Design Alternative 2: Give it its own Primary Key. (most likely machine generated)

Sample code: Create Key for the Join Table perhaps Identity Field, the 2 Primary Key of the MANY tables & maybe other info about the relationship this row describes. Optionally put a Unique Constraint on the two MANY keys.

-- Define Table with its own PRIMARY KEY. CREATE TABLE [dbo].[CustPrefWithID]( [CustPrefID] [int] PRIMARY KEY CLUSTERED IDENTITY(1,1) NOT NULL, [CustID] [int] NOT NULL, [PrefID] [int] NOT NULL, [Details] [varchar](50) NULL, CONSTRAINT [IX_CustPrefWithID] UNIQUE NONCLUSTERED ([CustID],[PrefID]) ) GO -- Define Foreign Keys to the two "MANY" tables. ALTER TABLE [dbo].[CustPrefWithID] WITH CHECK ADD CONSTRAINT [FK_CustPrefWithID_Customer] FOREIGN KEY([CustID]) REFERENCES [dbo].[Customer] ([CustID]) GO ALTER TABLE [dbo].[CustPrefWithID] CHECK CONSTRAINT [FK_CustPrefWithID_Customer] GO ALTER TABLE [dbo].[CustPrefWithID] WITH CHECK ADD CONSTRAINT [FK_CustPrefWithID_Preference] FOREIGN KEY([PrefID]) REFERENCES [dbo].[Preference] ([PrefID]) GO ALTER TABLE [dbo].[CustPrefWithID] CHECK CONSTRAINT [FK_CustPrefWithID_Preference] GO

The challenges I see with Option 2 are:-

- You need an extra INT column.

Worst case you’ve just increased the row length by 50%. Which increases the number of pages in the table by ~50%. This increases the table scan reads by ~50% etc. Clearly if you needed other columns to describe this M:M relationship the % increase would be less significant. Join tables are often heavily read, so maximising the number of rows in each page can reduce IO. - If you use the GUI designers, it will make the index on the PK, clustered. It is highly likely that you will get better perf if you cluster on one of the Foreign Keys, in this example the CustID.

- You need an extra Index, to hold the Unique Constraint. That causes extra maintenance, disk storage & Operations staff may need to defrag it.

- Worst still. I commonly see databases where they’ve forgotten to add the Unique Constraint. Because of this they never notice the error when they add the same combination multiple times. This usually means they don’t delete old or redundant relationships. I once saw a 2GB Table that only contained ~400K of Unique rows, the rest were duplicates & other garbage they didn’t need (LastUpdatedBy = “AnonymousWebUser”). This extra baggage had a massive adverse Perf impact.

The arguments for this approach are:-

- Everything can be joined with everything else & so eventually you have these really long composite keys.

For Example: An Education system has Tables for Course, Location, Teacher, Student.

A Class is a M:M join of a Course, Location, Teacher & Time. ie: Many Year 10 Maths Classes at same School in 2nd Period.

An Enrolment is a M:M join of Student & Class.

An Assignment if a few students collaborate on a project it could be a M:M join of a few enrolments & a time. (need to ensure that only students enrolled in the course can submit work)

Clearly the composite key approach can become unwieldy, so at some point you might find it worthwhile creating a Unique Key for a M:M table. But don’t overlook the advantage to carrying all these FK’s around in your design. It gives the optimiser many more alternatives & can save a huge number joins. ie: If I want to create a report listing all the assignments grouped by Teacher, I could just join the Teacher & Assignments table, saving the 4 Table Join; Teacher, Class, Enrolment, Assignment.

Last thoughts

I realise that design is personal & not everyone agrees with my opinions above. I’d love to hear your experience; either for, against or different to the points raised in this post

Hope this helps

Dave

= = = = = = = = < UPDATE 17 May 09 > = = = = = = = =

The QUESTION: Many to Many conundrum – Using DRI to enforce complex constraints / business relationships

Consider this:

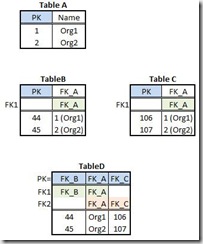

Table A has 2 child tables ( B & C )

The PK of B and C is an identity, and they both have a FK back to Table A.

I have a table D, which is a join table on B and C

Table D has PK of an identity and FK's back to B and C.

Here's some example Data

Table A: Two rows .... Org1 and Org2

Table B: Two rows .... 1 linked to Org 1 and 1 linked to Org 2 ( PK ids are 44(Org1) and 45(Org2) )

Table C: Two rows .... 1 linked to Org 1 and 1 linked to Org 2 ( PK ids are 106(Org1) and 107(Org2) )

I can add a row to Table D which has an 'B' id of "44" and a 'C' id of "107"

This is invalid ... the B id in Table D refers back to Org1 and the C id in Table D refers back to Org2

If I use composite primary keys I can stop this happening, but I cannot see how to stop this if I use identities ...

Alan

- - - -

I’m not clear on what you are saying. I think that you agreeing with me, in that creating an Identity col in Table D is a bad idea, Correct?

(Actually is not a bad idea, but by itself, it is adding overhead & leaving you exposed to data corruption issues.)

Below is a diagram of what I think you described. And a way to use Declarative Referential Integrity (DRI) constraints to enforce the correct relationship with Table A. These sorts of problems are why 4th & 5th Normal Form were introduced.

Dave

- - - -

Thank you very much for your reply, that's cleared up loads for me.

Obviously, you could still have an identity on table D, and then enforce a unique constraint on the the other 3 fields, but you wouldn't be gaining much by this.

Alan

- - - -

True there is no one correct way (there are plenty of incorrect ways or those that will yield potential for bad data)

If your data model has some kind of sub-domain ie: Product Design vs Sales OR (Courses & Classes) vs (Students & Enrolments). Then sometimes having an Identity key on TableD, means you don’t have to carry the 3 cols with all their constraints through all the other tables in your data model.

Eg: In an educational institution: TableA = Classes, TableB = Teachers who teach that Class, TableC = Venues suitable for that class (think Science Labs vs PE/Sport). TableD could be a Class. (An instance of that course/teacher/venue & date). Giving TableD an uniqueID (ie: Class ID), makes it simpler with the next set of tables (Students, Class Enrolments, Assignments, Payments, Exam Results etc) to have just the one FK to TableD.

Clearly you’d have to weigh up the benefits (eg: Less overhead in FK lookups to enforce the DRI, narrower rows) vs disadvantage (eg: need join thru TableD if you want to Group or filter on TableA,B or C)

I’m unclear if you will achieve much with a unique key over the 3 cols unless you also have the 2 FK constraints. But that really depends on your use of data. Good idea if you’ve designed it well with strong normalisation. But if you had other FK’s (ie a Time column) it may be OK to have duplicates, as the uniqueness is in the extra columns. Eg: This car drove over this bridge & the time, is sufficient to do billing. But I agree I’d prefer the unique constraint on all 4 cols.

Thanks for the feedback

Dave

Technorati Tags: SQL Server,TSQL,Database Design