Control the scale of a Logic App

[This article is an incomplete draft. I intend to augment it at a later date. It is posted now to make available information regarding Logic App engine behavior, concurrency control and visual designer support.]

When building Logic Apps that must perform well under high load and load spikes, it is important to consider the limits of each connector used. Take for instance the File System connector's current limit of 100 calls per minute (reference here /en-us/connectors/filesystem/#Limits ). We had a customer perform a load test of a Logic App consisting of a File System new-file trigger, followed by two actions: read file content, then delete file. The customer dropped 500 files in the share and let the Logic App run.

The File System trigger notices the new files and returns a first batch of 10 files. By default the designer also generates split-on behavior for the array output of the trigger:

"triggers": { "When_a_file_is_added_or_modified_(properties_only)": { "inputs": { "host": { "connection": { "name": "@parameters('$connections')['filesystem']['connectionId']" } }, "method": "get", "path": "/datasets/default/triggers/batch/onupdatedfile", "queries": { "folderId": "\\\\someserver\\ShareFolderName\\SubfolderName", "maxFileCount": 10 } }, "recurrence": { "frequency": "Minute", "interval": 1 }, "splitOn": "@triggerBody()", "type": "ApiConnection" } }

So you have one call to the trigger, then ten Logic App instances running Read File Content followed by Delete File in parallel. It doesn't stop there: the File System trigger also indicates in its response to the Logic App engine that there are more items to be picked up, and requests immediate rescheduling. So the engine calls the trigger again, in parallel with the instances already running. The second trigger run returns another batch of 10 files, which the engine splits into another 10 parallel instances. The trigger keeps being called again immediately until it exhausts the backlog of added files. With 500 files, you have 50 trigger calls and 2*500 = 1,000 action calls, all happening in very short order (because Logic Apps is built to run massive numbers of actions in parallel).

The File System connector limit of 100 calls per minute quickly kicks in, and requests start bouncing with the HTTP 429 error response code (see /en-us/azure/api-management/transform-api#protect-an-api-by-adding-rate-limit-policy-throttling for background information on throttling technology - this does not mean that Logic Apps is specifically tied to this implementation of throttling).

So what to do? The concurrency of the Logic App runs needs to be configured according to the specific demands of this Logic App definition.

This is not a new feature, and some of our MVPs have blogged about it here https://mlogdberg.com/logicapps/concurrency-control and here https://toonvanhoutte.wordpress.com/2017/08/29/logic-apps-concurrency-control/

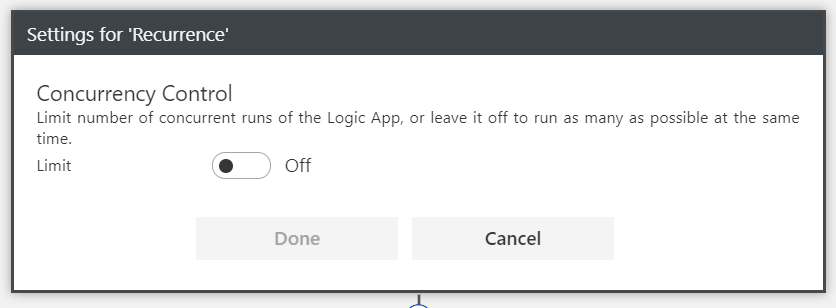

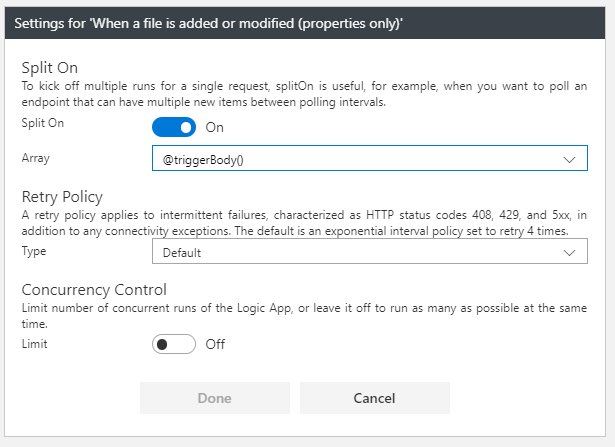

The feature has since been improved: it is now supported in the visual designer, as shown in these two screenshots (for the Recurrence and File System triggers, which is not to say that only those two support it):

How to control the concurrency of a recurring trigger

How to control the concurrency for the File System trigger

Concurrency control is a generalization of the initial single-instance feature: the recurrence trigger skips a run unless fewer than n runs are active (n=1 in the single-instance case). To configure it, open the settings page by clicking the … button at the top right of the trigger and choosing ‘Settings’.
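In code view, the designer setting is stored as a `runtimeConfiguration` block on the trigger. The fragment below is a minimal sketch based on the trigger definition shown earlier. The limit of 5 concurrent runs is an assumed, illustrative value, not a recommendation; the right number depends on how many connector calls each run makes and on the connector's limit.

```json
{
    "triggers": {
        "When_a_file_is_added_or_modified_(properties_only)": {
            "inputs": {
                "host": {
                    "connection": {
                        "name": "@parameters('$connections')['filesystem']['connectionId']"
                    }
                },
                "method": "get",
                "path": "/datasets/default/triggers/batch/onupdatedfile",
                "queries": {
                    "folderId": "\\\\someserver\\ShareFolderName\\SubfolderName",
                    "maxFileCount": 10
                }
            },
            "recurrence": {
                "frequency": "Minute",
                "interval": 1
            },
            "runtimeConfiguration": {
                "concurrency": {
                    "runs": 5
                }
            },
            "splitOn": "@triggerBody()",
            "type": "ApiConnection"
        }
    }
}
```

With a limit like this in place, the engine should hold back new runs once the configured number is active, which spreads the Read File Content and Delete File calls over a longer period instead of issuing them all at once.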

Also consider turning off the Split On behavior and modifying the definitions of the subsequent actions to work on an array of elements at each call instead of a single element. How to do this depends on the specific API used: either it supports array input, or you need to add explicit for-each loops over the array to call the API with a single element at a time.
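As a rough sketch of the second option, the fragment below shows what the workflow could look like once `splitOn` has been removed from the trigger: a single run receives the whole batch and walks through it with a for-each loop whose concurrency is capped. The action name `For_each_file` and the limit of 1 repetition are assumptions chosen for illustration, and the inner `actions` object is deliberately left empty: the Get file content and Delete file actions generated by the designer would go there, reading the current element through `items('For_each_file')`.

```json
{
    "actions": {
        "For_each_file": {
            "type": "Foreach",
            "foreach": "@triggerBody()",
            "runtimeConfiguration": {
                "concurrency": {
                    "repetitions": 1
                }
            },
            "actions": {},
            "runAfter": {}
        }
    }
}
```

Setting `repetitions` to 1 makes the loop process one file at a time, which is the most conservative choice; a higher value trades a shorter run time for more simultaneous calls to the connector.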