Boost WCF performance using adaptive encoding

Authors: Dario Airoldi, Paolo Zavatarelli

Abstract

Plenty of documentation compares the options WCF offers for encoding web service data. Each encoding strategy has advantages and disadvantages that depend on the environment and on runtime conditions.

For example, binary encoding provides the best performance on fast network connections, text encoding may be easier to debug, and a custom compressed encoding may work better for big messages and slow network connections.

On the other hand, a custom compressed encoding is CPU-intensive and may not be appropriate when the server is very busy. It is also useless for small messages and for messages whose data is already compressed (e.g. images in a compressed format such as JPEG).

This raises an interesting question:

- Is it possible to make the choice at runtime, and select the most appropriate encoding for each specific condition?

In fact it is, and such an approach can bring interesting performance benefits!

In this article we’ll show how to create a custom DynamicEncoder that extends existing encoders (such as the WCF binaryMessageEncoding and textMessageEncoding). It selects the most appropriate inner encoder at runtime and applies compression selectively to the encoded stream, to minimize call latency and maximize throughput.

Why adaptive encoding?

In the article listed in the References, Dustin Metzgar presents a very interesting analysis of the effect of applying compression to standard WCF binary encoding.

The numbers make it evident that compression provides a great benefit on medium and low bandwidth network connections, at the cost of an even greater negative impact on high bandwidth network connections.

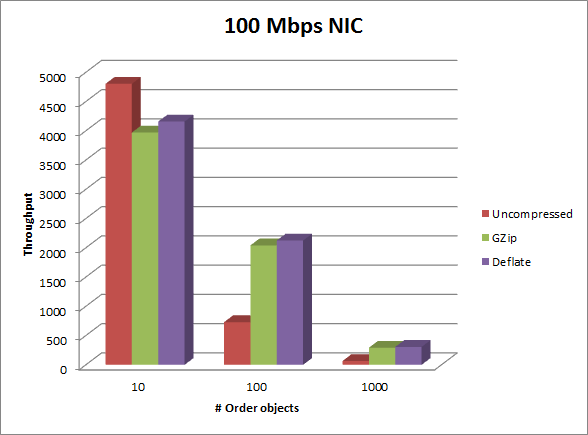

For example, some measures taken by Dustin show that adding compression to a binary encoded stream may provide significant benefit on a 100 Mbps network where the cost of invocations may be reduced by up to 4 times.

Figure 1: Throughput comparison, from Dustin Metzger article, between WCF binary encoding and compressed binary encoding on a 100 Mbps network, for different message sizes

Understandably, the benefit may be far greater in production environments, where it’s common to find network bandwidths close to one megabit per second.

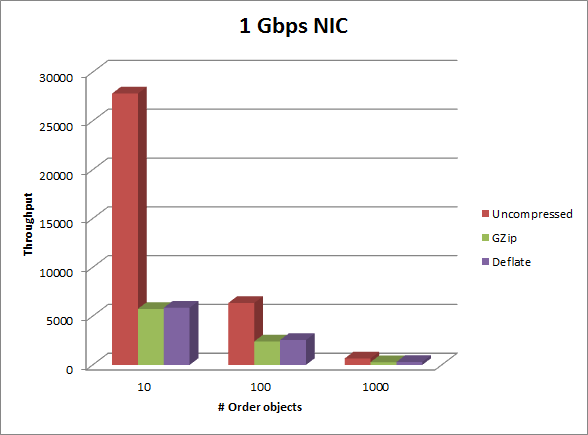

Dustin graph shows however that compressing small messages doesn’t really pay off the CPU and latency spent for compression. Also, the following graph from Dustin article shows that with high bandwidth networks or generally with CPU bound loads the cost of compressed encoding may outgrow the cost of standard binary encoding up to 5 times.

Figure 2: Throughput comparison, from Dustin Metzger article, between WCF binary encoding and compressed binary encoding on a 1 Gbps network, for different message sizes

Moreover, in real production environments you may find messages carrying images, PDF or XPS documents that are already compressed, so compressing their stream at the encoder level wouldn’t provide any benefit.

For these reasons we chose an adaptive approach: we wrote an encoder (DynamicEncoder) that extends binary encoding and applies compression to the encoded stream selectively, based on a few basic rules:

- Compression should be avoided for small messages.

- Compression should be disabled automatically when the server CPU load is too high.

- Compression should be disabled automatically for SOAP actions whose messages show a bad compression ratio.

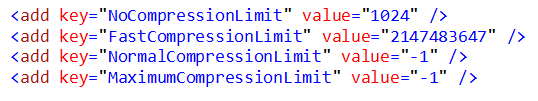

To avoid compressing small messages we chose to allow the configuration of size thresholds for the different compression levels:

In particular, DynamicEncoder avoids compressing messages with size below the value configured as NoCompressionLimit.

In the example above, the encoder is configured to avoid compression for all messages below 1024 bytes and to compress all other messages with Fast compression; the thresholds for Normal and Maximum compression are set to -1, so the encoder ignores them.
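The threshold rule can be illustrated with a short sketch. It is written in Python only for brevity (the actual encoder is a .NET class) and rests on assumptions the article does not state: each level is assumed to apply from its own size threshold upward, the highest enabled level wins, and the names `select_level`, `normal_from` and `maximum_from` are hypothetical. Sizes are expressed in the same unit as the configured thresholds.

```python
from typing import Optional

DISABLED = -1  # the article uses -1 to mark a threshold that is ignored


def select_level(size: int,
                 no_compression_limit: int = 1024,
                 normal_from: int = DISABLED,
                 maximum_from: int = DISABLED) -> Optional[str]:
    """Return None (no compression), "fast", "normal" or "maximum".

    Assumption: a level applies to every message at least as large as its
    threshold, and the strongest enabled level that qualifies is chosen.
    """
    if size < no_compression_limit:
        return None  # small message: compression is not worth the CPU
    level = "fast"  # everything above the limit gets at least Fast
    if normal_from != DISABLED and size >= normal_from:
        level = "normal"
    if maximum_from != DISABLED and size >= maximum_from:
        level = "maximum"
    return level
```

With the defaults, which mirror the example configuration, every message at or above the limit is compressed with the Fast level and the other two levels are never selected.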

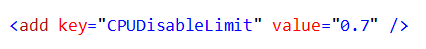

To prevent compression on CPU bound load we chose to allow the configuration of an additional threshold:

When the machine CPU load overtakes the CPUDisableLimit DynamicEncoder falls back progressively to standard binary encoding. In the example above, the encoder is configured to disable compression progressively when the server CPU usage outgrows the value of 70%.
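The article does not say how the progressive fallback is implemented. One plausible reading, sketched below in Python, is that the share of messages that still get compressed shrinks linearly from 100% at the limit down to 0% at full CPU load. The linear ramp and the names `compression_share` and `should_compress` are assumptions made for illustration only.

```python
import random


def compression_share(cpu_percent: float, cpu_disable_limit: float = 70.0) -> float:
    """Fraction of messages that should still be compressed at this CPU load."""
    if cpu_percent <= cpu_disable_limit:
        return 1.0  # below the limit: compress as usual
    if cpu_percent >= 100.0:
        return 0.0  # saturated CPU: plain binary encoding only
    # Assumed linear ramp between the limit and 100% CPU.
    return (100.0 - cpu_percent) / (100.0 - cpu_disable_limit)


def should_compress(cpu_percent: float, cpu_disable_limit: float = 70.0,
                    draw: float = None) -> bool:
    """Decide for a single message; `draw` can be injected to make tests repeatable."""
    if draw is None:
        draw = random.random()  # uniform in [0, 1)
    return draw < compression_share(cpu_percent, cpu_disable_limit)
```

Under these assumptions, a server running at 85% CPU with a 70% limit would compress about half of the messages it would otherwise compress.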

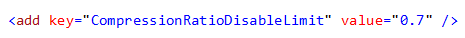

To prevent compression of uncompressible messages we chose to allow configuration of a last setting:

According to this setting, our dynamicEncoder will fall back to standard binary encoding on every invocation that shows an insufficient compression ratio. In the example above, the encoder is configured to disable compression for all soap Actions that show a compression ratio above the value of 70%.

The compression ratio of an outgoing message is estimated from the average ratio produced by the most recent messages of similar size in the same SOAP action. In other words, messages of similar size in the same SOAP action are assumed to produce similar compression ratios.

So, DynamicEncoder starts by compressing messages for all SOAP actions. SOAP actions that produce a compression ratio worse than the threshold defined by "CompressionRatioDisableLimit" are then disabled progressively.

This setting is very important because it allows DynamicEncoder to disable compression on big, complex messages that contain images or documents that are already compressed. Compressing them would waste a lot of CPU time without producing any benefit.
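The bookkeeping behind this rule can be sketched as follows. This is a minimal Python illustration, not the authors' code: the `RatioTracker` class, the power-of-two size buckets used to decide which messages count as "similar size", and the window of eight samples are all assumptions.

```python
from collections import defaultdict, deque


class RatioTracker:
    """Remembers recent compression ratios per (SOAP action, size bucket)."""

    def __init__(self, ratio_disable_limit=0.7, window=8):
        self.ratio_disable_limit = ratio_disable_limit
        # Each key keeps only its last `window` ratios.
        self._history = defaultdict(lambda: deque(maxlen=window))

    @staticmethod
    def _bucket(size):
        # Assumed notion of "similar size": same power-of-two range.
        return max(int(size), 1).bit_length()

    def should_compress(self, action, size):
        samples = self._history.get((action, self._bucket(size)))
        if not samples:
            return True  # no history yet: start by compressing
        return sum(samples) / len(samples) <= self.ratio_disable_limit

    def record(self, action, original_size, compressed_size):
        if original_size <= 0:
            return
        key = (action, self._bucket(original_size))
        self._history[key].append(compressed_size / original_size)
```

Because ratios are stored per size bucket, an action is switched off one size range at a time, which is one way to obtain the progressive behavior described above. Note that in this simple form a disabled bucket never receives new samples, so it stays disabled; a real implementation would probably re-test it from time to time.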

The following section shows some measurements of the performance we obtained with DynamicEncoder. We’ll then delve into a few details of the DynamicEncoder implementation.

Some measures of DynamicEncoder performance

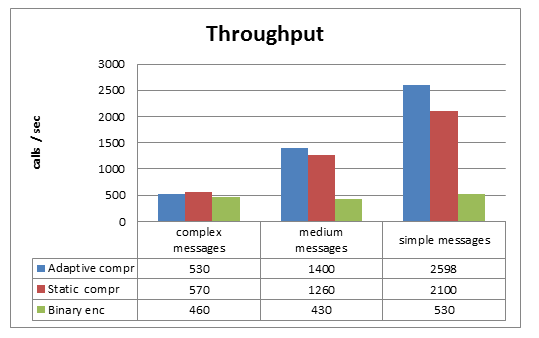

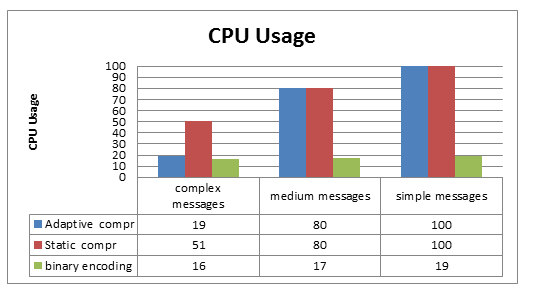

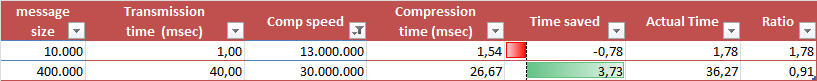

In this section we compare DynamicEncoder performance to WCF binary encoding with different message types and network conditions.

More specifically, we measure the throughput and CPU usage obtained by a sample service returning messages of different sizes and complexities, on a 100 Mbps network and on a 1.5 Mbps network.

The performance measures are taken with 3 separate configurations for the service endpoint:

- Binary encoding: here the service is configured with WCF binary encoding; the values in this measure serve as a comparison to understand the impact of the compression strategies.

- Static compression: in this case the service is configured using dynamicEncoder with CompressionRatioDisableLimit=1 and NoCompressionLimit=0. This way compression is applied to all messages returned by the service to the client.

- Adaptive compression: here the service is configured using dynamicEncoder with CompressionRatioDisableLimit=0.7 and NoCompressionLimit=1024. This way compression is not applied to small messages and it is disabled on messages with bad compression ratio.

The different encoding strategies are compared configuring the sample service to return the following message types:

- Simple messages: these are messages with high redundancy; they can be compressed by ten times on average (i.e. compression ratio close to 0.1).

- Medium complexity messages: these are messages with average redundancy; their compression ratio is close to 0.4.

- Complex messages: these are messages with low redundancy; their compression ratio is between 0.8 and 1.

The measurements were taken with random message sizes ranging from 0.6 KB to 250 KB, on a distribution with most messages close to 150 KB.

On a 100 Mbps network we observed the following results:

Figure 3: Throughput values produced by adaptive compression, static compression and binary encoding on a 100 Mbps network

Figure 4: CPU usage produced by adaptive compression, static compression and binary encoding on a 100 Mbps network

Figure 3 shows that all encoding strategies provide similar throughput for complex messages. Indeed, complex messages produce a bad compression ratio, so only a few bytes are saved by compressing them.

Figure 4 shows, however, that static compression wastes a lot of CPU in this case.

Adaptive compression, instead, gives up compressing complex messages and saves precious CPU resources for better use.

This approach pays off in the other scenarios (and in real production environments), where the CPU is a more precious resource and adaptive compression is able to produce better throughput with lower CPU usage.

You may notice that only the test with simple messages was bound by CPU usage (i.e. CPU usage reached 100%), while all other measurements were bound by network usage. Understandably, on a 1.5 Mbps network the bottleneck is always the network, and the measurements produced a similar pattern with much lower CPU usage and throughput values.

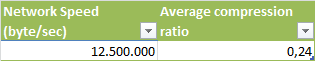

It’s great to get this better throughput but what about latencies?

How does the time spent for compressing messages compare to the time saved during transmission?

Given the network speed, the compression speed of a system and the compression ratio obtained on messages, it is easy to calculate the time saved in transmission and the time spent on compression:

- TransmissionTimeSaved = messageSize * (1 - compressionRatio) / networkSpeed

- CompressionTimeCost = messageSize * 2 / compressionSpeed
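The two formulas translate directly into code. The Python sketch below also derives the break-even compression speed by setting the two expressions equal; that derived function and all names are illustrative additions. Sizes are assumed to be in bytes and speeds in bytes per second, and the factor 2 is read here as covering compression on the sender plus decompression on the receiver, which the article does not state explicitly.

```python
def transmission_time_saved(message_size, compression_ratio, network_speed):
    """Seconds saved on the wire by sending the compressed message."""
    return message_size * (1 - compression_ratio) / network_speed


def compression_time_cost(message_size, compression_speed):
    """Seconds spent compressing and (presumably) decompressing."""
    return message_size * 2 / compression_speed


def net_latency_gain(message_size, compression_ratio, network_speed, compression_speed):
    """Positive when compression lowers the end-to-end latency."""
    saved = transmission_time_saved(message_size, compression_ratio, network_speed)
    return saved - compression_time_cost(message_size, compression_speed)


def break_even_compression_speed(compression_ratio, network_speed):
    """Compression speed above which compressing starts to pay off."""
    if compression_ratio >= 1:
        return float("inf")  # nothing is saved, so compression never pays
    return 2 * network_speed / (1 - compression_ratio)


# Example: a 250 KB message on a 1.5 Mbps link (187,500 bytes/s).
gain = net_latency_gain(250_000, 0.24, 187_500, 50_000_000)
```

The break-even speed does not depend on the message size: with a compression ratio of 0.24 on a 100 Mbps link (12.5 MB/s) it comes to roughly 33 MB/s, so on such a network only messages that the system compresses faster than that benefit in latency.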

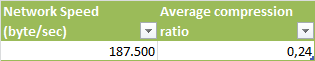

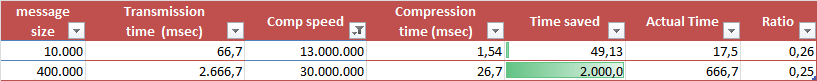

On our test systems we measured a compression speed ranging from a few KB/sec for the smallest messages up to 50 MB/sec for the biggest ones, with an average compression ratio of 0.24.

On a 100 Mbps network you may experience latency benefits only for bigger messages, where the compression speed is higher.

Figure 5: Latency improvement applying compression to messages on 100 Mbps networks

In this scenario, to avoid latency penalties it may be appropriate to raise the NoCompressionLimit setting and apply compression only to bigger messages.

On a 1.5 Mbps network you get more interesting results, with a latency benefit starting from smaller messages.

Figure 6: Latency improvement applying compression to messages on 1.5 Mbps networks

Figure 6 shows that, in this scenario, both messages produce a transmission time improvement of 75%, with an absolute latency improvement of 2 seconds for the bigger one.

Some measures on DynamicEncoder benefits in a production environment

Luckily enough, we could measure some benefits on our production systems too.

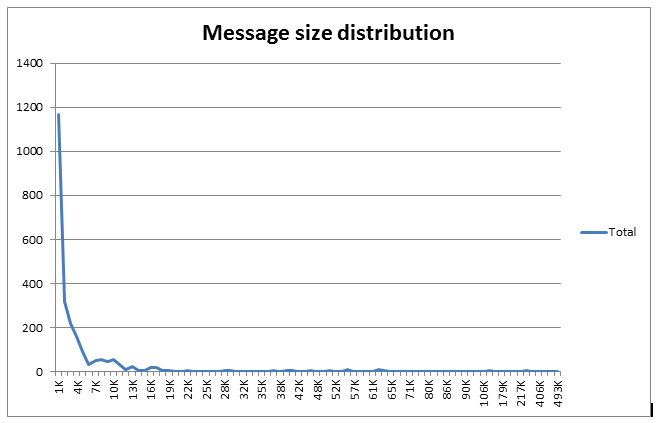

In our case, message sizes range from 100 B to 500 KB, with the following distribution.

Figure 7: Message size distribution for our production environment

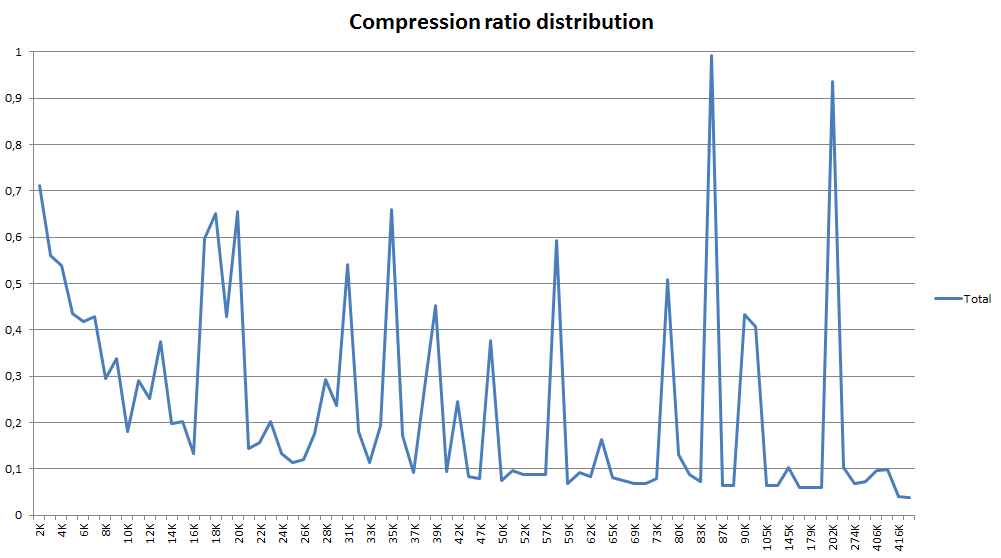

In this scenario, DynamicEncoder ends up applying compression to about half of the messages, with an average compression ratio of 0.22. Overall, the network traffic is reduced by a factor of 0.31.

Figure 8: Message compression ratio obtained by DynamicEncoder in our production environment

It is important to note that in this production environment we tested the compression provided by DynamicEncoder on top of WCF binary encoding. Even though binary encoding is already a quite efficient way to transmit data over the network, DynamicEncoder produced a significant decrease in network traffic.

Clearly, using DynamicEncoder on top of WCF text encoding can be expected to produce even better results.

For most clients, the available bandwidth to the server is around 2 Mbps, so the expected latency improvement ranges from a few milliseconds for the smallest messages up to a couple of seconds for the largest.

WCF Encoders creation process

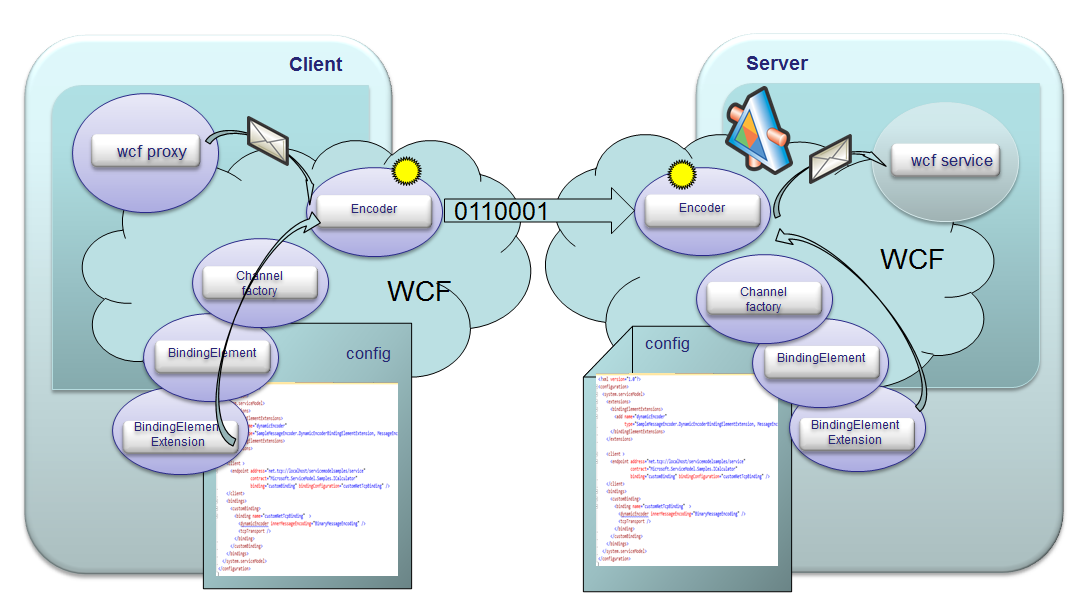

Writing a WCF encoder is a matter of writing four classes, each one creating the next; the last of them (the encoder) does the job of translating messages into the byte arrays sent over the wire.

Two of these classes, a BindingElementExtensionElement class and a MessageEncodingBindingElement class, are used to load the encoder settings from the WCF endpoint configuration. A third class, a MessageEncoderFactory, supports the encoder creation process according to the factory pattern. The last class, a MessageEncoder, is the actual encoder and contains the code to translate WCF messages into byte arrays.

The figure below shows the classes involved in the encoder creation process and the message encoder at work on a WCF method call.

Figure 9: Classes involved in the creation of a WCF encoder

To write our DynamicEncoder we will follow these steps:

- Create a DynamicEncoderBindingElementExtension class

The DynamicEncoderBindingElementExtension class must derive from BindingElementExtensionElement; it loads the encoder settings from the binding configuration and participates in the creation of the binding elements.

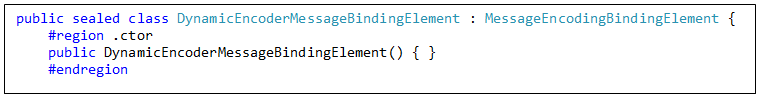

- Create a DynamicEncoderMessageBindingElement class

DynamicEncoderMessageBindingElement must derive from MessageEncodingBindingElement and will be invoked by WCF to obtain MessageEncoderFactory instances.

- Create a DynamicEncoderFactory class

DynamicEncoderFactory class is used by WCF to generate encoder instances according to the factory pattern. The factory is invoked by WCF to obtain message encoder instances whenever needed.

- Create a DynamicEncoder class to do the encoding.

The message encoder job is to translate the WCF Message object into a buffer to be sent over the wire.

Once these classes are written, we’ll be able to activate DynamicEncoder on a WCF endpoint with a few configuration steps:

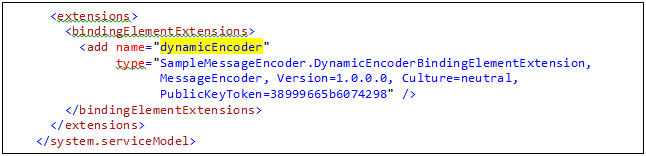

- Declare the DynamicEncoderBindingElementExtension in the extensions node of the application configuration file:

Figure 10: dynamicEncoder definition in the application configuration file

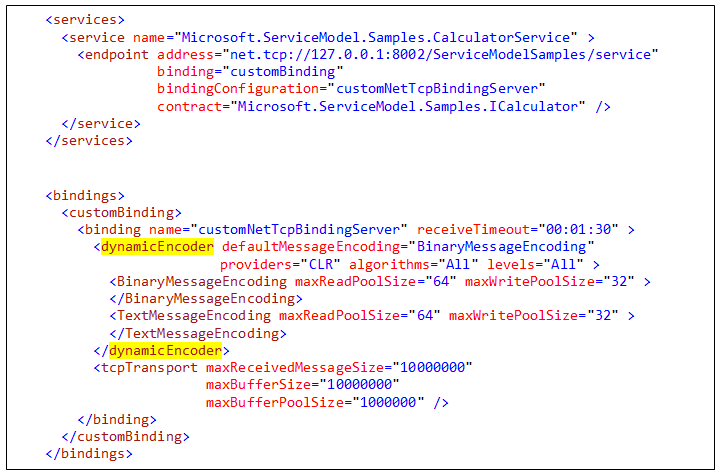

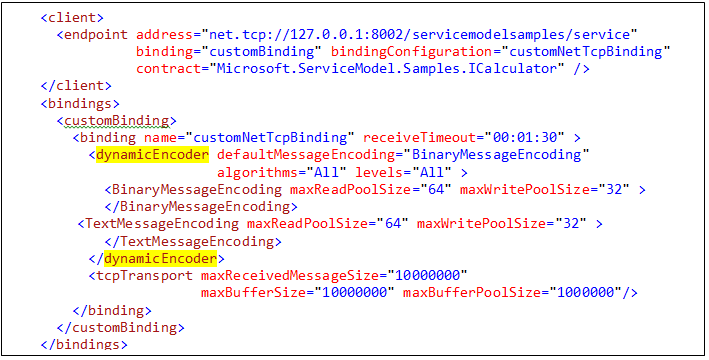

- Add a custom binding configuration to a WCF server endpoint with a reference to our dynamicEncoder binding element extension:

Figure 11: dynamicEncoder binding element extension configured in a server-side endpoint

- Add a symmetric configuration to its corresponding WCF client endpoint:

Figure 12: dynamicEncoder binding element extension configured in a client-side endpoint

In the configuration examples above dynamicEncoder is configured to work with two inner encodings (BinaryMessageEncoding and TextMessageEncoding) using BinaryMessageEncoding as the default.

This means that any client endpoint with BinaryMessageEncoding will be able to talk to the server without changes; of course, only client endpoints configured with our dynamicEncoder will be able to leverage the performance benefits of adaptive encoding and selective compression.

For this reason we’ll say that DynamicEncoder extends the message encoding specified as the default.

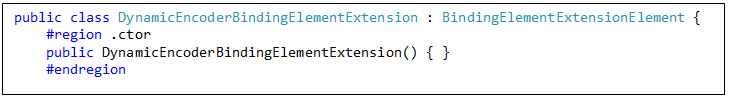

Step 1: Create a DynamicEncoderBindingElementExtension class

The role of a binding element extension is to deserialize a binding element configuration and to expose to WCF the methods that create the associated binding element.

DynamicEncoderBindingElementExtension inherits from BindingElementExtensionElement as shown below:

Figure 13: DynamicEncoderBindingElementExtension definition

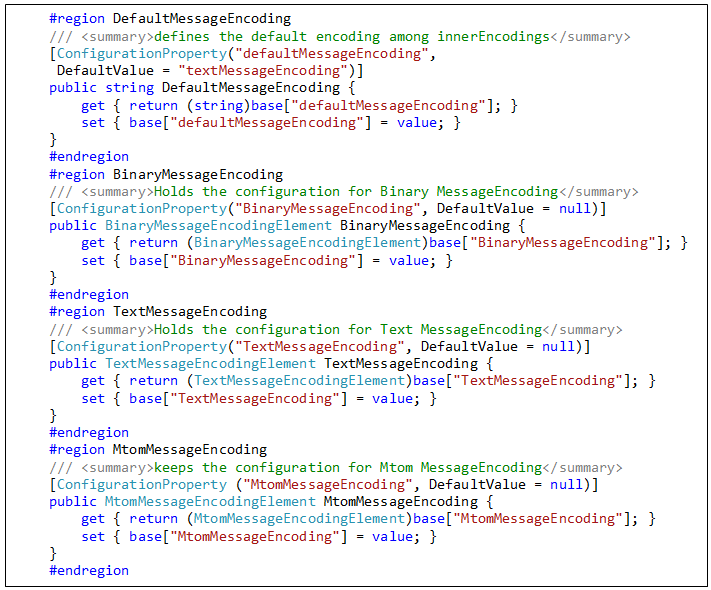

As shown in Figures 11 and 12, we use a few properties to allow nesting the configuration of the inner encodings within the configuration of DynamicEncoderBindingElementExtension:

- BinaryMessageEncoding, TextMessageEncoding and MtomMessageEncoding are used to configure the supported inner message encoders

- DefaultMessageEncoding is used to define the one used by default.

Figure 14: DynamicEncoderBindingElementExtension properties to deserialize inner encodings configuration

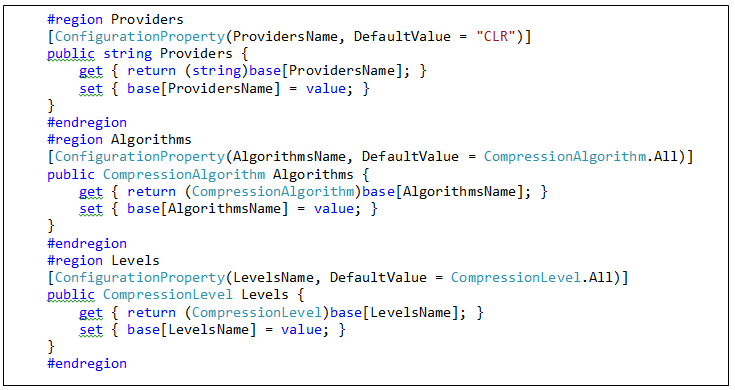

Three additional properties (Providers, Algorithms and Levels) are used to configure the compression providers, algorithms and compression levels allowed for the encoder.

Figure 15: DynamicEncoderBindingElementExtension properties to deserialize compression settings

By default, our DynamicEncoder uses one compression provider (CLR) based on the .NET System.IO.Compression libraries.

Step 2: Create a DynamicEncoderMessageBindingElement class

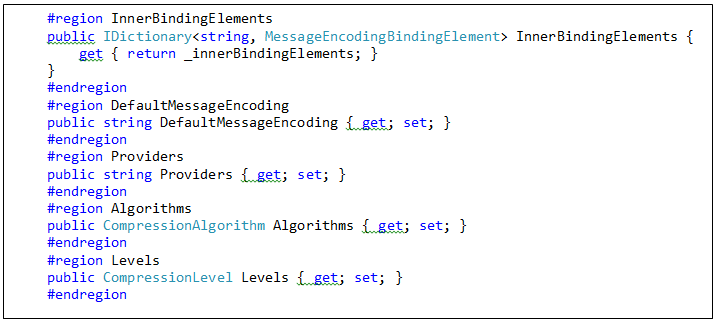

Our DynamicEncoderMessageBindingElement class needs to deliver inner encoders and compression configuration from the binding element extension to the encoder factory and DynamicEncoder instances.

For this reason, we’ll design it with a structure similar to that of DynamicEncoderBindingElementExtension.

Figure 16: DynamicEncoderMessageBindingElement properties to hold inner encodings and compression settings

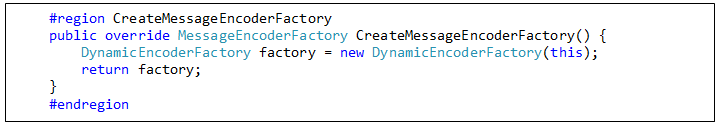

To get encoder factories from the binding element WCF will use the following entry point:

Figure 17: Encoder factory creation entry point

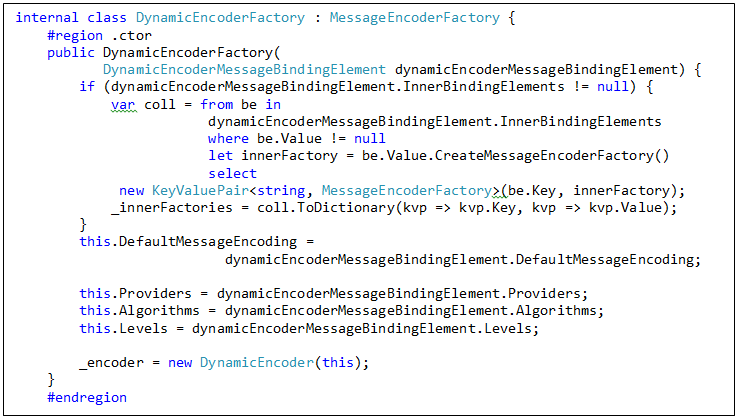

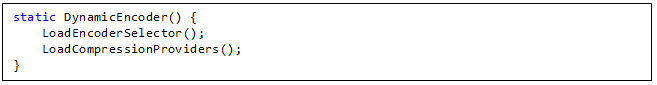

Step 3: Create a DynamicEncoderFactory class

The role of the factory class is to prepare as much as possible in advance, so that encoders can be instantiated efficiently according to the endpoint configuration.

So, we’ll design our factory class to hold factory instances for the inner encoders:

- The InnerFactories collection keeps the encoder factory instances needed to obtain the inner encoders.

- The DefaultMessageEncoding property defines the one used by default.

Inner factories and other configuration information are taken from the binding element received at construction time:

Figure 18: encoder factory construction time

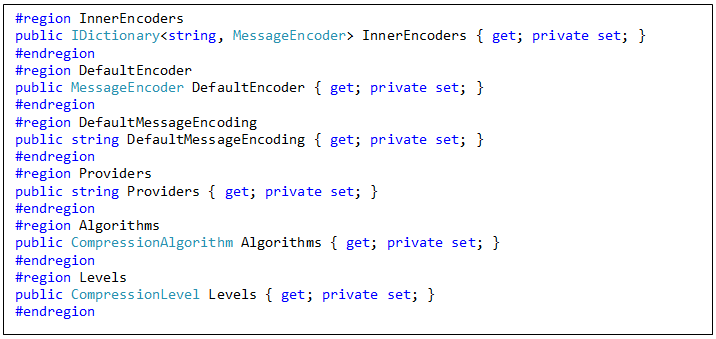

As usual, a few properties are used to hold the inner encoder factory instances and the compression configuration:

Figure 19: inner encoder factory instances and compression configuration in DynamicEncoderFactory

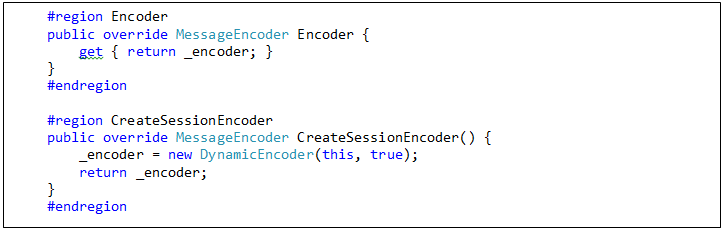

WCF will then be able to get encoder instances by means of the Encoder property (for sessionless connections) and the CreateSessionEncoder method.

Figure 20: DynamicEncoderFactory entry points used to deliver encoder instances to WCF

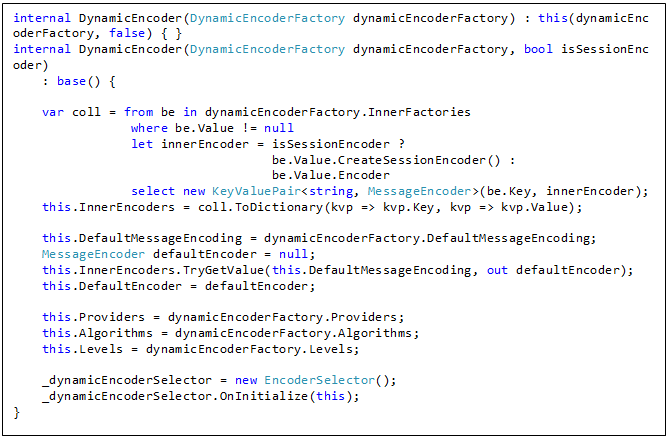

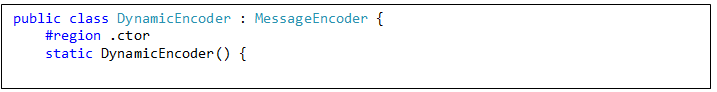

Step 4: Create a DynamicEncoder class to do the encoding

The DynamicEncoder class is the actual WCF encoder class. At every service call, WCF makes sure that our encoder is loaded and initialized by means of the encoder creation process described so far.

Like every WCF encoder, the DynamicEncoder class inherits from the MessageEncoder class.

Not surprisingly, the DynamicEncoder class holds the inner encoder instances, the default encoder and the settings for the compression providers, algorithms and levels configured for the endpoint.

All the information the encoder needs to operate is received at construction time from the factory object.

DynamicEncoder performs a few basic tasks:

- It chooses which encoding should be used to encode the message

- It uses the selected inner encoder to encode the message into a binary stream

- It chooses whether the encoded stream should be compressed and which algorithm should be applied

- If so, it applies compression to the binary stream
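The four tasks form a small pipeline, which the following Python sketch mimics end to end. It is not the .NET implementation: the inner encoders are plain string codecs standing in for the WCF message encoders, and the two-byte header that tells the receiver which encoder and compression flag were used is an assumption, since the article does not describe how this information travels with the message.

```python
import gzip

# Stand-ins for the inner WCF encoders; the real ones serialize Message objects.
INNER_ENCODERS = {
    "binary": lambda text: text.encode("utf-8"),
    "text": lambda text: text.encode("utf-16"),
}
ENCODER_IDS = {"binary": 0, "text": 1}


def write_message(text, select_encoder, select_algorithm):
    encoder_name = select_encoder(text)            # task 1: choose the encoding
    payload = INNER_ENCODERS[encoder_name](text)   # task 2: encode the message
    algorithm = select_algorithm(len(payload))     # task 3: choose compression
    compressed = algorithm == "gzip"
    if compressed:                                 # task 4: compress if selected
        payload = gzip.compress(payload)
    # Assumed framing: byte 0 = encoder id, byte 1 = compression flag.
    return bytes([ENCODER_IDS[encoder_name], int(compressed)]) + payload


def read_message(frame):
    encoder_id, compressed = frame[0], frame[1]
    payload = frame[2:]
    if compressed:
        payload = gzip.decompress(payload)
    return payload.decode("utf-8" if encoder_id == 0 else "utf-16")


# Usage: compress only payloads of at least 1024 bytes.
rule = lambda size: "gzip" if size >= 1024 else None
frame = write_message("hello " * 1000, lambda _: "binary", rule)
```

Keeping the two selection steps as callables mirrors the article's design, where the decisions are delegated to a separate selector object rather than hard-coded in the encoder.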

These tasks are performed in its WriteMessage overrides, by means of some provider classes that are loaded statically.

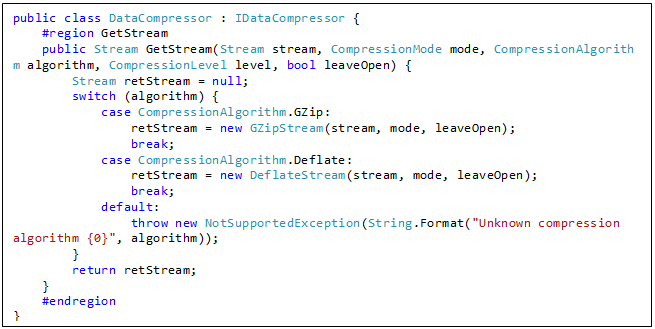

One or more compression providers expose compression algorithms to the encoder by means of the IDataCompressor interface. DynamicEncoder supports multiple compression provider classes; by default it uses the DataCompressor class, a very simple compression provider based on the .NET Framework System.IO.Compression libraries.

DataCompressor provider supports GZip and Deflate algorithms with only one compression level. Additional providers supporting different compression levels may be added easily.
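A provider of this kind is little more than a thin wrapper around two standard algorithms. The Python sketch below shows the idea with the standard `gzip` and `zlib` modules; the method names and the string identifiers for the algorithms are hypothetical, since the members of IDataCompressor are not listed in the article.

```python
import gzip
import zlib


class DataCompressor:
    """Minimal provider exposing GZip and raw Deflate with one fixed level each."""

    ALGORITHMS = ("gzip", "deflate")

    def compress(self, algorithm: str, data: bytes) -> bytes:
        if algorithm == "gzip":
            return gzip.compress(data)
        if algorithm == "deflate":
            # Negative wbits selects a raw Deflate stream (no zlib header).
            compressor = zlib.compressobj(wbits=-zlib.MAX_WBITS)
            return compressor.compress(data) + compressor.flush()
        raise ValueError(f"unsupported algorithm: {algorithm}")

    def decompress(self, algorithm: str, data: bytes) -> bytes:
        if algorithm == "gzip":
            return gzip.decompress(data)
        if algorithm == "deflate":
            return zlib.decompress(data, wbits=-zlib.MAX_WBITS)
        raise ValueError(f"unsupported algorithm: {algorithm}")
```

A provider offering several compression levels could follow the same shape, with the level passed as an extra argument.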

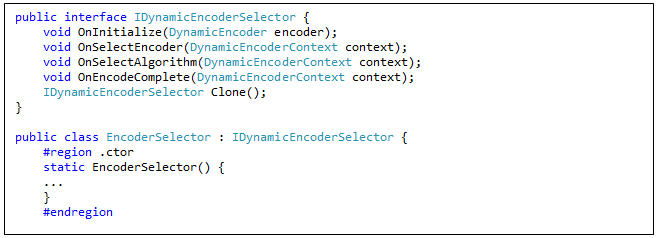

An encoder selector class contains all the rules for choosing the inner encoder and the compression settings to apply to the encoded stream.

The encoder selector exposes these rules to the encoder by means of the IDynamicEncoderSelector interface.

Initialize() and Clone() are used by DynamicEncoder to initialize a selector instance for each WCF channel.

OnSelectEncoder() and OnSelectAlgorithm() are invoked to choose the inner encoding and the compression settings to apply to the encoded stream.

OnEncodeComplete() is invoked after compression, to gather information about the encoded call that can be used to adapt the encoder choices to the runtime conditions.

The default EncoderSelector uses OnEncodeComplete() to gather information about the compression ratio obtained on outgoing messages, and uses this information to honor the CompressionRatioDisableLimit setting.
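The call sequence can be made concrete with a small Python sketch of a selector. The five methods mirror the names listed above, but their parameters, the settings dictionary and the `SimpleSelector` class are all hypothetical; the real interface is a .NET one whose signatures are not given in the article. For brevity this selector remembers only the last ratio seen per action.

```python
import copy
from abc import ABC, abstractmethod
from typing import Optional


class DynamicEncoderSelector(ABC):
    @abstractmethod
    def initialize(self, settings: dict) -> None: ...

    def clone(self):
        # One independent copy per channel, so feedback is not shared.
        return copy.deepcopy(self)

    @abstractmethod
    def on_select_encoder(self, action: str) -> str: ...

    @abstractmethod
    def on_select_algorithm(self, action: str, size: int) -> Optional[str]: ...

    @abstractmethod
    def on_encode_complete(self, action: str, algorithm: Optional[str],
                           original_size: int, encoded_size: int) -> None: ...


class SimpleSelector(DynamicEncoderSelector):
    def initialize(self, settings):
        self.default_encoding = settings.get("default_encoding", "binary")
        self.no_compression_limit = settings.get("no_compression_limit", 1024)
        self.ratio_disable_limit = settings.get("ratio_disable_limit", 0.7)
        self.last_ratio = {}

    def on_select_encoder(self, action):
        return self.default_encoding

    def on_select_algorithm(self, action, size):
        if size < self.no_compression_limit:
            return None
        if self.last_ratio.get(action, 0.0) > self.ratio_disable_limit:
            return None  # this action compressed badly last time
        return "gzip"

    def on_encode_complete(self, action, algorithm, original_size, encoded_size):
        # Only compressed calls say anything about compressibility.
        if algorithm is not None and original_size > 0:
            self.last_ratio[action] = encoded_size / original_size
```

The encoder would call `on_select_encoder`, then `on_select_algorithm`, and finally `on_encode_complete` for every outgoing message, so each decision can draw on what the previous calls observed.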

References

- Dustin Metzgar: Compressing messages in WCF part four - Network performance

https://blogs.msdn.com/b/dmetzgar/archive/2011/03/22/gzipmessageencoder-part-four.aspx