Hurricane Sandy Mash-Up: Hive, SQL Server, PowerPivot & Power View

Small Bites of Big Data

Authors: Cindy Gross, Microsoft SQLCAT PM; Ed Katibah, Microsoft SQLCAT PM

Tech Reviewers: Bob Beauchemin, Developer Skills Partner at SQLSkills; Jeannine Nelson-Takaki, Microsoft Technical Writer; John Sirmon, Microsoft SQLCAT PM; Lara Rubbelke, Microsoft Technical Architect; Murshed Zaman, Microsoft SQLCAT PM

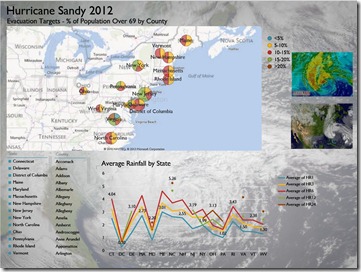

For my #SQLPASS Summit 2012 talk, SQLCAT: Big Data – All Abuzz About Hive (slides available to all | recording available to PASS Summit 2012 attendees), I showed a mash-up of Hive, SQL Server, and Excel data that had been imported into PowerPivot and then displayed via Power View in Excel 2013 (using the new SharePoint-free self-service option). PowerPivot brings together the new world of unstructured data from Hadoop and structured data from more traditional relational and multidimensional sources, which helps you gain new business insights and break down data silos. We were able to take very recent data from Hurricane Sandy, which occurred the week before the PASS Summit, and quickly build a report that pinpoints some initial areas of interest. The report provides a sample foundation for further exploration and additional insights. If you need more background on Big Data, Hadoop, and Hive, please see my previous blogs and talks.

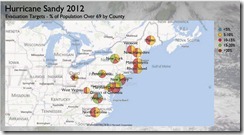

I will walk you through the steps to create the report: loading population demographics (census), weather (NOAA), and lookup table (state abbreviations) data into Hive, SQL Server, Excel, and PowerPivot, and then creating visualizations in Power View to gain additional insights. Our initial goal is to see whether particular geographic areas in the path of Hurricane Sandy might need extra assistance with evacuation. One hypothesis is that people above a given age might be more likely to need assistance, so we want to compare age data with the projected rainfall patterns along the path of the hurricane. Once you see this basic demonstration, you can envision all sorts of additional data sets that could add value to the model, along with different questions to ask of the existing data sets. Data from the CDC, pet ownership figures, housing details, job statistics, zombie predictions, and public utility data could be added to Hive, or pulled directly from existing sources, and then added to the report. Those insights might, for example, help first responders during future storms, help your business understand the ways it can assist after a storm or a major cleanup effort, or aid future research into reducing the damage done by natural disasters.

Prerequisites

- Excel 2013 (a 30-day trial is available)

- SQL Server 2008 R2 or later

- HDInsight Server or HDInsight Service (access to the Service takes a few days, so plan in advance or use Server)

- Hive ODBC Driver (available with HDInsight)

- NOAA Data Set restored to SQL Server 2008 R2 or later (available from SkyDrive)

- Census Data Set (attached at bottom of blog)

SQL Server

Relational Data

One of the data sets for the demo is NOAA weather data that includes spatial characteristics. Since SQL Server has a rich spatial engine, and the data set is well known and highly structured, this data was a good fit for SQL Server. Spatial_Ed has a wiki post on how to create the finished data set from raw NOAA data, and Ed also provides a backup of the completed data set for our use. Take the SQL Server database backup (download here) made available by Ed in his wiki post and restore it to your SQL Server 2008 R2 or later instance as a database called NOAA.

USE [master];

RESTORE DATABASE [NOAA] FROM DISK = N'C:\Demo\BigData\Sandy\NOAA.bak'

WITH MOVE N'NOAA2' TO N'C:\DATA\NOAA2.mdf',

MOVE N'NOAA2_log' TO N'C:\DATA\NOAA2_log.LDF';

GO

Since this data will be used by business users, add a view to the NOAA database with a friendlier name for the rainfall/flashflood data:

USE NOAA;

GO

CREATE VIEW flashflood AS SELECT * FROM [dbo].[nws_ffg7];

Take a look at a few rows of the data. For more information on what is available in the NOAA flash flood data, see Spatial_Ed's wiki post mentioned above.

SELECT TOP 10 * FROM flashflood;
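To see that the view is only a friendlier name over the same rows, here is a small stand-in that uses Python's built-in sqlite3 module instead of SQL Server. This is an assumption made purely so the sketch runs anywhere: SQLite writes LIMIT where T-SQL writes TOP, and the nws_ffg7 columns and values below are invented for illustration.

```python
import sqlite3

# Stand-in for the NOAA database; SQLite replaces SQL Server only so this runs anywhere.
# The column names and values are invented; the real nws_ffg7 table differs.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE nws_ffg7 (KEYID TEXT, State TEXT, County TEXT, HR6 REAL)")
conn.executemany(
    "INSERT INTO nws_ffg7 VALUES (?, ?, ?, ?)",
    [("36047", "New York", "Kings", 2.4), ("34001", "New Jersey", "Atlantic", 3.1)],
)

# The view adds no logic: it is just a friendlier name for the same rows.
conn.execute("CREATE VIEW flashflood AS SELECT * FROM nws_ffg7")

def first_rows(n):
    """Rough equivalent of SELECT TOP n * FROM flashflood, with ORDER BY for a stable sample."""
    return conn.execute("SELECT * FROM flashflood ORDER BY KEYID LIMIT ?", (n,)).fetchall()

print(first_rows(10))
```

Without an ORDER BY, both SELECT TOP in SQL Server and LIMIT in SQLite are free to return any rows, so adding one is a cheap way to get a repeatable sample while exploring.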

Hive

Hive is a part of the Hadoop ecosystem that allows you to create tables and impose structure on Hadoop data. It is available in HDInsight, which is Microsoft’s distribution of Hadoop, sometimes referred to as Hadoop on Azure or Hadoop on Windows. HDInsight is currently available for preview in both Azure (HDInsight Service) and on-premises (HDInsight Server) versions. The HDInsight Server version is lightweight and simple to install; you can even put it on your laptop, since for now it is a single-node installation. Alternatively, you can request access to an Azure Hadoop cluster via HDInsight Service, though this takes a few days in the CTP phase. Hive is automatically installed as part of Hadoop in the preview versions, though the Hive ODBC driver requires a separate setup step. Hive can be described in many ways, but for our purposes the key point is that it provides metadata and structure for Hadoop data, which allows the data to be treated as “just another data source” by an ODBC-compliant application such as Excel. HiveQL, or HQL, looks very similar to other SQL dialects and has similar functionality.

ODBC

Once you have access to an HDInsight cluster, install the Hive ODBC driver on your local machine (where you have Excel 2013). Make sure the platform of the ODBC driver (32-bit or 64-bit) matches the platform of Excel. We recommend the 64-bit versions of both Excel 2013 and the Hive ODBC driver, since PowerPivot can take advantage of the larger memory available in x64. The Hive ODBC driver is available from the Downloads tile in the HDInsight Service (Azure) or HDInsight Server (on-premises) portal. Click the appropriate installer (32-bit or 64-bit) and click through the Microsoft ODBC Driver for Hive Setup. You will end up with version .09 of the Microsoft Hive ODBC driver.

Make sure the Hive ODBC port 10000 is open on your Hadoop cluster (instructions here).

Create a Hive ODBC system DSN pointing to your Hadoop cluster. For this example I used the ODBC Data Source Administrator to create a system DSN called CGrossHOAx64 pointing to my https://HadoopOnAzure.com instance cgross.cloudapp.net with port = 10000 and an account called cgross1. For the on-premises version, you can use localhost for the Host value, and you do not specify an account or password.

Note: If you must use 32-bit Excel and the 32-bit Hive ODBC driver (not recommended) on 64-bit Windows Server 2008 R2 or earlier, or 64-bit Windows 7 or earlier, you have to use the 32-bit version of the ODBC Data Source Administrator to create the system DSN. It is in a location similar to C:\Windows\SysWOW64\odbcad32.exe. In Windows Server 2012 or later, or Windows 8 or later, there is a single ODBC Data Source Administrator for both 32-bit and 64-bit drivers.
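The folder naming on 64-bit Windows is counterintuitive: System32 holds the 64-bit odbcad32.exe and SysWOW64 holds the 32-bit one. The helper below is a hypothetical convenience, not part of any Microsoft tool; it simply encodes that mapping and assumes Windows is installed in C:\Windows.

```python
import ntpath

def odbc_admin_path(driver_bits: int, windows_dir: str = r"C:\Windows") -> str:
    """Return the ODBC Data Source Administrator that matches a driver's bitness.

    Applies to 64-bit Windows: System32 holds the 64-bit odbcad32.exe and
    SysWOW64 holds the 32-bit one.
    """
    if driver_bits == 64:
        folder = "System32"
    elif driver_bits == 32:
        folder = "SysWOW64"
    else:
        raise ValueError("driver_bits must be 32 or 64")
    # ntpath builds Windows-style paths even when this script runs on another OS.
    return ntpath.join(windows_dir, folder, "odbcad32.exe")

# The administrator to use with 32-bit Excel and the 32-bit Hive ODBC driver.
print(odbc_admin_path(32))
```

A system DSN created with the wrong administrator is only visible to applications of the other bitness, which is a common reason Excel cannot find a DSN that clearly exists.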

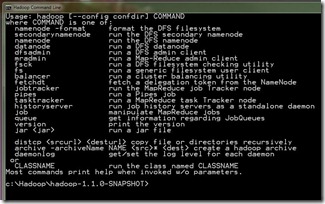

Key Hadoop Pointers

- The Hadoop Command Prompt is installed with HDInsight, and an icon for it is added to the desktop on your head node. When you open it, you will see that it is a basic Windows Command Prompt with some specific settings applied.

- In early versions of HDInsight the Hive directory is not in the system path so you have to manually change to the Hive directory (cd %hive_home%\bin) to run Hive commands from the Hadoop Command Prompt. This issue will be fixed in future versions.

- HDFS is the scale-out storage technology that comes as part of core Hadoop. How it works behind the scenes is covered in great detail elsewhere and is not relevant to this discussion. HDFS is the default storage in HDInsight Server (on-premises) and in the CTP of HDInsight Service (Azure).

- ASV, or Azure Storage Vault, is the Windows Azure implementation of an HDFS-compatible file system that allows HDInsight to use Azure Blob storage. It will be the default for the production HDInsight Service.

Census Data

Census data can vary quite a bit in format. The data is collected in different ways in different countries, it may vary over time, and many companies add value to the data and make extended data sets available in various formats. It could also contain large amounts of data kept for very long periods of time. In our example, which pieces of census data we find useful, and how we look at that data (what structures we impose), may change quite a bit as we explore. The variety of structures and the flexibility in how we look at the data make it a good candidate for Hadoop. Our need to explore the data from common BI tools such as Excel, which expect rows and columns with metadata, leads us to expose it as a Hive table.

Census data was chosen because it includes age ranges, which fits the initial scenario we are building: looking at how many older individuals are in various high-danger areas in the path of the hurricane. I have attached a demo-sized variation of this data set called Census2010.dat at the bottom of the blog. Download that tab-delimited U.S. census data set to the c:\data folder on the head node of your HDInsight cluster. The head node is the machine you installed your single-node HDInsight Server (on-premises) on, such as your laptop, or the machine configured for Remote Desktop access from the portal in your HDInsight Service (Azure) cluster. If you wish to explore the raw census data used in this demo, take a look at this site: https://www.census.gov/popest/research/modified.html.

Next, load the census data to Hadoop’s HDFS storage. Unlike a relational system where the structure has to be well-known and pre-defined before the data is loaded, with Hadoop we have the option to load this data into HDFS before we even create the Hive table!

Note: Your client tool editor or the website may change the dashes or other characters in the following commands to “smart” characters. If you get syntax errors from a direct cut/paste, try pasting into Notepad first, or delete and retype the dash (or other special characters).

I will show you three of the many options for loading the data into the HDFS directory that the external Hive table will point to; choose any one of the three. (In the real world you would have multiple very large files in this directory, but for the purposes of the demo we have one small file.) Note that the directories are created for you automatically as part of the load process. There are many other ways to do the load, including cURL, SFTP, Pig, etc., but the steps below are good for illustration purposes.

1) To load the data into HDFS via the Hadoop Command Prompt, open the Hadoop Command Prompt and type:

hadoop fs -put c:\data\census2010.dat /user/demo/census/census.dat

Let’s break down the command into its component pieces. fs tells Hadoop you have a file system command, put is a data load command, c:\data\census2010.dat is the location of the file within NTFS on Windows, and /user/demo/census/census.dat is the location where we want to put the file within HDFS. Notice that I chose to change the name of the file during the load, mostly for convenience, as the HDFS name I chose is shorter and more generic. HDInsight currently defaults to HDFS, so I don’t have to specify it in the command, but if your system has a different default or you just want to be very specific, you can specify HDFS by adding hdfs:// before the location (hdfs:/// with three slashes when you leave out the system name).

hadoop fs -put c:\data\census2010.dat hdfs:///user/demo/census/census.dat

Nerd point: You can specify the system name (instead of letting it default to localhost) by adding hdfs://localhost (or a specific name, often remote) before the location. This is rarely done, as it is longer and makes the code less portable.

hadoop fs -put c:\data\census2010.dat hdfs://localhost/user/demo/census/census.dat
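The three destinations above differ only in how much of a URI they spell out. As an illustration, Python's urllib.parse (used here only as a generic URI parser; Hadoop has its own path handling) splits them into scheme, authority (the system name), and path. The "&lt;default&gt;" markers are a labeling convention of this sketch, meaning "fall back to the cluster's configured default".

```python
from urllib.parse import urlparse

def describe_destination(dest: str) -> tuple:
    """Split a Hadoop destination into (scheme, authority, path).

    An empty scheme or authority means the cluster's configured default is used.
    """
    parts = urlparse(dest)
    return (parts.scheme or "<default>", parts.netloc or "<default>", parts.path)

for dest in ("/user/demo/census/census.dat",
             "hdfs:///user/demo/census/census.dat",
             "hdfs://localhost/user/demo/census/census.dat"):
    print(dest, "->", describe_destination(dest))
```

The third slash in hdfs:/// is what marks the system name as empty, which is why that form still uses the default NameNode while naming the HDFS scheme explicitly.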

2) If you are using HDInsight Service (Azure), you can easily store the data in Azure Blob storage via ASV. If you haven’t already established a connection from your HDInsight cluster to your Azure Blob storage account, go into your HDInsight cluster settings and enter your Azure Storage account name and key. Then, instead of letting the data load default to HDFS, specify in the last parameter that the data will be loaded to Azure Storage Vault (ASV).

hadoop fs -put c:\data\census2010.dat asv://user/demo/census/census.dat

3) From the JavaScript interactive console in the Hadoop web portal (https://localhost:8085/Cluster/InteractiveJS from the head node) you can use fs.put() and specify either an HDFS or ASV location as your destination (use any ONE of these, not all of them):

/user/demo/census/census.dat

asv://user/demo/census/census.dat

hdfs:///user/demo/census/census.dat

Check from a Hadoop Command Prompt that the file has loaded:

hadoop fs -lsr /user/demo/census

Results will vary a bit, but the output should include the census.dat file: /user/demo/census/census.dat. For example, the Hadoop Command Prompt in HDInsight Server should return this row, with a different date and time and possibly a different user ID:

-rw-r--r-- 1 hadoop supergroup 360058 2013-01-31 17:17 /user/demo/census/census.dat
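If you script the load, you may want to check the listing programmatically. The sketch below assumes the column layout of the sample row above (permissions, replication, owner, group, size, date, time, path); the LsrEntry and parse_lsr_line names are hypothetical, and it assumes directories show "-" for replication, as Hadoop 1.x listings typically do.

```python
from typing import NamedTuple

class LsrEntry(NamedTuple):
    permissions: str
    replication: str  # "-" for directories
    owner: str
    group: str
    size: int
    modified: str
    path: str

    @property
    def is_dir(self) -> bool:
        return self.permissions.startswith("d")

def parse_lsr_line(line: str) -> LsrEntry:
    # maxsplit=7 keeps a path containing spaces in one piece.
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    return LsrEntry(perms, repl, owner, group, int(size), f"{date} {time}", path)

entry = parse_lsr_line(
    "-rw-r--r-- 1 hadoop supergroup 360058 2013-01-31 17:17 /user/demo/census/census.dat"
)
print(entry.path, entry.size, entry.is_dir)
```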

If you want to run Hadoop file system (fs) commands from the JavaScript console, replace "hadoop fs -" with "#".

#lsr /user/demo/census

This tells Hadoop that we want to execute a file system command and recursively list the subdirectories and files under the specified directory. If you chose to use ASV, add that prefix (for the rest of this blog I will assume you know to add asv:// or hdfs:// if necessary):

hadoop fs -lsr asv://user/demo/census

If you need to remove a file or directory for some reason, you can do so with one of the remove commands (rm = remove a file, rmr = recursively remove the directory, all subdirectories, and all files therein):

hadoop fs -rm /user/demo/census/census.dat

hadoop fs -rmr /user/demo/census

For more details on rm or other commands:

hadoop fs -help

hadoop fs -help rm

hadoop fs -help rmr

Hive Table

Create an external Hive table pointing to the Hadoop data set you just loaded. To do this from a command line, open the Hadoop Command Prompt on your HDInsight head node. On an Azure cluster, look for the Remote Desktop tile in the HDInsight Service portal; it lets you connect to the head node, and you can optionally save the connection as an RDP file for later use. In the Hadoop Command Prompt, type the keyword hive to open the interactive Hive command line interface (CLI) console. In some early versions of HDInsight the Hive directory isn’t in the system path, so you may first have to change to the Hive bin directory in the Hadoop Command Prompt:

cd %hive_home%\bin

Now enter the Hive CLI from the Hadoop Command Prompt:

hive

Copy and paste the CREATE statement below into the Hive CLI window, or paste it into the Hive interactive screen of the web portal. If you are using the ASV option, change the LOCATION clause to include asv:// before the rest of the string (asv://user/demo/census). Note that you are specifying the folder (and implicitly all files in it), not a specific file.

CREATE EXTERNAL TABLE census (State_FIPS int, County_FIPS int, Population bigint, Pop_Age_Over_69 bigint, Total_Households bigint, Median_Household_Income bigint, KeyID string) COMMENT 'US Census Data' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TEXTFILE LOCATION '/user/demo/census';

This CREATE command tells Hive to create an EXTERNAL table as opposed to an “internal” or managed table. This means you are in full control of the data loading, data location, and other parameters. Also, the data will NOT be deleted if you drop this table. Next in the command is the column list; each column uses one of the limited number of primitive data types available. The COMMENT is optional, and you can also add a COMMENT for one or more of the columns if you like. ROW FORMAT DELIMITED means we have a delimiter between each column, and FIELDS TERMINATED BY '\t' means the column delimiter is a tab. As of Hive 0.9 the only allowed row delimiter is a newline, and that is the default, so we don’t need to specify it. Then we explicitly say this is a collection of TEXTFILEs stored at the LOCATION /user/demo/census (notice that we are specifying a directory rather than a specific file). We could put either hdfs:/// or asv:// in front of the location; HDFS is currently the default storage location, so its prefix is optional (the default can change!).
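Nothing is checked or converted when the file is loaded; the table definition is applied each time a row is read. The sketch below imitates that schema-on-read idea for one tab-delimited census line. It approximates, and does not reproduce, Hive's delimited-text handling: missing trailing fields, the default \N marker, and values that do not parse as the column type all come back as NULL (None), and extra fields are ignored. The sample line is invented.

```python
# Column names and types copied from the CREATE EXTERNAL TABLE census statement.
# Hive int and bigint both map to Python int here; range limits are not modeled.
CENSUS_SCHEMA = [
    ("State_FIPS", int), ("County_FIPS", int), ("Population", int),
    ("Pop_Age_Over_69", int), ("Total_Households", int),
    ("Median_Household_Income", int), ("KeyID", str),
]

HIVE_NULL = r"\N"  # default NULL marker in delimited text tables

def read_census_line(line: str) -> dict:
    """Impose the census schema on one raw tab-delimited line at read time."""
    fields = line.rstrip("\n").split("\t")
    row = {}
    # Iterating over the schema means any extra trailing fields are ignored.
    for i, (name, cast) in enumerate(CENSUS_SCHEMA):
        raw = fields[i] if i < len(fields) else None  # missing field -> NULL
        if raw is None or raw == HIVE_NULL:
            row[name] = None
            continue
        try:
            row[name] = cast(raw)
        except ValueError:
            row[name] = None  # unparseable value -> NULL instead of an error
    return row

sample = "34\t1\t1000\t84\t400\t55000\t34001"  # invented values, not real census figures
print(read_census_line(sample))
```

Because bad or missing values turn into NULLs rather than load errors, it is worth spot-checking with SELECT ... LIMIT after defining a table, as the next steps do.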

Now check that the table has been created:

SHOW TABLES;

Check the definition of the table:

DESCRIBE FORMATTED census;

And look at a sampling of the data:

SELECT * FROM census LIMIT 10;

You can now choose to leave the Hive CLI (even the exit requires the semicolon to terminate the command):

EXIT;

Excel 2013 Visualizations

Preparation

In Excel 2013 you don’t have to download PowerPivot or Power View, you just need to make sure they are enabled as COM add-ins. All of the below steps assume you are using Excel 2013.

In Excel 2013, make sure the "Hive for Excel", "Microsoft Office PowerPivot for Excel 2013", and "Power View" add-ins are enabled. To do this, click the File tab in the ribbon, then choose Options from the menu on the left. This brings up the Excel Options dialog. Choose Add-ins from the menu on the left. At the very bottom of the box you’ll see a section to Manage the add-ins. Choose “COM Add-ins” and click the Go… button. This brings up the COM Add-Ins dialog, where you can double-check that there are checkmarks next to the Hive, PowerPivot, and Power View add-ins. Click OK.

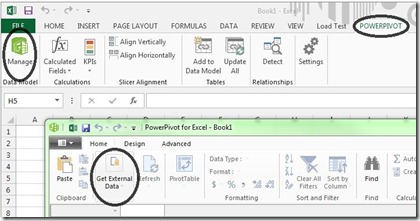

PowerPivot Data

Open a new blank workbook in Excel 2013. We are going to pull some data into PowerPivot, which is an in-memory tabular model engine within Excel. Click on the PowerPivot tab in the ribbon then on the Manage button in the Data Model group on the far left. Click on “Get External Data” in the PowerPivot for Excel dialog.

We’re going to mash up data from multiple sources – SQL, Hive, and Excel.

SQL Data

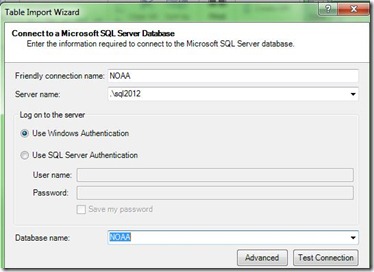

First, let’s get the SQL Server data from the NOAA database. Choose “From Database” then “From SQL Server”. In the Table Import Wizard dialog enter the server and database information:

Friendly connection name = NOAA

Server name = [YourInstanceName such as MyLaptop\SQL2012]

Log on to the server = “Use Windows Authentication”

Database name = NOAA

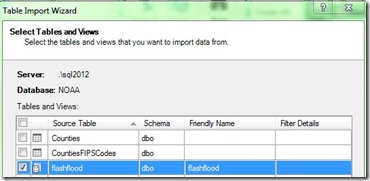

Choose “Next >” then “Select from a list of tables and views to choose the data to import”. Put a checkmark next to the flashflood view we created earlier. Click “Finish”. This will import the flash flood data from SQL Server into the in-memory xVelocity storage used by PowerPivot.

Hive Data

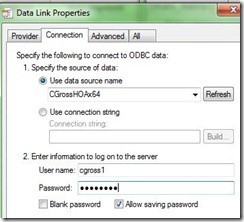

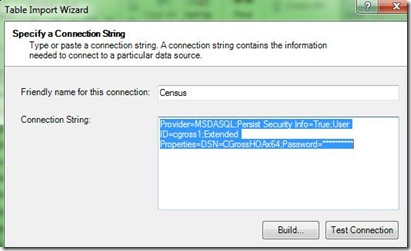

Now let’s load the Hive data. Click on “Get External Data” again. This time choose “From Other Sources” then “Others (OLEDB/ODBC)” and click on the "Next >" button. Enter Census for the friendly name then click on the "Build" button.

Friendly name for this connection = Census

Connection String = “Build”

Provider tab:

OLE DB Provider(s) = “Microsoft OLE DB Provider for ODBC Drivers”

Connection tab:

Use data source name = CGrossHOAx64 [the Hive ODBC DSN created above]

User name = [login for your Hadoop cluster, leave blank for on-premises Nov 2012 preview]

Password = [password for your Hadoop cluster, leave blank for on-premises Nov 2012 preview]

Allow saving password = true (checkmark)

This creates a connection string like this:

Provider=MSDASQL.1;Persist Security Info=True;User ID=cgross1;DSN=CGrossHOAx64;Password=**********
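Each piece of that connection string maps back to a choice in the dialog: MSDASQL is the OLE DB Provider for ODBC Drivers, DSN names the Hive ODBC DSN, and Persist Security Info=True is what keeps the saved password. The hypothetical helper below splits such a string into key/value pairs so you can inspect it; it assumes simple values with no quoting or embedded semicolons, which real connection strings may contain.

```python
def parse_connection_string(conn_str: str) -> dict:
    """Split a simple OLE DB/ODBC-style connection string into key/value pairs.

    Assumes no quoted values and no semicolons inside values.
    """
    pairs = {}
    for part in conn_str.split(";"):
        if not part.strip():
            continue  # tolerate a trailing semicolon
        key, _, value = part.partition("=")  # split on the first "=" only
        pairs[key.strip()] = value.strip()
    return pairs

settings = parse_connection_string(
    "Provider=MSDASQL.1;Persist Security Info=True;User ID=cgross1;"
    "DSN=CGrossHOAx64;Password=**********"
)
print(settings["Provider"], settings["DSN"], settings["User ID"])
```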

Choose “Next >” then “Select from a list of tables and views to choose the data to import”. Choose the census table then click on “Finish”. This loads a copy of the Hive data into PowerPivot. Since Hive is a batch mode process which has the overhead of MapReduce job setup and/or HDFS streaming for every query, the in-memory PowerPivot queries against the copy of the data will be faster than queries that go directly against Hive. If you want an updated copy of the data loaded into PowerPivot you can click on the Refresh icon at the top of the PowerPivot window.

Save the Excel file.

Excel Data

Our last data set is a set of state abbreviations copied into Excel and then accessed from PowerPivot. Go back to your Excel workbook (NOT the PowerPivot for Excel window). Using the tab at the bottom, rename the first sheet to ShortState (just for clarity; the sheet name isn't used anywhere).

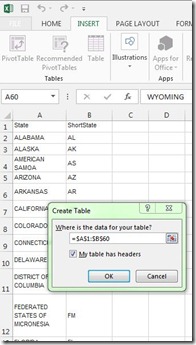

Open https://www.usps.com/send/official-abbreviations.htm in your web browser. Copy the entire "States" table, including the headers, and paste it into the ShortState worksheet in Excel. Change the headers from State/Possession to State and from Abbreviation to ShortState. Highlight the columns and rows you just added (not the entire columns, or you will end up with duplicate values). Go to the Insert tab in the ribbon and click Table in the Tables group on the far left. In the “Create Table” window that pops up, make sure the cells show “=$A$1:$B$60” and that “My table has headers” is checked.

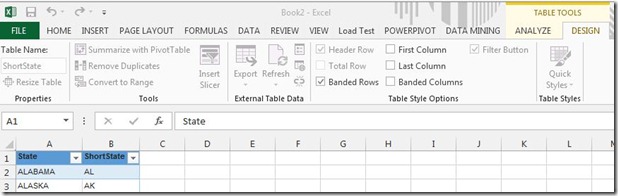

Now you will have a Table Tools ribbon at the top on the far right. Click the Design tab, then in the Properties group in the upper left change the Table Name to ShortState. The fact that the worksheet tab, the table, and one column have the same name is irrelevant; that just seemed less confusing to me at the time. Now you have an Excel-based table called ShortState that is available to be used in the tabular model.

Data Model

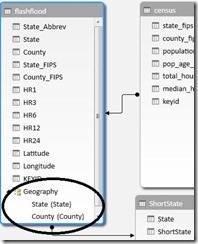

Click on the PowerPivot tab and choose Add to Data Model at the top in the Tables group. This will change the focus to the PowerPivot for Excel window. Go to the Home tab in the PowerPivot for Excel window and click on Diagram View in the View group in the upper right. You can resize and rearrange the tables if that makes it easier to visualize the relationships. Add the following relationships by clicking on the relevant column in the first table then dragging the line to the other column in the second table:

Table flashflood, column State to table ShortState, column State

Table Census, column keyid to table flashflood, column KEYID
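Conceptually, these relationships let each census row look up its county in flashflood and then its abbreviation in ShortState. The sketch below imitates that lookup with plain dictionaries; the miniature tables and their values are invented, and it assumes flashflood.KEYID and ShortState.State are the unique ("one") sides of the relationships, which is what the lookup requires.

```python
# Hypothetical miniature tables; the values are invented for illustration.
short_state = [{"State": "New Jersey", "ShortState": "NJ"}, {"State": "New York", "ShortState": "NY"}]
flashflood = [
    {"KEYID": "34001", "State": "New Jersey", "County": "Atlantic"},
    {"KEYID": "36047", "State": "New York", "County": "Kings"},
]
census = [{"keyid": "34001", "Population": 1000},
          {"keyid": "36047", "Population": 2000},
          {"keyid": "99999", "Population": 50}]

def lookup(rows, column):
    """Index the 'one' side of a relationship; its key column must be unique."""
    index = {}
    for row in rows:
        key = row[column]
        if key in index:
            raise ValueError(f"{column} is not unique: {key!r}")
        index[key] = row
    return index

def related(census, flashflood, short_state):
    """Follow census.keyid -> flashflood.KEYID -> ShortState.State for each census row."""
    ff_by_key = lookup(flashflood, "KEYID")
    abbr_by_state = lookup(short_state, "State")
    for c in census:
        ff = ff_by_key.get(c["keyid"], {})  # no match -> blank related values
        st = abbr_by_state.get(ff.get("State"), {})
        yield {**c, "State": ff.get("State"), "County": ff.get("County"),
               "ShortState": st.get("ShortState")}

for row in related(census, flashflood, short_state):
    print(row)
```

The unmatched "99999" row shows why blank State and County values can appear in reports built on the model.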

Click on the flashflood table and then on the Create Hierarchy button in the upper right of the table (as circled in the image above). Name the hierarchy Geography and drag State then County under it.

Save the Excel file. You may need to click on “enable content” if you close/reopen the file.

Calculations

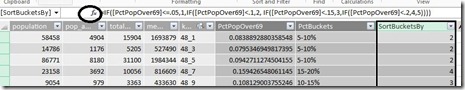

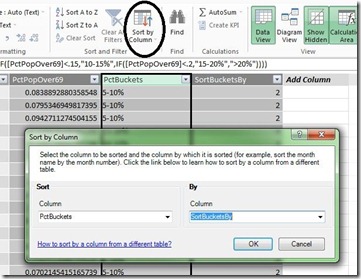

In the PowerPivot for Excel window, we’re going to add some calculated columns for later use. Click the Data View button in the View group at the top. You should now see three tabs at the bottom, one for each table in the model. Click the census table, then right-click “Add Column” at the far right of the column headers and choose to insert a column. This creates a new column named CalculatedColumn1. In the formula bar that opens above the column headers, paste in “=[Pop_Age_Over_69]/[Population]” (without the quotes). This populates the new column with values such as 0.0838892880358548. Right-click the column, choose “Rename Column”, and name it PctPopOver69, since it gives us the share of the total population that is over 69 years old. That’s the first formula below; repeat those steps for the second and third formulas. PctBuckets groups the output into more meaningful buckets, while SortBucketsBy gives us a logical ordering of the buckets. Since most of the values are below 20%, the buckets are skewed toward that lower range, which is where the data actually varies.

- PctPopOver69=[Pop_Age_Over_69]/[Population]

- PctBuckets=IF([PctPopOver69]<=.05,"<5%",IF([PctPopOver69]<.1,"5-10%", IF([PctPopOver69]<.15,"10-15%",IF([PctPopOver69]<.2,"15-20%",">20%"))))

- SortBucketsBy=IF([PctPopOver69]<=.05,1,IF([PctPopOver69]<.1,2, IF([PctPopOver69]<.15,3,IF([PctPopOver69]<.2,4,5))))
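To check the bucketing logic outside Excel, the three formulas can be mirrored in Python. This is a sketch for reasoning about the thresholds, not a replacement for the DAX; it assumes Population is greater than zero (Python raises ZeroDivisionError otherwise).

```python
def pct_pop_over_69(pop_age_over_69: int, population: int) -> float:
    """PctPopOver69 = [Pop_Age_Over_69]/[Population]; assumes population > 0."""
    return pop_age_over_69 / population

def pct_bucket(pct: float) -> tuple:
    """Return (PctBuckets, SortBucketsBy), testing thresholds in the same order as the nested IFs."""
    if pct <= 0.05:
        return "<5%", 1
    if pct < 0.10:
        return "5-10%", 2
    if pct < 0.15:
        return "10-15%", 3
    if pct < 0.20:
        return "15-20%", 4
    return ">20%", 5

print(pct_bucket(0.0838892880358548))
```

Working through the boundaries shows a quirk of the formulas as written: the first test uses <= while the others use <, so a county at exactly 5% lands in "<5%" and one at exactly 20% lands in ">20%".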

Now let’s use those new calculated columns. Highlight the new PctBuckets column (the 2nd one you just added) and click on the Sort by Column button in the Sort and Filter group. Pick Sort By Column and choose to sort PctBuckets by SortBucketsBy. This affects the order of data in the visualizations we will create later.
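Sort by Column matters because the bucket labels are text. The snippet below uses Python's code-point string ordering as a stand-in for a plain text sort (PowerPivot's collation may order the symbols differently, but it still would not follow the numeric ranges) and compares it with ordering by the SortBucketsBy values.

```python
# Bucket labels in an arbitrary order, plus the SortBucketsBy value for each one.
labels = ["15-20%", "<5%", ">20%", "5-10%", "10-15%"]
sort_buckets_by = {"<5%": 1, "5-10%": 2, "10-15%": 3, "15-20%": 4, ">20%": 5}

alphabetical = sorted(labels)                      # plain text ordering
logical = sorted(labels, key=sort_buckets_by.get)  # what Sort by Column achieves

print(alphabetical)  # ['10-15%', '15-20%', '5-10%', '<5%', '>20%']
print(logical)       # ['<5%', '5-10%', '10-15%', '15-20%', '>20%']
```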

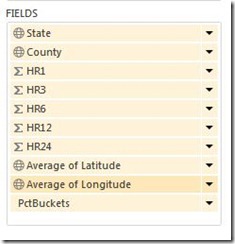

Since some of the columns are purely for calculations or IT-type use, and would confuse end users and clutter the field picker as they create visualizations, we’re going to hide them. In the Census table, right-click the PctPopOver69 column and choose “Hide from Client Tools”. Repeat for census.SortBucketsBy, census.keyid, census.State_FIPS, census.County_FIPS, flashflood.State_FIPS, flashflood.County_FIPS, and flashflood.KEYID. Our PowerPivot data is now complete, so we can close the PowerPivot for Excel window. Note that the Excel workbook only shows the ShortState tab. That’s expected, as this is the Excel source data, not the in-memory PowerPivot tables that we’ve created from our three data sources. All of our data is now in the workbook’s data model and ready to use in a report.

Save the Excel file.

Power View

Now let’s use Power View to create some visualizations. In the main Excel workbook, click on the Insert tab in the ribbon and then under the Reports group (near the middle of the ribbon) click on Power View.

This creates a new tab in your workbook for your report. Rename the tab to SandyEvacuation. In the Power View Fields task pane on the right, make sure you choose All and not Active so that every table in the model is visible. Uncheck any fields that have already been added to the report.

Where it says “Click here to add a title”, enter a single space (we’ll create our own title in a different location later). In the Power View tab, click the Themes button in the Themes group and choose the Solstice theme (seventh from the end). This theme shows up well on the dark, stormy background we will add later, and its colors provide good indicators for our numbers.

Now let's add some data to the report. In the Power View Fields task pane, expand the flashflood table and select the State checkbox, then the County checkbox. This creates a new table on the left. Add HR1, HR3, HR6, HR12, and HR24 from the flashflood table, in that order (you can change the order later at the bottom of the task pane). Add Latitude and Longitude from flashflood. Expand census and click PctBuckets. Now we have the data we need to build a map-based visualization that shows rainfall across a geographic area.

Note: If you get a message at any point asking you to "enable content", click on the button in the message to do so.

Map

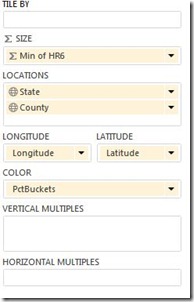

In the Power View Fields task pane go to the Fields section at the bottom. Click on the arrow to the right of Latitude and Longitude and change both from sum to average.
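Averaging matters because a county or state usually aggregates several rows: summing their latitudes produces a number that is not a location at all, while averaging gives a rough central point. The rows below are invented, and a simple mean is only a reasonable center for small areas away from the 180th meridian.

```python
from statistics import mean

def map_point(rows):
    """Average the coordinates of several rows to get one plottable point."""
    return (mean(r["Latitude"] for r in rows), mean(r["Longitude"] for r in rows))

# Hypothetical: three flash flood rows that fall in the same county.
county_rows = [
    {"Latitude": 39.45, "Longitude": -74.60},
    {"Latitude": 39.47, "Longitude": -74.55},
    {"Latitude": 39.50, "Longitude": -74.50},
]
lat, lon = map_point(county_rows)
print(sum(r["Latitude"] for r in county_rows))  # a "latitude" above 90 cannot be plotted
print(lat, lon)
```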

With the focus on the table in the main window, click the Design tab, then Map within the Switch Visualization group on the left. This creates one map per state and changes the choices available in the task pane. Change the following fields at the bottom of the screen to get a map that shows the path of Hurricane Sandy as it crosses the northeastern United States.

TILE BY = [blank]

SIZE = HR6 (drag it from the field list to replace HR1, set it to minimum)

LOCATIONS = Remove the existing field and drag down the Geography Hierarchy from flashflood

LONGITUDE = Longitude

LATITUDE = Latitude

COLOR = PctBuckets

VERTICAL MULTIPLES = [remove field so it's blank]

HORIZONTAL MULTIPLES = [blank]

With the focus still on the map, click the LAYOUT tab in the ribbon. Set the following values for the buttons in the Labels group; they apply to this particular visualization (the map):

Title = none

Legend = Show Legend at Right

Data Labels = Center

Map Background = Road Map Background

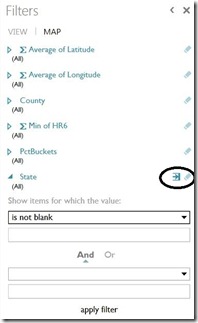

Go to the Power View tab and make sure the focus is on the map. Click Map in the Filters section to the right of the report. Click the icon to the far right of State. This adds another icon (a right arrow) for “Advanced filter mode”; click it. Choose “is not blank” from the first drop-down and click “apply filter” at the bottom. Repeat for County. This eliminates irrelevant data with no matching rows.
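The “is not blank” filter keeps only rows where every filtered column has a value. The sketch below approximates that rule, treating None and empty or whitespace-only strings as blank (an assumption; Power View's exact notion of blank may differ). The rows are invented; the second one stands in for a row with no match across a relationship.

```python
def is_blank(value) -> bool:
    """Approximate 'blank': None, or an empty/whitespace-only string."""
    return value is None or (isinstance(value, str) and not value.strip())

def apply_not_blank_filter(rows, *columns):
    """Keep only rows whose listed columns all have a value, like 'is not blank' on State and County."""
    return [row for row in rows if not any(is_blank(row.get(col)) for col in columns)]

rows = [
    {"State": "New Jersey", "County": "Atlantic"},
    {"State": None, "County": None},   # e.g. a row with no match across a relationship
    {"State": "New York", "County": ""},
]
print(apply_not_blank_filter(rows, "State", "County"))
```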

Make it Pretty

Go to the Power View tab so we can add some text. In the Insert group, click Text Box. A box is inserted somewhere on the page; paste in “Evacuation Targets - % of Population Over 69 by County” (without the quotes). Size the box into a long rectangle with all the text on one line, then move it above the map. Repeat for a text box that says “Hurricane Sandy 2012” and move it above the previous text box. In the TEXT tab, set the text size of this last box to 24 (resize the box if needed).

Hint: To move an object on the report, hover over the object until a hand appears then drag the object.

Download some Hurricane Sandy pictures. In the Power View tab, go to the Background Image section. Choose Set Image and insert one of your hurricane images. Set Transparency to 70%, or whatever looks best without overwhelming the report. You may need to change the Image Position to Stretch. In the Themes group of the Power View tab, set Background to “Light1 Center Gradient” (gray fading in to the center). Move the two titles and the map as far into the upper-left corner as you can to leave room for the other objects. If you like, reduce the size of the map a bit (hover near a corner until you get a double-headed arrow) and add a couple more pictures on the right side (Insert group, Picture button).

Save the Excel file.

Slicers

Now let’s create some slicers and add another chart to make it more visually appealing and useful.

Click in the empty area under the map; we are going to create a new object. Click flashflood.State in the task pane to add that column to a new table. Click the scroll bar on the right side of the table to make sure the focus is on the table, then click Slicer in the Design tab. Staying in the Design tab, under the Text section click the “A with a down arrow” button to reduce the font by one size. If necessary, resize the table slightly so it doesn’t fall off the page or cut off a state name, but still shows a handful of rows. Expand Filters on the right side of the screen and drag flashflood.State to the filters section. Choose the Advanced button, “is not blank”, and “apply filter”. Repeat these steps to create a separate slicer for County. Now when you click a particular county in the slicer, for example, everything on the report is filtered to that one county.

Save the Excel file.

Rainfall Chart

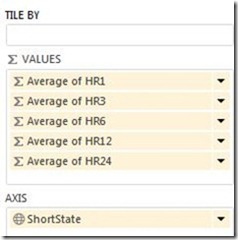

Click in the empty space to the right of the new County slicer. Add ShortState.ShortState and 5 separate flashflood columns, one for each time increment collected: HR1, HR3, HR6, HR12, HR24. In the Design tab choose “Other Chart” then Line. In the “Power View Fields” task pane make sure TILE BY is empty, VALUES for HR1-24 = AVERAGE, and AXIS = ShortState.
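With AXIS = ShortState and each HR column set to AVERAGE, the chart plots one average per state for each time increment. The sketch below computes the same kind of table from invented rows; it assumes missing values are skipped rather than counted as zero, as DAX AVERAGE does with blanks.

```python
from collections import defaultdict
from statistics import mean

HOURS = ("HR1", "HR3", "HR6", "HR12", "HR24")

def average_rainfall_by_state(rows):
    """Average each HRn column per ShortState, skipping missing values."""
    grouped = defaultdict(lambda: defaultdict(list))
    for row in rows:
        for hour in HOURS:
            value = row.get(hour)
            if value is not None:
                grouped[row["ShortState"]][hour].append(value)
    # States sorted alphabetically (like the chart axis); hours kept in the listed order.
    return {
        state: {hour: mean(by_hour[hour]) for hour in HOURS if hour in by_hour}
        for state, by_hour in sorted(grouped.items())
    }

# Invented sample rows.
rows = [
    {"ShortState": "NJ", "HR1": 1.0, "HR6": 3.0},
    {"ShortState": "NJ", "HR1": 2.0, "HR6": None},
    {"ShortState": "CT", "HR1": 0.5},
]
print(average_rainfall_by_state(rows))
```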

Reduce the font size by one in the Text group of the Design tab, then stretch the chart to the right so that all values from CT to WV show up. In the Power View tab, click Text Box in the Insert group, move the box above the new chart, and type the text “Average Rainfall by State”.

Make sure the focus is on the rainfall chart and go to the LAYOUT tab:

Title = None

Legend = Show Legend at Right

Data Labels = Above

Save the Excel file.

That’s the completed report, built with the same steps as the report I demoed at PASS Summit 2012 (download that report from the bottom of the blog). Try changing the SIZE field to HR1 (rainfall projection for the next hour), HR6, etc. Click through the state map values to see the more detailed county-level values. Click a slicer value to see how it affects the map.

What you see in this report is a combination of SQL Server (structured) data and Hive (originally unstructured) data, all mashed up into a single report so the end user doesn't even have to know about the data sources. You can add more types of data, change the granularity of the data, or change how you visualize the data as you explore. The Hive data in particular is very flexible: you can change the structure you've imposed without reloading the data, and add new data sources without lots of pre-planning. Using BI client tools to access the rich additional data that companies are starting to archive and mine in Big Data technologies such as HDInsight is very powerful, and I hope you are starting to see the possibilities for using Hive in your own work.

I hope you’ve enjoyed this small bite of big data! Look for more blog posts soon on the samples and other activities.

Note: The CTP and TAP programs are available for a limited time. Details of the usage and the availability of the CTP may change rapidly.
