Load SQL Server BCP Data to Hive

Small Bites of Big Data

Cindy Gross, SQLCAT PM

As you start learning more about Hadoop, you may want to see how the same data and queries work in SQL Server and in Hadoop. There are various ways to do this. For now I’ll show you an approach that uses some of your existing SQL Server skills (BCP) and some new Hadoop skills (basic Hadoop FS and Hive commands). There are other methods, such as FTP, cURL, and Sqoop, that I won’t cover right now. Also, if you want to load data from the Azure DataMarket you can follow these instructions.

Today we’ll walk through making a copy of the FactProductInventory table from AdventureWorksDW2012 on a SQL Server 2012 instance. The T-SQL below generates a BCP output command for a single table in the current SQL Server database context; the generated command writes tab-delimited data to c:\temp. To generate commands for all tables in the database, comment out the @tablename references in the SELECT and WHERE clauses.

USE [AdventureWorksDW2012];

DECLARE @servername sysname, @dbname sysname, @tablename sysname, @outputdir sysname

SELECT @servername = @@SERVERNAME

,@dbname = DB_NAME()

,@outputdir = 'c:\temp\'

,@tablename = 'FactProductInventory'

SELECT 'bcp ' + OBJECT_SCHEMA_NAME(object_id) + '.' + name + ' out '

+ @outputdir + OBJECT_SCHEMA_NAME(object_id) + '_' + name + '.dat -b 10000 -d '

+ @dbname + ' -T -c -S ' + @servername

FROM sys.objects

WHERE type_desc = 'USER_TABLE'

AND name = @tablename

;

In this case the BCP code generated is (no line break):

bcp dbo.FactProductInventory out c:\temp\dbo_FactProductInventory.dat -b 10000 -d AdventureWorksDW2012 -T -c -S CGROSSBOISE\SQL2012
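If you would rather build the command outside of SSMS, the same string concatenation can be sketched in Python. This is only an illustration: the function name and parameters are hypothetical, and the flags are simply the ones in the generated command shown above.

```python
def build_bcp_command(schema, table, database, server,
                      output_dir="c:\\temp\\", batch_size=10000):
    """Assemble a bcp 'out' command like the one the T-SQL generates.

    Hypothetical helper for illustration; it only builds the string
    and does not run bcp.
    """
    # File name follows the schema_table.dat pattern used in the post.
    data_file = f"{output_dir}{schema}_{table}.dat"
    # -b batch size, -d database, -T trusted connection,
    # -c character mode, -S server\instance.
    return (f"bcp {schema}.{table} out {data_file} -b {batch_size} "
            f"-d {database} -T -c -S {server}")


print(build_bcp_command("dbo", "FactProductInventory",
                        "AdventureWorksDW2012", "CGROSSBOISE\\SQL2012"))
```

Printing the result gives you a command to paste into a Command Prompt, just as with the T-SQL version.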

Paste the BCP command into a Command Prompt and run it.

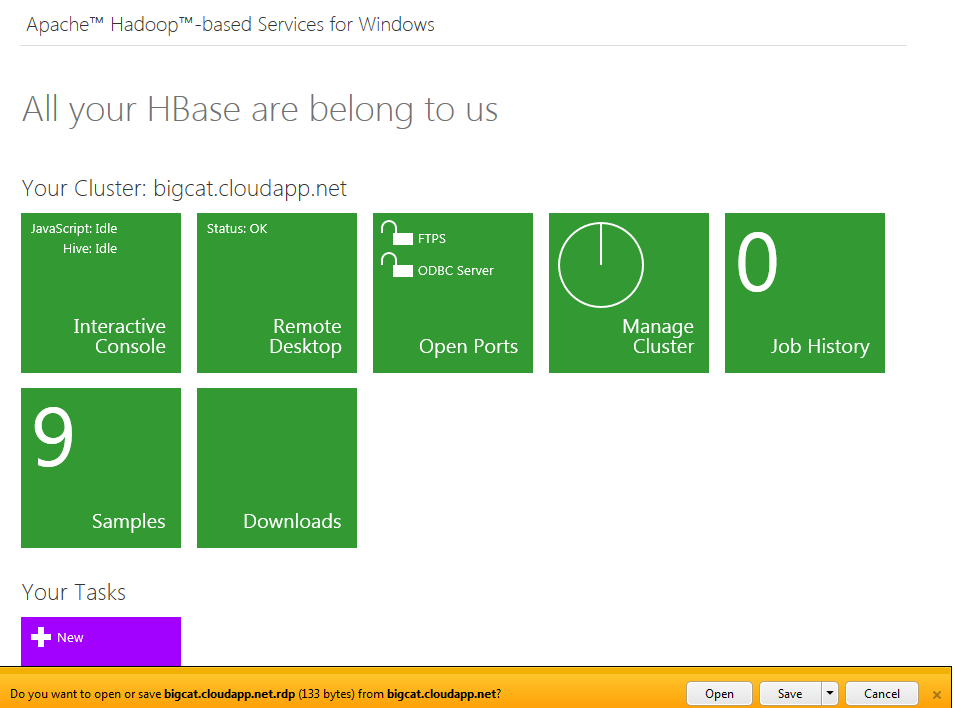

If you have not yet created an Apache™ Hadoop™-based Services for Windows Azure cluster, follow these steps to do so (this is a CTP, so the exact steps and screens will change over time).

From your Hadoop cluster portal click on the “Remote Desktop” button and choose “Save As” to save the RDP file that is generated.

Right click on the RDP file you saved and choose “Edit”. Go to the “Local Resources” tab and click on “More…” under “Local devices and resources”. Add a check mark to “Drives”, then click “OK”. Go back to the “General” tab and click on “Save”. Now choose “Connect” to open a remote desktop connection to your Hadoop head node.

Open the “Hadoop Command Shell”. Copy/paste or type these commands (beware of some editors changing dashes or other characters to non-executable values) to create a directory and copy the data file to your head node. The /y on the copy will overwrite the file if it already exists.

Md c:\data

Copy \\tsclient\C\temp\dbo_FactProductInventory.dat c:\data /y

Dir c:\data
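Since pasted commands can pick up typographic dashes or quotes along the way, it can help to check a command for non-ASCII characters before running it. The following is a minimal sketch in Python; the function name is hypothetical and the check is deliberately crude (it flags anything outside the ASCII range).

```python
def find_suspect_characters(command):
    """Return (position, character) pairs for every non-ASCII character.

    Hypothetical helper: typographic dashes and curly quotes are
    outside the ASCII range, so they show up in the result.
    """
    return [(i, ch) for i, ch in enumerate(command) if ord(ch) > 127]


# A clean command has nothing to report.
print(find_suspect_characters("hadoop fs -lsr /"))

# The same command with an en dash (U+2013) in place of the hyphen.
print(find_suspect_characters("hadoop fs \u2013lsr /"))
```

An empty list means the command contains only plain ASCII characters.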

Now from the same prompt load the data into Hadoop HDFS. The fs argument tells the hadoop script that you are running a filesystem command. Generally the same commands are available from the “Interactive JavaScript” console in the portal by replacing “hadoop fs -” with “#”. For example, “hadoop fs -lsr /” from a Hadoop Command Prompt and “#lsr /” from the JavaScript console both return a recursive list of all directories and files starting at the root (/). Try some variations such as “#ls” (non-recursive contents of the default directory) and “#lsr /user” (recursive list of the user directory).

hadoop fs -put c:\data\dbo_FactProductInventory.dat /demo/demo.dat

hadoop fs -lsr /

Now launch the command line version of Hive (you can alternatively use the Interactive Hive console in the portal, but I’m showing you the automatable version) and add metadata to the HDFS data. Note that the CREATE EXTERNAL TABLE statement wraps in the window; there is no line break. Because I chose to use the EXTERNAL keyword, the data stays in its original HDFS location and will not be deleted when I drop the Hive table. The LOCATION clause names the /demo directory rather than the file, so the table covers the files stored in that directory. Hive’s data type names are not exactly the same as SQL Server’s, so I generated the script for this table from SSMS and then made a few changes: I removed the constraints and indexes, changed date and money to string, and removed the brackets and the “dbo.” schema qualifier. If you don’t copy the empty line under the last command, you will have to hit Enter for the last command to complete.

hive

CREATE EXTERNAL TABLE FactProductInventory(ProductKey int,DateKey int,MovementDate string,UnitCost string,UnitsIn int,UnitsOut int,UnitsBalance int) COMMENT 'Hive Demo for #24HOP' ROW FORMAT DELIMITED FIELDS TERMINATED by '\t' STORED AS TEXTFILE LOCATION '/demo';

quit;
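The changes I made to the SSMS script by hand can also be sketched as a small type mapping in Python. Treat this as an illustration under assumptions: the function and variable names are hypothetical, the mapping covers only the three types that appear in this table, and the SQL Server column types are assumed from the AdventureWorksDW2012 definition and the changes described above. The COMMENT clause is left out for brevity.

```python
# Type substitutions described in the post: date and money become string,
# int stays int. Other SQL Server types are not covered by this sketch.
SQL_SERVER_TO_HIVE = {"int": "int", "date": "string", "money": "string"}

# Columns of the demo table with their (assumed) SQL Server types.
FACT_PRODUCT_INVENTORY = [
    ("ProductKey", "int"),
    ("DateKey", "int"),
    ("MovementDate", "date"),
    ("UnitCost", "money"),
    ("UnitsIn", "int"),
    ("UnitsOut", "int"),
    ("UnitsBalance", "int"),
]


def hive_external_table_ddl(table, columns, location):
    """Build a one-line CREATE EXTERNAL TABLE statement for tab-delimited text."""
    column_list = ",".join(
        f"{name} {SQL_SERVER_TO_HIVE[sql_type]}" for name, sql_type in columns
    )
    return (
        f"CREATE EXTERNAL TABLE {table}({column_list}) "
        "ROW FORMAT DELIMITED FIELDS TERMINATED BY '\\t' "
        f"STORED AS TEXTFILE LOCATION '{location}';"
    )


print(hive_external_table_ddl("FactProductInventory",
                              FACT_PRODUCT_INVENTORY, "/demo"))
```

A type that is missing from the mapping raises a KeyError, which is a reasonable prompt to decide by hand how that type should be represented in Hive.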

Now let’s run some queries. You can either start Hive again from the command line or run them from the Interactive Hive console in the portal.

select * from FactProductInventory where ProductKey = 230 and DateKey = '20080220';

The output on my single data node Hadoop cluster looks like this (the line starting with 230 is the actual result set):

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_201209281938_0013, Tracking URL = https://10.119.36.106:50030/jobdetails.jsp?jobid=job_201209281938_0013

Kill Command = c:\Apps\dist\bin\hadoop.cmd job -Dmapred.job.tracker=10.119.36.106:9010 -kill job_201209281938_0013

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2012-09-28 23:27:45,271 Stage-1 map = 0%, reduce = 0%

2012-09-28 23:27:58,301 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:27:59,316 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:00,316 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:01,332 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:02,347 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:03,363 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:04,379 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:05,394 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:06,410 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.187 sec

2012-09-28 23:28:07,425 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.187 sec

MapReduce Total cumulative CPU time: 3 seconds 187 msec

Ended Job = job_201209281938_0013

MapReduce Jobs Launched:

Job 0: Map: 1 Accumulative CPU: 3.187 sec HDFS Read: 31077011 HDFS Write: 38 SUCESS

Total MapReduce CPU Time Spent: 3 seconds 187 msec

OK

230 20080220 2008-02-20 20.3900 0 0 4

Time taken: 48.465 seconds

Note that if you run this same query in SSMS you will see an instant response, but here in Hadoop it took 48 seconds of total time. No matter how many times you run this query you will see approximately the same execution time because the result set is not cached. This shows that we have used Hadoop in an anti-pattern way: we went after a single row of data. Filtered row sets are a strength of well-indexed relational systems, while querying entire, very large, unindexed data sets is a strength of Hadoop. Hive generates MapReduce code, and that MapReduce code goes through the same steps each time to find the data, distribute job tasks across the data nodes (map), and then bring the result sets back (reduce; this particular query has no reduce operator, so its job is map-only). The cumulative CPU time once it actually executes the map phase is still over 3 seconds. I chose this example both to illustrate that point and because it gives you data you are familiar with to ease you into the Hadoop and Hive worlds.

If you want to remove the metadata from Hive and the data you’ve just loaded from Hadoop HDFS, execute these steps from a Hadoop Command Shell:
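To make the lack of an index concrete, here is a rough Python sketch of what a filter over unindexed, tab-delimited text amounts to: every line is read and split, even though only one row matches. This is not the code Hive generates; the function name is hypothetical, and apart from the row returned by the query above, the sample rows are made up for illustration.

```python
def scan_for_row(lines, product_key, date_key):
    """Filter tab-delimited rows by reading every line (no index to help)."""
    matches = []
    rows_read = 0
    for line in lines:
        rows_read += 1
        fields = line.rstrip("\n").split("\t")
        # The first two columns are ProductKey and DateKey.
        if int(fields[0]) == product_key and int(fields[1]) == date_key:
            matches.append(fields)
    return matches, rows_read


sample = [
    "1\t20080101\t2008-01-01\t1.0000\t0\t0\t1\n",    # made-up row
    "230\t20080220\t2008-02-20\t20.3900\t0\t0\t4\n",  # row from the result above
    "231\t20080220\t2008-02-20\t2.0000\t0\t0\t2\n",  # made-up row
]

matches, rows_read = scan_for_row(sample, 230, 20080220)
print(matches, rows_read)
```

The count of rows read always equals the size of the input, no matter how selective the filter is, which is why the query pays the full scan cost for a single row.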

hive

drop table FactProductInventory;

quit;

hadoop fs -rmr /demo/demo.dat

Now you know one way to copy data from SQL Server to Hadoop. Keep exploring Hadoop and keep buzzing about Hive!

I hope you’ve enjoyed this small bite of big data! Look for more blog posts soon on the samples and other activities.

Note: the CTP and TAP programs are available for a limited time. Details of the usage and the availability of the CTP may change rapidly.