F#, Wordle and Visual Text Analysis

In the previous post, I presented Wordle as a beautiful tool to compress natural language “documents”, i.e. a document is a sequence of words, into an aggregate – the word cloud. Consequently, while a multiset of documents may be hard to understand from the standpoint of information content, presented concepts, etc. an aggregate, summary, abstract, the gist of them may yield quick (but incomplete) insight. For this, terms such as (customer) sentiment analysis, document summarisation, information retrieval and natural language understanding often pop up, when value (in a business or purely abstract meaning) is sought to be gained from natural language (documents).

This blog post aims much lower in that I want to use a tool chain, i.e. F# and Wordle to aggregate a document into something that can be analysed by the (currently available) best processor – the human brain. So, assuming a text is made up of words that represent the topic(s) of the text, those words that appear frequently might help us to hypothesise about the topic(s) of the text.

Now, the problem is phrased in such a way that it actually gives away the simple and unrealistic solution:

Extract words from text.

A word is a sequence of letters of an alphabet.

For simplicity’s sake, an english word is made up of only letters. That is, we do not accept contractions, clitics, named entities (like R2D2, etc.), numbers, etc.. Words are delivered as a sequence line by line.Remove words that are invalid (with respect to point (1.)).

Transform words into a common format, i.e. lower case without whitespace at pre/post-fix position.

Stop words, i.e. frequent words increasing noise in a text, will be removed.

Count each word, i.e. whenever a word occurs we give it a one (remember how we used to count as a kid …).

Output the count of each word, i.e. “<word> 1”.

If you feel like you have seen this a lot recently – Yes, it looks and feels like the Hadoop “Hello World” example. Consequently, the code is similar and laid out in such a way that you can use it in a Hadoop streaming scenario, i.e. the code is put into a container/ program "called FMapper, which takes as input from stdin:

1: let invalidWordsReg = Regex( "^\d+$|^\d+\w+$|^\w+\d+$|^\d+.+$|\s+|(�|©|@|®)+" )

2: let mutable line = ""

3: while line <> null do

4:

5: line <- Console.ReadLine()

6:

7: if (String.IsNullOrEmpty(line) = false) then

8:

9: let words = line.Split([|" "; "/"; "\\"; ","; "."; ";"; "?"; "!"; ":";

10: ")"; "("; "<"; ">"; "["; "]"; "}"; "{";

11: "+"; "-"; "*"; "%"; "_"; "#"; "&";

12: "§"; "$"; "~"; "|"; "^";

13: "\""; "'"; "="; "”"; "“"|], StringSplitOptions.RemoveEmptyEntries)

14:

15: words

16: |> Seq.map(fun word -> word.Trim() )

17: |> Seq.map(fun word -> word.Replace("”", "").Replace("“", ""))

18: |> Seq.map(fun word -> word.ToLowerInvariant())

19: |> Seq.filter(fun word -> (invalidWordsReg.IsMatch word ) = false)

20: |> Seq.filter(fun word -> ( StopWords.isEnglishStopWord word ) = false)

21: |> Seq.map(fun w -> (w, 1))

22: |> Seq.iter(fun (w, c) -> printfn "%s\t%d" w c )

23:

24: done

As you can see, the sample follows the imperative style (while loop). However, you might use a recursive function peeking on stdin and calling the word processing pipeline whenever there is a line of word(s) available to be processed. Is it necessary to output a 1 for each word? For the simple example, that is discussed here – NO. However, instead of outputting each single word, you might think about computing some summary statistic from that word, group of words, sentence, etc. For example, we might group the word sequence using a word as a key. Furthermore, imagine the distributed (Hadoop streaming) case, where local knowledge (each map step) has to be integrated to form global knowledge (the reduce step). Consequently, the simple mapper step serves two points:

- Performing analysis (a very simple one in this case)

- Imposing a schema, i.e. from a non-relational text input to relational output “<word>, <count>”

Now, the above program executed on a sample text taken from a SQL Server 2008 help page yields the following output, which illustrates the point of stop words. Yes, we did remove some of them but depending on your application domain you might even remove different ones or more.

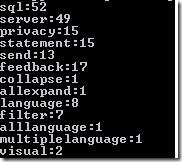

What are we left with? We need to integrate the individual word counts. The following “Reducer” program takes care of this using a Dictionary. Global word counts are printed to standard output so that they can be used for further computation.

1: let mutable line = ""

2: let xmap = Dictionary<string,int>()

3:

4: while ( line <> null ) do

5:

6: line <- Console.ReadLine()

7:

8: if String.IsNullOrEmpty(line) = false then

9: let vLine = line.Split([|"\t"|], StringSplitOptions.RemoveEmptyEntries)

10:

11: if Array.length vLine <> 2 then failwith "invalid format of input data"

12:

13: let key = vLine.[0]

14: let cnt = Int32.Parse(vLine.[1])

15:

16: if ( xmap.ContainsKey key ) then

17: let v = xmap.Item( key )

18: let newv = v + cnt

19: xmap.Item(key) <- newv

20: else

21: xmap.Add( key, cnt )

22:

23: done

24:

25: xmap

26: |> Seq.iter(fun kvp -> printfn "%s:%d" kvp.Key kvp.Value )

Now, we can test the tool chain by invoking the following command line:

FMap.exe < "C:\TestData\SQLTestDocument.txt" | FReduce.exe

The result of this computation can be seen in the following:

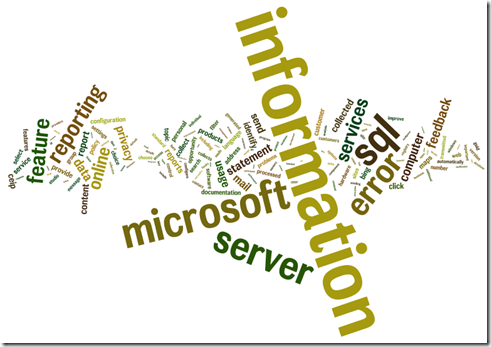

The last step left from our discussion is Wordle. That is, we feed those <word,count> pairs to Wordle. Either by going to the web site or by using the code snippet I presented previously. Either way - our result looks something like this:

Now, simply by looking at the word cloud, we might conclude that the (unknown) document we analysed, might contain information about a “server” from “microsoft”, which has something to do with “sql”, “reporting” among other “services”. Maybe we could use this to query our search engine of choice, leading to maybe this result (or read the document ![]() ):

):

Closing remarks:

The method presented is far from a real world example of automatic summarisation, customer sentiment analysis and other computational problems, which aim to bring structure and/or sense to natural language documents. The meaning of and relationship between words was totally ignored. For example:

1.

SQL is an acronym for structured query language but if both occur in a document, we have 4 words (SQL, structured, query, and language) instead of just on representative for SQL.

2.

No handling of synonyms. Assuming the text contained some word and a lot of (different) synonyms for that word, depending on the frequency of the word and its synonyms, we might or might not have noticed the topic the word and its synonyms represent.

3.

To be or not to be. That is, the small word not (or no, etc.) reverses a statement (negation) or is used to emphasise a statement ( rhetorical question ). Now assuming, each time our high frequency words occurred with a negation, we would still have gotten high counts for those words but the documents topic might be opposite to our impression.

4.

A more realistic solution would make use of machinery, such as LSA, PCA, tf-idf and the vector space model (as a common metaphore).

Takeaway:

Natural language processing, F# and Wordle are fun and go together excellently ![]() - Read you soon!

- Read you soon!