Launching a Startup mobile App in Australia? How hard can it *really* be?? Day 5 Report

Things really got interesting today! I received the first delivery of data from my researcher, which means I can now set up my Azure tables and data access layer. I plan on making the data accessible via OData feeds as well as via web services.

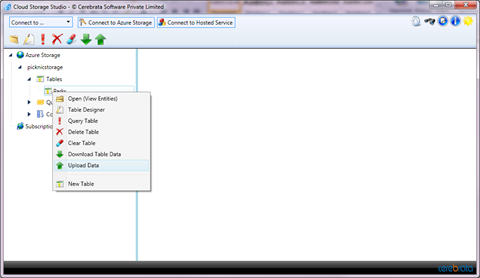

I use a nifty little tool called Cerebrata Cloud Storage to access and manipulate my Azure table storage. It's a really handy tool for working with both local development storage and Azure-based storage.

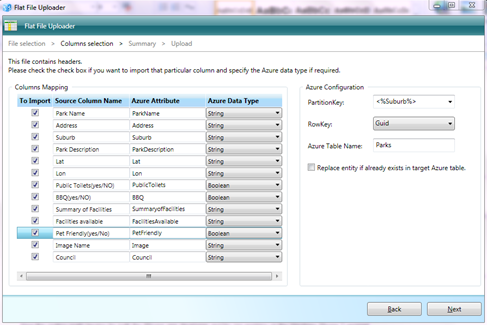

I was able to create my tables easily, and then use the tool to upload the data I have in CSV to my table storage. You can also use the free Cerebrata tool on CodePlex to upload an Excel file (saved as CSV) directly into the cloud: https://azurefileupload.codeplex.com/ It has the extra benefit of letting you define the columns and keys through a simple GUI.

Be sure to remove any non-alphanumeric characters (spaces and so on, although an underscore is permitted) from the column names. It is also recommended to keep column names as short as possible to save on storage space, but for this example I am keeping long names for ease of demonstration.
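The column-name clean-up can also be scripted before the upload. Here is a minimal sketch in Python. The helper `clean_column_name` and the sample headers are made up for illustration and are not part of the Cerebrata tool or my actual data file.

```python
import re

def clean_column_name(name: str) -> str:
    """Drop every character that is not a letter, a digit or an underscore."""
    return re.sub(r"[^A-Za-z0-9_]", "", name)

# Hypothetical spreadsheet headers, for illustration only.
headers = ["Park Name", "Suburb", "Lat/Lon", "BBQ Facilities?", "Row_Id"]
print([clean_column_name(h) for h in headers])
```

Running the cleaned headers past a quick eyeball check before uploading is still worthwhile, since two different headers could collapse to the same name once the punctuation is gone.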

Another thing worth noting is my choice of Suburb as the partition key and a unique GUID as the row key. I spent a lot of time thinking about this, as the choice for these two columns largely determines how quickly search queries return. The partition key is used to split the data over different partitions. The row key must be unique for each row within its partition. I had initially thought of using the Lat/Lon or a combination of the two as my row key, as it is unlikely that there will be 2 parks in the same location. My concern was that this may become an issue in future, when I open the API to allow manual addition of parks. I chose Suburb name as the partition key because I think it will be the most even way to distribute the data over the partitions and also the most common way that we will query the data. There is a great description of Azure Table Storage on Steve Nagy's blog that I recommend you read.
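To make the key choice concrete, here is a rough Python sketch of how one park row could be shaped before it goes into table storage. `PartitionKey` and `RowKey` are the key properties Azure tables expect. Everything else (the helper `make_park_entity`, the `ParkName`, `Latitude` and `Longitude` properties, and the sample values) is assumed for illustration. No Azure SDK call is made here.

```python
import uuid

def make_park_entity(suburb: str, park_name: str, lat: float, lon: float) -> dict:
    """Build a table entity keyed by suburb (partition) and a fresh GUID (row)."""
    return {
        "PartitionKey": suburb.strip(),   # all parks in a suburb share a partition
        "RowKey": str(uuid.uuid4()),      # GUID, so manual additions never collide
        "ParkName": park_name,            # hypothetical property name
        "Latitude": lat,                  # hypothetical property name
        "Longitude": lon,                 # hypothetical property name
    }

# Two made-up parks in the same suburb: same partition, different row keys.
first = make_park_entity("Newtown", "Example Park A", -33.90, 151.18)
second = make_park_entity("Newtown", "Example Park B", -33.90, 151.18)
print(first["PartitionKey"] == second["PartitionKey"])
print(first["RowKey"] != second["RowKey"])
```

Because the row key is a GUID rather than the coordinates, two entries at the same location can coexist, which is exactly the case I was worried about once the API accepts manual additions.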

The first challenge with my data import came up as soon as the data was imported: I realised the commas in the file I received were corrupting the columns in CSV format, so the data needed some massaging. I was able to quickly empty the Azure tables via the Cerebrata tool and then look at the best way to convert the data. I ended up saving the data as tab-separated text files from within Excel and then re-uploading it.
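I did the conversion by hand in Excel, but the same step could be scripted. The sketch below is one way to do it in Python, assuming the CSV quotes any field that contains a comma (as Excel does when it saves CSV). The function name `csv_to_tsv` and the sample row are made up for illustration.

```python
import csv
import io

def csv_to_tsv(csv_text: str) -> str:
    """Re-write quoted CSV as tab-separated text so embedded commas are harmless."""
    reader = csv.reader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.writer(out, delimiter="\t", lineterminator="\n")
    for row in reader:
        # Replace stray tabs so they cannot be mistaken for column breaks.
        writer.writerow([field.replace("\t", " ") for field in row])
    return out.getvalue()

# Hypothetical sample: the third field contains commas inside quotes.
sample = 'ParkName,Suburb,Facilities\n"Example Park","Newtown","BBQ, playground, toilets"\n'
print(csv_to_tsv(sample))
```

The `csv` module does the real work here: it keeps the quoted field together as one value, which is what a naive split on commas gets wrong.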

The next challenge was the sheer number and size of images. I thought about building a simple image uploader (and thumbnail creation tool) much like the simple getting-started tutorials for Azure, but instead decided to set up a Dropbox folder that the data researcher can upload to. I can then once again use the handy Cerebrata tool to upload the images from there. Mind you, I could have used SkyDrive just as easily for this, except that it is full of my own photos already!

Now the coding really begins for both the iPhone app developer and for me working on the Windows Phone 7 version!