UAP: Speech Recognition (part 1)

Frankly speaking I am not sure if it’s a good idea to talk to your computer but it depends on different factors. Of course, if you are going to move a mouse cursor using your voice, probably, it’s not a very good idea but what about IoT devices or scenarios with many different options. In some cases you already use your voice for managing some devices like GPS navigator. In case of GPS you use voice in both directions. It’s not easy to check your way all time, especially if you are in traffic but modern GPS system can notify you about directions, the right lanes and roads condition using text to speech technologies. Additionally it’s not easy to type a new address but it’s easy to pronounce it. So, speech engines can be very useful in some cases and I understand Microsoft presenters, who like to start their presentations with Cortana because things like Cortana allow to introduce new user experience and open doors for developers.

Let’s see how to use speech recognition engine for UAP (Universal Application Platform) applications. I am going to discuss Text to Speech classes as well as Speech recognition classes.

Text to Speech

In order to transform your text to speech you can use SpeechSynthesizer class from Windows.Media.SpeechSynthesis namespace. In the simplest case you can use the following code:

private async void TalkSomething(string text)

{

SpeechSynthesizer synthesizer = new SpeechSynthesizer();

SpeechSynthesisStream synthesisStream =

await synthesizer.SynthesizeTextToStreamAsync(text);

media.AutoPlay = true;

media.SetSource(synthesisStream, synthesisStream.ContentType);

media.Play();

}

In the first two lines we create SpeechSynthesizer object and use SynthesizeTextToStreamAsync method to get a reference to the stream, which should contain audio output. In order to play the audio in our application we can use MediaElement which you can place in any place of your page. This code is very simple and you should not ask any permissions or anything of the kind. But if you are going to use Text to Speech service in a more advanced way you need to spend more time. For example, this code doesn’t answer questions like: “what is the language there?” or “how to pronounce complex text?”

Let’s start with the language. Because your application can use just installed languages you cannot assume that user’s computer is able to speak Russian or Spanish. So, if you are going to use non-English, it’s better to check if the language is available. If you are using English it’s better to check that default language is English because in some cases it’s not true. In order to do it you can use the following static properties of SpeechSynthesizer class:

DefaultVoice – provide information about default voice which your application will use if you don’t setup different one;

AllVoices – allows to get access to list of all voices in the system. You can use LINQ or indexer to find the right voice;

Pay special attention that if you are looking for particular language you can find several voices there because voice is not just language. For example I have two default voices in my system and both of them are English but the first one is related to a male while the second one to a female. DefaultVoices and AllVoices properties allow to work with VoiceInformation objects which contain all needed information like Language, DisplayName and Gender.

So, if you want to check language you can use the following code:

var list = from a in SpeechSynthesizer.AllVoices

where a.Language.Contains("en")

select a;

if (list.Count() > 0)

{

synthesizer.Voice = list.Last();

}

If you look at SpeechSynthesizer methods you can find that there are two methods SynthesizeTextToStreamAsync and SynthesizeSsmlToStreamAsync. The second method allows to implement more complex Text to Speech scenarios. It supports Speech Synthesis Markup Language (SSML), which allows to make more complex sentences and manage how to pronounce it. Of course SSML is a text format which is based on XML, so you can easily create your own or modify existing one.

Let’s look at some SSML elements:

speak – root element for SSML which allows to setup the default language as well;

audio – allows to include to the speech an audio file. It allows to include some effects, music etc.;

break – you can declare pause using this element. It has some attributes like duration and strength;

p or s – it allows to define paragraph which has own language. Thanks to this element you can use different (supported) languages in the speech;

prosody – allows to setup volume;

voice – allows to select on of predefined languages based on its attributes like gender;

Here is an example of a short SSML file which combine English and Russian sentences:

<speak version="1.0"

xmlns="https://www.w3.org/2001/10/synthesis"

xml:lang="ru-RU">

<s xml:lang="ru">Чтобы сказать добрый день по английски, произнесите</s>

<s xml:lang="en">Good morning</s>

</speak>

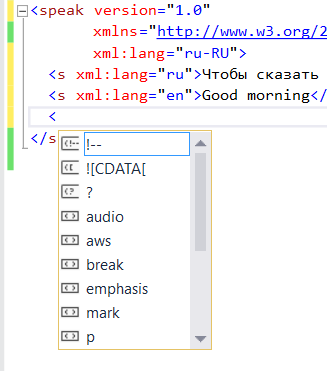

If you are going to create SSML in Visual Studio, you need to use XML template, define speak element and Visual Studio will start to support Intellisense system there.

In order to use SSML file you need download it like a string and pass to SynthesizeSsmlToStreamAsync method.