The Power of Speech in Your Windows Phone 8.1 Apps – Speech Recognition

Previous posts in this series:

- The Power of Speech in Your Windows Phone 8.1 Apps
- The Power of Speech in Your Windows Phone 8.1 Apps – Text-to-Speech

In the first two parts of this series, you learned how to let users launch your app with voice commands and how to have your app read information aloud to them. To tie it all together, we will now incorporate speech recognition into a Windows Phone app.

Speech recognition allows your user to talk to your app once the app is launched and in the foreground. You can program your app to react to specific words or phrases, as well as handle unknown commands.

The Flow

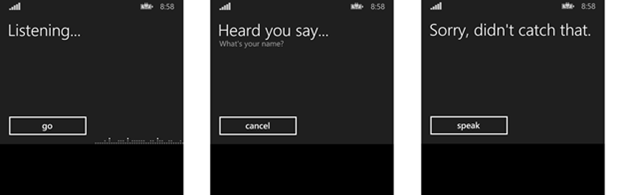

Speech recognition follows a simple process. The app “listens” for the user’s input and then processes the captured speech. If it can match what the user said, the app asks the user to “confirm” the match. If the user’s speech was inaudible or could not be matched, an error screen is displayed.

SpeechRecognizer and Speech Constraints

To use speech recognition in your Windows Phone app, first create an instance of the SpeechRecognizer class. It lets you use either the built-in dialogs or your own custom UI to prompt the user for speech input, display a confirmation when the input is recognized, or display an error when it is not.

The next step is to choose the speech constraints you want to use and add them to the Constraints collection of the SpeechRecognizer instance. There are three types of speech constraints:

- SpeechRecognitionTopicConstraint - based on a predefined dictation or web search scenario

- SpeechRecognitionListConstraint - based on a list of words or phrases

- SpeechRecognitionGrammarFileConstraint - based on a Speech Recognition Grammar Specification (SRGS) file

At least one constraint must be added, and the constraints must be compiled with CompileConstraintsAsync, before speech recognition can be performed, as shown below:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechRecognition;

// ...

private SpeechRecognizer speechRecognizer;

public async Task InitializeSpeechRecognizerAsync(string topicHint)
{
    speechRecognizer = new SpeechRecognizer();

    // Add a dictation constraint; topicHint tells the recognizer what the speech is likely about.
    SpeechRecognitionTopicConstraint topicConstraint =
        new SpeechRecognitionTopicConstraint(SpeechRecognitionScenario.Dictation, topicHint);
    speechRecognizer.Constraints.Add(topicConstraint);

    // Compile the constraints before performing speech recognition.
    await speechRecognizer.CompileConstraintsAsync();
}
```
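The other two constraint types are added the same way. The sketch below is one possible setup, not the only one: it builds a recognizer that listens only for a fixed list of commands (SpeechRecognitionListConstraint), and another that loads an SRGS grammar packaged with the app (SpeechRecognitionGrammarFileConstraint). It also checks the Status of the SpeechRecognitionCompilationResult returned by CompileConstraintsAsync. The class and method names, the command phrases, the "commands" and "grammar" tags, and the file name Grammar.xml are all hypothetical placeholders.

```csharp
using System;
using System.Threading.Tasks;
using Windows.ApplicationModel;
using Windows.Media.SpeechRecognition;
using Windows.Storage;

// Hypothetical helper class; the names and phrases are placeholders.
public static class CommandRecognizerSetup
{
    // Hypothetical command phrases; replace them with the phrases your app reacts to.
    private static readonly string[] Commands = { "next", "previous", "stop" };

    // Builds a recognizer that only tries to match the phrases in Commands.
    public static async Task<SpeechRecognizer> CreateListRecognizerAsync()
    {
        var recognizer = new SpeechRecognizer();

        // The second argument is a tag identifying this constraint.
        recognizer.Constraints.Add(
            new SpeechRecognitionListConstraint(Commands, "commands"));

        await CompileOrThrowAsync(recognizer);
        return recognizer;
    }

    // Builds a recognizer from an SRGS grammar file in the app package.
    // "Grammar.xml" is a hypothetical file name.
    public static async Task<SpeechRecognizer> CreateGrammarRecognizerAsync()
    {
        StorageFile grammarFile =
            await Package.Current.InstalledLocation.GetFileAsync("Grammar.xml");

        var recognizer = new SpeechRecognizer();
        recognizer.Constraints.Add(
            new SpeechRecognitionGrammarFileConstraint(grammarFile, "grammar"));

        await CompileOrThrowAsync(recognizer);
        return recognizer;
    }

    // The compilation result's Status reports whether the constraints compiled;
    // check it before attempting recognition.
    private static async Task CompileOrThrowAsync(SpeechRecognizer recognizer)
    {
        SpeechRecognitionCompilationResult result =
            await recognizer.CompileConstraintsAsync();

        if (result.Status != SpeechRecognitionResultStatus.Success)
        {
            recognizer.Dispose();
            throw new InvalidOperationException(
                "Constraint compilation failed: " + result.Status);
        }
    }
}
```

Because a list constraint limits the recognizer to the listed phrases, the result returned after recognition is where an app decides how to respond to commands it does not know.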

Using the Built-In Prompts

Once you have created a SpeechRecognizer instance and added the necessary constraints, you can prompt the user to speak and capture their speech input. The easiest way to do this is to use the built-in dialogs by calling the RecognizeWithUIAsync method on the SpeechRecognizer instance. That’s all you need: one line of code.

```csharp
public async Task SpeakTextAsync()
{
    // Shows the built-in speech dialogs and waits for the recognition result.
    SpeechRecognitionResult recognitionResult =
        await speechRecognizer.RecognizeWithUIAsync();
}
```

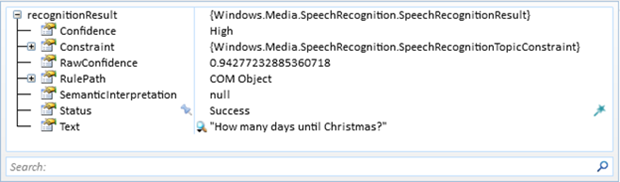

If you want to know which word or phrase the recognizer derived from the user’s speech, and the confidence level of that match, check the Text and Confidence properties of the SpeechRecognitionResult that is returned.
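A minimal sketch of inspecting the result, assuming the app wants to treat anything the recognizer rejected as unrecognized input (for example, to re-prompt the user). Status, Confidence, and Text are properties of SpeechRecognitionResult; the RecognitionResultHelper class and its GetAcceptedText methods are hypothetical names used for illustration.

```csharp
using Windows.Media.SpeechRecognition;

// Hypothetical helper class, not part of the Speech API.
public static class RecognitionResultHelper
{
    // Returns the recognized text, or null if the app should treat the
    // input as unrecognized (for example, to show its own retry prompt).
    public static string GetAcceptedText(SpeechRecognitionResult result)
    {
        return GetAcceptedText(result.Status, result.Confidence, result.Text);
    }

    // Same decision on plain values, which keeps the logic easy to test.
    public static string GetAcceptedText(
        SpeechRecognitionResultStatus status,
        SpeechRecognitionConfidence confidence,
        string text)
    {
        // Status reports whether the recognition operation itself succeeded
        // (it is not Success if, for example, the user canceled).
        if (status != SpeechRecognitionResultStatus.Success)
        {
            return null;
        }

        // Confidence is High, Medium, Low, or Rejected; Rejected means the
        // recognizer could not match the speech.
        if (confidence == SpeechRecognitionConfidence.Rejected)
        {
            return null;
        }

        return text;
    }
}
```

Inside SpeakTextAsync, this would be called as `string heard = RecognitionResultHelper.GetAcceptedText(recognitionResult);`. Accepting Low confidence is a judgment call; a stricter app might accept only High or Medium matches.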

Setting the UI Options

The built-in dialogs can also be customized by setting the following properties of SpeechRecognizer.UIOptions (a SpeechRecognizerUIOptions object):

- AudiblePrompt – the prompt message displayed on the Listening screen

- ExampleText – the sample message displayed below the AudiblePrompt on the Listening screen

- IsReadBackEnabled – if true, the recognized text shown on the Heard You Say screen is read back to the user

- ShowConfirmation – if true, the Heard You Say screen is displayed after the user’s speech input is recognized
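A short sketch that sets all four options listed above on an existing recognizer. The prompt and example strings are placeholders, and the SpeechPromptOptions class and Configure method are hypothetical names. As an illustration of combining the two flags, it keeps the Heard You Say screen but turns off read-back.

```csharp
using Windows.Media.SpeechRecognition;

// Hypothetical helper class, not part of the Speech API.
public static class SpeechPromptOptions
{
    // Configures the built-in dialogs; the strings are placeholders.
    public static void Configure(SpeechRecognizer recognizer)
    {
        SpeechRecognizerUIOptions options = recognizer.UIOptions;

        options.AudiblePrompt = "What would you like to search for?";
        options.ExampleText = "Try saying \"coffee shops nearby\"";
        options.ShowConfirmation = true;   // show the Heard You Say screen
        options.IsReadBackEnabled = false; // but don't read the text aloud
    }
}
```

Call Configure before RecognizeWithUIAsync so the options apply to the next set of dialogs.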

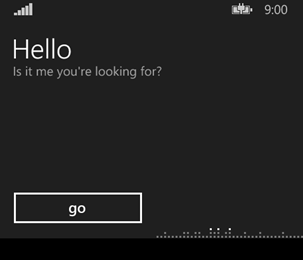

In the example below, I simply change the main prompt and the example text displayed on the Listening screen:

```csharp
public async Task SpeakTextAsync()
{
    speechRecognizer.UIOptions.AudiblePrompt = "Hello";
    speechRecognizer.UIOptions.ExampleText = "Is it me you're looking for?";

    SpeechRecognitionResult recognitionResult =
        await speechRecognizer.RecognizeWithUIAsync();
}
```

With this code, the Listening screen shows “Hello” as the prompt, with “Is it me you're looking for?” displayed below it as the example text.

And that’s all there is to it!

Wrapping Up

In this series, we discussed why you should leverage the power of speech in your apps. We demonstrated how to use the Speech API to let users launch your app with voice commands and, once it is running, keep communicating with it through speech recognition. We also demonstrated how your app can read information aloud to the user, enabling a hands-free experience.

Now it’s time to build a new app that incorporates speech and submit it to the next #DevMov challenge!