Using the SEO Toolkit to generate a Sitemap of a remote Web Site

The SEO Toolkit includes a set of features (such as the Robots Editor and the Sitemap Editor) that only work when you are working with a local copy of your Web site. The reason is that the toolkit needs to know where to save the files it generates (such as Robots.txt and Sitemap XML files) without asking you for physical paths. It also needs to verify that those files are placed correctly, for example by only allowing Robots.txt in the root of a site. Unfortunately, this means that if your site runs on a remote server and you cannot have a running local copy, you cannot use those features. (Note that you can still use the Site Analysis tool, since it crawls your Web site regardless of platform or framework and stores the report locally just fine.)

The Good News

The good news is that you can technically trick the SEO Toolkit into thinking you have a working local copy, which lets you generate the Sitemap or Robots.txt file without too much hassle (“too much” being the key).

For this sample, let’s assume we want to create a Sitemap for a remote Web site. I will use my own Web site (https://www.carlosag.net/), but you can specify your own. Below are the steps you need to follow to enable those features for any remote Web site (even if it is running on another version of IIS or on any other Web server).

Create a Fake Site

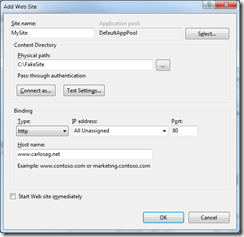

- Open IIS Manager (Start Menu->InetMgr.exe)

- Expand the Tree until you can see the “Sites” node.

- Right-click the “Sites” node and select “Add Web Site…”

- Specify a Name (in my case I’ll use MySite)

- Click “Select” to choose the DefaultAppPool from the Application Pool list. This will avoid creating an additional AppPool that will never run.

- Specify a Physical Path where you will want the Robots and Sitemap files to be saved. I recommend creating just a temporary directory that clearly states this is a fake site. So I will choose c:\FakeSite\ for that.

- Important. Set the Host name so that it matches your Web Site, for example in my case www.carlosag.net.

- Uncheck the “Start Web site immediately”, since we do not need this to run.

- Click OK

This is how my Create site dialog looks like:
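If you need fake sites for several remote servers, the same steps can be scripted. The following Python sketch only builds the command lines (it does not run them). It assumes IIS 7 or later with appcmd.exe in its default location, and that the commands are run from an elevated cmd.exe prompt (`%windir%` is not expanded by PowerShell). The helper name `fake_site_commands` is hypothetical; verify the commands against your IIS version before running them.

```python
# Hypothetical helper: builds appcmd.exe command lines for a stopped,
# host-bound "fake" site. It only returns strings; nothing is executed.
APPCMD = r"%windir%\system32\inetsrv\appcmd.exe"  # default IIS 7+ location (assumed)


def fake_site_commands(name: str, physical_path: str, host_name: str) -> list[str]:
    """Return appcmd command lines mirroring the manual IIS Manager steps."""
    # Drop a trailing backslash so the quoted path does not end in a backslash
    # immediately before the closing quote (it could be read as an escape).
    path = physical_path.rstrip("\\")
    # Binding format is protocol/IP:port:host; '*' means all unassigned IPs.
    binding = f"http/*:80:{host_name}"
    return [
        # Create the site; the host name must match the remote Web site.
        f'{APPCMD} add site /name:{name} /physicalPath:"{path}" /bindings:{binding}',
        # Run the root application in DefaultAppPool instead of a new pool.
        f'{APPCMD} set app "{name}/" /applicationPool:DefaultAppPool',
        # Roughly equivalent to unchecking "Start Web site immediately".
        f"{APPCMD} set site /site.name:{name} /serverAutoStart:false",
        f"{APPCMD} stop site /site.name:{name}",
    ]


if __name__ == "__main__":
    for cmd in fake_site_commands("MySite", "c:\\FakeSite\\", "www.carlosag.net"):
        print(cmd)
```

Each returned string can be pasted into an elevated prompt on the machine running IIS Manager. Keeping generation separate from execution makes it easy to review the commands first.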

Use the Sitemap Editor

Now that the SEO Toolkit thinks the site is local, you should be able to use its features as usual.

- Select the new site created above in the tree

- Double-click the Search Engine Optimization icon on the Home page

- Click the link “Create a new Sitemap”

- Specify a name, in my case Sitemap.xml

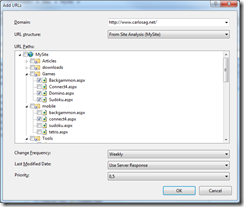

- Since this is a remote site, you will see that the physical location option shows an empty list, so change the “URL structure” to will use the “<Run new Site Analysis>..” or if you already have one you can choose that.

- If creating a new one, just specify a name and click OK (I will use MySite). At this point the SEO Toolkit starts crawling the Remote site to discover links and URLs, when it is done it will present you the virtual namespace structure so you can work with.

- After the crawling is done, you can now check any files you want to include in your Sitemap and leverage the Server response to define the changed date and all the features as if the content was local. and Click OK

This is the way the dialog looks when discovered my remote Web site URLs:
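The Site Analysis crawler does far more than this, but the core idea of discovering a site’s URLs by following links on the same host can be sketched in Python. This is a hypothetical illustration, not the toolkit’s implementation: `discover_urls` and `LinkParser` are made-up names, `www.example.com` is a placeholder, and the page fetcher is passed in as a function so the sketch does not depend on any particular HTTP library.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def discover_urls(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    limit: int = 500,
) -> list[str]:
    """Breadth-first crawl restricted to the start URL's host.

    fetch(url) must return the page's HTML, or None on errors or non-HTML
    responses. Returns the URLs fetched successfully, in crawl order.
    """
    host = urlsplit(start_url).netloc.lower()
    seen = {start_url}
    queue = deque([start_url])
    found: list[str] = []
    while queue and len(found) < limit:
        url = queue.popleft()
        page = fetch(url)
        if page is None:
            continue
        found.append(url)
        parser = LinkParser()
        parser.feed(page)
        for href in parser.hrefs:
            # Resolve relative links and drop #fragments (same resource).
            absolute, _fragment = urldefrag(urljoin(url, href))
            parts = urlsplit(absolute)
            # Only follow http(s) links on the same host (skips mailto:, other sites).
            if parts.scheme in ("http", "https") and parts.netloc.lower() == host:
                if absolute not in seen:
                    seen.add(absolute)
                    queue.append(absolute)
    return found


if __name__ == "__main__":
    # In-memory stand-in for a remote site (placeholder URLs).
    pages = {
        "https://www.example.com/": '<a href="/about">About</a> <a href="blog/#top">Blog</a>',
        "https://www.example.com/about": '<a href="/">Home</a> <a href="https://other.example.org/">Elsewhere</a>',
        "https://www.example.com/blog/": '<a href="../about">About</a> <a href="mailto:me@example.com">Mail</a>',
    }
    print(discover_urls("https://www.example.com/", pages.get))
```

A real `fetch` could wrap `urllib.request.urlopen` and return `None` for non-HTML responses or errors; `limit` caps how many pages are collected on a large site.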

You will find the generated Sitemap.xml in the physical directory you specified when creating the site (in my case c:\FakeSite\Sitemap.xml).
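The generated file follows the Sitemap protocol described at sitemaps.org. As a rough illustration of that format, and of how a server’s `Last-Modified` response header can become a `<lastmod>` value, here is a minimal Python sketch. `build_sitemap` and `to_w3c_date` are hypothetical helpers, the URLs are placeholders, and the SEO Toolkit’s actual output may differ (for example, in which optional elements it writes).

```python
import xml.etree.ElementTree as ET
from datetime import timezone
from email.utils import parsedate_to_datetime
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def to_w3c_date(last_modified: Optional[str]) -> Optional[str]:
    """Convert an HTTP Last-Modified header value to a W3C Datetime in UTC."""
    if not last_modified:
        return None
    dt = parsedate_to_datetime(last_modified)
    if dt.tzinfo is None:  # e.g. "-0000": treat as UTC rather than local time
        dt = dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S+00:00")


def build_sitemap(entries: list[tuple[str, Optional[str]]]) -> bytes:
    """Build sitemap XML from (url, Last-Modified header or None) pairs."""
    ET.register_namespace("", SITEMAP_NS)  # serialize as the default xmlns
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url, last_modified in entries:
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = url
        lastmod = to_w3c_date(last_modified)
        if lastmod:  # <lastmod> is optional in the protocol
            ET.SubElement(url_el, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    xml_bytes = build_sitemap([
        ("https://www.example.com/", "Wed, 21 Oct 2015 07:28:00 GMT"),
        ("https://www.example.com/about", None),
    ])
    print(xml_bytes.decode("utf-8"))
```

ElementTree escapes characters such as `&` in URLs automatically, which the Sitemap protocol requires.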

Use the Robots Editor

Just as with the Sitemap Editor, once you prepare a fake site for the remote server, you should be able to use the Robots Editor and reuse the same Site Analysis output to build your Robots.txt file.
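Crawlers only request Robots.txt from the root of a host, which is why the editor restricts where the file can go. Before uploading the generated Robots.txt to the remote server, you can sanity-check its rules with Python’s standard `urllib.robotparser` module. In this sketch the rules, the `www.example.com` URLs, the `MyCrawler` user agent, and the helper names are all placeholders.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

# Placeholder rules; replace with the Robots.txt the editor generated.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Sitemap: https://www.example.com/Sitemap.xml
"""


def robots_url_for(page_url: str) -> str:
    """Return the only location crawlers check: /robots.txt at the host root."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def check_rules(robots_text: str, user_agent: str, urls: list[str]) -> dict[str, bool]:
    """Report whether user_agent may fetch each URL under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())  # parse text directly; no network access
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    print(robots_url_for("https://www.example.com/blog/post?id=1"))
    print(check_rules(ROBOTS_TXT, "MyCrawler", [
        "https://www.example.com/",
        "https://www.example.com/private/report.html",
    ]))
```

Parsing the text locally lets you test the file from the fake site directory before it ever reaches the remote server.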

Summary

In this blog post I showed how you can use the Sitemap and Robots Editors included in the SEO Toolkit with remote Web sites that might be running on different platforms or different versions of IIS.