Managing Your HDInsight Cluster and .Net Job Submissions using PowerShell

This post explains how best to manage an HDInsight cluster using a management console and Windows PowerShell. The goal is to outline how to create a simple cluster, provide a mechanism for managing an elastic service, and demonstrate how to customize the cluster creation.

Before provisioning a cluster one needs to ensure the Azure subscription has been correctly configured and a management station has been set up.

Managing Your HDInsight Cluster

Prepare Azure Subscription Storage

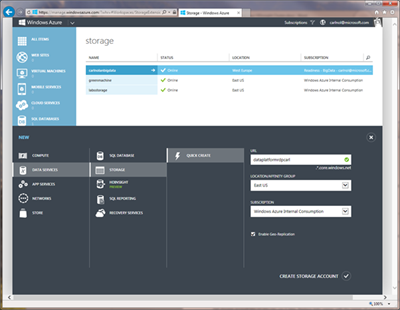

Under the subscription in which HDInsight is going to run, one needs a storage account defined and a management certificate uploaded.

Create a Storage Account

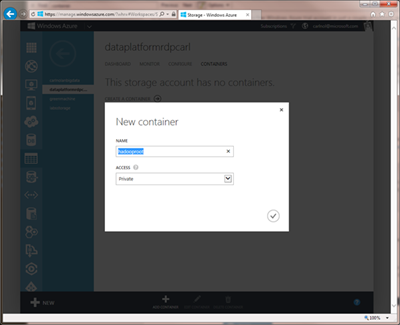

Next, under the storage account management create a new default container:

At this point the Azure subscription is ready.

Setting Up Your Environment

Once the Azure subscription has been configured, the next step is to configure your management environment.

The first step is to install and configure Windows Azure PowerShell. Windows Azure PowerShell is the scripting environment that allows you to control and automate the deployment and management of your workloads in Windows Azure. This includes provisioning virtual machines, setting up virtual networks and cross-premises networks, and managing cloud services in Windows Azure.

To use the cmdlets in Windows Azure PowerShell you will need to download and import the modules, as well as import and configure information that provides connectivity to Windows Azure through your subscription. For instructions, see Get Started with Windows Azure Cmdlets.

Prepare the Work Environment

The basic process for running the cmdlets by using the Windows Azure PowerShell command shell is as follows:

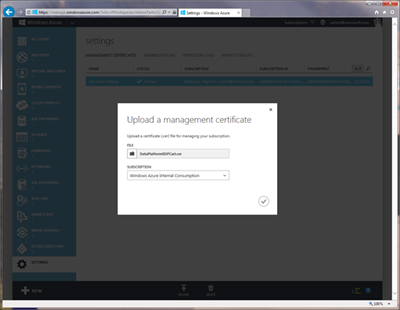

· Upload a Management Certificate. For more information about how to create and upload a management certificate, see How to: Manage Management Certificates in Windows Azure and the section entitled “Create a Certificate”.

· Install the Windows Azure module for Windows PowerShell. The install can be found on the Windows Azure Downloads page, under Windows.

· Finally set the Windows PowerShell execution policy. To achieve this run the Windows Azure PowerShell as an Administrator and execute the following command:

Set-ExecutionPolicy RemoteSigned

The Windows PowerShell execution policy determines the conditions under which configuration files and scripts are run. The Windows Azure PowerShell cmdlets need the execution policy set to a value of RemoteSigned, Unrestricted, or Bypass.
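As a minimal sketch, the check below reports whether the current policy is one of the three values listed above. The helper name Test-AzurePolicyAllowed is hypothetical and is not part of Windows Azure PowerShell; only Get-ExecutionPolicy is a built-in cmdlet.

```powershell
# Policies the text above lists as acceptable for the Windows Azure cmdlets.
$allowedPolicies = @('RemoteSigned', 'Unrestricted', 'Bypass')

# Hypothetical helper: returns $true when the given policy name is acceptable.
function Test-AzurePolicyAllowed {
    param([Parameter(Mandatory = $true)] [string] $Policy)
    return ($allowedPolicies -contains $Policy)
}

# Get-ExecutionPolicy returns the effective policy for the current session.
$currentPolicy = (Get-ExecutionPolicy).ToString()

if (Test-AzurePolicyAllowed -Policy $currentPolicy) {
    Write-Host "Execution policy '$currentPolicy' is sufficient."
} else {
    Write-Warning "Execution policy '$currentPolicy' is too restrictive; run Set-ExecutionPolicy RemoteSigned as an Administrator."
}
```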

Create a Certificate

From a Visual Studio Command Prompt run the following:

makecert -sky exchange -r -n "CN=<CertificateName>" -pe -a sha1 -len 2048 -ss My "<CertificateName>.cer"

Where <CertificateName> is the name that you want to use for the certificate; the output file must have a .cer extension. The command loads the private key into your user store, as indicated by the “-ss My” switch. In certmgr.msc, it appears under Certificates - Current User\Personal\Certificates.

Upload the new certificate to Azure:

If one needs to connect from several machines, the same certificate should also be installed into the user store on each of those machines.

Configure Connectivity

There are two ways one can configure connectivity between your workstation and Windows Azure: either manually, by configuring the management certificate and subscription details with the Set-AzureSubscription and Select-AzureSubscription cmdlets, or automatically, by downloading the PublishSettings file from Windows Azure and importing it. The settings for Windows Azure PowerShell are stored in: <user>\AppData\Roaming\Windows Azure PowerShell.

The PublishSettings file method works well when you are responsible for a limited number of subscriptions, but it adds a certificate to any subscription that you are an administrator or co-administrator for. To use this method:

· Execute the following command:

Get-AzurePublishSettingsFile

· Save the .publishSettings file locally.

· Import the downloaded .publishSettings file by running the PowerShell command:

Import-AzurePublishSettingsFile <publishSettings-file>

Security note: This file contains an encoded management certificate that will serve as your credentials to administer all aspects of your subscriptions and related services. Store this file in a secure location, or delete it after you follow these steps.

In complex or shared development environments, it might be desirable to manually configure the publish settings and subscription information, including any management certificates, for your Windows Azure subscriptions. This is achieved by running the following script:

$mySubID = "<SubscriptionID>"

$certThumbprint = "<Thumbprint>"

$myCert = Get-Item cert:\CurrentUser\My\$certThumbprint

$mySubName = "<SubscriptionName>"

$myStoreAcct = "<StorageAccount>"

Set-AzureSubscription -SubscriptionName $mySubName -Certificate $myCert -SubscriptionID $mySubID

Select-AzureSubscription -Default $mySubName

Set-AzureSubscription -SubscriptionName $mySubName -CurrentStorageAccount $myStoreAcct

To view the details of the Azure subscription, run the command:

Get-AzureSubscription -Default

Or:

Get-AzureSubscription -Current

This script uses the Set-AzureSubscription cmdlet. This cmdlet can be used to configure a default Windows Azure subscription, set a default storage account, or a custom Windows Azure service endpoint:

Set-AzureSubscription -DefaultSubscription $mySubName

You can also just select a subscription for the current session using:

Select-AzureSubscription -SubscriptionName $mySubName

When managing your cluster with multiple subscriptions available, it is advisable to set the default subscription.

Install HDInsight cmdlets

Ensure the HDInsight binaries are accessible and loaded. They can be downloaded from:

https://www.microsoft.com/en-gb/download/details.aspx?id=40724

Once downloaded and installed they are ready to be loaded into the Azure PowerShell environment:

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

This assumes the Windows Azure HDInsight PowerShell Tools were installed to the default directory.

Cluster Provisioning

There are various ways one can provision a new cluster. If using the Management Portal there is a simple UX process one can follow:

However, for an automated process it is recommended that PowerShell is utilized. When running the scripts presented here one has to remember to load the HDInsight management binaries, as outlined in the “Install HDInsight cmdlets” step.

The following sections present a series of scripts for automating cluster management. Further examples can also be found at Microsoft .Net SDK for Hadoop - PowerShell Cmdlets for Cluster Management.

Provision Base Cluster

Firstly, one needs access to the Subscription Id and Certificate for the current Azure Subscription, which was registered in the “Setting Up Your Environment” step.

When running these scripts the italicized variables will first need to be defined.

1. In this version you need to explicitly specify subscription information for the cmdlets. The plan is that once the cmdlets are integrated with the Azure PowerShell tools this step won’t be necessary. One can get the Default or Current subscription:

$subscriptionInfo = Get-AzureSubscription -Default

2. Once the Subscription Information has been obtained the properties of the Subscription are accessible:

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

3. Get the Storage Account Key so you can use it later.

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

4. Next get the Storage Account information:

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

5. Define the PS Credentials to be used when creating the cluster:

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = "MyPassword"

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

6. Define the final cluster creation parameters:

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$blobStorage = "$storeAccount.blob.core.windows.net"

7. Finally, create the new cluster. The cmdlet will return a Cluster object in about 10-15 minutes, once the cluster has finished provisioning:

New-AzureHDInsightCluster -Subscription $subId -Certificate $cert -Name $clusterName -Location $location -DefaultStorageAccountName $blobStorage -DefaultStorageAccountKey $key -DefaultStorageContainerName $containerDefault -Credential $clusterCreds -ClusterSizeInNodes $numberNodes

Once the cluster has been created you will see the results displayed on the screen:

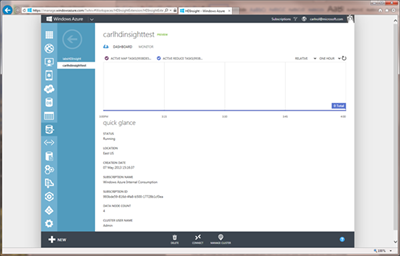

Name : carlhdinsighttest

ConnectionUrl : https://carlhdinsighttest.azurehdinsight.net

State : Running

CreateDate : 07/11/2013 14:16:37

UserName : Username

Location : Location

ClusterSizeInNodes : 4

You will also be able to see the cluster in the management portal:

If the subscription one is managing is not the configured default, before creating the cluster, one should execute the following command:

Set-AzureSubscription -DefaultSubscription $mySubName

To create a script that enables one to pass in parameters, such as the number of required hosts, you can define a Param statement as the first line of the script:

Param($Hosts = 4, [string] $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot")
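As an alternative sketch, and not the approach used in the scripts in this post, PowerShell parameter attributes can express the same requirements declaratively. The example wraps the parameters in a hypothetical function, New-ClusterRequest, so that it can be run on its own; the upper bound of 32 hosts is an arbitrary assumption chosen for illustration, not an HDInsight limit.

```powershell
# Hypothetical function showing attribute-based parameter validation.
function New-ClusterRequest {
    param(
        # Assumed range for illustration only; adjust to your subscription limits.
        [ValidateRange(1, 32)]
        [int] $Hosts = 4,

        # Mandatory replaces the $(throw "...") default used in the post.
        [Parameter(Mandatory = $true)]
        [ValidateNotNullOrEmpty()]
        [string] $Cluster,

        [string] $StorageContainer = "hadooproot"
    )

    # Return the validated values as a hashtable.
    return @{
        Hosts            = $Hosts
        Cluster          = $Cluster
        StorageContainer = $StorageContainer
    }
}

$request = New-ClusterRequest -Cluster "carlhdinsighttest"
Write-Host "Hosts:" $request.Hosts "Cluster:" $request.Cluster "Container:" $request.StorageContainer
```

With this form, an out-of-range host count is rejected during parameter binding, before any of the script body runs.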

The complete script would thus be as follows:

Param($Hosts = 4, [string] $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot")

# Import the management module and assemblies

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

Add-Type -AssemblyName System.Web

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = "mypassword"

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$blobStorage = "$storeAccount.blob.core.windows.net"

# Create the cluster

Write-Host "Creating '$numberNodes' Node Cluster named: $clusterName" -f yellow

Write-Host "Storage Account '$storeAccount' and Container '$containerDefault'" -f yellow

Write-Host "User '$clusterUsername' Password '$clusterPassword'" -f green

New-AzureHDInsightCluster -Subscription $subId -Certificate $cert -Name $clusterName -Location $location -DefaultStorageAccountName $blobStorage -DefaultStorageAccountKey $key -DefaultStorageContainerName $containerDefault -Credential $clusterCreds -ClusterSizeInNodes $numberNodes

Write-Host "Created '$numberNodes' Node Cluster: $clusterName" -f yellow

The script can then be executed as follows:

. "C:\Scripts\ClusterCreate.ps1" -Hosts 4 -Cluster "carlhdinsighttest" -StorageContainer "hadooproot"

Of course other variables can also be passed in.
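The long New-AzureHDInsightCluster line in the script above can be made easier to read with splatting, a standard PowerShell feature that passes a hashtable of parameter names and values to a cmdlet. The sketch below assumes the variables defined earlier in the script ($subId, $cert, and so on) and uses only the parameter names that already appear in the post. The Get-Command guard is an addition so the fragment can be run without the HDInsight module loaded.

```powershell
# Collect the cmdlet arguments in a hashtable; keys are the parameter names
# used by the New-AzureHDInsightCluster call in the script above.
$clusterArgs = @{
    Subscription                = $subId
    Certificate                 = $cert
    Name                        = $clusterName
    Location                    = $location
    DefaultStorageAccountName   = $blobStorage
    DefaultStorageAccountKey    = $key
    DefaultStorageContainerName = $containerDefault
    Credential                  = $clusterCreds
    ClusterSizeInNodes          = $numberNodes
}

# Only attempt the call when the HDInsight cmdlets have been imported.
if (Get-Command New-AzureHDInsightCluster -ErrorAction SilentlyContinue) {
    New-AzureHDInsightCluster @clusterArgs
} else {
    Write-Warning "HDInsight cmdlets are not loaded; skipping cluster creation."
}
```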

Manage Cluster

One can view your current clusters within the current subscription using the following command:

Get-AzureHDInsightCluster -Subscription $subId -Certificate $cert

To view the details of a specific cluster use the following command:

Get-AzureHDInsightCluster $clusterName -Subscription $subId -Certificate $cert

To delete the cluster run the command:

Remove-AzureHDInsightCluster $clusterName -Subscription $subId -Certificate $cert

Thus a script one could use to delete clusters would be:

Param($Cluster = $(throw "Cluster Name Required."))

# Import the management module

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$clusterName = $Cluster

# Delete the cluster

Write-Host "Deleting Cluster named: $clusterName" -f yellow

Remove-AzureHDInsightCluster $clusterName -Subscription $subId -Certificate $cert

The script can then be executed as follows:

. "C:\Scripts\ClusterDelete.ps1" -Cluster "carlhdinsighttest"

A few words need to be said about versioning and cluster names.

When a cluster is provisioned you will currently always get the latest build. However, if there are breaking changes between the build you developed against and the latest build, code that currently works could break. Future versions of the cluster provisioning may allow a version number to be specified.

Also, when you delete a cluster there is no guarantee that the name will still be available when you recreate it. To reduce the chance that your name is taken in the meantime, abstract cluster names should be used.
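One possible reading of an abstract name is a fixed prefix combined with a random suffix, which makes a collision with someone else's cluster unlikely. The sketch below is an illustration under that assumption; the helper New-ClusterName is hypothetical, and the naming rules enforced by HDInsight are not checked here.

```powershell
# Hypothetical helper: builds a lower-case name from a prefix plus eight
# hexadecimal characters taken from a newly generated GUID.
function New-ClusterName {
    param([string] $Prefix = "hdi")

    $suffix = [guid]::NewGuid().ToString("N").Substring(0, 8)
    return ($Prefix + $suffix).ToLowerInvariant()
}

$Cluster = New-ClusterName -Prefix "wordcount"
Write-Host "Generated cluster name: $Cluster"
```

The generated value can then be passed as the -Cluster argument to the create, submit, and delete scripts.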

Provision Customized Cluster

You can also provision a cluster and configure it to connect to more than one Azure Blob storage account or to custom Hive and Oozie meta-stores.

This advanced feature allows you to separate the lifetime of your data and metadata from the lifetime of the cluster. This not only includes job submission details but also allows one to retain Hive metadata, and hence table definitions, as long as Hive External tables are used.

Using the same principle of defining the italicized variables first, the first step is to get the subscription details and the storage keys of the storage accounts you want to connect your cluster to, in this case 2 storage accounts:

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$storeAccountBck = $storeAccount + "bck" # Assume same name with bck suffix

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$keyBck = Get-AzureStorageKey $storeAccountBck | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = "Password"

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$blobStorage = "$storeAccount.blob.core.windows.net"

$blobStorageBck = "$storeAccountBck.blob.core.windows.net"

In this instance I have assumed that the secondary (backup) storage account has the same name as the original with a “bck” suffix. This however can be configured for your environment.

The next step is to create your custom SQL Azure Database and Server following this blog post or get credentials to the existing one.

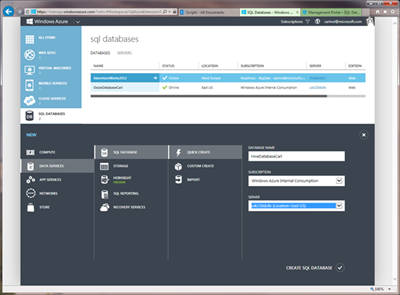

To create a SQL Database one can easily use the management portal:

Using this connectivity information you can create a cluster config object, pipe in the additional storage and meta-store configuration, and then finally pipe this config object into the New-AzureHDInsightCluster cmdlet to create a cluster based on this custom configuration.

New-AzureHDInsightClusterConfig -ClusterSizeInNodes $numberNodes |
Set-AzureHDInsightDefaultStorage -StorageAccountName $blobStorage -StorageAccountKey $key -StorageContainerName $containerDefault |
Add-AzureHDInsightStorage -StorageAccountName $blobStorageBck -StorageAccountKey $keyBck |
Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseOozie -Credential $databaseCreds -MetastoreType OozieMetastore |
Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseHive -Credential $databaseCreds -MetastoreType HiveMetastore |
New-AzureHDInsightCluster -Subscription $subId -Certificate $cert -Credential $clusterCreds -Name $clusterName -Location $location

In this instance I have again assumed that the Oozie and Hive database servers are the same and that the credentials are shared. As before, this can easily be configured for your environment.

As before, one can use the PowerShell Param option to create a script that enables passing in parameters such as the number of hosts, the cluster name, etc.

A complete configurable script would be as follows:

Param($Hosts = 4, [string] $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot")

# Import the management module and assemblies

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

Add-Type -AssemblyName System.Web

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$storeAccountBck = $storeAccount + "bck" # Assume same name with bck suffix

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$keyBck = Get-AzureStorageKey $storeAccountBck | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = "Password"

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$blobStorage = "$storeAccount.blob.core.windows.net"

$blobStorageBck = "$storeAccountBck.blob.core.windows.net"

# Define variables for the SQL database connectivity

$sqlServer = "servername.database.windows.net"

$sqlUsername = "SqlUsername"

$sqlPassword = "SqlPassword"

$sqlpasswd = ConvertTo-SecureString $sqlPassword -AsPlainText -Force

$databaseCreds = New-Object System.Management.Automation.PSCredential($sqlUsername, $sqlpasswd)

$databaseHive = "HiveDatabaseName"

$databaseOozie = "OozieDatabaseName"

# Create the cluster

Write-Host "Creating '$numberNodes' Node Cluster named: $clusterName" -f yellow

Write-Host "Storage Account '$storeAccount' and Container '$containerDefault'" -f yellow

Write-Host "Storage Account Backup '$storeAccountBck'" -f yellow

Write-Host "User '$clusterUsername' Password '$clusterPassword'" -f green

New-AzureHDInsightClusterConfig -ClusterSizeInNodes $numberNodes | Set-AzureHDInsightDefaultStorage -StorageAccountName $blobStorage -StorageAccountKey $key -StorageContainerName $containerDefault | Add-AzureHDInsightStorage -StorageAccountName $blobStorageBck -StorageAccountKey $keyBck | Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseOozie -Credential $databaseCreds -MetastoreType OozieMetastore | Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseHive -Credential $databaseCreds -MetastoreType HiveMetastore | New-AzureHDInsightCluster -Subscription $subId -Certificate $cert -Credential $clusterCreds -Name $clusterName -Location $location

Write-Host "Created '$numberNodes' Node Cluster: $clusterName" -f yellow

The script can then be executed as follows:

. "C:\Scripts\ClusterCreateCustom.ps1" -Hosts 4 -Cluster "carlhdinsighttest" -StorageContainer "hadooproot"

Of course other variables can also be passed in.

Job Submission

In addition to managing the HDInsight cluster the Microsoft .Net SDK for Hadoop also allows one to write MapReduce jobs utilizing the .Net Framework. Under the covers this is employing the Hadoop Streaming interface. The documentation for creating such jobs can be found at:

https://hadoopsdk.codeplex.com/wikipage?title=Map%2fReduce

MRRunner Support

To submit such jobs there is a command-line utility called MRRunner that should be utilized. To support the MRRunner utility you should have an assembly (a .net DLL or EXE) that defines at least one implementation of HadoopJob<>.

If the Dll contains only one implementation of HadoopJob<>, you can run the job with:

MRRunner -dll MyDll

If the Dll contains multiple implementations of HadoopJob<>, then you need to indicate the one you wish to run:

MRRunner -dll MyDll -class MyClass

To supply options to your job, pass them as trailing arguments on the command-line, after a double-hyphen:

MRRunner -dll MyDll -class MyClass -- extraArg1 extraArg2

These additional arguments are provided to your job via a context object that is available to all methods on HadoopJob<>.

Local PowerShell Submission

Of course one can also submit a job directly from a .Net executable, and thus from PowerShell scripts.

MapReduceJob is a property on the Hadoop connection object. It provides access to an implementation of the IStreamingJobExecutor interface, which handles the creation and execution of Hadoop Streaming jobs. Depending on how the connection object was created, it can execute jobs locally using the Hadoop command line or against a remote cluster using the WebHCat client library.

Under the covers the MRRunner utility will invoke the LocalStreamingJobExecutor implementation on your behalf.

Alternatively one can invoke the executor directly and request it execute a Hadoop Job:

var hadoop = Hadoop.Connect();

hadoop.MapReduceJob.ExecuteJob<JobType>(arguments);

Utilizing this approach one can thus integrate Job Submission into cluster management scripts:

# Define Base Paths

$BasePath = "C:\Users\Carl\Projects"

$dllPath = $BasePath + "\WordCountSampleApplication\Debug"

$submitterDll = $dllPath + "\WordCount.dll"

$hadoopcmd = $env:HADOOP_HOME + "/bin/hadoop.cmd"

# Add submission file references

Add-Type -Path ($dllPath + "\microsoft.hadoop.mapreduce.dll")

# Define the Type for the Job

$submitterAssembly = [System.Reflection.Assembly]::LoadFile($submitterDll)

[Type] $jobType = $submitterAssembly.GetType("WordCountSampleApplication.WordCount", 1)

# Connect and Run the Job

$hadoop = [Microsoft.Hadoop.MapReduce.Hadoop]::Connect()

$job = $hadoop.MapReduceJob

$result = $job.ExecuteJob($jobType)

Write-Host "Job Run Information"

Write-Host "Job Id: " $result.Id

Write-Host "Exit Code: " $result.Info.ExitCode

The only challenge becomes managing the generic objects within PowerShell.
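The script above sidesteps generics by calling ExecuteJob with a [Type] argument. Where only a generic method is available, one general technique is to close the method over a type through reflection and then invoke it. The sketch below illustrates that technique using Enumerable.Repeat from the .NET Framework purely as a stand-in; it does not call the Hadoop SDK, and whether the same steps are needed for any particular SDK method is not something this example establishes.

```powershell
# Enumerable.Repeat<TResult>(TResult element, int count) is a standard .NET
# generic method, used here only to demonstrate the reflection steps.
$openMethod = [System.Linq.Enumerable].GetMethod("Repeat")

# Close the generic method over a concrete type held in a [Type] variable.
[Type] $elementType = [string]
$closedMethod = $openMethod.MakeGenericMethod($elementType)

# Static method, so the target object is $null; arguments go in an array.
$items = @($closedMethod.Invoke($null, @("word", 3)))

Write-Host "Item count:" $items.Count
```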

In this case I have used a version of the WordCount sample that comes with the SDK. I have removed the Driver entry point and compiled the assembly into a DLL rather than an EXE.

Remote PowerShell Submission

Of course the ultimate goal is to submit the job from your management console. The process for this is very similar to the Local submission, with the inclusion of the connection information for the Azure cluster.

To provide flexibility in the job configuration, it is desirable to allow a configuration object to be defined in the PowerShell script and the mappers and reducers to be specified. Thus the full submission script would be as follows:

Param($Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot", [string] $InputPath = $(throw "Input Path Required."), [string] $OutputFolder = $(throw "Output Path Required."), [string] $Dll = $(throw "MapReduce Dll Path Required."), [string] $Mapper = $(throw "Mapper Type Required."), [string] $Reducer = $Null, [string] $Combiner = $Null)

# Define all local variable names for script execution

$submitterDll = $Dll

$dllPath = Split-Path $submitterDll -parent

$dllFile = Split-Path $submitterDll -leaf

# Import the management module and assemblies

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

Add-Type -Path ($dllPath + "\microsoft.hadoop.mapreduce.dll")

Add-Type -Path ($dllPath + "\microsoft.hadoop.webclient.dll")

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$clusterUsername = "Admin"

$clusterPassword = "Password"

$hadoopUsername = "Hadoop"

$containerDefault = $StorageContainer

$clusterName = $Cluster

$clusterHttp = "https://$clusterName.azurehdinsight.net"

$blobStorage = "$storeAccount.blob.core.windows.net"

# Define the Types for the Job (Mapper, Reducer, Combiner)

$submitterAssembly = [System.Reflection.Assembly]::LoadFile($submitterDll)

[Type] $mapperType = $submitterAssembly.GetType($Mapper, 1)

[Type] $reducerType = $Null

if ($Reducer) { $reducerType = $submitterAssembly.GetType($Reducer, 1) }

[Type] $combinerType = $Null

if ($Combiner) { $combinerType = $submitterAssembly.GetType($Combiner, 1) }

# Define the configuration properties

$config = New-Object Microsoft.Hadoop.MapReduce.HadoopJobConfiguration

[Boolean] $config.Verbose = $True

$config.InputPath = $InputPath

$config.OutputFolder = $OutputFolder

# Define the connection properties

[Boolean] $createContinerIfNotExist = $True

[Uri] $clusterUri = New-Object System.Uri $clusterHttp

# Connect and Run the Job

Write-Host "Executing Job Dll '$dllFile' on Cluster $clusterName" -f yellow

$hadoop = [Microsoft.Hadoop.MapReduce.Hadoop]::Connect($clusterUri, $clusterUsername, $hadoopUsername, $clusterPassword, $blobStorage, $key, $containerDefault, $createContinerIfNotExist)

$job = $hadoop.MapReduceJob

$result = $job.Execute($mapperType, $reducerType, $combinerType, $config)

Write-Host "Job Run Information" -f yellow

Write-Host "Job Id: " $result.Id

Write-Host "Exit Code: " $result.Info.ExitCode

The HadoopJobConfiguration allows for the definition of input and output files, along with other job parameters.

To execute the job one has to provide the types for the Mapper, Reducer, and Combiner. If a Reducer or Combiner is not needed then the parameter value of $Null can be used:

. "C:\Scripts\JobSubmissionAzure.ps1" -Cluster "carlhdinsighttest" -StorageContainer "hadooproot" -InputPath "/wordcount/input" -OutputFolder "/wordcount/output" -Dll "C:\Projects\WordCountSampleApplication\ \Debug\WordCount.dll" -Mapper "WordCountSampleApplication.WordCountMapper" -Reducer "WordCountSampleApplication.WordCountReducer" -Combiner "WordCountSampleApplication.WordCountReducer"

One has to remember that in this case, as we are submitting the job to an Azure cluster, the default file system will be WASB.

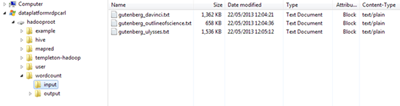

To run this sample I uploaded some text files to the appropriate Azure storage location:

The output will then show up under wordcount/output in the default container.

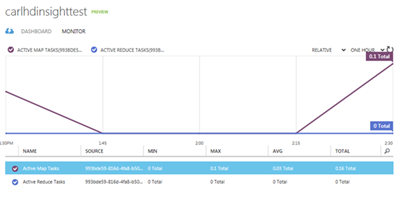

After running this job you should see, from within the management portal, the metrics for the number of mappers and reducers executed being updated:

Unfortunately at this point in time you will not see your job within the job history when managing the cluster. For this you will have to use the standard Hadoop UI interfaces; accessible by connecting to the head node of the cluster.

As an alternative to this approach one could define a HadoopJob class configuration within the code, in addition to the mappers and reducers, such as:

class WordCount : HadoopJob<WordCountMapper, WordCountReducer, WordCountReducer>

To execute this job one would just need to define the type for the HadoopJob, which provides the necessary configuration details:

[Type] $jobType = $submitterAssembly.GetType("WordCountSampleApplication.WordCount", 1)

...

$result = $job.ExecuteJob($jobType)

The code in either case is very similar but the former approach is probably more flexible.

Specifying WASB Paths

As mentioned, the default file system scheme when submitting MapReduce jobs is Azure Blob Storage (WASB). This means the default container specified when creating the cluster is used as the root.

In the example above the path “/wordcount/input” actually equates to:

wasb://hadooproot@mystorageaccount.blob.core.windows.net/wordcount/input

Thus if one wanted to operate out of a different default container such as “data” fully-qualified names could be specified such as:

-InputPath "wasb://data@mystorageaccount.blob.core.windows.net/books"

-OutputFolder "wasb://data@mystorageaccount.blob.core.windows.net/wordcount/output"

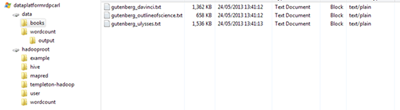

This would equate to the “data” container:

The containers being referenced just need to be under the same storage account.
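As a small sketch of the mapping described in this section, the hypothetical helper below (Get-WasbPath is not part of any SDK) builds a fully-qualified WASB path from a container name, a storage account name, and a relative path. The account name mystorageaccount is the placeholder used in the examples above.

```powershell
# Hypothetical helper: composes wasb://<container>@<account>.blob.core.windows.net/<path>
function Get-WasbPath {
    param(
        [Parameter(Mandatory = $true)] [string] $Container,
        [Parameter(Mandatory = $true)] [string] $StorageAccount,
        [string] $Path = "/"
    )

    # Drop any leading slash so the result has exactly one separator.
    $relative = $Path.TrimStart('/')
    return "wasb://${Container}@${StorageAccount}.blob.core.windows.net/$relative"
}

# The default-container path from the earlier example.
$defaultInput = Get-WasbPath -Container "hadooproot" -StorageAccount "mystorageaccount" -Path "/wordcount/input"

# The same account, but operating out of the "data" container.
$dataInput = Get-WasbPath -Container "data" -StorageAccount "mystorageaccount" -Path "/books"

Write-Host $defaultInput
Write-Host $dataInput
```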

Elastic MapReduce Submissions

The idea behind an Elastic Service is that the cluster can be brought up, with the necessary hosts, when job execution is necessary. To achieve this, with the provided scripts, one can use the following approach:

Param($Hosts = 4, $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot", [string] $InputPath = $(throw "Input Path Required."), [string] $OutputFolder = $(throw "Output Path Required."), [string] $Dll = $(throw "MapReduce Dll Path Required."), [string] $Mapper = $(throw "Mapper Type Required."), [string] $Reducer = $Null, [string] $Combiner = $Null)

# Create the Cluster

. ".\ClusterCreate.ps1" -Hosts $Hosts -Cluster $Cluster -StorageContainer $StorageContainer

# Execute the Job

. ".\JobSubmissionAzure.ps1" -Cluster $Cluster -StorageContainer $StorageContainer -InputPath $InputPath -OutputFolder $OutputFolder -Dll $Dll -Mapper $Mapper -Reducer $Reducer -Combiner $Combiner

# Delete the Cluster

. ".\ClusterDelete.ps1" -Cluster $Cluster

To execute the script one just has to execute:

. "C:\Scripts\JobSubmissionElastic.ps1" -Hosts 4 -Cluster "carlhdinsighttest" -StorageContainer "hadooproot" -InputPath "/wordcount/input" -OutputFolder "/wordcount/output" -Dll "C:\Projects\WordCountSampleApplication\ \Debug\WordCount.dll" -Mapper "WordCountSampleApplication.WordCountMapper" -Reducer "WordCountSampleApplication.WordCountReducer" -Combiner "WordCountSampleApplication.WordCountReducer"

This will create the cluster, run the required job, and then delete the cluster. During the creation the cluster name and number of hosts are specified, and during the job submission the input and output paths are specified.
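One consideration with an elastic approach is that a failed job could leave a running cluster behind. A hedged sketch of one way to guard against this is to wrap the job step in try/finally so that the delete step always runs. The function Invoke-ElasticJob and its three script-block parameters are hypothetical names introduced for illustration; the script blocks here only write messages, standing in for calls to the create, submit, and delete scripts.

```powershell
# Hypothetical wrapper: create, run, and always delete, even if the run fails.
function Invoke-ElasticJob {
    param(
        [Parameter(Mandatory = $true)] [scriptblock] $Create,
        [Parameter(Mandatory = $true)] [scriptblock] $Run,
        [Parameter(Mandatory = $true)] [scriptblock] $Delete
    )

    # If creation itself fails there is nothing to clean up, so it sits outside the try.
    & $Create
    try {
        & $Run
    }
    finally {
        # Runs whether or not the job step threw an error.
        & $Delete
    }
}

# Stand-in steps; in practice each block would invoke the corresponding script.
Invoke-ElasticJob `
    -Create { Write-Host "Creating cluster" } `
    -Run    { Write-Host "Running job" } `
    -Delete { Write-Host "Deleting cluster" }
```

In a real script each block would contain the matching call, for example the ClusterDelete.ps1 invocation in the Delete block.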

One could of course roll all the script elements into a single reusable script, as follows:

Param($Hosts = 4, $Cluster = $(throw "Cluster Name Required."), [string] $StorageContainer = "hadooproot", [string] $InputPath = $(throw "Input Path Required."), [string] $OutputFolder = $(throw "Output Path Required."), [string] $Dll = $(throw "MapReduce Dll Path Required."), [string] $Mapper = $(throw "Mapper Type Required."), [string] $Reducer = $Null, [string] $Combiner = $Null, $MapTasks = $Null)

# Define all local variable names for script execution

$submitterDll = $Dll

$dllPath = Split-Path $submitterDll -parent

$dllFile = Split-Path $submitterDll -leaf

# Import the management module and assemblies

Import-Module "C:\Program Files (x86)\Windows Azure HDInsight PowerShell\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet\Microsoft.WindowsAzure.Management.HDInsight.Cmdlet.dll"

Add-Type -AssemblyName System.Web

Add-Type -Path ($dllPath + "\microsoft.hadoop.mapreduce.dll")

Add-Type -Path ($dllPath + "\microsoft.hadoop.webclient.dll")

# Get the subscription information and set variables

$subscriptionInfo = Get-AzureSubscription -Default

$subName = $subscriptionInfo | %{ $_.SubscriptionName }

$subId = $subscriptionInfo | %{ $_.SubscriptionId }

$cert = $subscriptionInfo | %{ $_.Certificate }

$storeAccount = $subscriptionInfo | %{ $_.CurrentStorageAccountName }

$storeAccountBck = $storeAccount + "bck" # Assume same name with bck suffix

$key = Get-AzureStorageKey $storeAccount | %{ $_.Primary }

$keyBck = Get-AzureStorageKey $storeAccountBck | %{ $_.Primary }

$storageAccountInfo = Get-AzureStorageAccount $storeAccount

$location = $storageAccountInfo | %{ $_.Location }

$hadoopUsername = "Hadoop"

$clusterUsername = "Admin"

$clusterPassword = [System.Web.Security.Membership]::GeneratePassword(20, 5)

$secpasswd = ConvertTo-SecureString $clusterPassword -AsPlainText -Force

$clusterCreds = New-Object System.Management.Automation.PSCredential($clusterUsername, $secpasswd)

$clusterName = $Cluster

$numberNodes = $Hosts

$containerDefault = $StorageContainer

$clusterHttp = "https://$clusterName.azurehdinsight.net"

$blobStorage = "$storeAccount.blob.core.windows.net"

$blobStorageBck = "$storeAccountBck.blob.core.windows.net"

# Define variables for the SQL database connectivity

$sqlServer = "mydatabaseserver.database.windows.net"

$sqlUsername = "SqlUsername"

$sqlPassword = "SqlPassword"

$sqlpasswd = ConvertTo-SecureString $sqlPassword -AsPlainText -Force

$databaseCreds = New-Object System.Management.Automation.PSCredential($sqlUsername, $sqlpasswd)

$databaseHive = "HiveDatabaseCarl"

$databaseOozie = "OozieDatabaseCarl"

# Create the cluster

Write-Host "Creating '$numberNodes' Node Cluster named: $clusterName" -f yellow

Write-Host "Storage Account '$storeAccount' and Container '$containerDefault'" -f yellow

Write-Host "Storage Account Backup '$storeAccountBck'" -f yellow

Write-Host "User '$clusterUsername' Password '$clusterPassword'" -f green

New-AzureHDInsightClusterConfig -ClusterSizeInNodes $numberNodes | Set-AzureHDInsightDefaultStorage -StorageAccountName $blobStorage -StorageAccountKey $key -StorageContainerName $containerDefault | Add-AzureHDInsightStorage -StorageAccountName $blobStorageBck -StorageAccountKey $keyBck | Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseOozie -Credential $databaseCreds -MetastoreType OozieMetastore | Add-AzureHDInsightMetastore -SqlAzureServerName $sqlServer -DatabaseName $databaseHive -Credential $databaseCreds -MetastoreType HiveMetastore | New-AzureHDInsightCluster -Subscription $subId -Certificate $cert -Credential $clusterCreds -Name $clusterName -Location $location

Write-Host "Created '$numberNodes' Node Cluster: $clusterName" -f yellow

# Define the Types for the Job (Mapper, Reducer, Combiner)

$submitterAssembly = [System.Reflection.Assembly]::LoadFile($submitterDll)

[Type] $mapperType = $submitterAssembly.GetType($Mapper, 1)

[Type] $reducerType = $Null

if ($Reducer) { $reducerType = $submitterAssembly.GetType($Reducer, 1) }

[Type] $combinerType = $Null

if ($Combiner) { $combinerType = $submitterAssembly.GetType($Combiner, 1) }

# Define the configuration properties

$config = New-Object Microsoft.Hadoop.MapReduce.HadoopJobConfiguration

[Boolean] $config.Verbose = $True

$config.InputPath = $InputPath

$config.OutputFolder = $OutputFolder

if ($MapTasks) {$config.AdditionalGenericArguments.Add("-Dmapred.map.tasks=$MapTasks")}

# Define the connection properties

[Boolean] $createContainerIfNotExist = $True

[Uri] $clusterUri = New-Object System.Uri $clusterHttp

# Connect and Run the Job

Write-Host "Executing Job Dll '$dllFile' on Cluster $clusterName" -f yellow

$hadoop = [Microsoft.Hadoop.MapReduce.Hadoop]::Connect($clusterUri, $clusterUsername, $hadoopUsername, $clusterPassword, $blobStorage, $key, $containerDefault, $createContainerIfNotExist)

$job = $hadoop.MapReduceJob

$result = $job.Execute($mapperType, $reducerType, $combinerType, $config)

Write-Host "Job Run Information" -f yellow

Write-Host "Job Id: " $result.Id

Write-Host "Exit Code: " $result.Info.ExitCode

# Delete the cluster

Write-Host "Deleting Cluster named: $clusterName" -f yellow

Remove-AzureHDInsightCluster $clusterName -Subscription $subId -Certificate $cert

In this instance the cluster password is randomly generated, as it only needs to exist for the lifetime of the job execution. In practice, one could also randomly generate the name of the cluster.
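As a rough sketch of the random cluster name idea, the following derives a name from a prefix and part of a GUID. The New-RandomClusterName function, the default prefix, and the suffix length are all assumptions made for illustration. The sketch also assumes that a name made up of lowercase letters and digits is acceptable for a cluster.

```powershell
# Hypothetical helper (an assumption, not part of the original scripts):
# builds a cluster name from a prefix plus random hexadecimal characters.
function New-RandomClusterName {
    param(
        [string] $Prefix = "hdi",
        [ValidateRange(1, 32)] [int] $SuffixLength = 8
    )

    # The "N" format yields 32 hexadecimal digits without hyphens.
    $suffix = [Guid]::NewGuid().ToString("N").Substring(0, $SuffixLength)

    # Lowercase the result so the name is consistent regardless of prefix casing.
    ($Prefix + $suffix).ToLowerInvariant()
}

# Example: generate a name for a short-lived word count cluster.
$randomClusterName = New-RandomClusterName -Prefix "wordcount"
Write-Host "Generated cluster name: $randomClusterName"
```

In the combined script the generated value could be assigned to $clusterName in place of the $Cluster parameter. The name would then need to be written out so the cluster can be identified while the job is running.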

This Elastic Job Submission process is possible because the storage used for input and output is Azure Blob Storage. As such, the cluster is only needed for compute operations and not for storage. In addition, the job history is persisted across cluster creations in the specified SQL databases.

Of course, one could also customize these scripts to include further parameters, such as additional job arguments, to make them applicable to more job types. The script presented here already takes the number of mappers into consideration, specified with the MapTasks parameter.
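To illustrate how additional job arguments might be passed, the sketch below turns a hashtable of Hadoop properties into the same "-Dname=value" strings that the script adds for the number of map tasks. The ConvertTo-GenericArgument function and the example property values are assumptions for illustration only.

```powershell
# Hypothetical helper (an assumption, not part of the original scripts):
# converts a hashtable of Hadoop properties into "-Dname=value" strings.
function ConvertTo-GenericArgument {
    param(
        [Parameter(Mandatory = $true)] [hashtable] $Properties
    )

    # Sort the keys so the output order is deterministic.
    foreach ($name in ($Properties.Keys | Sort-Object)) {
        "-D{0}={1}" -f $name, $Properties[$name]
    }
}

# Example property set; mapred.map.tasks is the property the script already sets.
$jobProperties = @{
    "mapred.map.tasks"    = 8
    "mapred.reduce.tasks" = 2
}
$genericArguments = @(ConvertTo-GenericArgument -Properties $jobProperties)

# With the $config object from the script, each argument could then be added with:
#   $genericArguments | ForEach-Object { $config.AdditionalGenericArguments.Add($_) }
```

The hashtable could be exposed as an extra script parameter, so that callers can supply job-specific settings without editing the script.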

References

Get Started with Windows Azure Cmdlets

Microsoft .Net SDK for Hadoop - PowerShell Cmdlets for Cluster Management

How to: Manage Management Certificates in Windows Azure

Windows Azure SQL Database Management with PowerShell

Microsoft .Net SDK for Hadoop - Map/Reduce

Conclusion

This post has explained how to manage an HDInsight cluster and submit .Net MapReduce jobs using a management console and Windows PowerShell. The ultimate goal was to provide an approach for managing Elastic Job Submissions, where the cluster only exists for the lifetime of the job.

This Elastic approach to job submissions is possible when using Windows Azure Storage Blobs for data retention and Windows Azure SQL Database for job history. Configuring the cluster so that compute and storage are separated allows the cluster to exist when, and only when, it is needed for computational processing.