Hadoop Streaming and Windows Azure Blob Storage

One of the cool features of the Microsoft Distribution of Hadoop (MDH) is the native support for Windows Azure Blob Storage.

By default, one can omit the scheme when performing HDFS operations, such that:

hadoop fs -lsr /mobile

is equivalent to:

hadoop fs -lsr hdfs:///mobile

These commands default to the HDFS scheme. Although Hadoop comes with its own distributed file system (HDFS), it also has a general-purpose file system abstraction. With MDH, a new scheme, “asv”, has been introduced to give seamless access to Windows Azure Blob Storage. Thus one can write commands such as:

hadoop fs -copyFromLocal C:\SampleData\MobileSampleDataBrief.txt asv://mobiledata/data/sampledata0.txt

hadoop fs -copyFromLocal C:\SampleData\MobileSampleDataFull.txt asv://mobiledata/data/sampledata1.txt
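To make the scheme defaulting concrete, here is a minimal Python sketch. It is not Hadoop's actual resolution code. It assumes the default file system is HDFS, as in the commands above, and the helper names `qualify` and `describe` are hypothetical. Reading the authority part of an asv URI (`mobiledata`) as the blob container is also an assumption made for illustration.

```python
from urllib.parse import urlsplit

# Assumption: the cluster's default file system is HDFS, as in the post.
DEFAULT_SCHEME = "hdfs"


def qualify(path, default_scheme=DEFAULT_SCHEME):
    """Return path unchanged if it names a scheme, else prefix the default one."""
    if urlsplit(path).scheme:
        return path
    return f"{default_scheme}://{path}"


def describe(path):
    """Split a (possibly scheme-less) path into scheme, authority, and path."""
    parts = urlsplit(qualify(path))
    return parts.scheme, parts.netloc, parts.path


if __name__ == "__main__":
    for p in ("/mobile", "hdfs:///mobile", "asv://mobiledata/data/sampledata0.txt"):
        print(p, "->", describe(p))
```

The sketch only handles forward-slash paths; local Windows paths such as the `C:\SampleData` source files are deliberately left out, because a drive letter would be misread as a scheme.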

Listing the uploaded data:

hadoop fs -lsr asv://mobiledata/data

You will see:

c:\apps\dist>hadoop fs -lsr asv://mobiledata/data
-rwxrwxrwx 1 0 2012-01-05 18:10 /data/$$$.$$$
-rwxrwxrwx 1 4000 2011-12-30 20:01 /data/sampledata0.txt
-rwxrwxrwx 1 5015508 2011-12-30 20:01 /data/sampledata1.txt

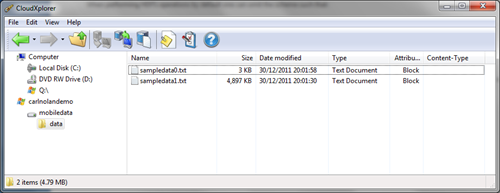

Using CloudXplorer, one can validate the data in Azure:

To enable this feature one just has to update the core-site.xml configuration file and add the following node entries (of course with one’s account name and key):

<property>
  <name>fs.azure.buffer.dir</name>
  <value>/tmp</value>
</property>
<property>
  <name>fs.azure.storageConnectionString</name>
  <value>DefaultEndpointsProtocol=http;AccountName=MyAccountName;AccountKey=MyAccountKey</value>
</property>
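As a sanity check on these entries, the following Python sketch reads the two properties and splits the connection string into its parts. It is only an illustration: the embedded XML, the `<configuration>` wrapper, the placeholder account values, and the helper names (`read_properties`, `parse_connection_string`, `check`) are all assumptions, not part of MDH.

```python
import xml.etree.ElementTree as ET

# Hypothetical core-site.xml content; account name and key are placeholders.
CORE_SITE = """<configuration>
  <property>
    <name>fs.azure.buffer.dir</name>
    <value>/tmp</value>
  </property>
  <property>
    <name>fs.azure.storageConnectionString</name>
    <value>DefaultEndpointsProtocol=http;AccountName=MyAccountName;AccountKey=MyAccountKey==</value>
  </property>
</configuration>"""


def read_properties(xml_text):
    """Map each <property> name to its value."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def parse_connection_string(conn):
    """Split 'Key=Value' pairs on the first '=' only, because base64
    account keys usually end in '=' padding."""
    pairs = (item.split("=", 1) for item in conn.split(";") if "=" in item)
    return {key: value for key, value in pairs}


def check(xml_text):
    """Return the buffer directory and parsed connection string, or raise."""
    props = read_properties(xml_text)
    conn = parse_connection_string(props["fs.azure.storageConnectionString"])
    missing = [k for k in ("AccountName", "AccountKey") if not conn.get(k)]
    if missing:
        raise ValueError(f"connection string is missing: {', '.join(missing)}")
    return props["fs.azure.buffer.dir"], conn


if __name__ == "__main__":
    print(check(CORE_SITE))
```

Splitting on the first `=` only matters in practice: a naive `split("=")` would truncate a real account key at its padding characters.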

And that's it. So now onto streaming jobs.

In a previous post I covered running Hadoop Streaming jobs. The usual way of running these jobs is to use HDFS files:

hadoop.cmd jar lib/hadoop-streaming.jar ^
    -input "/mobile/release" -output "/mobile/querytimesrelease" ^
    -mapper "..\..\jars\FSharp.Hadoop.Mapper.exe" ^
    -reducer "..\..\jars\FSharp.Hadoop.Reducer.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.Mapper.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.Reducer.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.MapReduce.dll" ^
    -file "C:\bin\Release\FSharp.Hadoop.Utilities.dll"

However, with the addition of the “asv” scheme, one can now use Windows Azure Blob Storage directly as the input for streaming jobs:

hadoop.cmd jar lib/hadoop-streaming.jar ^
    -input "asv://mobiledata/data" -output "/mobile/querytimesazure" ^
    -mapper "..\..\jars\FSharp.Hadoop.Mapper.exe" ^
    -reducer "..\..\jars\FSharp.Hadoop.Reducer.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.Mapper.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.Reducer.exe" ^
    -file "C:\bin\Release\FSharp.Hadoop.MapReduce.dll" ^
    -file "C:\bin\Release\FSharp.Hadoop.Utilities.dll"

As you can see, in this instance the MapReduce job reads its input directly from Windows Azure Blob Storage, while the output path, which has no scheme, still defaults to HDFS.
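The two job submissions differ only in their input and output paths. The Python sketch below makes that explicit by assembling both argument lists from the same hypothetical helper, `streaming_args`. The flags and paths are taken from the commands above; the helper itself is an assumption for illustration and does not launch Hadoop.

```python
# Paths copied from the commands in the post.
MAPPER = r"..\..\jars\FSharp.Hadoop.Mapper.exe"
REDUCER = r"..\..\jars\FSharp.Hadoop.Reducer.exe"
FILES = [
    r"C:\bin\Release\FSharp.Hadoop.Mapper.exe",
    r"C:\bin\Release\FSharp.Hadoop.Reducer.exe",
    r"C:\bin\Release\FSharp.Hadoop.MapReduce.dll",
    r"C:\bin\Release\FSharp.Hadoop.Utilities.dll",
]


def streaming_args(input_path, output_path, mapper=MAPPER, reducer=REDUCER,
                   files=FILES, jar="lib/hadoop-streaming.jar"):
    """Build the argument list for a streaming job (hypothetical helper)."""
    args = ["hadoop.cmd", "jar", jar,
            "-input", input_path, "-output", output_path,
            "-mapper", mapper, "-reducer", reducer]
    for path in files:
        # Each shipped file gets its own -file flag, as in the post.
        args += ["-file", path]
    return args


hdfs_job = streaming_args("/mobile/release", "/mobile/querytimesrelease")
azure_job = streaming_args("asv://mobiledata/data", "/mobile/querytimesazure")

if __name__ == "__main__":
    # Print only the arguments that differ between the two jobs.
    for a, b in zip(hdfs_job, azure_job):
        if a != b:
            print(f"{a!r} -> {b!r}")
```

A list like this could be handed to `subprocess.run` on a machine where `hadoop.cmd` is on the path; that step is left out here because it depends on the local installation.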

Conclusion

The beauty of the integration of MDH with Windows Azure Blob Storage is that you do not need to copy all your raw data from Azure to HDFS. If your data has been collected in Windows Azure, you can run your MapReduce jobs directly against the data at source.