Azure Machine Learning – Data Preparation

In my last post (HERE) we started a more pragmatic look at the Azure Machine Learning service using the CRISP Data Mining methodology as our outline. We began by looking at Data Understanding, which is Phase 2 and includes data acquisition, data exploration and insight. In this post we will move on to Phase 3, Data Preparation.

Data Prep

The data preparation step encapsulates all activities that are required to transform the raw data into data that is suitable for the experiment at hand. These activities can and usually do vary as they are dependent not only on the experiment but also on the data. Some common examples of these types of activities include:

- Removing duplicate records: Data sets can contain records which have been duplicated for one reason or another. These records typically must be identified and removed from the final data set.

- Missing or NULL Value handling: Missing or NULL values can be problematic depending upon the experiment at hand. When these values are identified, they can either be removed or strategically replaced with another value such as the mean or median value for the variable.

- Handling Categorical & Continuous Variables: Variables can be classified as continuous or quantitative (a person’s income or portfolio value) and categorical or discrete (a person’s gender or asset class). The experiment activity at hand will often dictate the most suitable variable classification.

- Outlier clipping: Outliers within your data set can skew predictions leading to an inaccurate or unreliable model. These data points can be identified in a number of different ways such as calculating the standard deviation for the field/variable and removing any records that fall outside the acceptable threshold.

- Data Normalization or Feature Scaling: Many machine learning algorithms function by calculating the distance between points. This can be problematic when independent variables have significantly different scales, such as a person’s age and their salary or net worth. This characteristic can result in the independent variable with the larger scale being arbitrarily weighted more heavily by the algorithm. To avoid this issue, the values are normalized or scaled mathematically to a similar range (for example, to values between 0 and 1).

For the remainder of this post we will look at tasks and examples for implementing each of these activities within the Azure Machine Learning service. Please note that this is intended as only a cursory introduction to the task and is not an exhaustive discussion.

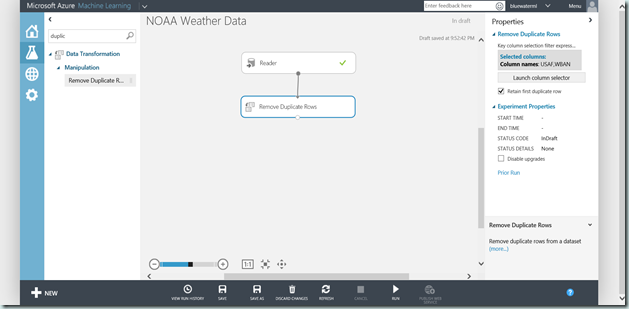

Handling Duplicate Records

Finding duplicate records in a data set is a common occurrence that, if left unhandled, can skew the results of your experiment. To handle these records, we identify and remove the duplicates using the Remove Duplicate Rows task in ML Studio. This task takes your data set as input, with uniqueness defined by either a single column or a combination of columns chosen in the Column Selector. It’s noteworthy that NULLs are treated differently than empty strings, so you will need to take that into consideration if your data set contains both. The output of the task is your data set with the duplicate records removed.
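To make the idea of uniqueness defined by a set of columns concrete, here is a minimal sketch in plain Python. It is not how ML Studio implements the task; the function name `remove_duplicate_rows`, the sample data and the choice to keep the first occurrence are all assumptions made for illustration. The sketch also treats two `None` values as equal to each other, which is an assumption about NULL handling rather than documented behavior.

```python
def remove_duplicate_rows(rows, key_columns):
    """Keep the first row seen for each combination of key column values."""
    seen = set()
    unique = []
    for row in rows:
        # The key is built only from the selected columns.
        key = tuple(row[col] for col in key_columns)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical sample data: None (NULL) and "" (empty string) are different keys.
customers = [
    {"id": 1, "email": "a@example.com", "region": "East"},
    {"id": 2, "email": "a@example.com", "region": "East"},
    {"id": 3, "email": None, "region": "West"},
    {"id": 4, "email": "", "region": "West"},
    {"id": 5, "email": None, "region": "West"},
]

deduped = remove_duplicate_rows(customers, ["email", "region"])
print([row["id"] for row in deduped])  # [1, 3, 4]
```

Rows 3 and 4 both survive because a NULL and an empty string produce different keys, which is exactly the situation to watch for when a data set contains both.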

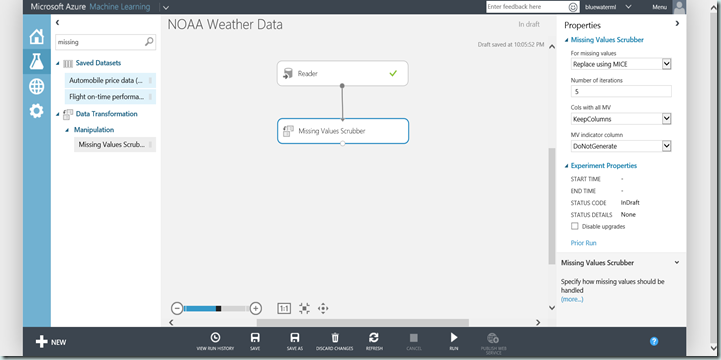

Missing and Null Values

Another common data quality issue that arises in our data sets is the presence of missing or null values. Like duplicates, these can cause problems downstream in our experiment and typically must be handled. How we handle them can vary: we can either remove the offending row or substitute a value for the one(s) missing. In either case, the Missing Value Scrubber task is the tool of choice for handling this scenario.

To configure the Missing Value Scrubber task we must first decide how we want to handle the missing values. This task supports both the removal and multiple replacement scenarios. The options available are:

- Replace using MICE or Multiple Imputation by Chained Equation

- Custom Value Substitution (a fixed value)

- Mean

- Median

- Mode

- Remove Row

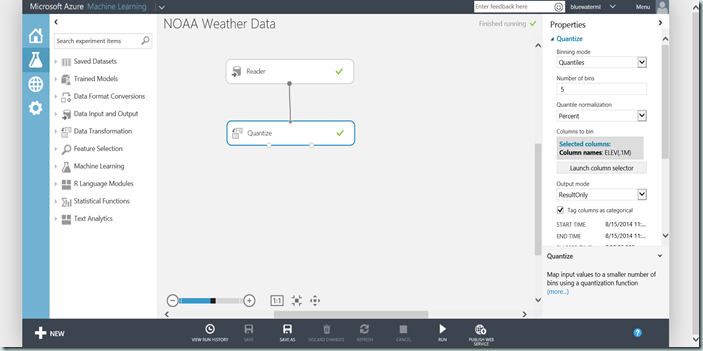

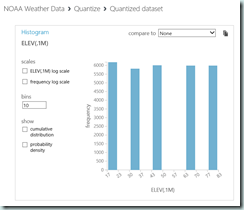
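The simpler options in the list above can be sketched for a single column in plain Python. This is only an illustration of the strategies, not the Missing Value Scrubber itself; the function `scrub_missing` and its parameter names are hypothetical, and MICE is left out because it needs a model per column. Note that "remove" here drops the missing entries of one column, whereas in a real table the whole row would be removed.

```python
import statistics


def scrub_missing(values, strategy="mean", custom_value=None):
    """Handle None entries in one column using a named strategy."""
    present = [v for v in values if v is not None]
    if strategy == "remove":
        # For a single column, removal simply drops the missing entries.
        return present
    if strategy == "custom":
        fill = custom_value
    elif strategy == "mean":
        fill = statistics.mean(present)
    elif strategy == "median":
        fill = statistics.median(present)
    elif strategy == "mode":
        fill = statistics.mode(present)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    # Replace each missing entry with the computed fill value.
    return [fill if v is None else v for v in values]


ages = [20, None, 30, 30, None, 60]  # hypothetical column with two gaps

print(scrub_missing(ages, "mean"))       # gaps filled with 35
print(scrub_missing(ages, "median"))     # gaps filled with 30
print(scrub_missing(ages, "custom", 0))  # gaps filled with a fixed value
print(scrub_missing(ages, "remove"))     # gaps dropped
```

The mean and median differ here (35 versus 30) because the value 60 pulls the mean upward, which is one reason the median is often preferred for skewed columns.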

Binning and Bucketing Data

A fair number of machine learning algorithms are designed to work exclusively with categorical variables or inputs. This is problematic if your data set contains continuous features. To work around this issue, the data scientist will bin or bucket the continuous values into groups using a technique known as quantization.

The Quantize task in ML Studio provides this capability out-of-the-box and supports a user-defined number of buckets and multiple quantile normalization functions (Percent, PQuantile, QuantileIndex). In addition, this task is capable of appending the bin assignment to the data set, replacing the data set value or even returning a data set of only the resulting bins.
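As a rough illustration of quantile binning, the sketch below assigns each value to one of a user-defined number of buckets so that every bucket holds roughly the same number of records. It is a simple rank-based approach written for this post, not the Quantize task's algorithm; the function name `quantile_bins` and the sample incomes are hypothetical, and tied values may land in different bins in this simplified version.

```python
def quantile_bins(values, num_bins):
    """Return a 1-based bin index per value, with roughly equal counts per bin."""
    # Indices of the values, ordered from the smallest value to the largest.
    order = sorted(range(len(values)), key=lambda i: values[i])
    bins = [0] * len(values)
    for rank, idx in enumerate(order):
        # Map the rank (0..n-1) onto bins 1..num_bins.
        bins[idx] = rank * num_bins // len(values) + 1
    return bins


incomes = [15, 82, 40, 120, 55, 33, 250, 61]  # hypothetical values, in thousands
bins = quantile_bins(incomes, 4)
print(bins)  # [1, 3, 2, 4, 2, 1, 4, 3]

# Appending the bin assignment next to the original value.
print(list(zip(incomes, bins)))
```

Because the bins are based on rank rather than value, the extreme income of 250 simply falls into the top bin alongside 120 instead of stretching the bin boundaries.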

Outliers

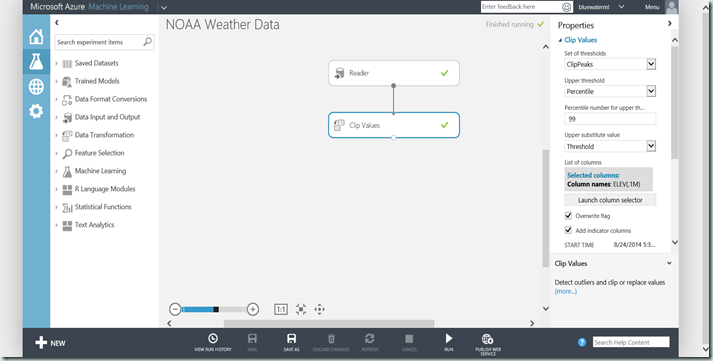

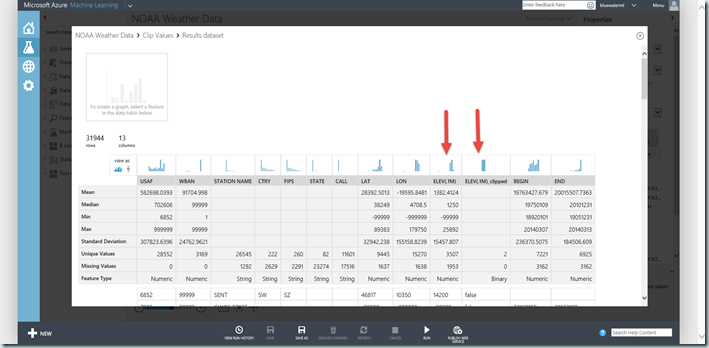

Outliers in data sets are those measurements that are significantly distant from other observations and can be a result of variability or even error. When not handled, outliers can skew the results of your experiments, leading to suboptimal results. To remove outliers or clip them, we will use the Clip Values task in ML Studio.

This task accepts a data set as input and is capable of clipping peaks, sub-peaks or both, using either a specified constant or a percentile as the threshold for the selected columns. The outliers, both peak and sub-peak, can be replaced with the threshold, mean, median or a missing value. Optionally, a column can be added to indicate whether a value was clipped or not.
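The clipping behavior can be sketched in a few lines of plain Python. This is an illustrative approximation rather than the Clip Values task itself: the function `clip_values`, its parameters and the sample readings are hypothetical, the thresholds are constants, and clipped values are replaced only with the threshold (not the mean, median or a missing value). A percentile-based threshold could be computed separately and passed in the same way.

```python
def clip_values(values, lower=None, upper=None):
    """Clip values to [lower, upper] and flag which entries were changed."""
    clipped = []
    was_clipped = []
    for v in values:
        new = v
        if upper is not None and v > upper:
            new = upper  # peak replaced with the upper threshold
        if lower is not None and v < lower:
            new = lower  # sub-peak replaced with the lower threshold
        clipped.append(new)
        was_clipped.append(new != v)
    return clipped, was_clipped


readings = [12, 15, 14, 400, 16, -250, 13]  # hypothetical sensor readings
clipped, flags = clip_values(readings, lower=0, upper=100)
print(clipped)  # [12, 15, 14, 100, 16, 0, 13]
print(flags)    # [False, False, False, True, False, True, False]
```

Passing only `upper` or only `lower` clips just the peaks or just the sub-peaks, and the second list plays the role of the optional indicator column.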

Data Normalization

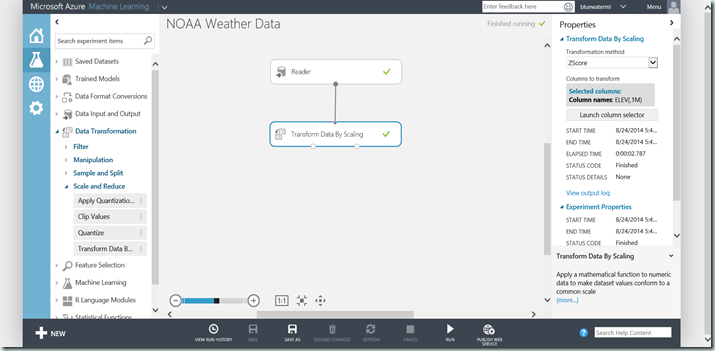

Normalizing variables within our data set is necessary when the scale among variables is significantly different to the extent it can potentially add error or distort our experiment. To prevent this and normalize variables to a common scale we use the Transform Data by Scaling task within ML Studio.

The scaling task offers five different mathematical techniques (z-score, min-max, logistic, log-normal and tanh) for normalization and can operate on one or more exclusively grouped columns.
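Two of these techniques, min-max and z-score, are simple enough to sketch in plain Python. The code below is only an illustration under stated assumptions, not the scaling task's implementation: the function names and sample values are hypothetical, and the z-score uses the population standard deviation, which may differ from what ML Studio uses.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread; map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score_scale(values):
    """Center values on the mean and divide by the population standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # assumption: population, not sample, deviation
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


ages = [20, 30, 40, 50, 60]                       # hypothetical ages
salaries = [30000, 45000, 60000, 90000, 120000]   # hypothetical salaries

print(min_max_scale(ages))  # [0.0, 0.25, 0.5, 0.75, 1.0]
print(min_max_scale(salaries))
print(z_score_scale(ages))
```

After min-max scaling, both age and salary fall between 0 and 1, so a distance-based algorithm no longer weights salary more heavily just because its raw numbers are larger.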

Wrap-Up

In this post, we reviewed the data cleaning and transformation activities that occur in a typical machine learning experiment and the tasks that are available out-of-the-box with the Azure Machine Learning service. In the next post of this series we will discuss feature selection.

Till next time!

Chris