Azure Machine Learning – A Deeper Look

In the previous post, we took a cursory look at the newly announced Azure Machine Learning Service. Using the well-worn Adventure Works sample, we implemented the Targeted Mailing example using the Two-Class Boosted Decision Tree algorithm. In this follow-up post we will attempt to refine and improve our results, first by evaluating other machine learning models and then by adjusting the configuration of the model. We will wrap up this introductory look by selecting the best outcome and exposing it as an endpoint so that our new model can be integrated into external applications.

Getting Set Up

To get started, let’s log in to ML Studio and create a copy of the experiment we created in the previous post (go HERE for the prior post). Once you are logged in, navigate to the experiments page and select the Simple Bike Buyer experiment. After the experiment launches, simply click the ‘Save As’ button in the toolbar and name the new experiment ‘Bike Buyer Evaluation’.

Evaluating Multiple Models

One of the nice (and easy-to-use) characteristics of ML Studio is the ability to iteratively include and evaluate multiple machine learning models within the same experiment. This is a handy feature, particularly if you aren’t a data scientist or didn’t happen to pick up a PhD in Machine Learning along with a strong grasp of all the different models and their characteristics. Let’s take a look at how it works.

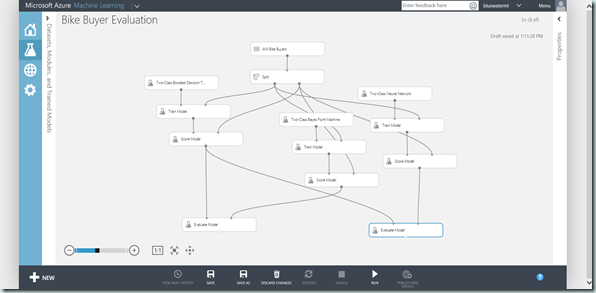

In the initial experiment we used a single model, the Two-Class Boosted Decision Tree. To determine if this is the best model for our data, let’s expand the scope and evaluate both the Two-Class Bayes Point Machine and the Two-Class Neural Network.

The steps for adding both models to the experiment are identical to those used in the previous experiment and can be summarized as follows:

- Add the appropriate Initialize Model item to your experiment.

- Add a Train Model item and wire it up to your model and the training data set. Note that in the Train Model item we have to identify the column we are attempting to predict using the Column Selector in the item properties menu. For this example use the BikeBuyer column.

- Add a Score Model item and wire it up to your trained model and testing data set.

- Use the Evaluate Model item to visualize the results once your experiment has been run.

The one subtle difference when using multiple models is how we wire up the Evaluate Model item, since it only accepts two Scored Dataset inputs. For this experiment we will compare our original model (Two-Class Boosted Decision Tree) to each of the new models. That means we will have two Evaluate Model items, and the Scored Dataset for the original model will be passed in as the left-most input for both. After you’ve added your new models, and with a bit of organization, you should arrive at an experiment that looks like the screenshot below.

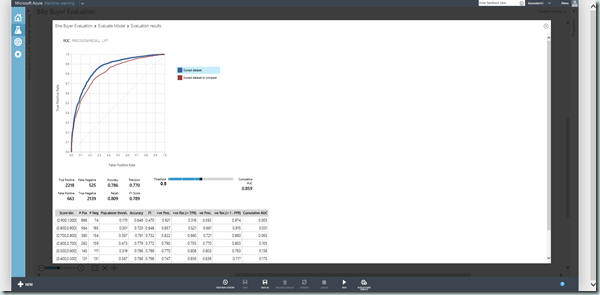

After you run your experiment, the models can be compared to one another by using the Visualize option on the Evaluate Model output. Notice that the charts plot both models for the purpose of comparison. The scored dataset is always the left input (the decision tree in this example) and the scored dataset to compare is the right input.
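To make the left-versus-right comparison concrete, here is a minimal Python sketch of what one Evaluate Model item is doing conceptually. This is not ML Studio's implementation; the function names, the 0.5 threshold and the labels and scores are all made up for illustration.

```python
from typing import Dict, List, Tuple


def evaluate(labels: List[int], scores: List[float], threshold: float = 0.5) -> Dict[str, float]:
    """Compute accuracy, precision and recall for one scored dataset."""
    predictions = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(labels),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def compare(labels: List[int], left_scores: List[float],
            right_scores: List[float]) -> Dict[str, Tuple[float, float]]:
    """Pair up metrics: left input is the baseline, right input is the challenger."""
    left = evaluate(labels, left_scores)
    right = evaluate(labels, right_scores)
    return {metric: (left[metric], right[metric]) for metric in left}


# Made-up values for illustration only; these are not results from the experiment.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
baseline_scores = [0.9, 0.2, 0.7, 0.6, 0.55, 0.1, 0.8, 0.3]
challenger_scores = [0.8, 0.6, 0.4, 0.7, 0.3, 0.2, 0.9, 0.55]

comparison = compare(labels, baseline_scores, challenger_scores)
for metric, (left, right) in comparison.items():
    print(f"{metric}: baseline={left:.3f} challenger={right:.3f}")
```

Calling `compare` once per new model, always with the baseline scores on the left, mirrors the two Evaluate Model items in the experiment.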

As the results indicate, both of the new models we included in our experiment fared worse than our original selection. The Two-Class Bayes Point Machine fared significantly worse, and for conciseness we will go ahead and eliminate it from our experiment. The Two-Class Neural Network, on the other hand, fared only slightly worse and deserves a closer look.

Tuning Models

Each model implementation in ML Studio includes a host of properties that can be tuned to alter its behavior in an attempt to improve the outcome. Unfortunately, tuning these properties precisely requires a fair to deep understanding of each model. Another option exists, however: the Parameter Sweep.

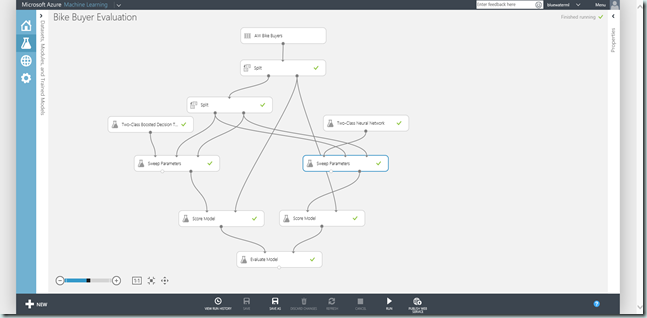

The Parameter Sweep item takes an untrained model and two data sets, one for training and one for validation. It then iteratively trains and evaluates the model with different configurations to determine the best configuration based on the metric you specify (e.g. Accuracy, Recall, etc.).
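If the idea of a sweep is new to you, the following Python sketch shows the general technique of an exhaustive grid search. It is only an illustration under stated assumptions: the `sweep_entire_grid` helper, the toy one-feature threshold "model", its two parameters and the validation rows are all hypothetical stand-ins, not what ML Studio runs internally.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Params = Dict[str, object]


def sweep_entire_grid(grid: Dict[str, List[object]],
                      score: Callable[[Params], float]) -> Tuple[Params, float]:
    """Try every combination in the grid and keep the one with the highest metric."""
    best_params: Params = {}
    best_metric = float("-inf")
    names = sorted(grid)
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        metric = score(params)
        if metric > best_metric:
            best_params, best_metric = params, metric
    return best_params, best_metric


# Hypothetical validation rows: (feature value, actual label).
validation = [(25, 0), (32, 0), (41, 1), (47, 1), (38, 0), (52, 1)]


def validation_accuracy(params: Params) -> float:
    """Toy stand-in for 'train, then evaluate on the validation set'."""
    correct = 0
    for value, label in validation:
        predicted = (value >= params["threshold"]) != params["invert"]
        correct += int(predicted == bool(label))
    return correct / len(validation)


grid = {"threshold": [30, 40, 50], "invert": [False, True]}
best_params, best_accuracy = sweep_entire_grid(grid, validation_accuracy)
print(best_params, best_accuracy)
```

The metric passed in plays the role of the Accuracy or Recall setting: swap in a different scoring function and the same loop selects a different winner.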

Let’s implement this item to determine if we can improve the results of our experiment. Start by deleting the existing Train Model items, then use the following steps for each model.

- Since the Parameter Sweep requires both a training and a validation set, we need to further split the training data. Add a Split (Data Transformation >> Sample and Split) item and wire the training output of the original Split into this Split’s input. In the properties page, change the Split fraction to 0.8, for an 80/20 split.

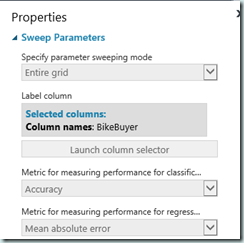

- Add a Sweep Parameters (Machine Learning >> Train) item and wire in the untrained model, the 80% split output as the training input and the 20% output as the validation input. For the Sweep Parameters, select ‘Entire Grid’ for the Sweeping Mode, ‘BikeBuyer’ in the Column Selector and leave the default Accuracy as the metric used to evaluate performance.

- Wire the second output (the best trained model) back into the Score Model item used previously.
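The two-stage split in the steps above can be sketched in a few lines of Python. This assumes a randomized split; the `split_rows` helper, the row count and the 0.7 fraction for the original Split are assumptions chosen for illustration, and only the 0.8 fraction comes from the steps above.

```python
import random
from typing import List, Sequence, Tuple


def split_rows(rows: Sequence[int], fraction: float, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle the rows and cut them into two parts at the given fraction."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * fraction))
    return shuffled[:cut], shuffled[cut:]


rows = list(range(1000))  # stand-in for the rows of the data set

# Original Split: training vs. testing (0.7 is an assumed fraction).
train_all, test = split_rows(rows, 0.7)

# New Split: carve a validation set out of the training data only (80/20).
train, validation = split_rows(train_all, 0.8)

print(len(train), len(validation), len(test))
```

The point of the second split is that the testing rows stay untouched by the sweep, so the Score Model step still measures the winning configuration on data it has never seen.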

The resulting experiment should look similar to the above screenshot. Next, run the experiment and evaluate the result. Did either model see any improvement over the baseline? Which model performs best?

It looks like, in the end, the Two-Class Boosted Decision Tree still outperformed the other model, making it our selection for the purpose of this demo.

Publishing a Model

Now that we have identified and selected a model we are satisfied with, one use case involves exposing the model so that it can be consumed and integrated into existing functions or applications. The Azure Machine Learning service makes this easy using the following steps:

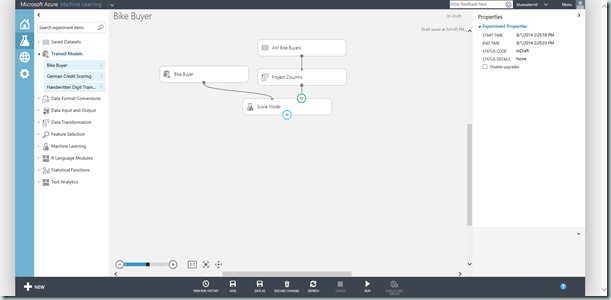

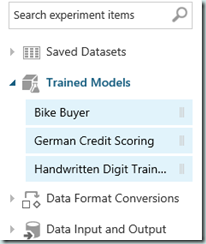

- On the Parameter Sweep item for the Two-Class Boosted Decision Tree, right-click the right-output (Best Trained Model) and choose ‘Save as Trained Model’. In the save dialog, enter ‘Bike Buyer’ as the name. After you click ‘Save’, you will find your trained model in the item toolbox under the Trained Models category.

- Create a new copy of the experiment by clicking ‘Save As’ and naming the new experiment ‘Bike Buyer’.

- Delete all items from the experiment except for the initial AW Bike Buyer data set.

- From the Trained Models, find the Bike Buyer model you just created and drag it to the design surface.

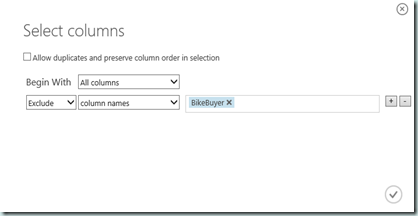

- Add a Project Columns (Data Transformation >> Manipulation) item from the toolbox and wire it to the data set. Next, open the Column Selector from the properties window and change the Begin With to All Columns then exclude the BikeBuyer column as seen below.

- Add a Score Model item from the toolbox and wire the Bike Buyer model and Project Columns output to it.

- On the Score Model item, right-click the data set input and click ‘Set as Publish Input’. Next, right-click the output and click ‘Set as Publish Output’.

The resulting experiment should look similar to the above screenshot. Note that the initial data set and the subsequent Project Columns item are used only for column inputs and metadata and are not actually scored. Before we can publish the web service, we must run the experiment.

When the experiment has finished running, click the ‘Publish Web Service’ button in the toolbar and then confirm your choice by clicking ‘Yes’ when prompted.

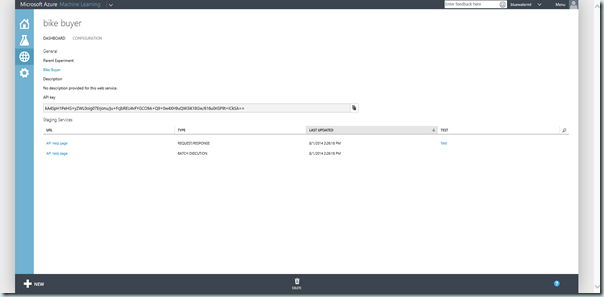

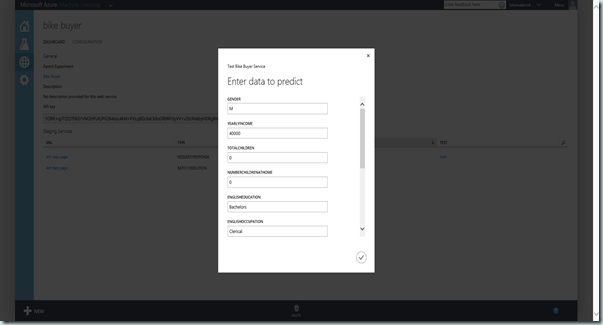

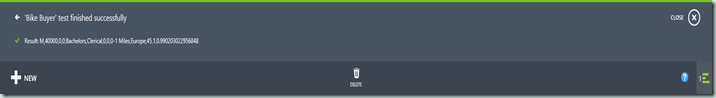

The web service is deployed in Staging mode by default and exposes two endpoints: request/response and batch. You are now ready to test the service, either by clicking the Test link and entering parameters manually (seen below with results surfaced in the status bar) or by using the thorough documentation and code samples (C#, Python & R) on the API Help Page to develop a custom solution.
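For a rough feel of what calling the request/response endpoint might involve, here is a hedged Python sketch that builds (but does not send) a JSON POST request. Everything specific in it is an assumption: the URL and API key are placeholders, the bearer-token header and the payload shape are guesses at a typical pattern, and the column names are illustrative. The API Help Page for your own service is the authority on the real URL, headers and request schema.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical placeholders: copy the real values from the API Help Page.
SCORING_URL = "https://example.invalid/score"
API_KEY = "YOUR_API_KEY"


def build_scoring_request(url: str, api_key: str,
                          features: Dict[str, object]) -> urllib.request.Request:
    """Build a JSON POST request for one row of input columns (assumed payload shape)."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,  # assumed auth scheme
        },
        method="POST",
    )


# Illustrative column names; BikeBuyer is left out because it is the value being predicted.
row = {"Age": 42, "YearlyIncome": 60000, "NumberCarsOwned": 1}
request = build_scoring_request(SCORING_URL, API_KEY, row)
print(request.get_method(), request.full_url)

# Sending is left commented out because the URL above is only a placeholder.
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```

Leaving BikeBuyer out of the row matches the Project Columns step, which removed that column from the published input.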

Wrap-Up

In this second post on Azure Machine Learning, we dug deeper into ML Studio and our AdventureWorks Bike Buyer experiment to explore how we can iteratively evaluate different models and different model configurations. Once we arrived at a satisfactory result, we published the model as a web service.

In the next post we will switch context and focus on accomplishing other common tasks such as data preparation while identifying & solving common problems encountered during experimentation.

Till next time!