High Performance Computing – On-Premise and In the Windows Azure Cloud

If you are an ISV with a product or service for industries such as financial services or pharmaceuticals, you may be able to make cost-effective use of the technology discussed in this post.

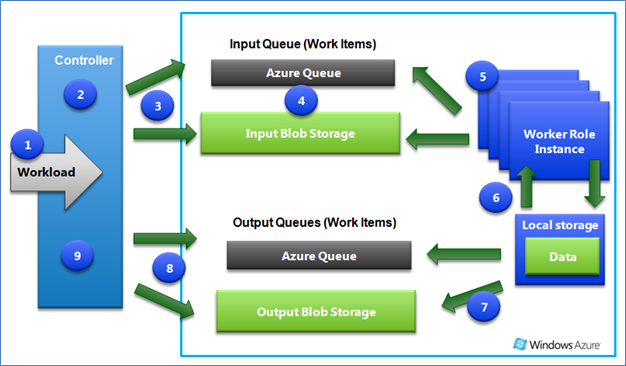

Back in June I posted about an Azure High Scale Compute project that I participated in for a major pharmaceutical company.

At the time of that project (and the posting) I was careful to distinguish between High Performance Computing (HPC) and High Scale Computing (HSC).

Both HPC and HSC are characterized by a divide-and-conquer approach that breaks a problem up into many parallel solution paths. The objective is to shorten the total compute time required to solve the problem.

Depending on its characteristics, it may be the kind of problem where you can simply distribute the workload to many parallel servers and then collect the results at the end. In other cases, however, the problem also requires extremely low latency between its compute components during the solution phase.

In the former case, which fit the project discussed above, Windows Azure was a perfect solution. That problem was solved easily, and at much lower cost, using a single on-premise controller node while running all the worker nodes in the cloud.

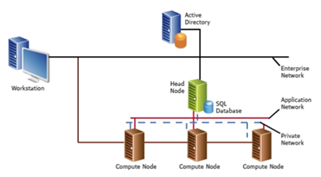
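To make the "distribute the work, then collect the results" pattern concrete, here is a minimal sketch in Python. It is not the code from the project mentioned above. The function names (`split`, `simulate_chunk`, `run`) and the sum-of-squares workload are hypothetical, and a local thread pool stands in for the cloud worker nodes, with the calling code playing the role of the controller node.

```python
from concurrent.futures import ThreadPoolExecutor


def split(total, parts):
    """Divide range(total) into at most `parts` contiguous (start, stop) chunks."""
    step = max(1, -(-total // parts))  # ceiling division, never zero
    return [(lo, min(lo + step, total)) for lo in range(0, total, step)]


def simulate_chunk(chunk):
    """Hypothetical unit of work: each chunk is independent of the others."""
    start, stop = chunk
    return sum(n * n for n in range(start, stop))


def run(total=1_000_000, workers=4):
    """Controller role: scatter the chunks, then gather and combine the results."""
    chunks = split(total, workers)
    # Assumption: local threads stand in for worker nodes. In a real
    # deployment each chunk would be sent to a separate machine.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(simulate_chunk, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(run())
```

Because the chunks never need to talk to each other while they run, the latency between workers hardly matters. That is what makes this kind of workload a good fit for cloud worker nodes.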

For HPC applications requiring extremely low latency, Microsoft has for some time offered HPC Server 2008, a product that provides an on-premise architecture for building low-latency HPC clusters.

Since most real-world problems require a mix of high- and low-latency communications between compute nodes, it is only natural that Microsoft would extend HPC Server 2008 to the cloud.

Starting with HPC Server 2008 R2, you can allocate both on-premise and Azure compute nodes to solve a problem. The worker node requirement can be fulfilled by compute nodes that are completely on-premise, completely in the cloud, or a mixture of the two. In this approach the controller, or head node, which normally runs on-premise, distributes the computational workload to a combination of on-premise and Azure-based compute nodes.

See this article for more information on how one company leveraged this capability and what it did for them.

Bill Zack