New Load Test and Web Test features in the Orcas release

Here is a summary of just a few of the enhancements to Web testing and load testing coming in the Orcas release of Visual Studio Team System (other team members will be blogging about other enhancements):

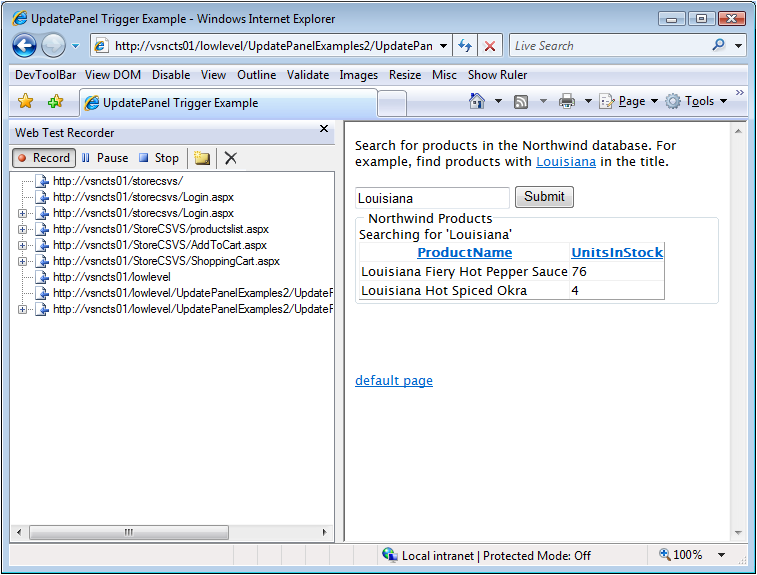

The Web Test recorder now records AJAX requests and other requests that the VSTS 2005 Web Test recorder missed. This should mean that you no longer need to use Fiddler to record Web test requests, though Fiddler is still very useful for diagnosing problems with recording or replaying Web tests. Note that dependent requests are still not recorded. Because they are parsed from the response and submitted automatically when the Web test runs, recording them would be redundant.
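To show why recording dependent requests would be redundant, here is a minimal Python sketch of how a test tool might discover them by parsing a page's HTML at run time. The tag/attribute set, `DependentRequestParser`, and `find_dependent_requests` are hypothetical names for illustration. This is not the parser VSTS uses.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Tag -> attribute pairs that typically reference dependent resources.
# This set is an illustrative assumption, not the list VSTS uses.
DEPENDENT_ATTRS = {
    "img": "src",
    "script": "src",
    "link": "href",  # simplification: treats every <link> as a dependent resource
}


class DependentRequestParser(HTMLParser):
    """Collects absolute URLs of resources a page would pull in automatically."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.urls = []

    def handle_starttag(self, tag, attrs):
        wanted = DEPENDENT_ATTRS.get(tag)
        if wanted is None:
            return  # e.g. <a href>: a navigation, not a dependent request
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative references against the page URL.
                self.urls.append(urljoin(self.base_url, value))


def find_dependent_requests(html, base_url):
    parser = DependentRequestParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.urls


page = (
    '<html><head><link rel="stylesheet" href="site.css">'
    '<script src="/js/app.js"></script></head>'
    '<body><img src="logo.png"><a href="next.aspx">Next</a></body></html>'
)
print(find_dependent_requests(page, "http://example.com/app/default.aspx"))
```

Because the dependent URLs can be recovered from each response like this, a recorded copy would simply duplicate requests the runtime already issues.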

Load Test enhancements

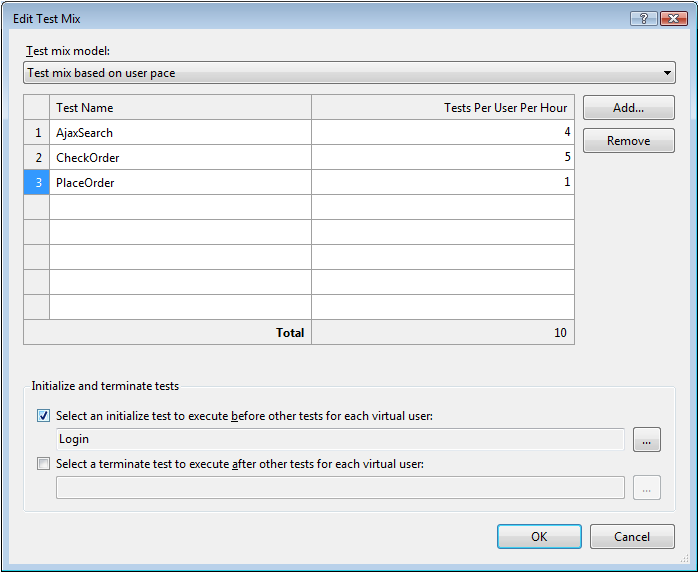

New load model based on user pacing

With this load model, rather than specifying a percentage for each of the tests in a load test scenario, you specify the rate at which each virtual user runs each test. For example:

TestA: 4 tests per user/hr

TestB: 2 tests per user/hr

TestC: 0.125 tests per user/hr

Virtual users may be idle between tests, depending on the pacing and the duration of the individual tests. With the example above, if each test runs for 1 minute, then each virtual user will be running tests for just 6.125 minutes of every hour (4 + 2 + 0.125 = 6.125). This more closely simulates the real world, where users of an application come and go while doing other things.
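The arithmetic behind the pacing example can be checked with a short Python sketch. It assumes, as the example does, that every test takes the same fixed time. `busy_minutes_per_hour` and `minutes_between_starts` are hypothetical helpers, and the start interval is only the average gap implied by the rate; how the load test engine actually spaces test starts is not described here.

```python
# Pacing from the example, in tests per virtual user per hour.
pacing = {"TestA": 4, "TestB": 2, "TestC": 0.125}


def busy_minutes_per_hour(pacing, test_minutes):
    """Minutes per hour one virtual user spends running tests.

    Assumes every test takes test_minutes to run.
    """
    return sum(rate * test_minutes for rate in pacing.values())


def minutes_between_starts(rate_per_hour):
    """Average gap between starts of one test for a single virtual user."""
    return 60 / rate_per_hour


busy = busy_minutes_per_hour(pacing, test_minutes=1)
print(f"busy {busy} min/hr, idle {60 - busy} min/hr")
for name, rate in pacing.items():
    print(name, "starts on average every", minutes_between_starts(rate), "minutes")
```

With 1-minute tests this gives 6.125 busy minutes per hour, and TestC, at 0.125 tests per user/hr, runs on average only once every 480 minutes (8 hours) per virtual user.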

Here is a screen shot of the dialog to specify the pacing:

Load test duration based on test iteration count rather than time

The Orcas release supports running a load test for a fixed number of test iterations rather than a fixed length of time. This is a load test run setting rather than a scenario setting, so the load test completes once the total number of tests run across all of its scenarios reaches the specified number of test iterations.
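The key point is that a single shared counter spans all scenarios. Here is a minimal Python sketch of that stopping rule. It assumes a simple round-robin order, whereas a real load test runs scenarios concurrently. `run_until_iterations` and the scenario and test names are hypothetical.

```python
import itertools


def run_until_iterations(scenarios, max_iterations):
    """Run tests until the total across ALL scenarios reaches max_iterations.

    scenarios maps a scenario name to a list of test names. Round-robin
    ordering is a simplification for illustration only.
    """
    completed = {name: 0 for name in scenarios}
    pairs = [(s, t) for s, tests in scenarios.items() for t in tests]
    if not pairs:
        return completed  # nothing to run
    schedule = itertools.cycle(pairs)
    total = 0
    while total < max_iterations:  # one shared limit, not one per scenario
        scenario, test = next(schedule)
        # A real runner would execute `test` here.
        completed[scenario] += 1
        total += 1
    return completed


print(run_until_iterations(
    {"Browse": ["ViewHome", "Search"], "Checkout": ["PlaceOrder"]}, 10))
```

Here the run stops after 10 tests in total, split 7/3 between the two scenarios, rather than after 10 tests in each scenario.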

Performance improvements for load tests containing unit tests

The overhead of running unit tests in a load test has been dramatically reduced. When running empty unit tests, the rate of Tests / Sec that a single load test agent machine can drive has increased by a factor of about 10. The improvement is significantly smaller for unit tests that actually do something: for a Web service unit test, we measured an increase of approximately 25% in the throughput driven from one load test agent.
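Why the gain shrinks for real tests can be seen with a simple model, sketched below in Python. It assumes that each test costs a fixed framework overhead plus the test's own work, and that only the overhead was reduced. `throughput_speedup` and the millisecond figures are hypothetical and are not measurements of VSTS.

```python
def throughput_speedup(overhead_ms, work_ms, overhead_reduction):
    """Tests/sec speedup when only the per-test framework overhead shrinks.

    Simple model (an assumption): one test costs overhead_ms + work_ms of
    agent time; the improvement divides overhead_ms by overhead_reduction
    and leaves work_ms unchanged.
    """
    before = overhead_ms + work_ms
    after = overhead_ms / overhead_reduction + work_ms
    return before / after


# Empty unit test: all of the cost is overhead, so the full 10x shows up.
print(throughput_speedup(overhead_ms=1.0, work_ms=0.0, overhead_reduction=10))
# Hypothetical test doing 9 ms of real work: the speedup falls to about 1.1x.
print(throughput_speedup(overhead_ms=1.0, work_ms=9.0, overhead_reduction=10))
```

The more time a test spends in its own work, the closer the speedup gets to 1, which is consistent with the smaller gain reported for the Web service unit test.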