How to allow Spark to access Microsoft SQL Server

Today we will look at configuring Spark to access Microsoft SQL Server through JDBC. On HDInsight the Microsoft SQL Server JDBC jar is already installed. On Linux the path is /usr/hdp/2.2.7.1-10/hive/lib/sqljdbc4.jar. If you need more information or to download the driver you can start here Microsoft

SQL Server JDBC

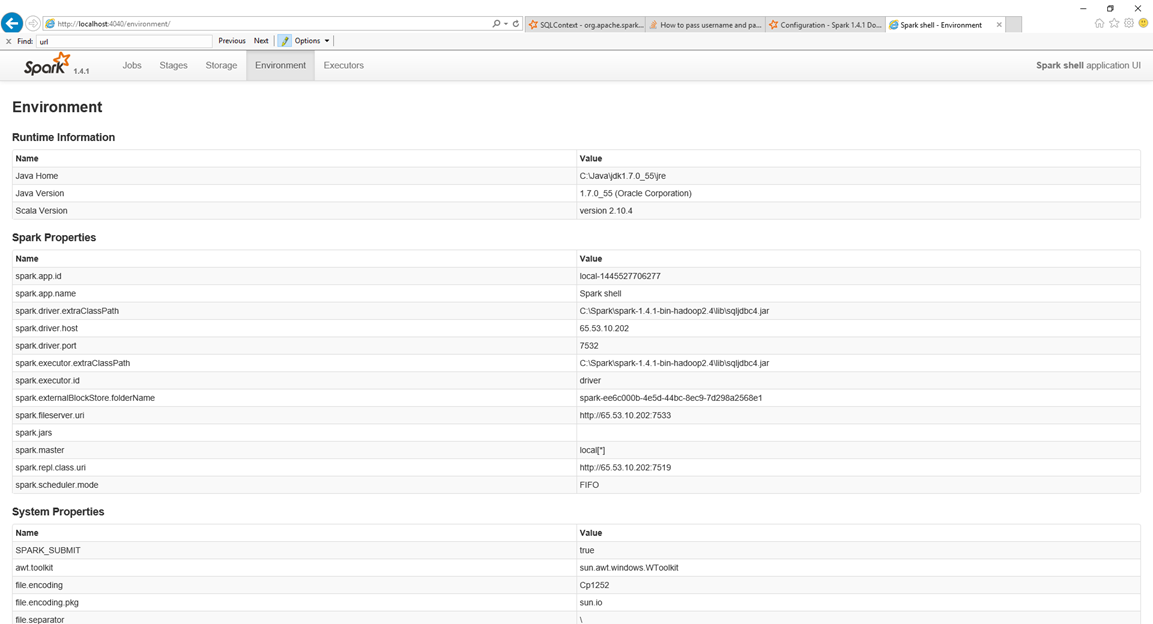

Spark needs to know the path to the sqljdbc4.jar. There are multiple ways to add the path to Spark's classpath. Spark has two runtime environment properties that can do this spark.driver.extraClassPath and spark.executor.extraClassPath. To review all the properties available, see Spark's Configuration - Spark 1.4.1 Documentation.

If you use spark-shell or spark-submit you can pass these properties with –conf. I like to add the properties to Spark's default configuration file at /etc/spark/conf/spark-defaults.conf. A third option is to include the sqljdbc.jar in your assembly jar. This same technique works for other jars that your Spark application might need. Whichever technique you choose, Spark needs to know where to find the sqljdbc4.jar for both the driver application and the executors.

You can check the environment tab in the Spark Properties section to verify the properties are set.

Spark's API is very dynamic and changes are being made with each new release, especially around JDBC. If you are going to use Spark with JDBC I would suggest reviewing Spark's API documentation for the version of Spark you are using Spark 1.4.1 API to make sure the methods are still valid and the same behavior exists. Depending on the release there are a few places to look for methods involving JDBC, which include SQLContext, DataFrame, and JdbcRDD. Also notice that some methods are marked experimental and or deprecated. Make sure you test your code.

Some issue to consider are:

- Make sure firewall ports are open for port 1433.

- If using Microsoft Azure SQL Server DB, tables require a primary key. Some of the methods create the table, but Spark's code is not creating the primary key so the table creation fails.

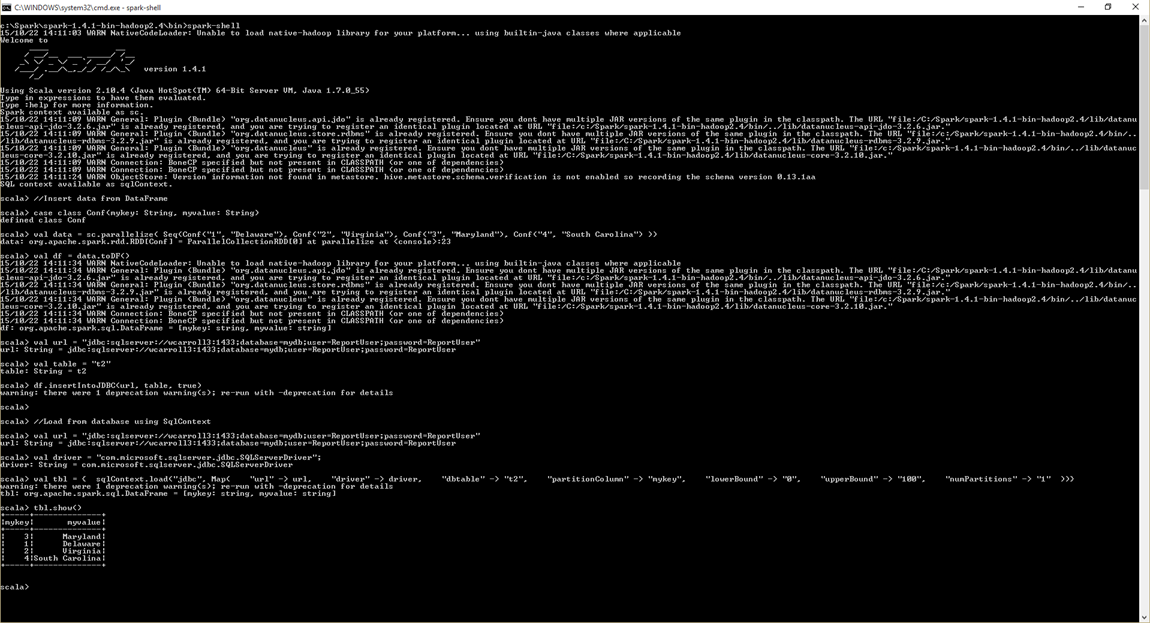

Here are some code snippets. A DataFrame is used to create the table t2 and insert data. The SqlContext is used to load the data from the t2 table into a DataFrame. I added the spark.driver.extraClassPath and spark.executor.extraClassPath to my spark-default.conf file.

//Spark 1.4.1

//Insert data from DataFrame

case class Conf(mykey: String, myvalue: String)

val data = sc.parallelize( Seq(Conf("1", "Delaware"), Conf("2", "Virginia"), Conf("3", "Maryland"), Conf("4", "South Carolina") ))

val df = data.toDF()

val url = "jdbc:sqlserver://wcarroll3:1433;database=mydb;user=ReportUser;password=ReportUser"

val table = "t2"

df.insertIntoJDBC(url, table, true)

//Load from database using SqlContext

val url = "jdbc:sqlserver://wcarroll3:1433;database=mydb;user=ReportUser;password=ReportUser"

val driver = "com.microsoft.sqlserver.jdbc.SQLServerDriver";

val tbl = { sqlContext.load("jdbc", Map( "url" -> url, "driver" -> driver, "dbtable" -> "t2", "partitionColumn" -> "mykey", "lowerBound" -> "0", "upperBound" -> "100", "numPartitions" -> "1" ))}

tbl.show()

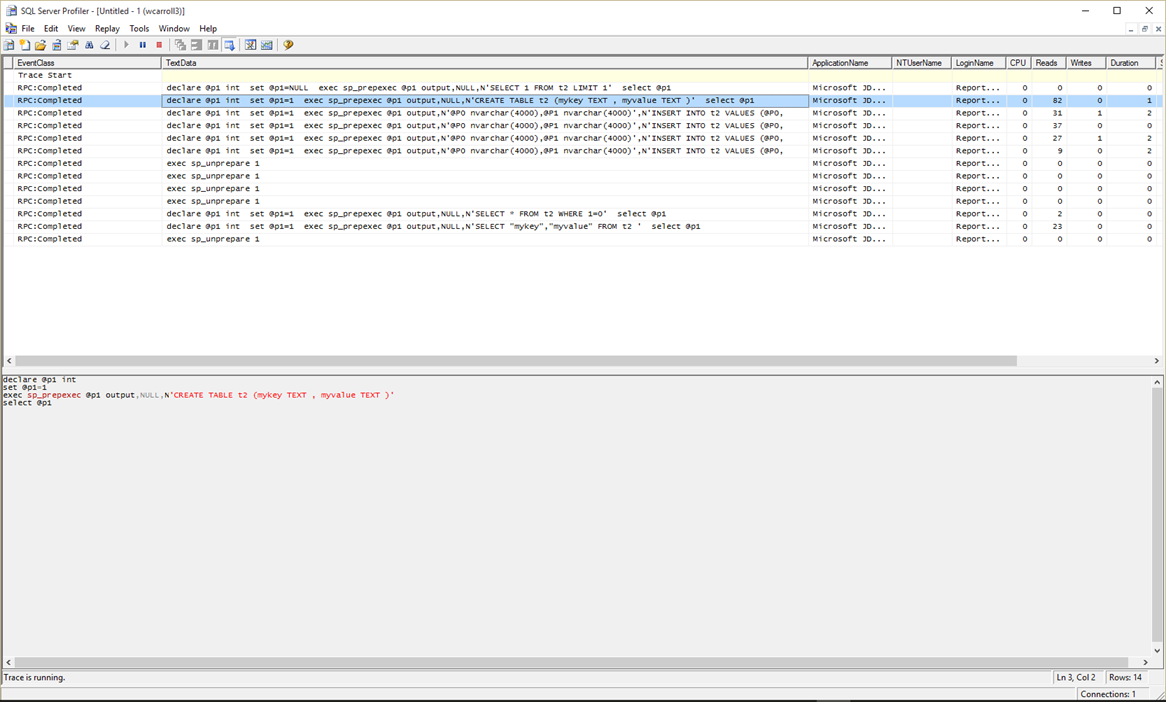

If you run a Microsoft SQL Server profiler trace while running the spark-shell you can see the table being created, data inserted and then data being read.

HDInsight and Spark is a great platform to process and analyze your data, but often data resided in a relational database system like Microsoft SQL Server. Allowing Spark to read and write data from Microsoft SQL Server allows you to create a richer pipeline.

Hope this helps,

Bill