Querying HDInsight Job Status with WebHCat via Native PowerShell or Node.js

One of the great things about HDInsight is that under the covers, it has the same capabilities as other Hadoop installations. This means that you can use regular Hadoop endpoints like Ambari and WebHCat (formerly known as Templeton) to interact with an HDInsight Cluster.

In this blog post, I’ll provide a couple of samples that show how to retrieve job information from your HDInsight cluster. The first uses native PowerShell (version 3.0 or higher) and the second uses Node.js (tested with version 0.10.26). I have tested the code against HDInsight 3.0 and 2.1 clusters.

While the samples below use native capabilities in each language, there are also fully featured command libraries available for PowerShell and for Node.js. Check out Azure PowerShell and the Azure Cross-platform CLI to learn more.

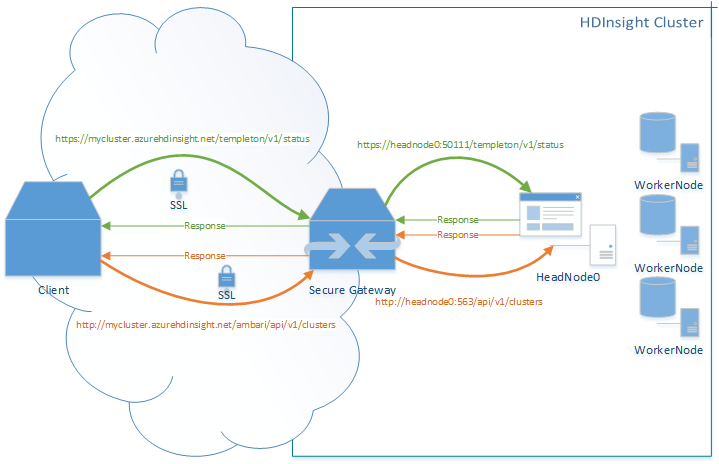

First, I’d like to illustrate how this call actually makes it to your HDInsight cluster. Every cluster is isolated from the Internet behind a secure gateway, which forwards requests to the appropriate endpoints in the cluster’s private address space. This is why HDInsight can use REST URIs like https://mycluster.azurehdinsight.net/ambari/api/v1/clusters and https://mycluster.azurehdinsight.net/templeton/v1/status without specifying different port numbers. On many standalone Hadoop clusters, you would have to direct these requests to different ports, such as 563 for Ambari or 50111 for WebHCat.
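To make the single-port addressing concrete, here is a minimal sketch that builds gateway URLs for both services. The helper name `gatewayUrl` and the user name `admin` are made up for illustration; only the host pattern and the two paths come from the text above.

```javascript
// Hypothetical helper (not part of any SDK): builds a URL that goes through
// the HDInsight gateway. Every service shares the same host over HTTPS on the
// default port 443, so no port number appears in the result.
function gatewayUrl(clusterName, path, query) {
  var url = 'https://' + clusterName + '.azurehdinsight.net' + path;
  // Encode each query parameter so unusual user names cannot break the URL.
  var pairs = Object.keys(query || {}).map(function (key) {
    return encodeURIComponent(key) + '=' + encodeURIComponent(query[key]);
  });
  return pairs.length ? url + '?' + pairs.join('&') : url;
}

// Ambari and WebHCat differ only in the path, not in the host or port.
console.log(gatewayUrl('mycluster', '/ambari/api/v1/clusters'));
console.log(gatewayUrl('mycluster', '/templeton/v1/status'));
console.log(gatewayUrl('mycluster', '/templeton/v1/jobs', { 'user.name': 'admin', showall: true }));
```

Encoding the query values is a precaution the samples in this post skip, since they assume a simple user name.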

The samples below are functionally equivalent. They follow the same basic pattern:

- Acquire credentials for the cluster’s Hadoop Services.

- Make a REST call to the WebHCat endpoint /templeton/v1/jobs

- Parse the response

- For each Job Id in the response, get detailed job status information from /templeton/v1/jobs/:jobid

- Parse the response and display the .status element on the console.
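The steps above can be sketched independently of any particular HTTP client. In the sketch below, `getJson(path, callback)` is a hypothetical transport function supplied by the caller (it would hold the credentials and make the real HTTPS request), and `fakeGetJson` returns canned, heavily trimmed responses so the flow can be exercised without a cluster. Neither name comes from WebHCat itself.

```javascript
// getJson(path, callback) is an assumed, caller-supplied function that must
// invoke callback(err, parsedBody). Credentials are its responsibility.
function listJobStatuses(userName, getJson, done) {
  var user = encodeURIComponent(userName);
  // Step 2: list all known jobs.
  getJson('/templeton/v1/jobs?user.name=' + user + '&showall=true', function (err, jobs) {
    if (err) { return done(err); }
    var statuses = {};
    var remaining = jobs.length;
    var failed = false;
    if (remaining === 0) { return done(null, statuses); }
    // Step 4: fetch the details for each job id.
    jobs.forEach(function (job) {
      getJson('/templeton/v1/jobs/' + job.id + '?user.name=' + user, function (err, detail) {
        if (failed) { return; }
        if (err) { failed = true; return done(err); }
        // Step 5: keep only the status element.
        statuses[job.id] = detail.status;
        remaining -= 1;
        if (remaining === 0) { done(null, statuses); }
      });
    });
  });
}

// Stand-in transport with canned responses (illustrative data only).
function fakeGetJson(path, callback) {
  if (path.indexOf('/templeton/v1/jobs?') === 0) {
    return callback(null, [{ id: 'job_1', detail: null }, { id: 'job_2', detail: null }]);
  }
  var id = path.split('/')[4].split('?')[0];
  callback(null, { id: id, status: { state: 'SUCCEEDED' } });
}

var result;
listJobStatuses('admin', fakeGetJson, function (err, statuses) {
  if (err) { throw err; }
  result = statuses;
  console.log(statuses);
});
```

Passing the transport in as a parameter keeps the request pattern separate from authentication and networking, which is one way to make it testable.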

The first example shows how this is accomplished in PowerShell:

PowerShell: listjobswebhcat.ps1

# Get credential object to use for authenticating to the cluster

if(!$ClusterCredential) { $ClusterCredential = Get-Credential }

$ClusterName = 'clustername' # just the first part, we'll add .azurehdinsight.net below when we build the Uri

# Make the REST call, defaults to GET and parses JSON response to PSObject

$Jobs = Invoke-RestMethod -Uri "https://$ClusterName.azurehdinsight.net/templeton/v1/jobs?user.name=$($ClusterCredential.UserName)&showall=true" -Credential $ClusterCredential

Write-Host "The following job information was retrieved:`n"

$Jobs | ft

# Iterate through the jobs

foreach($JobId in $Jobs.id)

{

#Get details specific to this JobId

$Job = Invoke-RestMethod -Uri "https://$ClusterName.azurehdinsight.net/templeton/v1/jobs/$JobId`?user.name=$($ClusterCredential.UserName)" -Credential $ClusterCredential

Write-Host "The following details were retrieved for JobId $JobId`:`n"

$Job | ft

# PowerShell treats property names case-insensitively, so it can't convert a response that contains both jobId and jobID elements; Invoke-RestMethod returns the raw JSON string instead.

# Rename the jobID element (the one that contains a hash), then convert the string to a PSObject manually.

$Job = ConvertFrom-Json ($Job -creplace 'jobID','jobIDObj')

Write-Host "`nThe following is the parsed Status for JobId $JobId`:"

$Job.status | fl

Write-Host "-------------------------------------------------------"

}

Here's an example of the output from running this PowerShell script:

The following job information was retrieved:

id detail

-- ------

job_1397520080955_0001

job_1397520080955_0002

-------------------------------------------------------

The following details were retrieved for JobId job_1397520080955_0002:

{"status":{"mapProgress":1.0,"reduceProgress":1.0,"cleanupProgress":0.0,"setupProgress":0.0,"runState":2,"startTime":1397852941726,"queue":"default","priority":"NORMAL","schedulingInfo":"NA","failureInfo":"NA","jobACLs":{},"jobName":"mapreduce.BaileyBorweinPlouffe_1_100","jobFile":"wasb://clustername@blobaccount.blob.core.windows.net/mapred/history/done/2014/04/18/000000/job_1397520080955_0002_conf.xml","finishTime":1397853167444,"historyFile":"","trackingUrl":"headnodehost:19888/jobhistory/job/job_1397520080955_0002","numUsedSlots":0,"numReservedSlots":0,"usedMem":0,"reservedMem":0,"neededMem":0,"jobPriority":"NORMAL","jobID":{"id":2,"jtIdentifier":"1397520080955"},"jobId":"job_1397520080955_0002","username":"userthatranjob","state":"SUCCEEDED","retired":false,"uber":false,"jobComplete":true},"profile":{"user":"userthatranjob","jobFile":"wasb://clustername@blobaccount.blob.core.windows.net/mapred/history/done/2014/04/18/000000/job_1397520080955_0002_conf.xml","url":null,"queueName":"default","jobName":"mapreduce.BaileyBorweinPlouffe_1_100","jobID":{"id":2,"jtIdentifier":"1397520080955"},"jobId":"job_1397520080955_0002"},"id":"job_1397520080955_0002","parentId":null,"percentComplete":null,"exitValue":null,"user":"userthatranjob","callback":null,"completed":null,"userargs":{}}

The following is the parsed Status for JobId job_1397520080955_0002:

mapProgress : 1.0

reduceProgress : 1.0

cleanupProgress : 0.0

setupProgress : 0.0

runState : 2

startTime : 1397852941726

queue : default

priority : NORMAL

schedulingInfo : NA

failureInfo : NA

jobACLs :

jobName : mapreduce.BaileyBorweinPlouffe_1_100

jobFile : wasb://clustername@blobaccount.blob.core.windows.net/mapred/history/done/2014/04/18/000000/job_1397520080955_0002_conf.xml

finishTime : 1397853167444

historyFile :

trackingUrl : headnodehost:19888/jobhistory/job/job_1397520080955_0002

numUsedSlots : 0

numReservedSlots : 0

usedMem : 0

reservedMem : 0

neededMem : 0

jobPriority : NORMAL

jobIDObj : @{id=2; jtIdentifier=1397520080955}

jobId : job_1397520080955_0002

username : userthatranjob

state : SUCCEEDED

retired : False

uber : False

jobComplete : True

-------------------------------------------------------

(continues...)
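For comparison, JavaScript property names are case-sensitive, so a response containing both `jobID` and `jobId` parses without any renaming. The sketch below demonstrates this on a trimmed-down stand-in for the response (not a full WebHCat payload). It also shows a rough equivalent of the `-creplace` step, in case the parsed object is handed to a consumer that ignores case. The function name `renameJobIdObject` is made up, and the pattern is deliberately narrower than the PowerShell one because it matches only the quoted key.

```javascript
// Trimmed-down stand-in for a job detail response (illustrative only).
var raw = '{"status":{"jobID":{"id":2,"jtIdentifier":"1397520080955"},' +
          '"jobId":"job_1397520080955_0002"}}';

// Both keys survive parsing because JavaScript distinguishes jobID from jobId.
var parsed = JSON.parse(raw);
console.log(parsed.status.jobID.id);
console.log(parsed.status.jobId);

// Hypothetical helper: rename the object-valued key before parsing, matching
// only the quoted key followed by a colon so string values stay untouched.
function renameJobIdObject(json) {
  return json.replace(/"jobID":/g, '"jobIDObj":');
}

var renamed = JSON.parse(renameJobIdObject(raw));
console.log(Object.keys(renamed.status));
```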

The next example shows similar steps in Node.js:

Node.js: listjobswebhcat.js

var https = require('https');

// Cluster Authentication Setup

var clustername = "clustername";

var username = "clusterusername";

var password = "clusterpassword"; // Reminder: Don't share this file with your password saved!

// Set up the options to get all known Jobs from WebHCat

var optionJobs = {

host: clustername + ".azurehdinsight.net",

path: "/templeton/v1/jobs?user.name=" + username + "&showall=true",

auth: username + ":" + password, // this is basic auth over ssl

port: 443

};

// Make the call to the WebHCat Endpoint

https.get(optionJobs, function (res) {

console.log("\nHTTP Response Code: " + res.statusCode);

var responseString = ""; // Initialize the response string

res.on('data', function (data) {

responseString += data; // Accumulate any chunked data

});

res.on('end', function () {

// Parse the response. We know it's going to be JSON, so we're not checking Content-Type

var Jobs = JSON.parse(responseString);

console.log("The following job information was retrieved:");

console.log(Jobs);

Jobs.forEach(function (Job) {

// Set up the options to get information about a specific Job Id

var optionJob = {

host: clustername + ".azurehdinsight.net",

path: "/templeton/v1/jobs/" + Job.id + "?user.name=" + username + "&showall=true",

auth: username + ":" + password, // this is basic auth over ssl

port: 443

};

https.get(optionJob, function (res) {

console.log("\nHTTP Response Code: " + res.statusCode);

var jobResponseString = ""; // Initialize the response string

res.on('data', function (data) {

jobResponseString += data; // Accumulate any chunked data

});

res.on('end', function () {

var thisJob = JSON.parse(jobResponseString); // Parse the JSON response

console.log("The following is the Status for JobId " + Job.id);

console.log(thisJob.status); // Just Log the status element.

});

});

});

});

});

Here's an example of the output from running this script through Node.js:

HTTP Response Code: 200

The following job information was retrieved:

[ { id: 'job_1397520080955_0001', detail: null },

{ id: 'job_1397520080955_0002', detail: null } ]

HTTP Response Code: 200

The following is the Status for JobId job_1397520080955_0002

{ mapProgress: 1,

reduceProgress: 1,

cleanupProgress: 0,

setupProgress: 0,

runState: 2,

startTime: 1397852941726,

queue: 'default',

priority: 'NORMAL',

schedulingInfo: 'NA',

failureInfo: 'NA',

jobACLs: {},

jobName: 'mapreduce.BaileyBorweinPlouffe_1_100',

jobFile: 'wasb://clustername@blobaccount.blob.core.windows.net/mapred/history/done/2014/04/18/000000/job_1397520080955_0002_conf.xml',

finishTime: 1397853167444,

historyFile: '',

trackingUrl: 'headnodehost:19888/jobhistory/job/job_1397520080955_0002',

numUsedSlots: 0,

numReservedSlots: 0,

usedMem: 0,

reservedMem: 0,

neededMem: 0,

jobPriority: 'NORMAL',

jobID: { id: 2, jtIdentifier: '1397520080955' },

jobId: 'job_1397520080955_0002',

username: 'userthatranjob',

state: 'SUCCEEDED',

retired: false,

uber: false,

jobComplete: true }

(continues...)
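Once the status element is parsed, it is easy to derive more readable values from it. The sketch below assumes that `startTime` and `finishTime` are Unix epoch timestamps in milliseconds, which is consistent with the sample values and the 2014/04/18 date in the `jobFile` path but is not stated by the output itself. The function name `summarizeStatus` is made up for illustration.

```javascript
// Hypothetical helper: condenses a status element into a few readable fields.
// Assumes startTime and finishTime are epoch milliseconds and that the job
// has finished; a job that is still running would need separate handling.
function summarizeStatus(status) {
  var elapsedMs = status.finishTime - status.startTime;
  return {
    jobId: status.jobId,
    state: status.state,
    started: new Date(status.startTime).toISOString(),
    finished: new Date(status.finishTime).toISOString(),
    elapsedSeconds: elapsedMs / 1000
  };
}

// Values copied from the sample output above.
var sample = {
  jobId: 'job_1397520080955_0002',
  state: 'SUCCEEDED',
  startTime: 1397852941726,
  finishTime: 1397853167444
};

var summary = summarizeStatus(sample);
console.log(summary);
```

Under that assumption, the sample job ran for a little under four minutes.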

Hopefully this provides a good starting point and shows how easy it is to integrate external or on-premises PowerShell or Node.js solutions with HDInsight. Listing job information is just a simple example; with the WebHCat API, you can also query the Hive/HCatalog metastore, create and drop Hive tables, and even launch MapReduce jobs, Hive queries and Pig jobs. The ability to execute work and monitor your HDInsight cluster externally lets you integrate with other systems and solutions, using whatever language or platform works best for you.
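When building on the Node.js sample for a real integration, some error handling is probably worth adding, since the sample assumes every call succeeds and returns JSON. Here is a minimal sketch of a reusable request helper. The names `buildOptions` and `getJson` are hypothetical, and the options mirror the ones used in the sample (basic auth over SSL on port 443).

```javascript
var https = require('https');

// Hypothetical helper: builds the same request options the sample uses.
function buildOptions(clusterName, userName, password, path) {
  return {
    host: clusterName + '.azurehdinsight.net',
    port: 443,
    path: path,
    auth: userName + ':' + password // basic auth over SSL, as in the sample
  };
}

// Hypothetical helper: GETs a URL and passes either an error or the parsed
// JSON body to callback(err, body).
function getJson(options, callback) {
  https.get(options, function (res) {
    var body = '';
    res.setEncoding('utf8');
    res.on('data', function (chunk) { body += chunk; }); // accumulate chunks
    res.on('end', function () {
      // Treat anything outside the 2xx range as a failure.
      if (res.statusCode < 200 || res.statusCode >= 300) {
        return callback(new Error('HTTP ' + res.statusCode + ': ' + body));
      }
      var parsed;
      try {
        parsed = JSON.parse(body);
      } catch (parseError) {
        return callback(parseError); // body was not valid JSON
      }
      callback(null, parsed);
    });
  }).on('error', callback); // network-level failures (DNS, TLS, resets)
}
```

A call might look like `getJson(buildOptions('clustername', 'clusterusername', password, '/templeton/v1/status'), function (err, body) { ... })`, with the password read from somewhere other than the source file.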