How to deploy a Python module to Windows Azure HDInsight

Introduction

In a previous post, I explained how to run Hive + Python in HDInsight (Hadoop as a service in Windows Azure).


The sample showed a Python script using standard modules such as hashlib. In real life, you often need third-party modules, which must be installed on the machine before they can be used. Recently, I had to use the shapely, shapefile and rtree modules.

Here is how I did it, and why.

A quick recap on how HDInsight works

HDInsight is a cluster created on top of Windows Azure worker roles. This means that each VM in the cluster can be reimaged, i.e., replaced by another VM with the same original bits on it. In terms of configuration, the “original bits” contain what was declared when the cluster was created (Windows Azure storage accounts, …). So anything installed after the cluster was created only lasts until the node is reimaged.

NB: In practice, a node is typically reimaged when the underlying VM host is rebooted to get security patches installed. This does not happen every day, but good practices in cloud development should take such constraints into account.

Install on the fly

So the idea is to install the module on the fly, while executing the script.

Python is flexible enough to let you catch exceptions while importing modules.
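A minimal sketch of that mechanism as a reusable function: the hypothetical `try_import` helper below (not part of the original script) returns the imported module, or `None` when Python raises `ImportError`. It relies only on `importlib.import_module`, which exists in Python 2.7 as well as in Python 3.

```python
import importlib


def try_import(module_name):
    """Return the imported module, or None if it cannot be imported.

    try_import is a hypothetical helper; it only wraps the standard
    try/except ImportError pattern.
    """
    try:
        return importlib.import_module(module_name)
    except ImportError:
        return None
```

For example, `try_import("shapely") is None` would tell the script that an on-the-fly installation is needed.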

So the top of the Python script looks like this:

```python
import sys
import os
import shutil
import uuid
from zipfile import ZipFile

py_id = str(uuid.uuid4())

# sys.stderr will end up in the Hadoop execution logs
sys.stderr.write(py_id + '\n')
sys.stderr.write('My script title.\n')
sys.stderr.flush()

# Try to import the shapely module. If it has already been installed, this succeeds;
# otherwise, we'll install it on the fly.
try:
    from shapely.geometry import Point
    has_shapely = True
except ImportError:
    has_shapely = False

if not has_shapely:
    # The shapely module is not installed on this machine.
    # Install all the required modules, which are shipped next to the .py script as zip files.
    sys.stderr.write(py_id + '\n')
    sys.stderr.write('will unzip the shapely module\n')
    sys.stderr.flush()

    # Unzip the shapely module files (a Python package is a folder) into the Python folder
    with ZipFile('shapely.zip', 'r') as moduleZip:
        moduleZip.extractall(r'd:\python27')

    # Unzip the rtree module files into the Python Lib\site-packages folder
    with ZipFile('rtree.zip', 'r') as moduleZip:
        moduleZip.extractall(r'd:\python27\Lib\site-packages')

    # Add a required dependency (geos_c.dll) and install the shapefile module
    # (just one .py file, no zip required)
    try:
        sys.stderr.write(py_id + '\n')
        sys.stderr.write('trying to copy geos_c.dll to python27\n')
        sys.stderr.flush()
        shutil.copyfile(r'.\geos_c.dll', r'd:\python27\geos_c.dll')
        shutil.copyfile(r'.\shapefile.py', r'd:\python27\shapefile.py')
    except Exception:
        sys.stderr.write(py_id + '\n')
        sys.stderr.write('could not copy geos_c.dll to python27. Ignoring\n')
        sys.stderr.flush()

    # Now that the module is installed, re-import it
    from shapely.geometry import Point

# Import the remaining modules, including shapefile and rtree, which were installed above
from shapely.geometry import Polygon
import string
import time
import shapefile
from rtree import index

# (…)
```
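The same pattern can be factored into a helper when several modules are shipped as zip files. This is a sketch, not the code used in the post: `ensure_module` is a hypothetical name, it assumes each zip is laid out so that extracting it into `target_dir` makes the package importable, and it does not handle extra files such as geos_c.dll.

```python
import importlib
import os
import sys
import zipfile


def ensure_module(import_name, zip_path, target_dir):
    """Import import_name; if that fails, unzip zip_path into target_dir and retry.

    Hypothetical helper: zip_path must be laid out so that extracting it into
    target_dir makes the package importable (e.g. 'rtree/...' extracted into
    site-packages).
    """
    try:
        return importlib.import_module(import_name)
    except ImportError:
        sys.stderr.write("%s not found, unzipping %s\n" % (import_name, zip_path))
        sys.stderr.flush()

    target_dir = os.path.abspath(target_dir)
    with zipfile.ZipFile(zip_path, "r") as module_zip:
        module_zip.extractall(target_dir)

    # site-packages is already on sys.path; other folders must be added.
    if target_dir not in sys.path:
        sys.path.append(target_dir)
    # Python 3 caches directory listings used by imports; refresh them so the
    # newly extracted files are found (this function does not exist on 2.7).
    if hasattr(importlib, "invalidate_caches"):
        importlib.invalidate_caches()
    return importlib.import_module(import_name)
```

For instance, `ensure_module("rtree", "rtree.zip", r"d:\python27\Lib\site-packages")` would mirror the rtree step of the script above.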

How to package the modules

So the next questions are:

- where do the .zip files come from?

- how to send them with the .py script so that it can find them when unzipping?

Since the approach is to prepare each module so that it can be unzipped rather than installed, the idea is to install the module manually on a similar machine, then zip the installed files.

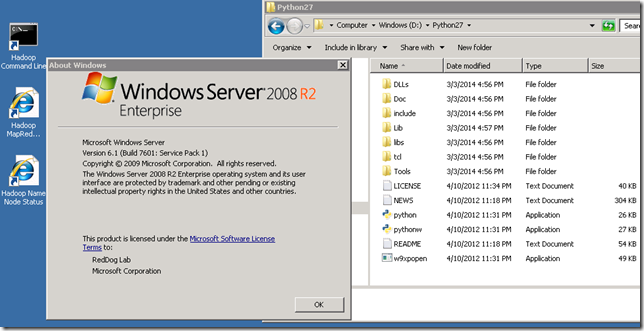

An HDInsight machine is installed has shown below:

Well, this was the head node, the worker node don’t look much different:

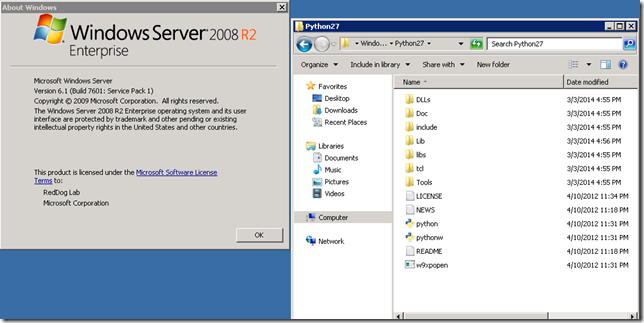

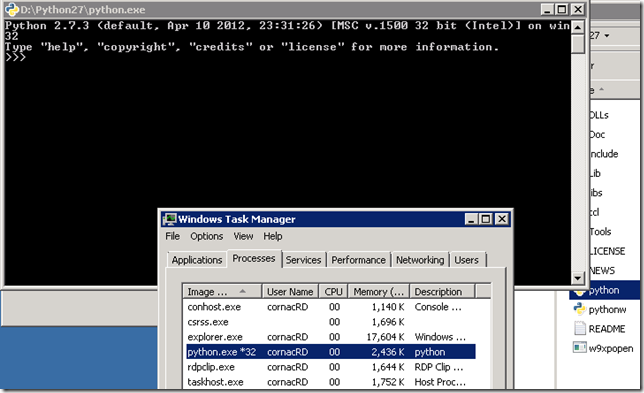

and this is Python 32 bits (on a 64-bit Windows Server 2008 R2 OS):

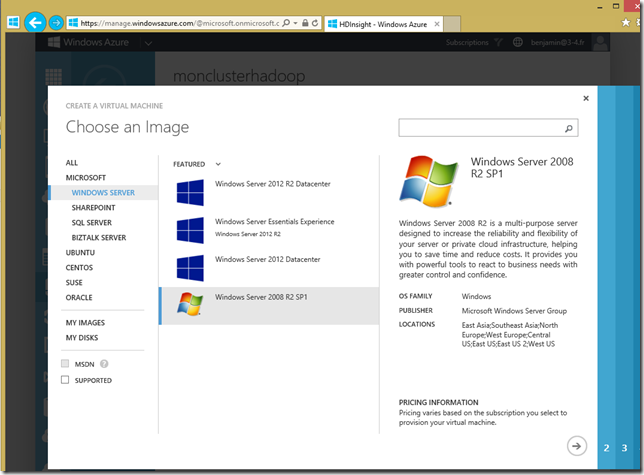

If you don’t have that kind of environment, you can just create a virtual machine on Windows Azure. The OS is available in the gallery:

Once you have created that VM, installed 32-bit Python 2.7 on it, and installed the required modules manually, you can zip them.
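Zipping can be done with any tool, as long as the paths inside the zip match the folder the script extracts into: the script above extracts rtree.zip into d:\python27\Lib\site-packages and shapely.zip into d:\python27. A minimal Python sketch, using a hypothetical `zip_installed_package` function, stores each file relative to a chosen `base_dir`:

```python
import os
import zipfile


def zip_installed_package(package_dir, base_dir, zip_path):
    """Zip every file under package_dir, storing paths relative to base_dir.

    Extracting the resulting zip into the folder that corresponds to base_dir
    on another machine recreates the same layout.
    """
    package_dir = os.path.abspath(package_dir)
    base_dir = os.path.abspath(base_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(package_dir):
            for name in files:
                full_path = os.path.join(root, name)
                # Entry name relative to base_dir, e.g. "rtree/..." for site-packages
                archive.write(full_path, os.path.relpath(full_path, base_dir))
    return zip_path
```

On the preparation VM, a call such as `zip_installed_package(r"C:\Python27\Lib\site-packages\rtree", r"C:\Python27\Lib\site-packages", "rtree.zip")` would produce entries starting with `rtree/`; those install paths are assumptions about where the module ended up.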

Then, you just have to send them along with the .py Python script. Here is what I have in my example (in PowerShell):

```powershell
$hiveJobVT = New-AzureHDInsightHiveJobDefinition -JobName "my_hive_and_python_job" `
    -File "$mycontainer/with_python/my_hive_job.hql"
$hiveJobVT.Files.Add("$wasbvtraffic/with_python/geos_c.dll")
$hiveJobVT.Files.Add("$wasbvtraffic/with_python/shapely.zip")
$hiveJobVT.Files.Add("$wasbvtraffic/with_python/my_python_script.py")
$hiveJobVT.Files.Add("$wasbvtraffic/with_python/rtree.zip")
$hiveJobVT.Files.Add("$wasbvtraffic/with_python/shapefile.py")
```
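Because the script opens shapely.zip, rtree.zip, geos_c.dll and shapefile.py through relative paths, it relies on the shipped files being present in the task's current working directory. A small, hypothetical check such as `missing_resources` can run before unzipping, so that a file that was not shipped shows up clearly in the Hadoop logs (through sys.stderr) rather than as a failure further down:

```python
import os
import sys

# Files the script expects next to it (names taken from the job definition above).
REQUIRED_FILES = ("shapely.zip", "rtree.zip", "geos_c.dll", "shapefile.py")


def missing_resources(required=REQUIRED_FILES, directory="."):
    """Return the required files that are not present in directory, logging each one."""
    missing = [name for name in required
               if not os.path.isfile(os.path.join(directory, name))]
    for name in missing:
        sys.stderr.write("missing resource: %s (was it added to the job?)\n" % name)
    sys.stderr.flush()
    return missing
```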

In the Hive job, the files must also be added:

```sql
-- (...)
add file my_python_script.py;
add file shapely.zip;
add file geos_c.dll;
add file rtree.zip;
add file shapefile.py;
-- (...)
INSERT OVERWRITE TABLE my_result
partition (dt)
SELECT transform(x, y, z, dt)
USING 'D:\Python27\python.exe my_python_script.py' as
(r1 string, r2 string, r3 string, dt string)
FROM mytable
WHERE dt >= ${hiveconf:dt_min} AND dt <= ${hiveconf:dt_max};
-- (...)
```
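The post does not show the body of the script. As a sketch of the general shape of a Hive TRANSFORM script: Hive writes each selected row (here x, y, z, dt) to the script's standard input as one tab-separated line, and reads tab-separated lines back from standard output, mapping them to r1, r2, r3, dt. `process_row` and `run` are hypothetical names, and `process_row` is only a placeholder for the real point-in-polygon logic.

```python
import sys


def process_row(x, y, z, dt):
    """Hypothetical placeholder: the real script would test (x, y)
    against polygons here, using shapely and rtree."""
    return [x, y, z, dt]


def run(stdin=sys.stdin, stdout=sys.stdout):
    """Read tab-separated rows from Hive and write tab-separated results back."""
    for line in stdin:
        fields = line.rstrip("\r\n").split("\t")
        if len(fields) != 4:
            sys.stderr.write("skipping malformed row: %r\n" % line)
            continue
        x, y, z, dt = fields
        r1, r2, r3, out_dt = process_row(x, y, z, dt)
        # dt stays the last column because it feeds the dynamic partition (dt).
        stdout.write("\t".join([r1, r2, r3, out_dt]) + "\n")
```

On the node, the script would call `run()` after the imports shown earlier.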


Benjamin (@benjguin)