Controlling Azure Costs with proper tagging and the billing APIs

Hi,

At the time of writing this blog post, it is hard to be entirely satisfied with the existing Azure cost control solutions such as Cloudyn or the Microsoft Azure Consumption Insights Power BI app, should you envision a very granular way of analyzing costs.

Indeed, both Cloudyn and the Power BI app help to analyze costs per subscription and even per resource group to some extent but none of these solution focuses on tags, although tags are the only way you can really tie all things together, as for instance tagging whatever Azure Resource with a project code that'd be the identifier of the associated project you're running. Having a granular way of calculating costs allows you to come back to your stakeholders with what they are consuming and potentially charge them back.

Limitations of existing solutions

Currently, in Cloudyn, not all tags are brought back in the UI as it seems that only tags associated to VMs are surfaced, which is far from representing all kind of costs incurred by your activities, although VMs are indeed costly resources. With the Power BI app, tags are there but on their original form, meaning an arbitrary array of tags for each tagged resource. I say arbitrary as some tags are added by Azure itself. Therefore, it is very hard if not impossible to exploit this in reports, even when using Advanced Filtering. Moreover, as you don't have control over its datasource (because it's a third-party app), it gets hard to achieve reporting objectives with regards to tags.

Fortunately, the Billing APIs come to the rescue and it is possible to consume them to extract the required information, perform some calculation, store the results in a database and build some Power BI reports. You might think that you're re-inventing the wheel, which is true to some extent but after all, it's not that much work and you'll have full control over the data you want to explore. Also, I'm not telling you to use this technique as a substitute for Cloudyn or the Power BI App, you can simply combine them all together, but at least, you'll have an overview of your costs ventilated over all your tags for any type of resource.

Scenario

So, in this article, just to make a POC, I will assume that I want to split costs by project and environment (DEV, ACC, PRD) as distinguishing environments may help in identifying useless costs (as for instance running multiple instance of an app service in DEV). So, to cover these basic requirements, I will need two tags, namely Project Code and Environment.

For sake of simplicity, I will only use a single subscription and I'll tag my resources manually using the Azure Portal but of course, feel free to come up with your own tagging strategy. For information only, beware that you can tag resources in 3 ways: using ARM templates, using PowerShell and using Policies. I'm not going to cover this in the article as it'd take too much time but feel free to PM me if needed.

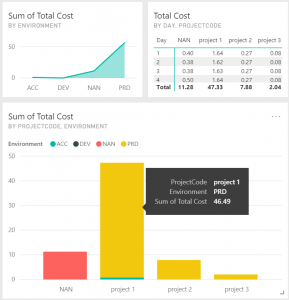

So, for things to be clear for everyone, here is a visual representation of what I want to achieve.

where I can see costs scattered by environment and project. The above picture isn't realistic since I arbitrary tagged resources on a MSDN subscription.

and here are the high level steps required to build such reports:

- Creating a database

- Tagging resources (mandatory of course but I'll skip the explanation)

- Getting service costs via the RateCard API in PowerShell

- Getting usage via the usage API in PowerShell

- Calculating your own costs based on the data retrieved from both API and store results in a database

- Building PowerBI reports (I'll also skip that one)

- Once your PowerShell testing is done, you can optionally create a runbook in Azure Automation and schedule your script to run daily.

Steps 3, 4 and 5 are all done by the PowerShell script

Before we start

Before we dive into the steps, let's first explain how these APIs (still in preview) work.

- The RateCard API gives you generic prices for a given service, according to your region and the type of subscription you are running. Basically, other than the cost itself, the key information returned by this API is the MeterId which is the identifier of a service (ie: storage, app service, etc.), and that very same identifier is returned by the usage API. So, RateCard returns the cost per unit while usage returns the amount of units you have consumed. As you understood, multiplying these things gives you your own cost.

- The Usage API returns the amount of units you are consuming hourly or daily for a given type of service. The MeterId is returned along with the quantity of consumed units.

One remark here, costs for services do not vary everyday, so you might consider loading them in memory and not storing them into a database as it will eat up some space, but in that case, you won't be able to make reports over the price history of the MS offering. For sake of brevity, I won't store them in a database.

Creating a database

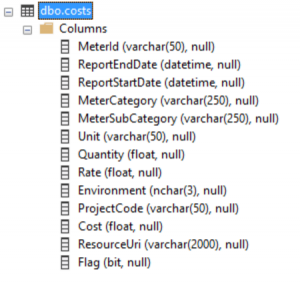

I'm not going to explain how to setup a database but I'll rather show you the data I want to store for my simple scenario:

So, I have a single table with all the fields I'm interested it. As you can see, I have one column per tag, namely Environment and ProjectCode. I'm storing the calculated cost as ultimately, I'll get one row per day per component with its cost based on the price of that day retrieved from the RateCard API. If you're working with multiple subscriptions, you should also store its identifier and probably start working with multiple tables. Whatever you do, the advantage of having your own database is that you can easily create views afterwards instead of fiddling with DAX in Power BI.

Writing the PowerShell script

There are several options here as the Usage API comes with a set of cmdlets (Get-UsageAggregates, etc... ) but the RateCard API doesn't. So, I guess, the easiest way to proceed for the time being, is to get an AccessToken and perform the web requests manually for both, the Usage & RateCard APIs. So, here is the script (didn't make too much exception handling nor is it flexible but I want to keep in short for the article).

</pre>

Try

{

#initializing (could take this as params)

$SubscriptionId = "yourid"

$SqlConnection = New-Object System.Data.SqlClient.SqlConnection

$SqlConnection.ConnectionString = "Server = theserver; Database = thedb; Integrated Security = false; User ID = thedbuser; Password = thedbpassword;"

$UserPassword="thepassword"

$UserName="user"

$OfferId="MS-AZR-yourofferid"

$tenant="yourtenant.onmicrosoft.com"

#buidling the access token request using the password credential grant

#feel free to use another flow

$body="resource=https%3A%2F%2Fmanagement.azure.com%2F"+

"&client_id=aadapp"+

"&grant_type=password"+

"&username=$($UserName)"+

"&password=$($UserPassword)&scope=openid"

#getting the access token

$resp=Invoke-WebRequest -UseBasicParsing -Uri "https://login.windows.net/$($tenant)/oauth2/token" `

-Method POST `

-Body $body| ConvertFrom-Json

#preparing RateCard API request

$RateCardRequest="https://management.azure.com/subscriptions/$($subscriptionId)/providers/Microsoft.Commerce/RateCard"+

"?api-version=2016-08-31-preview"+

"&`$filter=OfferDurableId%20eq%20'$($OfferId)'%20and%20Currency%20eq%20'EUR'%20and%20Locale%20eq%20'en-US'%20and%20RegionInfo%20eq%20'EU'"

#adding the access token to the request

$headers = @{

Authorization = "Bearer $($resp.access_token)"

}

#Calling RateCard & loading the costs in memory, could store that in DB for cost history

$costs = New-Object 'system.collections.generic.dictionary[[string],[double]]'

$RateResponse=Invoke-WebRequest -UseBasicParsing -Uri $RateCardRequest -Headers $headers| ConvertFrom-Json

$RateResponse.Meters |% {

$MeterRate=0

if($_.MeterRates -ne $null)

{ #always taking the first rate here. A few resources

#have different rates according to the consumption

#I'm taking the easy path by taking the first rate since it only

#applies to some specific things. However, feel free to adapt this.

$Definition=($_.MeterRates | Get-Member @(0)).Definition

$MeterRate=[double]$Definition.Substring($Definition.IndexOf("=")+1).Replace(",",".")

}

$costs.Add($_.MeterId,$MeterRate)

}

$SqlConnection.Open()

#I'm hardcoding dates but of course, if you make it a daily job, you could compute them

$ReportedStartTime = [DateTime]::Today.AddDays(-2).ToString("yyyy-MM-dd")

$ReportedEndTime = [DateTime]::Today.AddDays(-1).ToString("yyyy-MM-dd")

#preparing the usage request

$UsageRequest="https://management.azure.com/subscriptions/$($subscriptionId)/providers/Microsoft.Commerce/UsageAggregates"+

"?api-version=2015-06-01-preview"+

"&reportedstartTime=$($ReportedStartTime)T00%3a00%3a00Z"+

"&reportedEndTime=$($ReportedEndTime)T00%3a00%3a00Z"

$UsageData = Invoke-WebRequest -UseBasicParsing -Uri $UsageRequest -Headers $headers| ConvertFrom-Json

Do {

$UsageData.value |%{

$project="NAN"

$env="NAN"

#getting tags if any

if($_.Properties.InstanceData -ne $null)

{

$json = $_.Properties.InstanceData |ConvertFrom-Json

$ResourceUri = $json.'Microsoft.Resources'.resourceUri

if($json.'Microsoft.Resources'.tags -ne $null)

{

$project=$($json.'Microsoft.Resources'.tags.'Project Code')

$env=$($json.'Microsoft.Resources'.tags.'Environment')

}

}

#getting related price. If not found, flagging record to invalid.

if(($rate=$costs[$_.Properties.MeterId]) -ne $null)

{

$ResourceCost = $rate * $_.Properties.Quantity

$valid=$true;

}

else

{

$valid=$false;

$rate=0

$ResourceCost

}

#preparing the SQL statement

$SqlCmd = New-Object System.Data.SqlClient.SqlCommand

$SqlCmd.CommandText = `

"insert into costs("+

"MeterId,ReportEndDate,ReportStartDate,"+

"MeterCategory,MeterSubCategory,Unit,"+

"Quantity,Rate,Environment,ProjectCode,Cost,ResourceUri,Flag)"+

" values('$($_.Properties.MeterId)','$($reportedEndTime)',"+

"'$($reportedStartTime)','$($_.Properties.MeterCategory)',"+

"'$($_.Properties.MeterSubCategory)','$($_.Properties.Unit)',"+

"$($_.Properties.Quantity),$($rate),'$($env)','$($project)',"+

"$($ResourceCost),'$($ResourceUri)','$($valid)')"

write-host $SqlCmd.CommandText

$SqlCmd.Connection = $SqlConnection

$SqlCmd.ExecuteNonQuery()

}

#nextLink allows for paging which occurs in case of large amount of data

if ($UsageData.nextLink -ne $null) {

$UsageData = Invoke-WebRequest -UseBasicParsing -Uri $UsageData.nextLink -Headers $headers| ConvertFrom-Json

}

} until ($UsageData.nextLink -eq $null)

}

Finally

{

if($SqlConnection.State -eq "Open")

{

$SqlConnection.Close()

}

}

<pre>

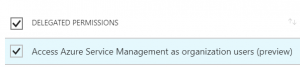

Although reading comments in the script should already help understanding it, I have a few extra indications. To authenticate against the billing API, I registered an Azure AD App and gave it the following permission:

I then used it to get a token using the password credentials grant and I use this token to perform requests against both the RateCard and Usage APIs. The RateCard API returns prices according to a given subscription type. This is the OfferDurableId param you pass along to the API. You can get this information from the subscription properties using the Azure Portal. At last, I'm taking today -2 and today -1 midnight as starting & ending dates to get the usage of the previous day. Ideally, you should double-check the database to delete existing rows should the script be re-run for the same day (in case of crash in the middle) and/or use database transactions, but I let this to you.

As you can see, it's a rather small script although it could be a bit more with proper error handling and if you decide to record the price history in the database as well, but even then, it'd remain rather straightforward.

Happy Azure