Configuring Service Fabric security hardened cluster (ALB and ILB)

Special thanks to Chacko Daniel for helping out with the SFRP connectivity issue.

Introduction

Today, the default Service Fabric cluster configuration exposes ports 19000 (client connection endpoint) and 19080 (HTTP gateway, used by Service Fabric Explorer) publicly. Those ports are usually protected by certificate-based security or AAD, but it's definitely a good idea to hide them from the Internet.

There are multiple ways to achieve this goal:

- Using Network Security Groups to limit traffic to selected public networks

- Exposing internal services to a private VNET through an Internal Load Balancer (ILB), while still exposing public services through the Azure Load Balancer (ALB)

- More complex solutions

In this article, I will focus on the second approach.

Network Security Groups

When starting with NSGs, I definitely recommend Chacko Daniel's quick start template: https://github.com/Azure/azure-quickstart-templates/tree/master/service-fabric-secure-nsg-cluster-65-node-3-nodetype. It's quite complex, but it contains all the rules required for a Service Fabric cluster to work, and it is well documented.

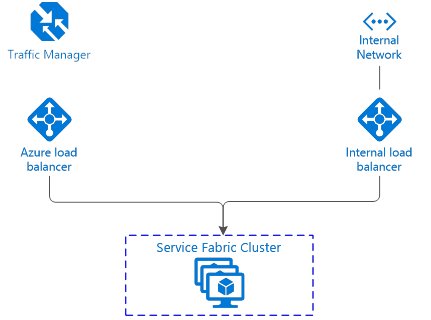

Dual load balancer config

This configuration requires setting up two load balancers in the ARM template that defines the cluster. We will start from this basic template: https://github.com/Azure/azure-quickstart-templates/tree/master/service-fabric-secure-cluster-5-node-1-nodetype (it is also available from the Azure SDK in Visual Studio).

- Azure Load Balancer will receive traffic on the public IP addresses

- Internal Load Balancer will receive traffic on the private VNET

Important: Service Fabric Resource Provider (SFRP) integration

There is a slight issue with this configuration: in SF runtime 5.4, SFRP requires access to the SF management endpoints on ports 19000 and 19080 (and it can only use public addresses to reach them).

The current VMSS implementation allows neither referencing a single port on two load balancers, nor configuring multiple IP configurations per NIC, nor configuring multiple NICs per node. This makes exposing port 19080 on both load balancers virtually impossible. Even if it were possible, it would make the configuration much more complex and would require a Network Security Group.

Fortunately, this is no longer an issue in 5.5. Starting with this version, SF only requires an outbound connection to SFRP at https://<region>.servicefabric.azure.com/runtime/clusters/, which the ALB provides to all the nodes.
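
To make the 5.5 behavior concrete, here is a small JavaScript sketch (JavaScript to stay close to the js-highlighted ARM fragments in this post) that composes the regional SFRP endpoint the nodes call out to. The helper name is hypothetical and `westeurope` is only an example region.

```javascript
// Hypothetical helper: builds the regional SFRP runtime endpoint that
// SF 5.5+ nodes reach over an outbound HTTPS connection.
function sfrpRuntimeEndpoint(region) {
  // Azure region names used in host names are lowercase and alphanumeric,
  // e.g. "westeurope", not the display name "West Europe".
  if (!/^[a-z0-9]+$/.test(region)) {
    throw new Error(`Unexpected region name: ${region}`);
  }
  return `https://${region}.servicefabric.azure.com/runtime/clusters/`;
}

console.log(sfrpRuntimeEndpoint("westeurope"));
// https://westeurope.servicefabric.azure.com/runtime/clusters/
```

Because this traffic is outbound, nothing on ports 19000 or 19080 has to stay reachable from the public side.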

ALB and ILB step-by-step

Below is a short step-by-step guide. Many of these points also apply to configuring ALB and ILB for Virtual Machine Scale Sets without Service Fabric.

Basic cluster configuration

- Create a project using the template service-fabric-secure-cluster-5-node-1-nodetype from the quickstart gallery.

- Get it running (this requires the standard steps with Key Vault, etc.). It is a good idea to deploy it first just to make sure the cluster is up and running; the ILB can be added later by redeploying a modified template.

Configuring ILB and ALB

Now you need to create a secondary subnet in which your ILB will expose its frontend IP address. In azuredeploy.json:

Step 1. After the subnet0Ref variable, insert these:

[code lang="js"]
"subnet1Name": "ServiceSubnet",
"subnet1Prefix": "10.0.1.0/24",
"subnet1Ref": "[concat(variables('vnetID'),'/subnets/',variables('subnet1Name'))]",
"ilbIPAddress": "10.0.1.10",
[/code]

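The static ILB address has to be assignable within ServiceSubnet. A minimal JavaScript sketch of that check follows; it assumes Azure's rule of reserving the first four addresses and the last address of every subnet, and the helper names are hypothetical.

```javascript
// Converts dotted IPv4 to an integer; multiplication avoids the signed
// 32-bit overflow that bit shifts would cause.
function ipToInt(ip) {
  const parts = ip.split(".").map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`Invalid IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => acc * 256 + p, 0);
}

// Returns the network start and the number of addresses in a CIDR block.
function parseCidr(cidr) {
  const [base, bits] = cidr.split("/");
  const size = 2 ** (32 - Number(bits));
  const network = Math.floor(ipToInt(base) / size) * size;
  return { network, size };
}

// True if ip lies inside cidr and is not one of the addresses Azure
// reserves (offsets 0-3 and the last address of the subnet).
function isAssignableInSubnet(ip, cidr) {
  const { network, size } = parseCidr(cidr);
  const offset = ipToInt(ip) - network;
  return offset >= 4 && offset <= size - 2;
}

console.log(isAssignableInSubnet("10.0.1.10", "10.0.1.0/24")); // true
console.log(isAssignableInSubnet("10.0.1.3", "10.0.1.0/24"));  // false (reserved)
console.log(isAssignableInSubnet("10.0.0.10", "10.0.1.0/24")); // false (other subnet)
```
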
Step 2. Find where the virtual network is defined and add an additional subnet definition. You can deploy your template afterwards.

[code lang="js" highlight="9,10,11"]
"subnets": [
  {
    "name": "[variables('subnet0Name')]",
    "properties": {
      "addressPrefix": "[variables('subnet0Prefix')]"
    }
  },
  {
    "name": "[variables('subnet1Name')]",
    "properties": {
      "addressPrefix": "[variables('subnet1Prefix')]"
    }
  }]
[/code]
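
Before redeploying, it is worth checking that the new subnet lies inside the virtual network's address space and does not overlap subnet0. The JavaScript sketch below does that; it assumes the quickstart defaults of 10.0.0.0/16 for the VNET and 10.0.0.0/24 for subnet0Prefix, so substitute your own values. `checkSubnets` is a hypothetical helper.

```javascript
function ipToInt(ip) {
  return ip.split(".").map(Number).reduce((acc, p) => acc * 256 + p, 0);
}

// First and last address (as integers) covered by a CIDR block.
function cidrRange(cidr) {
  const [base, bits] = cidr.split("/");
  const size = 2 ** (32 - Number(bits));
  const start = Math.floor(ipToInt(base) / size) * size;
  return { start, end: start + size - 1 };
}

// Returns a list of problems; an empty list means the layout looks valid.
function checkSubnets(vnetCidr, subnetCidrs) {
  const vnet = cidrRange(vnetCidr);
  const ranges = subnetCidrs.map((c) => ({ cidr: c, ...cidrRange(c) }));
  const errors = [];
  for (const r of ranges) {
    if (r.start < vnet.start || r.end > vnet.end) {
      errors.push(`${r.cidr} is outside ${vnetCidr}`);
    }
  }
  // Pairwise overlap test: two ranges overlap if each starts before the other ends.
  for (let i = 0; i < ranges.length; i++) {
    for (let j = i + 1; j < ranges.length; j++) {
      const a = ranges[i];
      const b = ranges[j];
      if (a.start <= b.end && b.start <= a.end) {
        errors.push(`${a.cidr} overlaps ${b.cidr}`);
      }
    }
  }
  return errors;
}

console.log(checkSubnets("10.0.0.0/16", ["10.0.0.0/24", "10.0.1.0/24"])); // []
```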

Step 3. Now let's define variables for the ILB. After the lbNatPoolID0 variable, insert new variables:

[code lang="js"]
"ilbID0": "[resourceId('Microsoft.Network/loadBalancers',concat('ILB','-', parameters('clusterName'),'-',variables('vmNodeType0Name')))]",
"ilbIPConfig0": "[concat(variables('ilbID0'),'/frontendIPConfigurations/LoadBalancerIPConfig')]",
"ilbPoolID0": "[concat(variables('ilbID0'),'/backendAddressPools/LoadBalancerBEAddressPool')]",
[/code]

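These variables are plain string concatenations on top of `resourceId()`. The JavaScript sketch below mimics how they expand at deployment time, which shows why the names `LoadBalancerIPConfig` and `LoadBalancerBEAddressPool` must match the names used inside the ILB resource. The subscription ID, resource group, cluster name and node type name are hypothetical placeholders.

```javascript
// Same shape as ARM's resourceId('Microsoft.Network/loadBalancers', lbName)
// when the load balancer lives in the deployment's own resource group.
function loadBalancerId(subscriptionId, resourceGroup, lbName) {
  return `/subscriptions/${subscriptionId}/resourceGroups/${resourceGroup}` +
    `/providers/Microsoft.Network/loadBalancers/${lbName}`;
}

// Mirrors the ilbID0, ilbIPConfig0 and ilbPoolID0 variables.
function ilbVariables(subscriptionId, resourceGroup, clusterName, vmNodeType0Name) {
  // concat('ILB','-', clusterName,'-', vmNodeType0Name)
  const ilbID0 = loadBalancerId(subscriptionId, resourceGroup, `ILB-${clusterName}-${vmNodeType0Name}`);
  return {
    ilbID0,
    ilbIPConfig0: `${ilbID0}/frontendIPConfigurations/LoadBalancerIPConfig`,
    ilbPoolID0: `${ilbID0}/backendAddressPools/LoadBalancerBEAddressPool`,
  };
}

// Hypothetical placeholder values.
const vars = ilbVariables("00000000-0000-0000-0000-000000000000", "sf-rg", "mycluster", "nt1vm");
console.log(vars.ilbPoolID0);
```
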
Step 4. Now you can create the ILB. Find the resource that creates the ALB (it has "name": "[concat('LB','-', parameters('clusterName'),'-',variables('vmNodeType0Name'))]") and insert the ILB resource right after that entire section:

[code lang="js"]
{
  "apiVersion": "[variables('lbApiVersion')]",
  "type": "Microsoft.Network/loadBalancers",
  "name": "[concat('ILB','-', parameters('clusterName'),'-',variables('vmNodeType0Name'))]",
  "location": "[variables('computeLocation')]",
  "properties": {
    "frontendIPConfigurations": [
      {
        "name": "LoadBalancerIPConfig",
        "properties": {
          "privateIPAllocationMethod": "Static",
          "subnet": {
            "id": "[variables('subnet1Ref')]"
          },
          "privateIPAddress": "[variables('ilbIPAddress')]"
        }
      }],
    "backendAddressPools": [
      {
        "name": "LoadBalancerBEAddressPool",
        "properties": {}
      }],
    "loadBalancingRules": [],
    "probes": []
  },
  "tags": {
    "resourceType": "Service Fabric",
    "clusterName": "[parameters('clusterName')]"
  }
},
[/code]
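
What makes this load balancer internal is its frontend: a static private IP in a subnet, with no public IP address attached. A hypothetical lint for that property, sketched in JavaScript against a trimmed, already-expanded copy of the ILB resource (the subnet ID is a placeholder):

```javascript
// True when every frontend IP configuration sits in a subnet and none
// references a public IP address, i.e. the load balancer is internal.
function isInternalLoadBalancer(lb) {
  const frontends = (lb.properties && lb.properties.frontendIPConfigurations) || [];
  return frontends.length > 0 && frontends.every((fe) => {
    const p = fe.properties || {};
    return Boolean(p.subnet && p.subnet.id) && !p.publicIPAddress;
  });
}

const ilb = {
  type: "Microsoft.Network/loadBalancers",
  properties: {
    frontendIPConfigurations: [{
      name: "LoadBalancerIPConfig",
      properties: {
        privateIPAllocationMethod: "Static",
        subnet: { id: "placeholder-vnet-id/subnets/ServiceSubnet" },
        privateIPAddress: "10.0.1.10",
      },
    }],
  },
};

console.log(isInternalLoadBalancer(ilb)); // true
```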

Now add a reference to the backend address pool of the newly configured ILB to the VMSS NIC IP configuration:

[code lang="js" highlight="5-7"]
"loadBalancerBackendAddressPools": [
  {
    "id": "[variables('lbPoolID0')]"
  },
  {
    "id": "[variables('ilbPoolID0')]"
  }
],
[/code]
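
If you script template changes instead of editing them by hand, the same edit can be made idempotent so that a second run does not add the pool twice. A minimal JavaScript sketch; `addBackendPool` and the stand-in IDs are hypothetical.

```javascript
// Adds poolId to the NIC IP configuration's backend pools unless it is
// already present, so repeated runs leave the template unchanged.
function addBackendPool(nicIpConfig, poolId) {
  const props = nicIpConfig.properties;
  const pools = props.loadBalancerBackendAddressPools || [];
  if (!pools.some((p) => p.id === poolId)) {
    pools.push({ id: poolId });
  }
  props.loadBalancerBackendAddressPools = pools;
  return nicIpConfig;
}

const ipConfig = {
  name: "NIC-0",
  properties: { loadBalancerBackendAddressPools: [{ id: "lbPoolID0" }] },
};
addBackendPool(ipConfig, "ilbPoolID0");
addBackendPool(ipConfig, "ilbPoolID0"); // second call is a no-op
console.log(ipConfig.properties.loadBalancerBackendAddressPools.length); // 2
```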

You can deploy this now: the ILB will be up and running alongside the ALB, but it has no rules yet.

At this point, you can reconfigure the ALB and the ILB. For example, you can move the load-balancing rules and probes for ports 19000 and 19080 from the ALB config to the ILB config:

Step 5. Move the loadBalancingRules and change the backend pool and frontend IP configuration references to the ILB ones:

[code lang="js" highlight="2-39"]
"loadBalancingRules": [
  {
    "name": "LBRule",
    "properties": {
      "backendAddressPool": {
        "id": "[variables('ilbPoolID0')]"
      },
      "backendPort": "[variables('nt0fabricTcpGatewayPort')]",
      "enableFloatingIP": "false",
      "frontendIPConfiguration": {
        "id": "[variables('ilbIPConfig0')]"
      },
      "frontendPort": "[variables('nt0fabricTcpGatewayPort')]",
      "idleTimeoutInMinutes": "5",
      "probe": {
        "id": "[variables('lbProbeID0')]"
      },
      "protocol": "tcp"
    }
  },
  {
    "name": "LBHttpRule",
    "properties": {
      "backendAddressPool": {
        "id": "[variables('ilbPoolID0')]"
      },
      "backendPort": "[variables('nt0fabricHttpGatewayPort')]",
      "enableFloatingIP": "false",
      "frontendIPConfiguration": {
        "id": "[variables('ilbIPConfig0')]"
      },
      "frontendPort": "[variables('nt0fabricHttpGatewayPort')]",
      "idleTimeoutInMinutes": "5",
      "probe": {
        "id": "[variables('lbHttpProbeID0')]"
      },
      "protocol": "tcp"
    }
  }
],
[/code]
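
The two rules differ only in name, port and probe. A JavaScript sketch of a builder that keeps them consistent is below; it assumes the quickstart defaults of 19000 and 19080 for `nt0fabricTcpGatewayPort` and `nt0fabricHttpGatewayPort`, uses placeholder strings in place of the expanded variable IDs, and emits real booleans and numbers where the template uses the strings "false" and "5". `ilbRule` is a hypothetical helper.

```javascript
// Builds one ILB load-balancing rule; frontend and backend ports are equal,
// as in the template above.
function ilbRule(name, port, probeId, ids) {
  return {
    name,
    properties: {
      backendAddressPool: { id: ids.poolId },
      backendPort: port,
      enableFloatingIP: false,
      frontendIPConfiguration: { id: ids.frontendId },
      frontendPort: port,
      idleTimeoutInMinutes: 5,
      probe: { id: probeId },
      protocol: "tcp",
    },
  };
}

// Placeholders standing in for the expanded ilbPoolID0 / ilbIPConfig0 values.
const ids = { poolId: "ilbPoolID0", frontendId: "ilbIPConfig0" };
const rules = [
  ilbRule("LBRule", 19000, "lbProbeID0", ids),
  ilbRule("LBHttpRule", 19080, "lbHttpProbeID0", ids),
];
console.log(JSON.stringify(rules.map((r) => [r.name, r.properties.frontendPort])));
// [["LBRule",19000],["LBHttpRule",19080]]
```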

And move the probes:

[code lang="js" highlight="2-19"]
"probes": [
  {
    "name": "FabricGatewayProbe",
    "properties": {
      "intervalInSeconds": 5,
      "numberOfProbes": 2,
      "port": "[variables('nt0fabricTcpGatewayPort')]",
      "protocol": "tcp"
    }
  },
  {
    "name": "FabricHttpGatewayProbe",
    "properties": {
      "intervalInSeconds": 5,
      "numberOfProbes": 2,
      "port": "[variables('nt0fabricHttpGatewayPort')]",
      "protocol": "tcp"
    }
  }
],
[/code]
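
With these settings, a node whose gateway port stops answering is taken out of rotation after roughly `intervalInSeconds * numberOfProbes` seconds, assuming the load balancer marks it down after `numberOfProbes` consecutive failures. A tiny JavaScript sketch of that arithmetic; the helper name is hypothetical.

```javascript
// Rough time before a failing node stops receiving traffic, assuming it is
// marked down after numberOfProbes consecutive failed probes.
function approxDetectionSeconds(probe) {
  const { intervalInSeconds, numberOfProbes } = probe.properties;
  return intervalInSeconds * numberOfProbes;
}

const probes = [
  { name: "FabricGatewayProbe", properties: { intervalInSeconds: 5, numberOfProbes: 2, port: 19000, protocol: "tcp" } },
  { name: "FabricHttpGatewayProbe", properties: { intervalInSeconds: 5, numberOfProbes: 2, port: 19080, protocol: "tcp" } },
];
for (const p of probes) {
  console.log(`${p.name}: ~${approxDetectionSeconds(p)} s`); // ~10 s each
}
```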

You also need to update the probe variables to make them reference the ILB:

[code lang="js"]
"lbProbeID0": "[concat(variables('ilbID0'),'/probes/FabricGatewayProbe')]",
"lbHttpProbeID0": "[concat(variables('ilbID0'),'/probes/FabricHttpGatewayProbe')]",
[/code]

At this point, you can deploy your template; the Service Fabric management endpoints are now available only at your ILB IP address, 10.0.1.10.
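
A common mistake at this step is forgetting to update `lbProbeID0` and `lbHttpProbeID0`, which leaves the ILB rules pointing at probes on the ALB; a rule has to reference a probe, backend pool and frontend that belong to its own load balancer. A hypothetical JavaScript lint for this, run against expanded resource IDs (all IDs below are placeholders):

```javascript
// Lists rule references that do not belong to the load balancer lbId.
function findForeignReferences(lbId, rules) {
  // Azure resource IDs compare case-insensitively.
  const prefix = `${lbId.toLowerCase()}/`;
  const problems = [];
  for (const rule of rules) {
    const p = rule.properties;
    const refs = {
      probe: p.probe,
      backendAddressPool: p.backendAddressPool,
      frontendIPConfiguration: p.frontendIPConfiguration,
    };
    for (const [field, ref] of Object.entries(refs)) {
      if (ref && !ref.id.toLowerCase().startsWith(prefix)) {
        problems.push(`${rule.name}.${field} -> ${ref.id}`);
      }
    }
  }
  return problems;
}

const base = "/subscriptions/sub/resourceGroups/rg/providers/Microsoft.Network/loadBalancers";
const ilbId = `${base}/ILB-c-nt1vm`;
const albId = `${base}/LB-c-nt1vm`;
const rules = [{
  name: "LBRule",
  properties: {
    backendAddressPool: { id: `${ilbId}/backendAddressPools/LoadBalancerBEAddressPool` },
    frontendIPConfiguration: { id: `${ilbId}/frontendIPConfigurations/LoadBalancerIPConfig` },
    // Stale reference: lbProbeID0 was not repointed and still expands to the ALB.
    probe: { id: `${albId}/probes/FabricGatewayProbe` },
  },
}];
console.log(findForeignReferences(ilbId, rules)); // reports LBRule.probe
```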

Step 6. It is also a good idea to get rid of the rule that allows Remote Desktop access to your cluster nodes on the public IP (you can still reach the nodes from your internal network at addresses such as 10.0.0.4, 10.0.0.5, etc.).

i) You need to delete it from the ALB configuration:

[code lang="js" highlight="2-13"]
"inboundNatPools": [
  {
    "name": "LoadBalancerBEAddressNatPool",
    "properties": {
      "backendPort": "3389",
      "frontendIPConfiguration": {
        "id": "[variables('lbIPConfig0')]"
      },
      "frontendPortRangeEnd": "4500",
      "frontendPortRangeStart": "3389",
      "protocol": "tcp"
    }
  }
]
[/code]

ii) And also from the NIC IP configuration:

[code lang="js" highlight="2-4"]
"loadBalancerInboundNatPools": [
  {
    "id": "[variables('lbNatPoolID0')]"
  }
],
[/code]

NOTE: If you have already deployed the template, you need to apply step 6ii first, redeploy, and only then apply step 6i. Otherwise you will get the error LoadBalancerInboundNatPoolInUseByVirtualMachineScaleSet.
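
The required ordering can be expressed as two separate template edits with a redeployment between them. The JavaScript sketch below models that on trimmed, hypothetical stand-ins for the ALB and the VMSS NIC IP configuration; its guard only inspects the template objects, mirroring in spirit the error Azure reports against the deployed scale set.

```javascript
// Step 6ii: detach the NAT pool from every VMSS NIC IP configuration.
function detachNatPools(vmssIpConfigs) {
  for (const cfg of vmssIpConfigs) {
    cfg.properties.loadBalancerInboundNatPools = [];
  }
}

// Step 6i: empty the ALB's inboundNatPools, refusing while the scale set
// configuration still references a pool.
function removeNatPools(alb, vmssIpConfigs) {
  const stillUsed = vmssIpConfigs.some(
    (cfg) => (cfg.properties.loadBalancerInboundNatPools || []).length > 0
  );
  if (stillUsed) {
    throw new Error("NAT pool still referenced by the scale set; apply 6ii and redeploy first");
  }
  alb.properties.inboundNatPools = [];
}

const alb = { properties: { inboundNatPools: [{ name: "LoadBalancerBEAddressNatPool" }] } };
const ipConfigs = [{ properties: { loadBalancerInboundNatPools: [{ id: "lbNatPoolID0" }] } }];

detachNatPools(ipConfigs);      // edit, then redeploy
removeNatPools(alb, ipConfigs); // edit, then redeploy again
console.log(alb.properties.inboundNatPools.length); // 0
```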

Step 7. One last thing: the Service Fabric resource in the ARM template has an option called managementEndpoint, and the best idea is to set it to the fully qualified domain name of your ILB IP address. This option is related to the SFRP integration issue in 5.4 and earlier described above.
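
As a sketch, assuming you have registered your own DNS name that resolves to the ILB address 10.0.1.10 (the host name `sf-mgmt.contoso.internal` below is hypothetical) and keep the https://host:port shape with the HTTP gateway port 19080, the endpoint value could be composed like this in JavaScript:

```javascript
// Hypothetical helper: composes a managementEndpoint value; URL parsing
// rejects malformed host names early.
function managementEndpoint(fqdn, httpGatewayPort = 19080) {
  return new URL(`https://${fqdn}:${httpGatewayPort}`).origin;
}

console.log(managementEndpoint("sf-mgmt.contoso.internal"));
// https://sf-mgmt.contoso.internal:19080
```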

What's next

You can now freely configure all your services and decide which one is exposed on which load balancer.

Complete ARM template

You can see the complete modified ARM template here: https://gist.github.com/mkosieradzki/a892785483ec0f7a4c330f38c3d98be9.

More complex scenarios

Many more complex solutions are possible, for example using multiple node types. Here's one described by Brent Stineman: https://brentdacodemonkey.wordpress.com/2016/08/01/network-isolationsecurity-with-azure-service-fabric/.