Race Conditions in Microservices

Race conditions come in many forms. Two common scenarios are when technical resources do not load or become available in the desired sequence, and when multiple operations are performed out of the desired sequence. This post illustrates the second scenario, where requests are handled out of sequence. For a well-written post illustrating the loading of technical resources, see Preventing Race Conditions Between Containers in ‘Dockerized’ MEAN Applications.

This post uses Azure Functions and Azure Webjobs, but the problem applies to many implementations and highlights a design flaw more than a mistaken choice of technology.

The scenario involves logging when a person accesses some resource. For this we are going to log the access to an Azure storage table, along with the number of times the user has accessed the resource. To keep with the microservice scenario, imagine one team is responsible for building the service that accesses the resource while another team is responsible for logging the action. To minimize the impact of logging on the service, the teams decided to use a queue to log the action and then process the queue item asynchronously, as illustrated below:

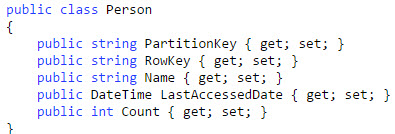

The team responsible for logging the action has decided to use an Azure Function to log the action to an Azure Table using the following structure:

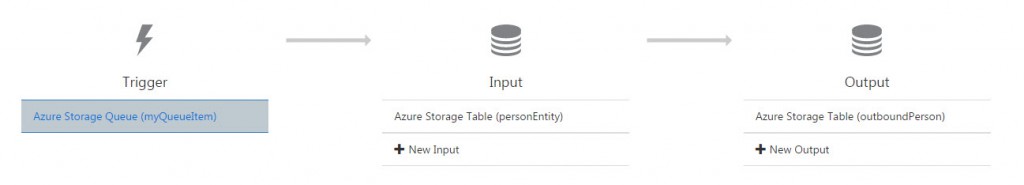

The idea is that when a user accesses the resource, a new item is added to the queue, which triggers the function. The function then either creates a new Person entity with the count set to 1, or retrieves an existing Person entity with the same name and increases the count by 1. The following illustrates the flow from the Integrate tab in an Azure Function:

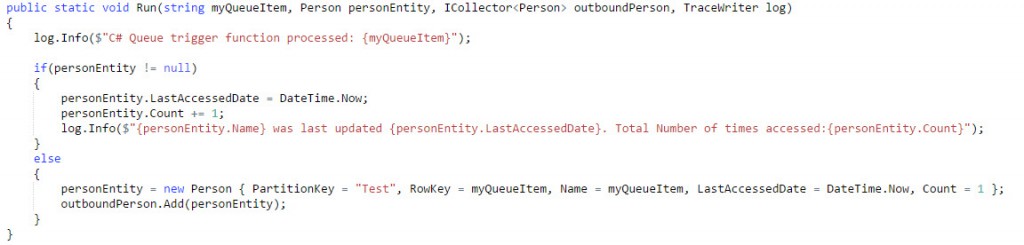

The function itself is shown below and when run in the portal performs the action in less than a second. Good start.

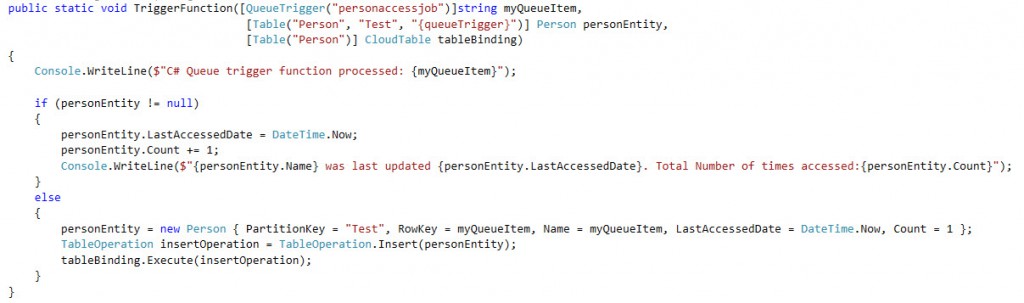
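For readers who cannot see the screenshot, the create-or-increment logic described above can be paraphrased roughly as follows. This is a minimal Python sketch, not the actual function code: the dictionary `table` and the name `log_access` are hypothetical stand-ins for the Azure table and the function body.

```python
# Hypothetical in-memory stand-in for the Azure table: name -> access count.
table = {}

def log_access(table, name):
    """Create the Person entry with count 1, or increment an existing one."""
    current = table.get(name)      # step 1: read the existing entry, if any
    if current is None:
        table[name] = 1            # step 2a: first access, insert with count 1
    else:
        table[name] = current + 1  # step 2b: write back a value based on the read
    return table[name]

log_access(table, "alice")
log_access(table, "alice")
```

The gap between step 1 and step 2 is harmless when calls run one at a time, as they do here. It matters once two runs for the same user overlap.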

To make this illustration a bit more interesting, the following is the same functionality implemented as an Azure Webjob (but using a different Queue):

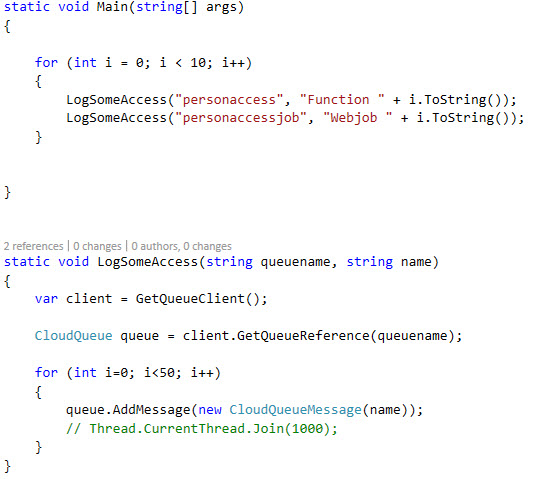

The race condition can be exploited by generating enough queue items that a run of the function or webjob starts before a previous one has completed. This was done by submitting 50 items to the queue for 20 users (10 function users and 10 webjob users):

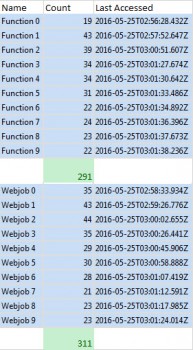

Note that the 1 second throttle (thread join) is commented out. After a run, a review of the table showed a disappointing result: a significant number of accesses failed to process.

Subsequent runs showed similar results, although Functions had fewer failures. Adding a one second delay when adding to the queue did reduce the number of failures but did not eliminate them completely. Of course, in a real world scenario, reducing the rate of access is not realistic.

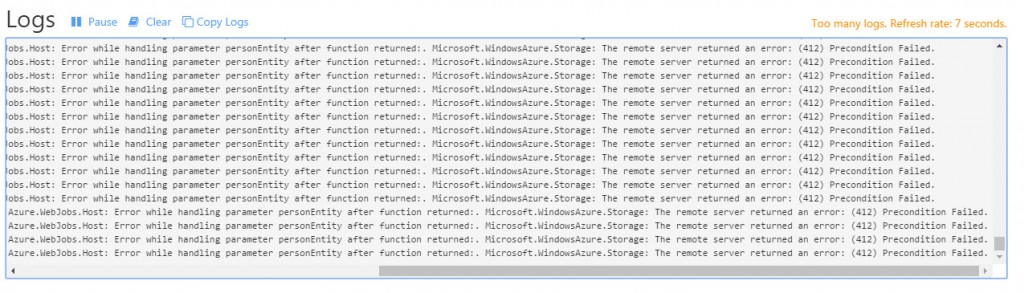

It is interesting to note that the Functions and Webjobs logs in the portal both recorded the same error, returned from the table storage client: 412 (Precondition Failed).

Azure Table Storage supports hundreds of transactions per second, so throughput is not the problem here. In this instance storage is telling us that the entity we are trying to update has already been updated by another transaction since we read it.
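To make the 412 concrete, here is a small simulation of the optimistic concurrency check behind it, where each entity carries a version tag (an ETag) and an update is rejected if the tag sent with it is stale. This is a sketch under stated assumptions: `FakeTable` and `PreconditionFailed` are hypothetical in-memory stand-ins, not the storage client API, and the two "runs" are interleaved by hand rather than with real concurrency.

```python
class PreconditionFailed(Exception):
    """Stands in for the HTTP 412 returned by the storage service."""

class FakeTable:
    """Hypothetical in-memory table; each row is stored as (count, etag)."""

    def __init__(self):
        self._rows = {}

    def put(self, name, count):
        """Unconditional write, used here only to seed data."""
        previous_etag = self._rows.get(name, (0, 0))[1]
        self._rows[name] = (count, previous_etag + 1)

    def get(self, name):
        return self._rows[name]

    def update(self, name, count, etag):
        """Write only if the caller's ETag still matches the stored one."""
        _, stored_etag = self._rows[name]
        if etag != stored_etag:
            raise PreconditionFailed(f"412 for {name}")
        self._rows[name] = (count, stored_etag + 1)

table = FakeTable()
table.put("alice", 1)

# Two queue items for the same user are picked up at nearly the same time.
count_a, etag_a = table.get("alice")  # run A reads count=1
count_b, etag_b = table.get("alice")  # run B reads count=1 before A writes

table.update("alice", count_a + 1, etag_a)      # run A succeeds, count is now 2
try:
    table.update("alice", count_b + 1, etag_b)  # run B still holds the old ETag
    outcome = "updated"
except PreconditionFailed:
    outcome = "412"
```

Run B's update is rejected, so the stored count stays at 2 even though three accesses occurred. The check prevents B from silently overwriting A's write, but unless something retries B, that access is never counted.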

So what steps can be taken to reduce potential race conditions?

The obvious one is to change the design so that it maintains a collection of records rather than a count, which makes the action always an insert. Table storage is cheap, and there could be some benefit to a more granular approach, such as detecting a pattern in the access (e.g., at the end of the month, once a week on Tuesday, etc.), but then some mechanism would be required to aggregate the collection.
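A rough sketch of the insert-only design might look like the following. The list `access_log`, the record fields, and the function names are all hypothetical, and the timestamps are made-up sample values. The point is only that writing never depends on a prior read, and the count is derived when needed.

```python
import uuid
from collections import Counter

access_log = []  # hypothetical stand-in for an insert-only table

def log_access(log, name, timestamp):
    """Always append a new record; there is nothing to read or update first."""
    log.append({"row_key": str(uuid.uuid4()), "name": name, "timestamp": timestamp})

def access_counts(log):
    """Aggregate on demand instead of maintaining a running count."""
    return Counter(record["name"] for record in log)

# Sample values for illustration only.
log_access(access_log, "alice", "2017-01-03T09:00:00Z")
log_access(access_log, "bob", "2017-01-03T09:00:01Z")
log_access(access_log, "alice", "2017-01-10T09:00:00Z")

counts = access_counts(access_log)
```

Because every record has its own unique key, two overlapping runs for the same user no longer compete for one entity. The cost is the aggregation step, which here is a single pass over the records.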

And, what if the schema for the table is fixed?

One idea would be to nicely ask Microsoft to perform all the actions of the function as a transaction and only remove the queue item when the transaction completes successfully. Another would be to ask for support for Service Bus queues with sessions. Maybe we will see these features become available to Functions and Webjobs in the future.

Another idea would be to add a form of retry around the insert or update to the table. This is interesting in that it would change both implementations to perform the insert or update to table storage from within the method rather than through an out parameter.
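The retry idea can be sketched as a loop that re-reads the entity and tries again whenever the write is rejected. As before, this is an illustration under assumptions rather than the storage client API: `FakeTable`, `ConflictError`, `log_access_with_retry`, and the `max_attempts` value are hypothetical, and the threads stand in for overlapping function or webjob runs.

```python
import threading

class ConflictError(Exception):
    """Stands in for the storage errors: 409 on a duplicate insert, 412 on a stale ETag."""

class FakeTable:
    """Hypothetical in-memory table; each row is stored as (count, etag)."""

    def __init__(self):
        self._lock = threading.Lock()  # makes each single call atomic, like one storage request
        self._rows = {}

    def get(self, name):
        with self._lock:
            return self._rows.get(name)

    def insert(self, name, count):
        with self._lock:
            if name in self._rows:
                raise ConflictError(name)
            self._rows[name] = (count, 1)

    def update(self, name, count, etag):
        with self._lock:
            if self._rows[name][1] != etag:
                raise ConflictError(name)
            self._rows[name] = (count, etag + 1)

def log_access_with_retry(table, name, max_attempts=200):
    """Read, then insert or update; on a conflict, re-read and try again."""
    for _ in range(max_attempts):
        row = table.get(name)
        try:
            if row is None:
                table.insert(name, 1)
            else:
                count, etag = row
                table.update(name, count + 1, etag)
            return
        except ConflictError:
            continue  # another run wrote first, so our read is stale
    raise RuntimeError(f"gave up logging access for {name}")

table = FakeTable()

def worker():
    for _ in range(25):
        log_access_with_retry(table, "alice")

# Four overlapping workers, 25 accesses each, all for the same user.
threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

final_count = table.get("alice")[0]
```

Every conflict means some other run succeeded in the meantime, so the loop always makes progress overall and all 100 accesses are counted. A real implementation would probably add a short backoff between attempts and decide what to do with the queue item when the attempts run out.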

It is details like the above that make programming more interesting and more difficult.