Organize your pipeline and recurring jobs easily with Data Lake Analytics (part 1)

Tagging and exploring pipeline and recurring jobs with metadata

In a previous blog post, we introduced how managing/monitoring pipeline and recurring jobs is made easier with new concepts we added to Data Lake Analytics. Let's take a deeper look at how to leverage this new capability. This is part 1 of a 2-part series.

In this blog, we will show you how to tag and explore your pipeline and recurring jobs:

- Tag jobs with the new pipeline and recurring job metadata using Azure PowerShell

- Explore pipeline and recurring jobs using Azure Portal and Azure PowerShell

In part 2, we will show you how to leverage pipeline and recurring job information to reduce failed jobs and identify performance problems:

- Use this pipeline and recurring job information to reduce failed AU Hours using Azure Portal and Azure PowerShell

- Identify performance differences using Azure Portal and Azure PowerShell

We'll use Contoso, a fictitious local retail startup that sells clothes online, to illustrate these capabilities. To learn more about what their customer base does before making a purchase, Contoso runs a series of U-SQL jobs each week to inspect usage patterns on their website and mobile app. They also run a series of U-SQL jobs to track how often customers encounter errors. These U-SQL jobs are perfect candidates for illustrating the power of managing and monitoring pipeline and recurring jobs in Azure Data Lake because they run on a regular cadence and work together to provide that insight.

1) How to tag jobs with the new pipeline and recurring job metadata

Contoso automates their own job submission using Azure PowerShell. To ensure that pipeline information is given with each job submission, they use two different PowerShell scripts depending on whether they are dealing with a pipeline job or a recurring job. Here are examples of the different Contoso scripts that you may make your own.

How to tag pipeline jobs through Azure PowerShell

Tagging pipelines is easy, and the same method applies to both single-run and recurring pipelines. To make sure pipeline information is given with each job submission, Contoso simply adds a set of new properties after the -ScriptPath (for additional detail see documentation):

$adlaAccount = "contosopipelineaccount"

$currentDate = Get-Date -Hour 0 -Minute 0 -Second 0 -Millisecond 0

$lastWeekStartDate = $currentDate.AddDays(-($currentDate.DayOfWeek.value__+7))

$lastWeekStart = $lastWeekStartDate.ToString("yyyy-MM-dd")

$pipelineId = "4a144082-3fcb-43ae-b027-e0c10adf8a2e"

$pipelineName = "Customer Usage Trend Analysis"

$pipelineUri = "https://internal.contoso.com/pipelineStatus/$pipelineId"

$runId = [GUID]::NewGuid().Guid

# Submit the Customer Usage Pattern job on last week's data

$job = Submit-AdlJob -Account $adlaAccount `
    -Name "Customer Usage Pattern-$lastWeekStart" `
    -ScriptPath "./customerUsagePattern.usql" `
    -RecurrenceId "e68fab5c-546e-4d7a-baa7-794c407f443b" `
    -RecurrenceName "Customer Usage Pattern" `
    -PipelineId $pipelineId `
    -PipelineName $pipelineName `
    -PipelineUri $pipelineUri `
    -RunId $runId
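When one run of a pipeline contains several jobs, the pipeline properties and the run ID are expected to be the same for every job in that run, while the recurrence properties differ per job. The following is a minimal sketch of one way to keep them consistent using PowerShell splatting. The second job, its script path, and its placeholder recurrence ID are hypothetical, the date suffix on the job name is omitted for brevity, and the Submit-AdlJob call is commented out so the snippet runs without an Azure sign-in.

```powershell
# Properties shared by every job in one run of the pipeline.
$pipelineId = "4a144082-3fcb-43ae-b027-e0c10adf8a2e"
$pipelineTags = @{
    PipelineId   = $pipelineId
    PipelineName = "Customer Usage Trend Analysis"
    PipelineUri  = "https://internal.contoso.com/pipelineStatus/$pipelineId"
    RunId        = [guid]::NewGuid().Guid   # one new ID per run, reused by all jobs below
}

# Per-job settings. The second entry is hypothetical and only illustrates the pattern.
$jobs = @(
    @{ Name = "Customer Usage Pattern"; ScriptPath = "./customerUsagePattern.usql"
       RecurrenceId = "e68fab5c-546e-4d7a-baa7-794c407f443b" },
    @{ Name = "Customer Error Rate"; ScriptPath = "./customerErrorRate.usql"
       RecurrenceId = "00000000-0000-0000-0000-000000000001" }   # placeholder GUID
)

$submissions = foreach ($j in $jobs) {
    # Merge the per-job settings with the shared pipeline properties.
    $jobParams = @{
        Account        = "contosopipelineaccount"
        Name           = $j.Name
        ScriptPath     = $j.ScriptPath
        RecurrenceId   = $j.RecurrenceId
        RecurrenceName = $j.Name
    } + $pipelineTags
    # Submit-AdlJob @jobParams   # uncomment after signing in to Azure
    $jobParams
}
```

Keeping the shared values in a single hashtable means a change to the pipeline name or URI only has to be made in one place.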

How to tag recurring jobs through Azure PowerShell

In some cases, Contoso doesn't have a whole pipeline, but simply a job that runs regularly.

For a recurring job, only the recurrence properties (-RecurrenceId and -RecurrenceName) are specified after the -ScriptPath. When submitting multiple recurring jobs, this is replicated for each job to be tagged.

$adlaAccount = "contosopipelineaccount"

$currentDate = Get-Date -Hour 0 -Minute 0 -Second 0 -Millisecond 0

$lastWeekStartDate = $currentDate.AddDays(-($currentDate.DayOfWeek.value__+7))

$lastWeekStart = $lastWeekStartDate.ToString("yyyy-MM-dd")

# Submit the Task Analysis job on last week's data

$job = Submit-AdlJob -Account $adlaAccount `
    -Name "Task Analysis-$lastWeekStart" `
    -ScriptPath "./customerUsagePattern.usql" `
    -RecurrenceId "a92d6e70-5bed-4439-8cc9-c349147e4034" `
    -RecurrenceName "Task Analysis"
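Both scripts build the job name from the start of the previous week. The sketch below isolates that date arithmetic so it can be checked against a known date; the function name Get-LastWeekStart is hypothetical and not part of Azure PowerShell.

```powershell
function Get-LastWeekStart {
    param([datetime]$Date = (Get-Date))
    # DayOfWeek is 0 for Sunday through 6 for Saturday, so subtracting it
    # reaches this week's Sunday; seven more days reaches last week's Sunday.
    $midnight = $Date.Date
    $midnight.AddDays(-([int]$midnight.DayOfWeek + 7))
}

# Wednesday, 13 September 2017 maps to Sunday, 3 September 2017.
(Get-LastWeekStart -Date ([datetime]"2017-09-13")).ToString("yyyy-MM-dd")   # 2017-09-03
```

Because the offset is based on DayOfWeek, every day from Sunday through Saturday of the same week produces the same start date, so a job that is resubmitted later in the week still gets the same name suffix.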

2) How to explore pipeline and recurring jobs

Now that Contoso has set up their job submission system to tag jobs, they can see jobs grouped by pipeline and the recurrence patterns related to those jobs. They can visualize these pipelines and recurrence patterns either through the Portal or through PowerShell scripts.

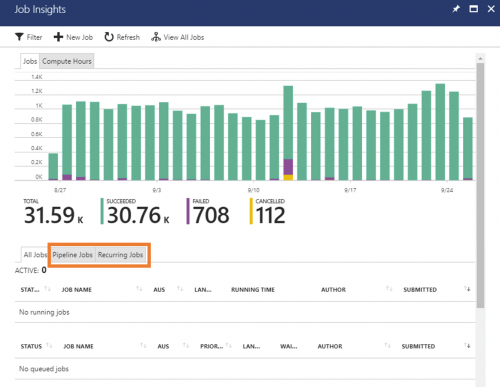

How to explore pipeline and recurring jobs using Azure Portal

- Open the Data Lake Analytics account

- On the Table of Contents on the left, click on "Job Insights" or click on the graph

- There are two new tabs in this view: "Pipeline Jobs" and "Recurring Jobs". Note: these two tabs only appear if you have submitted a pipeline or recurring job

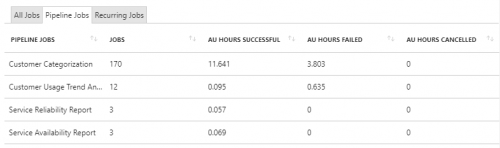

- The "Pipeline Jobs" tab shows a list of pipelines that contain U-SQL jobs, along with some aggregated statistics to quickly identify waste.

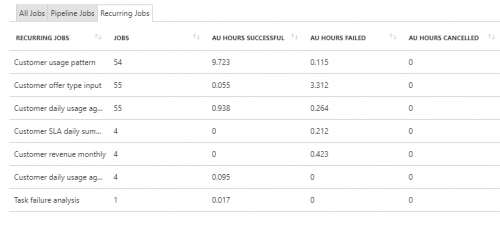

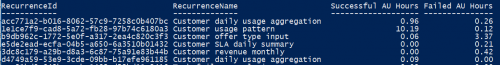

- Clicking on "Recurring Jobs" shows a list of jobs that are recurring, along with some aggregated statistics to quickly identify waste. We will go over this in more detail in the next section.

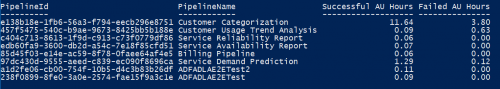

How to explore pipeline and recurring jobs using Azure PowerShell

- To list all pipelines that contain a U-SQL job

Get-AdlJobPipeline -Account $adlaAccount | Format-Table PipelineId, PipelineName, AuHoursSucceeded, AuHoursFailed

- To list all recurring jobs

Get-AdlJobRecurrence -Account $adlaAccount | Format-Table RecurrenceName, AuHoursSucceeded, AuHoursFailed
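The AuHoursSucceeded and AuHoursFailed columns can also be combined to rank pipelines by the share of AU hours that failed. The sketch below is one possible way to do that; it uses hypothetical sample rows (made-up names and numbers) in place of real cmdlet output so that it runs without an Azure sign-in, and the FailedShare column is an illustrative name.

```powershell
# Hypothetical sample rows shaped like the columns selected above.
# With a live account, use instead: $pipelines = Get-AdlJobPipeline -Account $adlaAccount
$pipelines = @(
    [pscustomobject]@{ PipelineName = "Customer Usage Trend Analysis"; AuHoursSucceeded = 90.0; AuHoursFailed = 10.0 }
    [pscustomobject]@{ PipelineName = "Customer Error Tracking"; AuHoursSucceeded = 20.0; AuHoursFailed = 20.0 }
)

# Add a calculated column with the failed fraction of all AU hours, then sort by it.
$ranked = $pipelines |
    Select-Object PipelineName, AuHoursFailed,
        @{ Name = "FailedShare"; Expression = {
            $total = $_.AuHoursSucceeded + $_.AuHoursFailed
            if ($total -gt 0) { [math]::Round($_.AuHoursFailed / $total, 2) } else { 0 }
        } } |
    Sort-Object FailedShare -Descending

$ranked | Format-Table PipelineName, AuHoursFailed, FailedShare
```

The same calculated column should work with the output of Get-AdlJobRecurrence by swapping PipelineName for RecurrenceName.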

That concludes the basics of tagging a job and browsing tagged jobs. Look out for part 2, where we will show you how to leverage pipeline and recurring job information to reduce failed jobs and identify performance problems.