Scalable LOB Networking Architecture in Azure

Mesh and Hub-and-Spoke Architectural Considerations using Virtual Network Peering in Azure

Many organizations solve their need for network connectivity between business units by creating a mesh network architecture among their virtual networks. All nodes in the network are interconnected, so network traffic is fast and can be easily redirected as needed. This topology is common for line-of-business (LOB) deployments in the cloud.

At Azure, we are seeing a growing number of enterprises use Azure Virtual Network Peering (VNet Peering) to provide connected, secure, and isolated workspaces for their business units. Virtual networks (the peers) are directly linked to each other using private IP addresses. The result is a low-latency, high-bandwidth connection over the Microsoft backbone, without the use of virtual private networks or gateways.

VNet Peering gives Azure customers a way to provide managed access to Azure for multiple teams or to merge teams from different companies altogether. But as enterprises expand their networks, they may encounter subscription limits, such as the default limit for maximum number of peering links. Most of these limits can be increased through a support request.

The disadvantage of a mesh topology is that it requires many connections, making it costlier to operate and manage than other topologies. The number of peering links required can quickly reach the Azure limits, as Table 1 shows. Because every virtual network must peer with every other one, the number of links grows quadratically rather than linearly: a full mesh of n virtual networks needs n(n-1)/2 peering links.

Table 1. Peering links required in a mesh topology as the number of virtual networks grows.

| Number of virtual networks | 10 | 20 | 50 | 100 |
| --- | --- | --- | --- | --- |
| Peering links required | 45 | 190 | 1,225 | 4,950 |
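The figures in Table 1 follow from counting one peering link per pair of virtual networks. The short Python sketch below reproduces them; the function name `mesh_peering_links` is chosen here for illustration and is not part of any Azure tooling.

```python
def mesh_peering_links(vnet_count: int) -> int:
    """Return the links needed so every virtual network peers with every other.

    Each pair of networks needs one link, so the count is n * (n - 1) / 2.
    """
    if vnet_count < 0:
        raise ValueError("vnet_count must be non-negative")
    return vnet_count * (vnet_count - 1) // 2


# Reproduce the columns of Table 1.
for vnets in (10, 20, 50, 100):
    print(vnets, mesh_peering_links(vnets))
# prints:
# 10 45
# 20 190
# 50 1225
# 100 4950
```

Doubling the number of virtual networks roughly quadruples the number of links, which is why a mesh runs into peering limits much sooner than the network count alone suggests.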

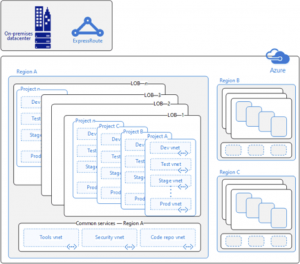

One Azure enterprise customer, typical of many we work with, supports multiple LOBs on Azure. Their IT group manages the process, provisioning Azure subscriptions for each business unit and enabling teams to work on their projects in relative isolation as well as keep track of costs. Each project commonly includes several environments such as development, production, and staging. This customer’s security policy was to isolate the environments within their existing Azure Active Directory tenant by providing each with its own virtual network as Figure 1 shows.

Each virtual network was composed of a front-end subnet, a middle-tier subnet, and a back-end subnet. Working with subnets gave the teams fine-grained control over their isolation boundaries and helped meet compliance requirements.

Figure 1: Typical LOB enterprise network configuration with ExpressRoute connection to on-premises datacenter.


Very few projects run entirely in isolation. As Figure 1 shows, business units need access to:

- Other lines of business.

- A datacenter on premises, often through a shared ExpressRoute connection.

- A common services virtual network used to host services shared by the organization.

For example, in one Azure region, an enterprise might have dozens of LOBs, each with multiple virtual networks supporting development, testing, staging, and production environments. Yet they need to share source code repositories, developer tools, and security resources.

Using virtual network peering, these networks can be connected using either a mesh topology, ensuring all peers have access to all other peers, or a chaining topology to aggregate resources in hubs that can be shared by the spokes in the network.

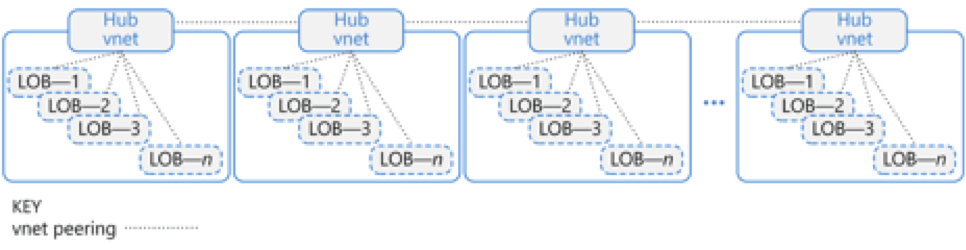

In a network chaining architecture, also known as hub and spoke, the topology connects groups of virtual networks to a hub virtual network, which then connects to other hub virtual networks like links in a chain, as Figure 2 shows. This alternative architecture lets you connect more virtual networks than a traditional mesh can within the current virtual network peering limits.

Figure 2: Peering connects one virtual network to another. It is not transitive: for example, LOB1 on the first hub cannot connect to LOB1 on the second hub.


In this model, it’s easy to add and remove spokes without affecting the rest of the network, giving business units great flexibility within their environments. This topology supports centralized monitoring and management. In addition, hubs aggregate connections so you’re less likely to encounter the peering link limit. Azure also provides many ways to control network traffic using network security groups that specify rules to allow or deny traffic and user-defined routes that control the routing of packets.
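To see how much hubs reduce the link count, the sketch below compares a chained hub-and-spoke layout with a full mesh of the same size. It is a simplified model under stated assumptions: each spoke peers only with its own hub, hubs are peered in a single chain, and the layout of two hubs with 49 spokes each is a hypothetical example, not a customer configuration. The function names are illustrative.

```python
from typing import Sequence


def mesh_links(vnet_count: int) -> int:
    """Links for a full mesh: one per pair of virtual networks."""
    return vnet_count * (vnet_count - 1) // 2


def total_vnets(spokes_per_hub: Sequence[int]) -> int:
    """All virtual networks in the layout: every spoke plus every hub."""
    return sum(spokes_per_hub) + len(spokes_per_hub)


def chained_hub_links(spokes_per_hub: Sequence[int]) -> int:
    """Links for chained hubs: one per spoke, plus one between adjacent hubs."""
    hub_to_hub = max(len(spokes_per_hub) - 1, 0)
    return sum(spokes_per_hub) + hub_to_hub


# Hypothetical layout: two hubs, each serving 49 spoke networks.
layout = [49, 49]
vnets = total_vnets(layout)
print(vnets, chained_hub_links(layout), mesh_links(vnets))
# prints: 100 99 4950
```

The saving comes with a change in connectivity: because peering is not transitive, spokes in this model are linked only to their hub, so spoke-to-spoke traffic has to be routed through the hubs rather than flowing over a direct link.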

Virtual network chaining supports a layered approach to isolation, which some large organizations require. For example, one multinational Azure customer wanted to provide greater security for their large-scale engineering projects, so they deployed one virtual network per project environment. A chaining model enables them to host each development, test, staging, and production environment in its own virtual network.

This topology does introduce a few tradeoffs. The hubs can become a potential single point of failure: if one hub goes down for any reason, it breaks the chain. In addition, when communication between virtual networks travels through two or more hubs, the custom route tables and network virtual appliances (NVAs) can cause higher latency. The cost of these resources can add up, too, and the combination of traffic rules and peering links can make these networks challenging to deploy and manage.
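The sketch below models the chain as a graph to make two of these points concrete: two spokes on different hubs have no direct peering, and the path between them crosses every hub in between. The network names (`LOB1-A`, `Hub-A`, and so on) are hypothetical, and the model only traces peering links; it does not configure or query Azure. In practice each intermediate hub would need routing, such as a user-defined route and an NVA, to forward the traffic.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Set, Tuple


def build_adjacency(peerings: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Turn a list of peering links into a symmetric neighbor map."""
    adjacency: Dict[str, Set[str]] = {}
    for left, right in peerings:
        adjacency.setdefault(left, set()).add(right)
        adjacency.setdefault(right, set()).add(left)
    return adjacency


def directly_peered(adjacency: Dict[str, Set[str]], a: str, b: str) -> bool:
    """Peering is not transitive, so only a direct link counts."""
    return b in adjacency.get(a, set())


def shortest_peering_path(
    adjacency: Dict[str, Set[str]], source: str, target: str
) -> Optional[List[str]]:
    """Breadth-first search for the fewest-hop path along peering links."""
    if source not in adjacency or target not in adjacency:
        return None
    previous: Dict[str, Optional[str]] = {source: None}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        if current == target:
            path: List[str] = []
            node: Optional[str] = current
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(adjacency[current]):
            if neighbor not in previous:
                previous[neighbor] = current
                queue.append(neighbor)
    return None


# Hypothetical chain: two hubs, with LOB spokes attached to each.
peerings = [
    ("LOB1-A", "Hub-A"),
    ("LOB2-A", "Hub-A"),
    ("Hub-A", "Hub-B"),
    ("LOB1-B", "Hub-B"),
]
adjacency = build_adjacency(peerings)

print(directly_peered(adjacency, "LOB1-A", "LOB1-B"))  # prints: False
path = shortest_peering_path(adjacency, "LOB1-A", "LOB1-B")
print(path)        # prints: ['LOB1-A', 'Hub-A', 'Hub-B', 'LOB1-B']
print(path[1:-1])  # hubs that must forward traffic: ['Hub-A', 'Hub-B']
```

Removing either hub from `peerings` makes `shortest_peering_path` return `None` for this pair, which mirrors the single-point-of-failure concern.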

For implementation details, see Implement a hub-spoke network topology in Azure.

In our recently published whitepaper (https://aka.ms/HubAndSpoke) on mesh and hub-and-spoke networks in Azure, we outline some of the tradeoffs between the two topologies. We also provide more insight into controlling access to the various VNets through policy and role-based access.

We hope you find this article and the whitepaper useful.